Hadoop之深入HDFS原理<二>

Posted 月疯

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hadoop之深入HDFS原理<二>相关的知识,希望对你有一定的参考价值。

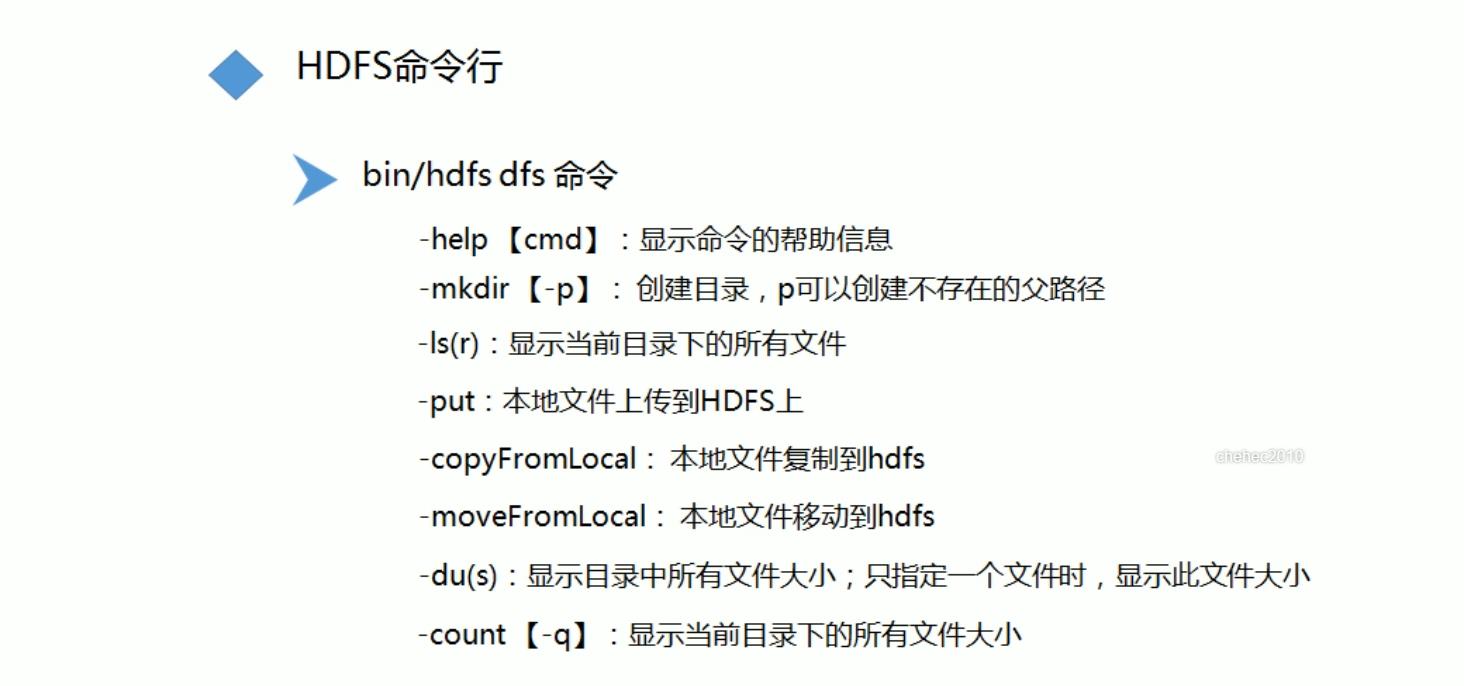

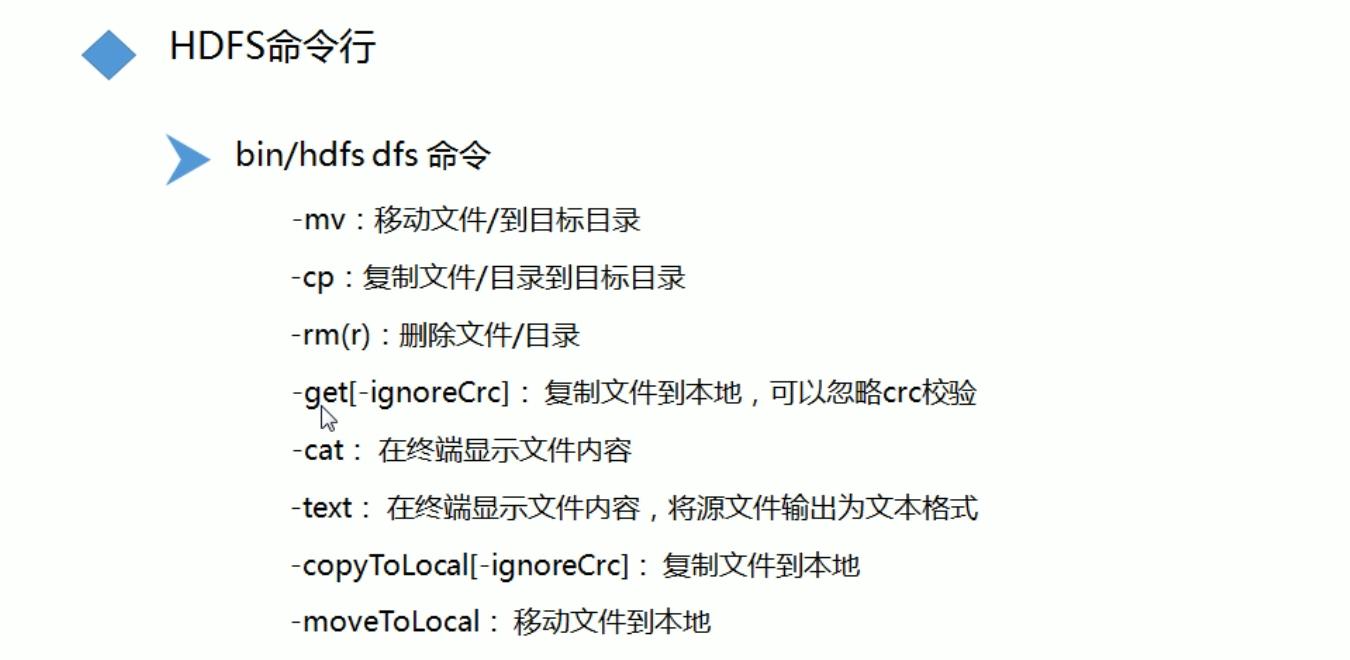

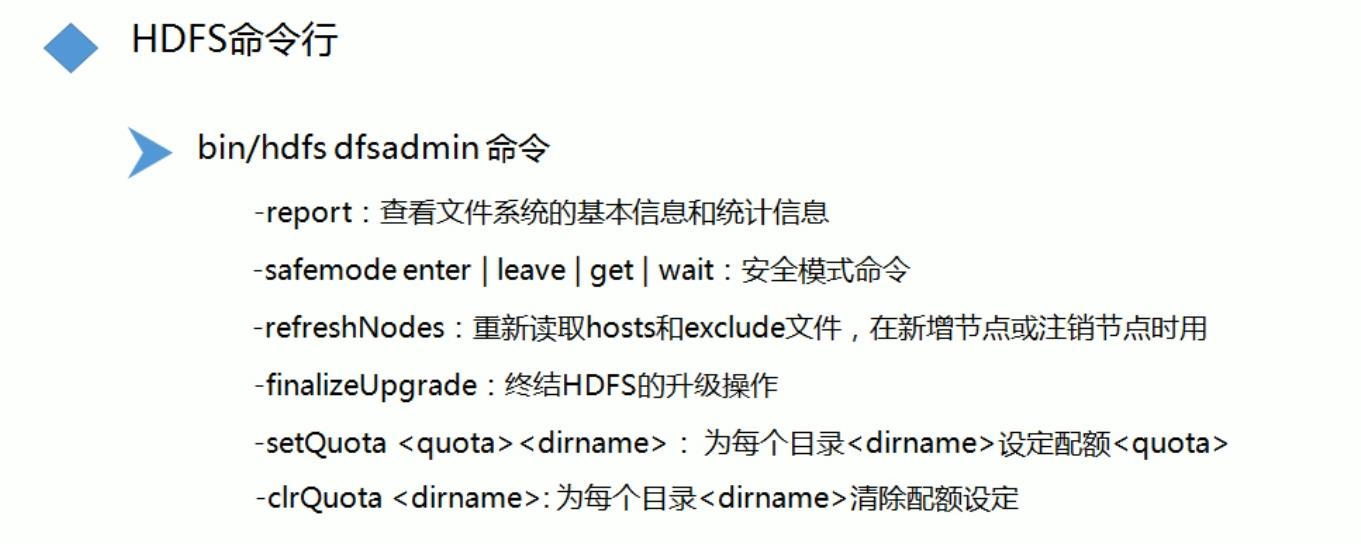

HDFS命令行操作:

hadoop运维工程司需要掌握的命令:

HDFS JAVA API

API文档:

http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.9.0/api/index.html

访问入口类:FIleSystem

创建目录:mkdirs

上传文件:create/put或copyFromLocalFile

列出目录内容:listStatus

显示文件或目录的元数据:getFileStatus

下载文件:open/get或copyToLocalFile

删除文件或目录:delete

注意:Hadoop默认支持权限控制,可将其关闭

hadfs-site.xml文件;dfs.permissions.enabled设置成false

文件系统的端口是50070

练习HDFSJava操作:maven依赖

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>groupId</groupId>

<artifactId>hadoop</artifactId>

<version>1.0-SNAPSHOT</version>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.9.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>2.9.0</version>

<scope>provided</scope>

</dependency>

</dependencies>

</project>代码:

package com.hadoop;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import org.apache.hadoop.io.IOUtils;

import java.io.FileInputStream;

import java.io.FileOutputStream;

import java.io.IOException;

public class Main

public static void main(String[] args)

// write your code here

//生成文件系统

public static FileSystem getHadoopFileSystem()

FileSystem fs =null;

//根据配置文件创建HDFS对象

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://hadoop-senior.test.com:9000");

//设置副本策略

conf.set("dfs.replication","1");

try

//根据配置文件创建HDFS对象

fs = FileSystem.get(conf);

catch (Exception e)

e.printStackTrace();

return fs;

//创建目录

public static boolean createPath()

boolean result = false;

//获取文件系统

FileSystem fs =getHadoopFileSystem();

//调用系统文件系统mkdirs创建目录

Path path=new Path("/test");

try

result = fs.mkdirs(path);

catch (IOException e)

e.printStackTrace();

finally

try

//将文件关闭

fs.close();

catch (IOException e)

e.printStackTrace();

return result;

//创建文件\\写入数据

public static boolean createFile(String pathName)

String content="nihao";

boolean result = false;

FileSystem fs =getHadoopFileSystem();

try

FSDataOutputStream out=fs.create(new Path(pathName));

out.writeUTF(content);

result = true;

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

return result;

//本地上传文件到hdfs

public static void putFileHDFS()

FileSystem fs=getHadoopFileSystem();

//如果上传的路径不存在会创建;如果该路径文件已存在,就会覆盖

Path localPath=new Path("/usr/local/software/datas/test.txt");

Path hdfsPath=new Path("/test/test.txt");

try

//复制本地文件到HDFS

fs.copyFromLocalFile(localPath,hdfsPath);

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

//上传文件(通过输入和输出流)

public static void putFileHDFS2(String outFile,String inFile)

//获取文件系统

FileSystem fileSystem=getHadoopFileSystem();

//创建输出文件

try

//通过上传文件,生成输出流

FSDataOutputStream out=fileSystem.create(new Path(outFile));

//通过本地文件生成输入流

FileInputStream in=new FileInputStream(inFile);

//通过IOUtils的copyBytes方法传递数据流

//buffSize:缓冲区大小

//是否进行关闭,设置true,传输完毕,自动关闭输出输入流

IOUtils.copyBytes(in,out,4096,true);

catch(Exception e)

e.printStackTrace();

//获取上传文件的基本信息

public static void list()

FileSystem fs=getHadoopFileSystem();

Path path=new Path("/test");

try

//遍历目录,使用FileSystem的listStatus

FileStatus[] fileStatuList = fs.listStatus(path);

//使用FileStatus对象查看file状态

for(FileStatus filestatus:fileStatuList)

String isDir =filestatus.isDirectory()? "目录":"文件";

String name = filestatus.getPath().toString();

System.out.print(isDir+" "+name);

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

//下载文件

public static void getFileFromHDFS()

FileSystem fs =getHadoopFileSystem();

Path HDFSPath=new Path("/test/test.txt");

Path localPath=new Path("/usr/local/software/datas/test.txt");

try

//false:不删除服务器文件

//HDFSPath:本地目录

//localPath:服务器目录

//true:由于本地文件系统window系统,没有安装hadoop环境,所以使用第四个参数指定用本地文件系统

fs.copyToLocalFile(false,HDFSPath,localPath,true);

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

//通过输入输出流下载文件

public static void getFileFromHDFS1(String inFile,String outFile)

FileSystem fs =getHadoopFileSystem();

try

//hdfs文件通过输入流读取到内存

FSDataInputStream in=fs.open(new Path(inFile));

//内存中的数据通过输出流输出到本地

FileOutputStream out=new FileOutputStream(outFile);

IOUtils.copyBytes(in,out,4096,true);

//也可以直接输出到控制台

// IOUtils.copyBytes(in,System.out,4096,true);

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

//删除文件或者目录

public static boolean delete()

boolean result =false;

FileSystem fs =getHadoopFileSystem();

Path path=new Path("/test/test.txt");

try

result=fs.delete(path,true);

catch (IOException e)

e.printStackTrace();

finally

try

fs.close();

catch (IOException e)

e.printStackTrace();

return result;

以上是关于Hadoop之深入HDFS原理<二>的主要内容,如果未能解决你的问题,请参考以下文章