[Cloud Native Series-18]: Containers - Swarm, Docker's Built-in Cluster Management Tool - A Detailed Walkthrough of Manually Building a Cluster Service

Posted by 文火冰糖的硅基工坊


Author's homepage (文火冰糖的硅基工坊): 文火冰糖(王文兵)的博客_文火冰糖的硅基工坊_CSDN博客

Article URL: https://blog.csdn.net/HiWangWenBing/article/details/122743643


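Preparation: Cluster Planning

Step 1: Set Up the Cloud Servers

1.1 Create the servers

(1) Servers

- Create 5 servers in a single batch (instance count = 5)

- All 5 servers are on the same default network

- 1 vCPU, 2 GB RAM

- Standard shared instance type

- Operating system: CentOS

(2) Network

- Assign a public IP address

- Default VPC

- Pay-by-traffic billing, 1 Mbps bandwidth

(3) Default security group

- Set the username and password used to log in to the servers remotely over the public network

- Open port 80, which is used later to test the nginx service.

1.2 Post-installation checks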

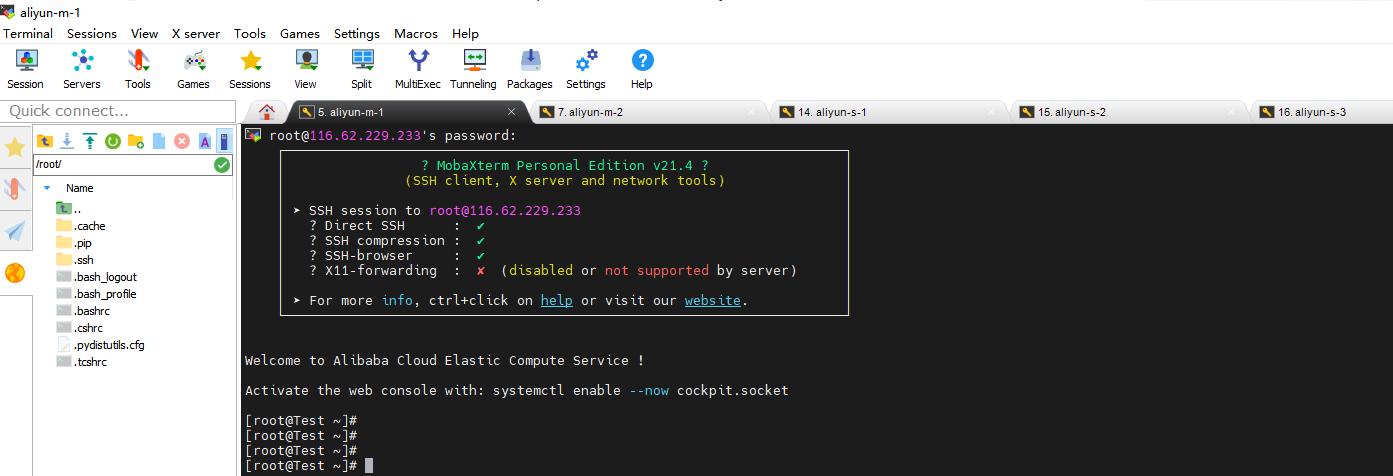

(1) Log in to each server through its public IP

(2) Assign a role to each server

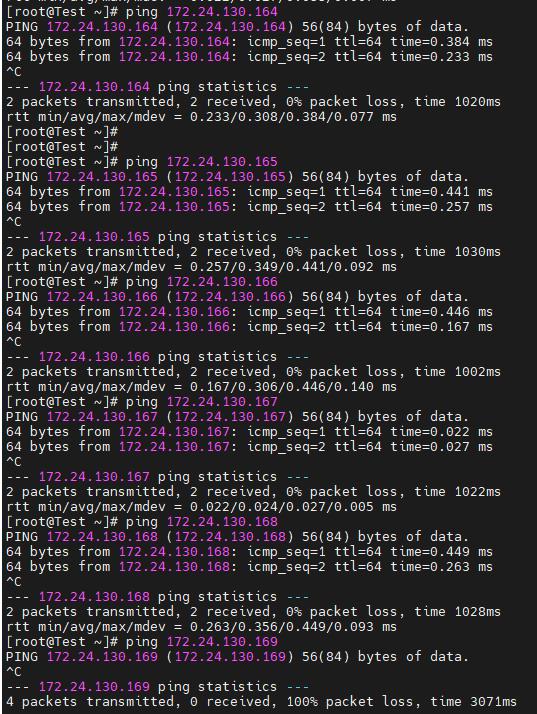

leader manager: 172.24.130.164

normal manager: 172.24.130.165

worker1: 172.24.130.166

worker2: 172.24.130.167

worker3: 172.24.130.168

(3) Install the ifconfig and ping tools

$ yum install -y yum-utils

$ yum install -y iputils

$ yum install -y net-tools.x86_64

(4) Confirm that the servers can ping each other over their private IPs
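The ping check in (4) can be scripted. The following is a minimal sketch, assuming the five private IPs from the role plan above. The names check_nodes and PING_CMD are hypothetical and introduced here only for illustration.

```shell
#!/usr/bin/env bash
# Private IPs taken from the role plan above.
NODES="172.24.130.164 172.24.130.165 172.24.130.166 172.24.130.167 172.24.130.168"

# check_nodes (hypothetical helper): send one ping to every node and report the result.
# PING_CMD is an assumed override hook for dry runs; it defaults to the real ping.
check_nodes() {
  failed=0
  for ip in $NODES; do
    # -c 1: one echo request; -W 2: wait at most 2 seconds for a reply (Linux iputils)
    if ${PING_CMD:-ping} -c 1 -W 2 "$ip" >/dev/null 2>&1; then
      echo "$ip reachable"
    else
      echo "$ip UNREACHABLE"
      failed=1
    fi
  done
  return "$failed"
}

# Usage on any of the five servers:
#   check_nodes
```

The function returns a non-zero status if any node fails to answer, so it can gate the later steps.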

Step 2: Set Up the Docker Environment (done manually on the cloud platform)

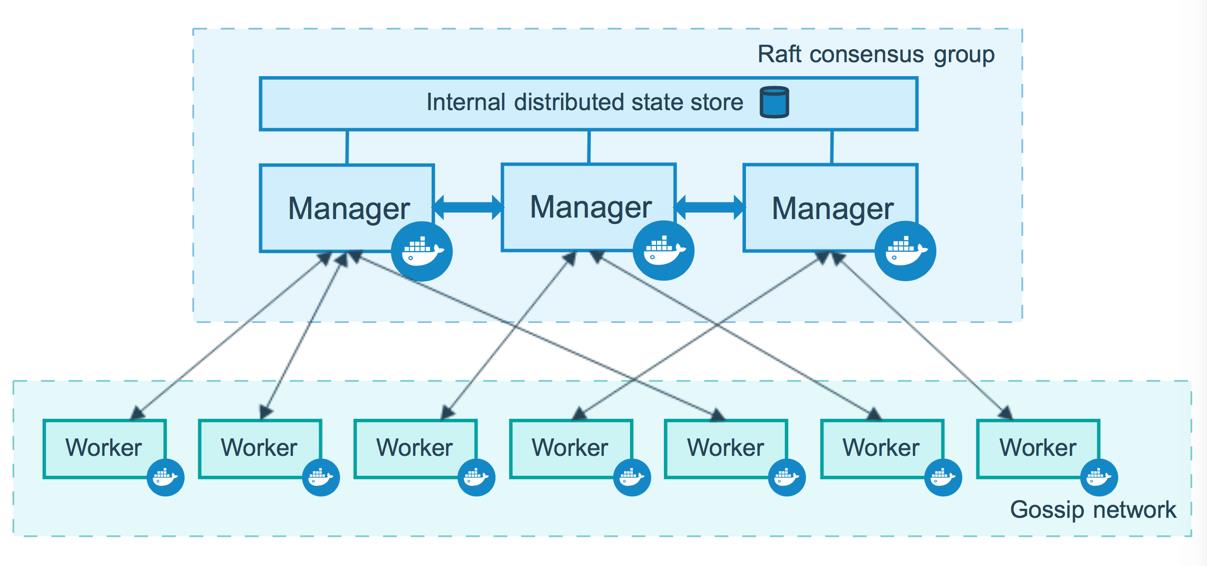

How nodes work | Docker Documentation

2.1 Install Docker on each virtual server

(1) SSH into each virtual server

(2) Install Docker with the one-line installer

# Add the Aliyun mirror repo

$ yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

$ curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

2.2 Start Docker on each virtual server

$ systemctl start docker

$ docker version

$ docker ps

$ docker images

[root@Test ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Docker Buildx (Docker Inc., v0.7.1-docker)

scan: Docker Scan (Docker Inc., v0.12.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.12

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 io.containerd.runtime.v1.linux runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7b11cfaabd73bb80907dd23182b9347b4245eb5d

runc version: v1.0.2-0-g52b36a2

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 4.18.0-348.2.1.el8_5.x86_64

Operating System: CentOS Linux 8

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 759.7MiB

Name: Test

ID: QOVF:RC73:VI4W:TAUR:3NIE:GSAW:6HMY:L2SM:LRWF:DYIZ:5BJT:SQTB

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false
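The "Swarm: inactive" line in the output above is the part that matters before Step 3. As a rough sketch, the same field can be read on its own with a format template. swarm_state, ready_for_init and the DOCKER override are hypothetical names used only in this example.

```shell
#!/usr/bin/env bash
# swarm_state (hypothetical helper): print this node's swarm state, e.g. "inactive" or "active".
# DOCKER is an assumed override hook for testing; it defaults to the real docker CLI.
swarm_state() {
  ${DOCKER:-docker} info --format '{{.Swarm.LocalNodeState}}'
}

# ready_for_init: succeeds only when the node has not joined any swarm yet.
ready_for_init() {
  [ "$(swarm_state)" = "inactive" ]
}

# Usage on each server before Step 3:
#   ready_for_init && echo "ok to init or join"
```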

Step 3: Set Up the Cluster Roles (swarm)

3.1 Set up the leader manager role (swarm init)

(1) Command

$ docker swarm init --advertise-addr 172.24.130.164 # the IP here is the private IP assigned when the machine was created

(2) Output

[root@Test ~]# docker swarm init --advertise-addr 172.24.130.164

Swarm initialized: current node (tkox6q8o48l7b2aofzygzvjow) is now a manager.

How to add a worker:

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-23znzigz1ycuuy1hmp77a4nxp2w8ffvri3zwelc8fx4aeo2xr6-bpda5otyc47k1iz064353adwj 172.24.130.164:2377

How to add a manager:

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

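The output above confirms that initialization succeeded. Copy the docker swarm join line from the output; it is needed later when adding worker nodes.

Within the cluster, the value passed to --token identifies the swarm and the role to join as. The full join command is made up of the token, followed by the leader manager's IP address and port number (2377).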
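To illustrate what the token itself contains: Docker's documentation describes a join token as a fixed SWMTKN prefix, a version number, a digest of the cluster's root CA certificate, and a randomly generated secret. The sketch below splits a token on that assumption. describe_token is a hypothetical helper name, not a Docker command.

```shell
#!/usr/bin/env bash
# describe_token (hypothetical helper): split a join token of the assumed form
#   SWMTKN-<version>-<root CA digest>-<secret>
# into its four fields using plain parameter expansion.
describe_token() {
  token=$1
  prefix=${token%%-*}      # text before the first "-"
  rest=${token#*-}         # drop the prefix
  version=${rest%%-*}
  rest=${rest#*-}          # drop the version
  digest=${rest%%-*}
  secret=${rest#*-}        # whatever remains is the secret
  echo "prefix=$prefix version=$version ca_digest=$digest secret=$secret"
}

# Usage:
#   describe_token "$(docker swarm join-token -q worker)"
```

Applied to the worker and manager tokens printed in this article, the ca_digest field is identical and only the secret differs, which is how one cluster can hand out separate tokens per role.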

(3) docker swarm usage

[root@Test ~]# docker swarm

Usage: docker swarm COMMAND

Manage Swarm

Commands:

ca Display and rotate the root CA

init Initialize a swarm

join Join a swarm as a node and/or manager

join-token Manage join tokens

leave Leave the swarm

unlock Unlock swarm

unlock-key Manage the unlock key

update Update the swarm

Run 'docker swarm COMMAND --help' for more information on a command.

3.2 Set up the regular manager role (swarm join as manager)

(1) On the leader manager, run the following command to get the command for joining the swarm as a manager

$ docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-23znzigz1ycuuy1hmp77a4nxp2w8ffvri3zwelc8fx4aeo2xr6-262vije87ut842ejp8cmfdwqn 172.24.130.164:2377

(2) Log in to manager-2 and run the command from the output above:

docker swarm join --token SWMTKN-1-23znzigz1ycuuy1hmp77a4nxp2w8ffvri3zwelc8fx4aeo2xr6-262vije87ut842ejp8cmfdwqn 172.24.130.164:2377

This node joined a swarm as a manager.

3.3 Set up the worker role (swarm join as worker)

(1) Run the following command on the leader manager node

$ docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-23znzigz1ycuuy1hmp77a4nxp2w8ffvri3zwelc8fx4aeo2xr6-bpda5otyc47k1iz064353adwj 172.24.130.164:2377

(2) Run the following command on each worker machine:

[root@Test ~]# docker swarm join --token SWMTKN-1-23znzigz1ycuuy1hmp77a4nxp2w8ffvri3zwelc8fx4aeo2xr6-bpda5otyc47k1iz064353adwj 172.24.130.164:2377

This node joined a swarm as a worker.
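Joining three workers by hand means repeating the same command three times. A minimal sketch of doing it from the leader manager follows. It assumes passwordless SSH as root from the leader to each worker, which this article does not set up. join_cmd and join_workers are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Addresses from the role plan; 2377 is the port shown in the join command above.
MANAGER_ADDR="172.24.130.164:2377"
WORKERS="172.24.130.166 172.24.130.167 172.24.130.168"

# join_cmd (hypothetical helper): build the join command for a given token.
join_cmd() {
  echo "docker swarm join --token $1 $MANAGER_ADDR"
}

# join_workers (hypothetical helper): run on the leader manager.
# "join-token -q worker" prints only the token, without the surrounding text.
# Assumes root SSH access from the leader to every worker.
join_workers() {
  token=$(docker swarm join-token -q worker) || return 1
  for ip in $WORKERS; do
    ssh "root@$ip" "$(join_cmd "$token")"
  done
}

# Usage on the leader manager:
#   join_workers
```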

3.4 View the nodes in the cluster

(1) The docker node command

[root@Test ~]# docker node

Usage: docker node COMMAND

Manage Swarm nodes

Commands:

demote Demote one or more nodes from manager in the swarm

inspect Display detailed information on one or more nodes

ls List nodes in the swarm

promote Promote one or more nodes to manager in the swarm

ps List tasks running on one or more nodes, defaults to current node

rm Remove one or more nodes from the swarm

update Update a node

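As the usage text shows, the rm command removes nodes from the swarm cluster, and the ls command lists all nodes currently in the cluster.

(2) List all nodes in the cluster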

[root@Test ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

notb2otq9cd6ie5tcs99ozoam Test Ready Active 20.10.12

s59g2ygqm8lbrngpxhyt3zzg3 Test Ready Active 20.10.12

stea3vvu3hj9x619yb64e5kjo Test Ready Active 20.10.12

tkox6q8o48l7b2aofzygzvjow Test Ready Active Leader 20.10.12

zclgojyadj75g4qtx5bmyj9kk * Test Ready Active Reachable 20.10.12

The output above shows that all 5 nodes have joined the cluster in their planned roles,

so services can now be deployed to the cluster nodes with docker service.
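The check that the node list matches the plan (2 managers, 3 workers) can be reduced to a one-line summary. This is a sketch that assumes docker node ls prints an empty MANAGER STATUS for workers, as in the table above. count_roles is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# count_roles (hypothetical helper): read one MANAGER STATUS value per line on stdin.
# Non-empty lines (Leader, Reachable, ...) count as managers, empty lines as workers.
count_roles() {
  awk '{ if ($0 == "") w++; else m++ }
       END { printf "managers=%d workers=%d\n", m, w }'
}

# Usage on a manager node:
#   docker node ls --format '{{.ManagerStatus}}' | count_roles
```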

Step 4: Set Up the Cluster Service (docker service), with all commands run on a manager node

4.1 The docker service command

[root@Test ~]# docker service

Usage: docker service COMMAND

Manage services

Commands:

create Create a new service

inspect Display detailed information on one or more services

logs Fetch the logs of a service or task

ls List services

ps List the tasks of one or more services

rm Remove one or more services

rollback Revert changes to a service's configuration

scale Scale one or multiple replicated services

update Update a service

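4.2 Create a new service

Create a service named my_nginx with two replicas. Swarm decides which nodes run them:

# List the existing services

$ docker service ls

# Remove an existing service (for example, one deployed during earlier testing)

$ docker service rm <service-name>

# Deploy your own service

$ docker service create --replicas 2 --name my_nginx -p 80:80 nginx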

[root@Test ~]# docker service create --replicas 2 --name my_nginx -p 80:80 nginx

d326pd2c5mmiosc9m5lwbdp2f

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

4.3 View the service

(1) List all services

[root@Test ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

d326pd2c5mmi my_nginx replicated 2/2 nginx:latest *:80->80/tcp

There is one service here, my_nginx, with two replicas, published on port 80.
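The REPLICAS column has the form running/desired, so a script can tell whether the service has converged by comparing the two halves. A minimal sketch, with converged as a hypothetical helper name:

```shell
#!/usr/bin/env bash
# converged (hypothetical helper): succeed when a "running/desired" string such as
# "2/2" has equal halves, i.e. every requested replica is running.
converged() {
  [ "${1%%/*}" = "${1##*/}" ]
}

# Usage on a manager node:
#   converged "$(docker service ls --filter name=my_nginx --format '{{.Replicas}}')" \
#     && echo "my_nginx is fully deployed"
```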

(2) On a manager node, view the details of a specific service

[root@Test ~]# docker service ps my_nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

k9n4h3u0fprx my_nginx.1 nginx:latest Test Running Running 6 minutes ago

7jainolzyb20 my_nginx.2 nginx:latest Test Running Running 6 minutes ago

(3) On each server, check which replicas are deployed on that server

On one of the servers:

[root@Test ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3b7a1a984b42 nginx:latest "/docker-entrypoint.…" 5 minutes ago Up 5 minutes 80/tcp my_nginx.7.lctwil7sohn4l0h62vbvgagju

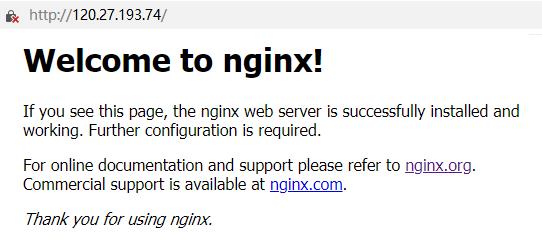

4.4 Verify the service

Access the public address of each of the 5 servers over HTTP:

http://116.62.229.233/

http://120.26.75.192/

http://120.27.251.144/

http://121.199.64.187/

http://120.27.193.74/

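Something interesting shows up here:

Although only two nginx replicas are deployed, the same nginx service can be reached through the address of every server in the cluster.

This is the advantage of a cluster service, provided by swarm's ingress routing mesh:

No matter how many replicas are deployed later, the service is reachable through the same IP addresses. Every node accepts requests on the published port, and swarm load-balances them across the running replicas.

In other words, the 5 public IP addresses are shared by the whole cluster, and so are all deployed services.

docker swarm implements all of this.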
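The five browser checks can also be done from a terminal. The sketch below requests the root page on each public IP listed above and prints the HTTP status code. probe, check_all and the PROBE override are hypothetical names introduced for this example.

```shell
#!/usr/bin/env bash
# Public IPs listed in section 4.4.
PUBLIC_IPS="116.62.229.233 120.26.75.192 120.27.251.144 121.199.64.187 120.27.193.74"

# probe (hypothetical helper): print only the HTTP status code for http://<ip>/
probe() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$1/"
}

# check_all (hypothetical helper): probe every node and print "<ip> -> <code>".
# PROBE is an assumed override hook so the loop can be exercised without network access.
check_all() {
  for ip in $PUBLIC_IPS; do
    code=$("${PROBE:-probe}" "$ip")
    echo "$ip -> $code"
  done
}

# Usage from any machine that can reach the public IPs:
#   check_all
```

If the routing mesh is working, every node should answer on port 80, including nodes that run no nginx replica themselves.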

4.5 Scaling the service up

The number of service replicas can be adjusted quickly and simply to follow actual user traffic.

[root@Test ~]# docker service scale my_nginx=8

my_nginx scaled to 8

overall progress: 8 out of 8 tasks

1/8: running [==================================================>]

2/8: running [==================================================>]

3/8: running [==================================================>]

4/8: running [==================================================>]

5/8: running [==================================================>]

6/8: running [==================================================>]

7/8: running [==================================================>]

8/8: running [==================================================>]

verify: Service converged

[root@Test ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

d326pd2c5mmi my_nginx replicated 8/8 nginx:latest *:80->80/tcp

[root@Test ~]# docker service ps my_nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

k9n4h3u0fprx my_nginx.1 nginx:latest Test Running Running 25 minutes ago

7jainolzyb20 my_nginx.2 nginx:latest Test Running Running 25 minutes ago

5ib5pvokzul3 my_nginx.3 nginx:latest Test Running Running 53 seconds ago

h0l58xuot3b1 my_nginx.4 nginx:latest Test Running Running 53 seconds ago

fj6nfnh4iwhc my_nginx.5 nginx:latest Test Running Running 52 seconds ago

m4qhmh2ng8ff my_nginx.6 nginx:latest Test Running Running 52 seconds ago

lctwil7sohn4 my_nginx.7 nginx:latest Test Running Running 53 seconds ago

9mk5v48d6m9x my_nginx.8 nginx:latest Test Running Running 53 seconds ago

Log in to each server to see how many of these 8 replicas are running on it:

[root@Test ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3b7a1a984b42 nginx:latest "/docker-entrypoint.…" 5 minutes ago Up 5 minutes 80/tcp my_nginx.7.lctwil7sohn4l0h62vbvgagju

174f62943bf2 nginx:latest "/docker-entrypoint.…" 29 minutes ago Up 29 minutes 80/tcp my_nginx.2.7jainolzyb2012xuzswo1nfpr

[root@Test ~]#

4.6 Scaling the service down

[root@Test ~]# docker service scale my_nginx=4

my_nginx scaled to 4

overall progress: 4 out of 4 tasks

1/4: running [==================================================>]

2/4: running [==================================================>]

3/4: running [==================================================>]

4/4: running [==================================================>]

verify: Service converged

[root@Test ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

d326pd2c5mmi my_nginx replicated 4/4 nginx:latest *:80->80/tcp

[root@Test ~]#

4.7 Rolling online upgrade of the service's image version: service update

$ docker service update --image XXX my_nginx
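How "rolling" the upgrade is can be controlled with the update flags of docker service update. The following is a sketch rather than the author's procedure: it updates one replica at a time and waits 10 seconds between replicas. rolling_update and the DOCKER override are hypothetical names, and the image tag in the usage line is only an example.

```shell
#!/usr/bin/env bash
# rolling_update (hypothetical helper): update <service> to <image>, one task at a time.
# DOCKER is an assumed override hook for dry runs; it defaults to the real docker CLI.
rolling_update() {
  service=$1
  image=$2
  # --update-parallelism 1: replace one task per step
  # --update-delay 10s:     pause 10 seconds between steps
  ${DOCKER:-docker} service update \
    --image "$image" \
    --update-parallelism 1 \
    --update-delay 10s \
    "$service"
}

# Usage on a manager node (nginx:1.21 is an example tag, not from the article):
#   rolling_update my_nginx nginx:1.21
# If the new version misbehaves, the rollback subcommand listed in 4.1 reverts it:
#   docker service rollback my_nginx
```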

4.8 Stop a node from serving requests (drain)

[root@Test ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

notb2otq9cd6ie5tcs99ozoam Test Ready Active 20.10.12

s59g2ygqm8lbrngpxhyt3zzg3 Test Ready Active 20.10.12

stea3vvu3hj9x619yb64e5kjo Test Ready Active 20.10.12

tkox6q8o48l7b2aofzygzvjow * Test Ready Active Leader 20.10.12

zclgojyadj75g4qtx5bmyj9kk Test Ready Active Reachable 20.10.12

[root@Test ~]# docker node update --availability drain notb2otq9cd6ie5tcs99ozoam

notb2otq9cd6ie5tcs99ozoam

[root@Test ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

notb2otq9cd6ie5tcs99ozoam Test Ready Drain 20.10.12

s59g2ygqm8lbrngpxhyt3zzg3 Test Ready Active 20.10.12

stea3vvu3hj9x619yb64e5kjo Test Ready Active 20.10.12

tkox6q8o48l7b2aofzygzvjow * Test Ready Active Leader 20.10.12

zclgojyadj75g4qtx5bmyj9kk Test Ready Active Reachable 20.10.12

[root@Test ~]#

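With one simple command, a node can be made to stop providing the service.

Note:

Stopping the service here does not make the server's IP address unreachable. It means swarm no longer schedules service tasks on that node.

All requests sent to that server are balanced across the other servers.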
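Draining is reversible: setting the availability back to active lets the scheduler use the node again. A small sketch that wraps both directions and rejects mistyped states follows. set_availability and the DOCKER override are hypothetical names.

```shell
#!/usr/bin/env bash
# set_availability (hypothetical helper): set a node's availability after validating it.
# The three accepted values are the ones docker node update supports.
# DOCKER is an assumed override hook for dry runs; it defaults to the real docker CLI.
set_availability() {
  node=$1
  state=$2
  case $state in
    active|pause|drain) ;;
    *) echo "unknown availability: $state" >&2; return 2 ;;
  esac
  ${DOCKER:-docker} node update --availability "$state" "$node"
}

# Usage on a manager node, with the node ID from docker node ls:
#   set_availability notb2otq9cd6ie5tcs99ozoam drain    # take the node out of scheduling
#   set_availability notb2otq9cd6ie5tcs99ozoam active   # put it back
```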
