pytorch loss

Posted by 东东就是我


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It introduces PyTorch loss functions, and we hope it is useful as a reference.

1. Introduction to loss functions

A loss function, also called an objective function, measures the discrepancy between the true values and the predicted values.

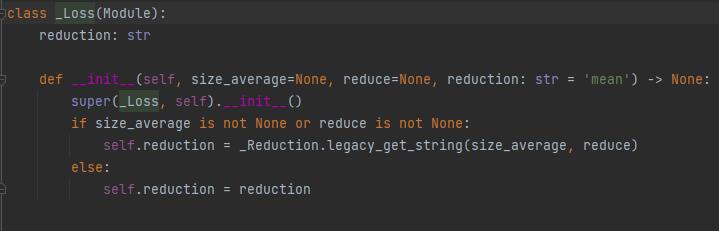

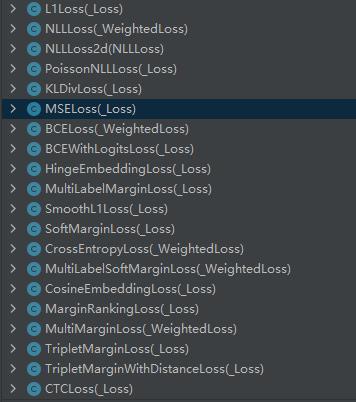

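In PyTorch, loss classes derive from the base class `_Loss`, which in turn inherits from `Module`.

Each loss therefore only needs to implement `forward`.

In every training step we call `loss.backward()`.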

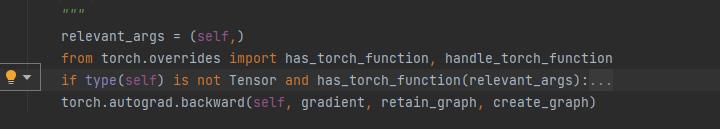

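At first it seemed odd that I could not find a `backward()` method in any of the loss classes. It turns out that what a loss module returns is a tensor, and `Tensor` has a `backward()` method, which calls `torch.autograd.backward`.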
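To illustrate this, here is a minimal sketch of a custom loss that defines only `forward` and relies on the returned tensor's `backward()`. It subclasses the public `nn.Module` rather than the private `_Loss` class (an assumption made to stay on public API), and the class name `MyL1Loss` is hypothetical.

```python
import torch
import torch.nn as nn

class MyL1Loss(nn.Module):
    """Hypothetical custom loss: only forward() is written; autograd supplies the backward pass."""

    def __init__(self, reduction: str = "mean"):
        super().__init__()
        self.reduction = reduction

    def forward(self, input: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        diff = (input - target).abs()
        return diff.mean() if self.reduction == "mean" else diff.sum()

pred = torch.randn(3, 5, requires_grad=True)
target = torch.randn(3, 5)

loss = MyL1Loss()(pred, target)
print(type(loss))       # <class 'torch.Tensor'>
loss.backward()         # Tensor.backward() -> torch.autograd.backward
print(pred.grad.shape)  # torch.Size([3, 5])
```

No gradient code is written by hand: the graph recorded during `forward` is enough for autograd to fill `pred.grad`.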

1.1 L1Loss

$$\ell(x, y) = L = \{l_1,\dots,l_N\}^\top, \quad l_n = \left| x_n - y_n \right|$$

Here N is the batch size. If `reduction` is not set, it defaults to `'mean'`:

$$\ell(x, y) = \begin{cases} \operatorname{mean}(L), & \text{if reduction} = \text{'mean'};\\ \operatorname{sum}(L), & \text{if reduction} = \text{'sum'}. \end{cases}$$

```python
import torch
import torch.nn as nn

def validate_loss(input, target):
    # Manual L1 loss with reduction='mean': mean of |x_n - y_n|
    return torch.mean(torch.abs(input - target))

loss = nn.L1Loss()
input = torch.randn(3, 5, requires_grad=True)
target = torch.randn(3, 5)

output = loss(input, target)
print("default loss:", output)

output = validate_loss(input, target)
print("validate loss:", output)
```

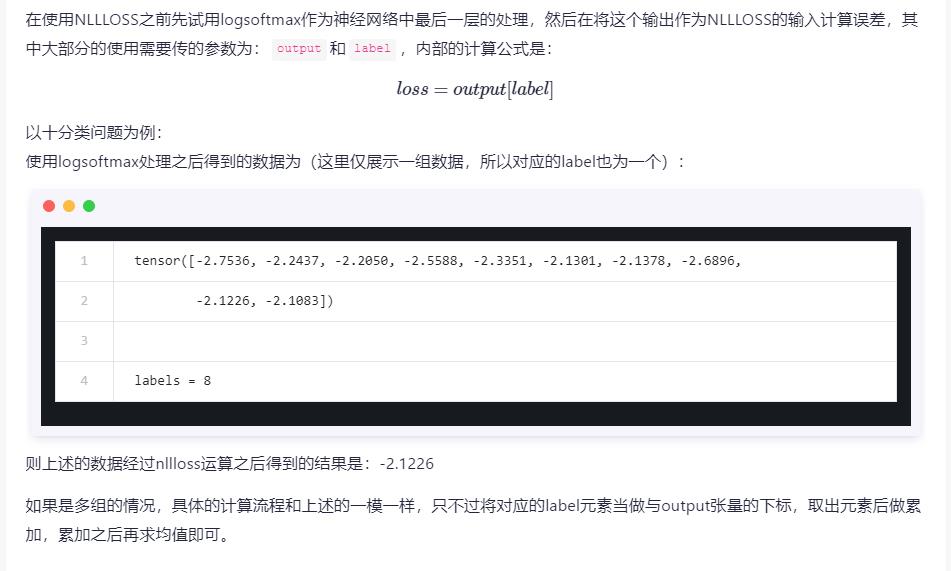
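The reduction cases above can be checked directly. With `reduction='none'` the module returns the unreduced L; note that `'mean'` averages over all elements (15 here), not only over the batch dimension N. A minimal sketch with random inputs:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(3, 5)
y = torch.randn(3, 5)

per_elem = nn.L1Loss(reduction="none")(x, y)  # unreduced L, same shape as x
total = nn.L1Loss(reduction="sum")(x, y)
avg = nn.L1Loss(reduction="mean")(x, y)

print(per_elem.shape)  # torch.Size([3, 5])
print(torch.allclose(total, per_elem.sum()), torch.allclose(avg, per_elem.mean()))  # True True
```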

1.2 NLLLoss (multi-class classification)

https://zhuanlan.zhihu.com/p/338318581

$$\ell(x, y) = L = \{l_1,\dots,l_N\}^\top, \quad l_n = - w_{y_n} x_{n,y_n}, \quad w_{c} = \text{weight}[c] \cdot \mathbb{1}\{c \not= \text{ignore\_index}\}$$

where x is the input (log-probabilities), y is the label, and w is the per-class weight.

$$\ell(x, y) = \begin{cases} \sum_{n=1}^N \frac{1}{\sum_{n=1}^N w_{y_n}} l_n, & \text{if reduction} = \text{'mean'};\\ \sum_{n=1}^N l_n, & \text{if reduction} = \text{'sum'}. \end{cases}$$

```python
import torch
import torch.nn as nn

def validate_loss(input, target):
    # Manual NLLLoss with reduction='mean' and no class weights:
    # average of -x[n, y_n] over the batch
    val = 0
    for li_x, li_y in zip(input, target):
        val += li_x[li_y]
    return -val / len(target)

loss = nn.NLLLoss()
m = nn.LogSoftmax(dim=1)
input = torch.randn(3, 5, requires_grad=True)
target = torch.tensor([1, 0, 4])

output = loss(m(input), target)
print("default loss:", output)

output = validate_loss(m(input), target)
print("validate loss:", output)
```


```python
import torch
import torch.nn as nn

# 2D loss example (used, for example, with image inputs)
def validate_loss(input, target):
    # Manual 2D NLLLoss with reduction='mean':
    # average of -x[n, y[n, i, j], i, j] over every sample n and pixel (i, j)
    val = 0
    for li_x, li_y in zip(input, target):
        dim0, dim1 = li_y.shape
        li_x = li_x.tolist()
        li_y = li_y.tolist()
        # iterate over every pixel
        for i in range(dim0):
            for j in range(dim1):
                element = li_y[i][j]  # class label at pixel (i, j)
                val += li_x[element][i][j]
    return -val / target.numel()  # N * 8 * 8 = 320 elements here

N, C = 5, 4
loss = nn.NLLLoss()
# input is of size N x C x height x width
data = torch.randn(N, 16, 10, 10)
conv = nn.Conv2d(16, C, (3, 3))  # 10x10 -> 8x8
m = nn.LogSoftmax(dim=1)
# each element in target has to have 0 <= value < C
target = torch.empty(N, 8, 8, dtype=torch.long).random_(0, C)
input = m(conv(data))

output = loss(input, target)
print("default loss:", output)

output = validate_loss(input, target)
print("validate loss:", output)
```

1.3 PoissonNLLLoss

Negative log-likelihood loss for targets that follow a Poisson distribution; the network output is used as the Poisson parameter λ.

$$\text{target} \sim \mathrm{Poisson}(\text{input})$$

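$$\text{loss}(\text{input}, \text{target}) = \text{input} - \text{target} \cdot \log(\text{input}) + \log(\text{target}!)$$

With the default `log_input=True`, `nn.PoissonNLLLoss` treats its input as log λ and computes exp(input) - target · input. The log(target!) term does not depend on the input and is only added (via Stirling's approximation) when `full=True`.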

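```python
import torch
import torch.nn as nn

loss = nn.PoissonNLLLoss()  # log_input=True by default: the input is log(lambda)
log_input = torch.randn(5, 2, requires_grad=True)
# Poisson targets are non-negative counts
target = torch.randint(0, 10, (5, 2)).float()
output = loss(log_input, target)
output.backward()
```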
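A minimal sketch that reproduces both input conventions by hand (default `full=False`, `reduction='mean'`). The `1e-8` below is the default `eps` that PyTorch adds inside the log when `log_input=False`; the random counts are illustrative only.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
log_input = torch.randn(5, 2)
target = torch.randint(0, 10, (5, 2)).float()

# Default log_input=True: loss_n = exp(x_n) - y_n * x_n, then mean
default = nn.PoissonNLLLoss()(log_input, target)
manual = (torch.exp(log_input) - target * log_input).mean()
print(torch.allclose(default, manual))  # True

# log_input=False: the input is lambda itself (must be positive)
rate = torch.exp(log_input)
direct = nn.PoissonNLLLoss(log_input=False)(rate, target)
manual_direct = (rate - target * torch.log(rate + 1e-8)).mean()
print(torch.allclose(direct, manual_direct))  # True
```

Passing log λ (the default) avoids taking the log of a network output that might be zero or negative.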

1.4 KLDivLoss

$$l(x,y) = L = \{ l_1,\dots,l_N \}, \quad l_n = y_n \cdot \left( \log y_n - x_n \right)$$

Here the input x is expected to contain log-probabilities and the target y probabilities (with the default `log_target=False`).

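$$\ell(x, y) = \begin{cases} \operatorname{mean}(L), & \text{if reduction} = \text{'mean'};\\ \operatorname{sum}(L), & \text{if reduction} = \text{'sum'}. \end{cases}$$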
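A minimal check of the pointwise formula, using random distributions for illustration. Besides `'sum'` it uses `reduction='batchmean'`, which divides the sum by the batch size N and so matches the mathematical per-sample KL divergence; `'mean'` instead averages over all elements.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.log_softmax(torch.randn(3, 5), dim=1)  # input: log-probabilities
y = torch.softmax(torch.randn(3, 5), dim=1)      # target: probabilities

L = y * (torch.log(y) - x)  # pointwise l_n

print(torch.allclose(nn.KLDivLoss(reduction="sum")(x, y), L.sum()))  # True
# 'batchmean': sum divided by the batch size N (here 3)
print(torch.allclose(nn.KLDivLoss(reduction="batchmean")(x, y), L.sum() / x.size(0)))  # True
```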
