研究开源gpt-2-simple项目,跑一个简单的模型,然后生成一段对话。用的是 Intel(R) Core(TM) i7-9700,8核8线程,训练最小的模型200次跑1个小时20分钟

Posted fly-iot

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了研究开源gpt-2-simple项目,跑一个简单的模型,然后生成一段对话。用的是 Intel(R) Core(TM) i7-9700,8核8线程,训练最小的模型200次跑1个小时20分钟相关的知识,希望对你有一定的参考价值。

目录

前言

本文的原文连接是:

https://blog.csdn.net/freewebsys/article/details/108971807

未经博主允许不得转载。

博主CSDN地址是:https://blog.csdn.net/freewebsys

博主掘金地址是:https://juejin.cn/user/585379920479288

博主知乎地址是:https://www.zhihu.com/people/freewebsystem

1,关于gpt2的几个例子学习

快速使用docker 镜像进行环境搭建。

相关的chatGpt项目有:

gpt2官方模型:

https://github.com/openai/gpt-2

6.1K 星星:

https://github.com/Morizeyao/GPT2-Chinese

2.4K 星星:

https://github.com/yangjianxin1/GPT2-chitchat

1.6K 星星:

https://github.com/imcaspar/gpt2-ml

先找个简单的进行研究:

3.2K 星星:

https://github.com/minimaxir/gpt-2-simple

2,使用docker配置环境

先弄官方的例子,使用tensorflow的2.12 的镜像,因显卡驱动的问题,只能用cpu进行运算:

git clone https://github.com/minimaxir/gpt-2-simple

cd gpt-2-simple

docker run --name gpt2simple -itd -v `pwd`:/data -p 8888:8888 tensorflow/tensorflow:latest

版本说明,这边用的就是最小的版本:能跑就行。

latest: minimal image with TensorFlow Serving binary installed and ready to serve!

:latest-gpu: minimal image with TensorFlow Serving binary installed and ready to serve on GPUs!

:latest-devel - include all source/dependencies/toolchain to develop, along with a compiled binary that works on CPUs

:latest-devel-gpu - include all source dependencies/toolchain (cuda9/cudnn7) to develop, along with a compiled binary that works on NVIDIA GPUs.

然后进入docker 镜像中执行命令:

当然也可以使用Dockerfile 但是网速慢,且容易出错:

docker exec -it gpt2simple bash

############### 以下是登陆后执行:

sed -i 's/archive.ubuntu.com/mirrors.aliyun.com/g' /etc/apt/sources.list

sed -i 's/security.ubuntu.com/mirrors.aliyun.com/g' /etc/apt/sources.list

mkdir /root/.pip/

# 增加 pip 的源

echo "[global]" > ~/.pip/pip.conf

echo "index-url = https://mirrors.aliyun.com/pypi/simple/" >> ~/.pip/pip.conf

echo "[install]" >> ~/.pip/pip.conf

echo "trusted-host=mirrors.aliyun.com" >> ~/.pip/pip.conf

cd /data

#注释掉 tensorflow 依赖

sed -i 's/tensorflow/#tensorflow/g' requirements.txt

pip3 install -r requirements.txt

3,使用uget工具下载模型,文件大容易卡死

sudo apt install uget

然后就是网络特别的慢了。根本下载不了,就卡在进度中。几个特别大的模型,最大的6G。

一个比一个大,不知道压缩没有:

498M:

https://openaipublic.blob.core.windows.net/gpt-2/models/124M/model.ckpt.data-00000-of-00001

1.42G

https://openaipublic.blob.core.windows.net/gpt-2/models/355M/model.ckpt.data-00000-of-00001

3.10G

https://openaipublic.blob.core.windows.net/gpt-2/models/774M/model.ckpt.data-00000-of-00001

6.23G

https://openaipublic.blob.core.windows.net/gpt-2/models/1558M/model.ckpt.data-00000-of-00001

使用工具下载模型,命令行执行的时候容易卡死:

这个云地址不支持多线程下载,就下载了一个最小的124M的模型。

先尝个新鲜就行。

剩下的文件可以单独下载:

gpt2 里面的代码,去掉模型文件其他用脚本下载,哎网络是个大问题。

也没有国内的镜像。

download_model.py 124M

修改了代码,去掉了最大的model.ckpt.data 这个单独下载,下载了拷贝进去。

import os

import sys

import requests

from tqdm import tqdm

if len(sys.argv) != 2:

print('You must enter the model name as a parameter, e.g.: download_model.py 124M')

sys.exit(1)

model = sys.argv[1]

subdir = os.path.join('models', model)

if not os.path.exists(subdir):

os.makedirs(subdir)

subdir = subdir.replace('\\\\','/') # needed for Windows

for filename in ['checkpoint','encoder.json','hparams.json', 'model.ckpt.index', 'model.ckpt.meta', 'vocab.bpe']:

r = requests.get("https://openaipublic.blob.core.windows.net/gpt-2/" + subdir + "/" + filename, stream=True)

with open(os.path.join(subdir, filename), 'wb') as f:

file_size = int(r.headers["content-length"])

chunk_size = 1000

with tqdm(ncols=100, desc="Fetching " + filename, total=file_size, unit_scale=True) as pbar:

# 1k for chunk_size, since Ethernet packet size is around 1500 bytes

for chunk in r.iter_content(chunk_size=chunk_size):

f.write(chunk)

pbar.update(chunk_size)

4,研究使用gpt2-simple执行demo,训练200次

然后运行demo.py 代码

项目代码:

提前把模型和文件准备好:

https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt

另存为,在工程目录

shakespeare.txt

gpt-2-simple/models$ tree

.

└── 124M

├── checkpoint

├── encoder.json

├── hparams.json

├── model.ckpt.data-00000-of-00001

├── model.ckpt.index

├── model.ckpt.meta

└── vocab.bpe

1 directory, 7 files

https://github.com/minimaxir/gpt-2-simple

import gpt_2_simple as gpt2

import os

import requests

model_name = "124M"

file_name = "shakespeare.txt"

sess = gpt2.start_tf_sess()

print("########### init start ###########")

gpt2.finetune(sess,

file_name,

model_name=model_name,

steps=200) # steps is max number of training steps

gpt2.generate(sess)

print("########### finish ###########")

执行:

time python demo.py

real 80m14.186s

user 513m37.158s

sys 37m45.501s

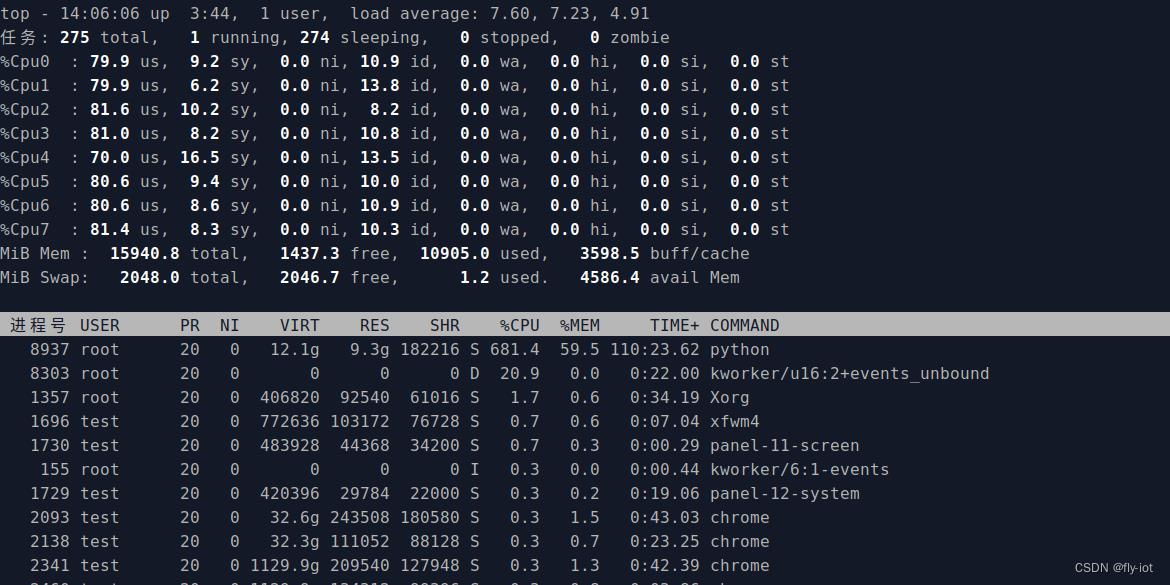

开始训练,做测试,模型训练200次。耗时是 1小时 20分钟。

用的是 Intel® Core™ i7-9700 CPU @ 3.00GHz,8核8线程的。

使用CPU训练,没有显卡。

cpu都是80%,load 是 7 ,风扇已经呼呼转了。

然后生成对话:

demo-run.py

import gpt_2_simple as gpt2

sess = gpt2.start_tf_sess()

gpt2.load_gpt2(sess)

gpt2.generate(sess)

执行结果,没有cpu/gpu 优化:

python demo_generate.py

2023-03-03 13:11:53.801232: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2023-03-03 13:11:55.191519: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2023-03-03 13:11:57.054783: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:357] MLIR V1 optimization pass is not enabled

Loading checkpoint checkpoint/run1/model-200

Ministers' policy: policy

I am the king, and

I shall have none of you;

But, in the desire of your majesty,

I shall take your honour's honour,

And give you no better honour than

To be a king and a king's son,

And my honour shall have no more than that

Which you have given to me.

GLOUCESTER:

MONTAGUE:

Mistress:

Go, go, go, go, go, go, go, go, go!

GLOUCESTER:

You have done well, my lord;

I was but a piece of a body;

And, if thou meet me, I'll take thy pleasure;

And, if thou be not satisfied

I'll give thee another way, or let

My tongue hope that thou wilt find a friend:

I'll be your business, my lord.

MONTAGUE:

Go, go, go, go!

GLOUCESTER:

Go, go, go!

MONTAGUE:

Go, go, go!

GLOUCESTER:

You have been so well met, my lord,

I'll look you to the point:

If thou wilt find a friend, I'll be satisfied;

Thou hast no other choice but to be a king.

MONTAGUE:

Go, go, go!

GLOUCESTER:

Go, go, go!

MONTAGUE:

Go, go, go!

GLOUCESTER:

Go, go, go!

MONTAGUE:

Go, go, go!

GLOUCESTER:

Go, go, go!

KING RICHARD II:

A villain, if you have any, is a villain without a villain.

WARWICK:

I have seen the villain, not a villain,

But--

KING RICHARD II:

Here is the villain.

WARWICK:

A villain.

KING RICHARD II:

But a villain, let him not speak with you.

WARWICK:

Why, then, is there in this house no man of valour?

KING RICHARD II:

The Lord Northumberland, the Earl of Wiltshire,

The noble Earl of Wiltshire, and the Duke of Norfolk

All villainous.

WARWICK:

And here comes the villain?

KING RICHARD II:

He is a villain, if you be a villain.

每次生成的对话都不一样呢。可以多运行几次,生成的内容都是不一样的。

5,总结

ai果然是高技术含量的东西,代码啥的不多,就是没有太看懂。

然后消耗CPU和GPU资源,也是非常消耗硬件的。

这个很小的模型训练200次,都这么费时间,更何况是大数据量多参数的模型呢!!

同时这个基础设施也要搭建起来呢,有个项目要研究下了,就是

https://www.kubeflow.org/

得去研究服务器集群了,因为Nvidia的限制,服务器上跑的都是又贵又性能低的显卡。

但是可以本地跑集群做训练呢!!!

本文的原文连接是:

https://blog.csdn.net/freewebsys/article/details/108971807

以上是关于研究开源gpt-2-simple项目,跑一个简单的模型,然后生成一段对话。用的是 Intel(R) Core(TM) i7-9700,8核8线程,训练最小的模型200次跑1个小时20分钟的主要内容,如果未能解决你的问题,请参考以下文章