Elasticsearch: Parent and sibling aggregations in Elasticsearch

Posted by the Elastic China community official blog


Broadly speaking, aggregations can be divided into two types: parent aggregations and sibling aggregations. You may find them a little confusing, so let's look at what they are and how to use them.

Preparing the data

We use the sample web logs data set bundled with Kibana for the demonstration. Once it is loaded, Elasticsearch contains an index named kibana_sample_data_logs.

Parent aggregations

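Parent aggregations are a group of aggregations that work on the input from a parent aggregation to produce new buckets, which are then added to the existing buckets. Take a look at the following listings.

We start with an aggregation that returns the number of documents per day: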

GET kibana_sample_data_logs/_search?filter_path=aggregations
{
  "size": 0,
  "aggs": {
    "count_per_day": {
      "date_histogram": {
        "field": "@timestamp",
        "calendar_interval": "day"
      }
    }
  }
}

The aggregation above produces the following result:

{
  "aggregations": {
    "count_per_day": {
      "buckets": [
        { "key_as_string": "2023-01-22T00:00:00.000Z", "key": 1674345600000, "doc_count": 249 },
        { "key_as_string": "2023-01-23T00:00:00.000Z", "key": 1674432000000, "doc_count": 231 },
        { "key_as_string": "2023-01-24T00:00:00.000Z", "key": 1674518400000, "doc_count": 230 },
        { "key_as_string": "2023-01-25T00:00:00.000Z", "key": 1674604800000, "doc_count": 236 },
        { "key_as_string": "2023-01-26T00:00:00.000Z", "key": 1674691200000, "doc_count": 230 },
        { "key_as_string": "2023-01-27T00:00:00.000Z", "key": 1674777600000, "doc_count": 230 },
        { "key_as_string": "2023-01-28T00:00:00.000Z", "key": 1674864000000, "doc_count": 229 },
        { "key_as_string": "2023-01-29T00:00:00.000Z", "key": 1674950400000, "doc_count": 231 },
        { "key_as_string": "2023-01-30T00:00:00.000Z", "key": 1675036800000, "doc_count": 230 },
        { "key_as_string": "2023-01-31T00:00:00.000Z", "key": 1675123200000, "doc_count": 230 },
        { "key_as_string": "2023-02-01T00:00:00.000Z", "key": 1675209600000, "doc_count": 230 },
        { "key_as_string": "2023-02-02T00:00:00.000Z", "key": 1675296000000, "doc_count": 230 },
        { "key_as_string": "2023-02-03T00:00:00.000Z", "key": 1675382400000, "doc_count": 230 },
        { "key_as_string": "2023-02-04T00:00:00.000Z", "key": 1675468800000, "doc_count": 230 },
        { "key_as_string": "2023-02-05T00:00:00.000Z", "key": 1675555200000, "doc_count": 230 },
        { "key_as_string": "2023-02-06T00:00:00.000Z", "key": 1675641600000, "doc_count": 230 },
        { "key_as_string": "2023-02-07T00:00:00.000Z", "key": 1675728000000, "doc_count": 230 },
        { "key_as_string": "2023-02-08T00:00:00.000Z", "key": 1675814400000, "doc_count": 229 },
        { "key_as_string": "2023-02-09T00:00:00.000Z", "key": 1675900800000, "doc_count": 231 },
        { "key_as_string": "2023-02-10T00:00:00.000Z", "key": 1675987200000, "doc_count": 230 },
        { "key_as_string": "2023-02-11T00:00:00.000Z", "key": 1676073600000, "doc_count": 230 },
        { "key_as_string": "2023-02-12T00:00:00.000Z", "key": 1676160000000, "doc_count": 230 },
        { "key_as_string": "2023-02-13T00:00:00.000Z", "key": 1676246400000, "doc_count": 230 },
        { "key_as_string": "2023-02-14T00:00:00.000Z", "key": 1676332800000, "doc_count": 230 },
        { "key_as_string": "2023-02-15T00:00:00.000Z", "key": 1676419200000, "doc_count": 230 },
        { "key_as_string": "2023-02-16T00:00:00.000Z", "key": 1676505600000, "doc_count": 230 },
        { "key_as_string": "2023-02-17T00:00:00.000Z", "key": 1676592000000, "doc_count": 230 },
        { "key_as_string": "2023-02-18T00:00:00.000Z", "key": 1676678400000, "doc_count": 230 },
        { "key_as_string": "2023-02-19T00:00:00.000Z", "key": 1676764800000, "doc_count": 229 },
        { "key_as_string": "2023-02-20T00:00:00.000Z", "key": 1676851200000, "doc_count": 231 },
        { "key_as_string": "2023-02-21T00:00:00.000Z", "key": 1676937600000, "doc_count": 173 },
        { "key_as_string": "2023-02-22T00:00:00.000Z", "key": 1677024000000, "doc_count": 230 },
        { "key_as_string": "2023-02-23T00:00:00.000Z", "key": 1677110400000, "doc_count": 230 },
        { "key_as_string": "2023-02-24T00:00:00.000Z", "key": 1677196800000, "doc_count": 230 },
        { "key_as_string": "2023-02-25T00:00:00.000Z", "key": 1677283200000, "doc_count": 229 },
        { "key_as_string": "2023-02-26T00:00:00.000Z", "key": 1677369600000, "doc_count": 231 },
        { "key_as_string": "2023-02-27T00:00:00.000Z", "key": 1677456000000, "doc_count": 230 },
        { "key_as_string": "2023-02-28T00:00:00.000Z", "key": 1677542400000, "doc_count": 229 },
        { "key_as_string": "2023-03-01T00:00:00.000Z", "key": 1677628800000, "doc_count": 231 },
        { "key_as_string": "2023-03-02T00:00:00.000Z", "key": 1677715200000, "doc_count": 230 },
        { "key_as_string": "2023-03-03T00:00:00.000Z", "key": 1677801600000, "doc_count": 230 },
        { "key_as_string": "2023-03-04T00:00:00.000Z", "key": 1677888000000, "doc_count": 329 },
        { "key_as_string": "2023-03-05T00:00:00.000Z", "key": 1677974400000, "doc_count": 231 },
        { "key_as_string": "2023-03-06T00:00:00.000Z", "key": 1678060800000, "doc_count": 230 },
        { "key_as_string": "2023-03-07T00:00:00.000Z", "key": 1678147200000, "doc_count": 230 },
        { "key_as_string": "2023-03-08T00:00:00.000Z", "key": 1678233600000, "doc_count": 230 },
        { "key_as_string": "2023-03-09T00:00:00.000Z", "key": 1678320000000, "doc_count": 230 },
        { "key_as_string": "2023-03-10T00:00:00.000Z", "key": 1678406400000, "doc_count": 230 },
        { "key_as_string": "2023-03-11T00:00:00.000Z", "key": 1678492800000, "doc_count": 230 },
        { "key_as_string": "2023-03-12T00:00:00.000Z", "key": 1678579200000, "doc_count": 230 },
        { "key_as_string": "2023-03-13T00:00:00.000Z", "key": 1678665600000, "doc_count": 230 },
        { "key_as_string": "2023-03-14T00:00:00.000Z", "key": 1678752000000, "doc_count": 230 },
        { "key_as_string": "2023-03-15T00:00:00.000Z", "key": 1678838400000, "doc_count": 230 },
        { "key_as_string": "2023-03-16T00:00:00.000Z", "key": 1678924800000, "doc_count": 230 },
        { "key_as_string": "2023-03-17T00:00:00.000Z", "key": 1679011200000, "doc_count": 230 },
        { "key_as_string": "2023-03-18T00:00:00.000Z", "key": 1679097600000, "doc_count": 230 },
        { "key_as_string": "2023-03-19T00:00:00.000Z", "key": 1679184000000, "doc_count": 230 },
        { "key_as_string": "2023-03-20T00:00:00.000Z", "key": 1679270400000, "doc_count": 230 },
        { "key_as_string": "2023-03-21T00:00:00.000Z", "key": 1679356800000, "doc_count": 230 },
        { "key_as_string": "2023-03-22T00:00:00.000Z", "key": 1679443200000, "doc_count": 230 },
        { "key_as_string": "2023-03-23T00:00:00.000Z", "key": 1679529600000, "doc_count": 205 }
      ]
    }
  }
}
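To work with a response of this shape in code, a minimal Python sketch follows. It assumes the response body has already been parsed into a dict; the `response` literal holds only the first three buckets shown above, and the helper name `busiest_day` is hypothetical, not part of any Elasticsearch client.

```python
# Only the first three buckets from the response above; a real response has one per day.
response = {
    "aggregations": {
        "count_per_day": {
            "buckets": [
                {"key_as_string": "2023-01-22T00:00:00.000Z", "key": 1674345600000, "doc_count": 249},
                {"key_as_string": "2023-01-23T00:00:00.000Z", "key": 1674432000000, "doc_count": 231},
                {"key_as_string": "2023-01-24T00:00:00.000Z", "key": 1674518400000, "doc_count": 230},
            ]
        }
    }
}


def busiest_day(resp):
    """Return (date string, doc_count) of the bucket with the most documents."""
    # The path mirrors the aggregation name chosen in the request: count_per_day.
    buckets = resp["aggregations"]["count_per_day"]["buckets"]
    top = max(buckets, key=lambda b: b["doc_count"])
    return top["key_as_string"], top["doc_count"]


day, count = busiest_day(response)
print(day, count)
```

Each bucket is an ordinary dict, so anything that iterates over `buckets` can read `key_as_string` and `doc_count` directly.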

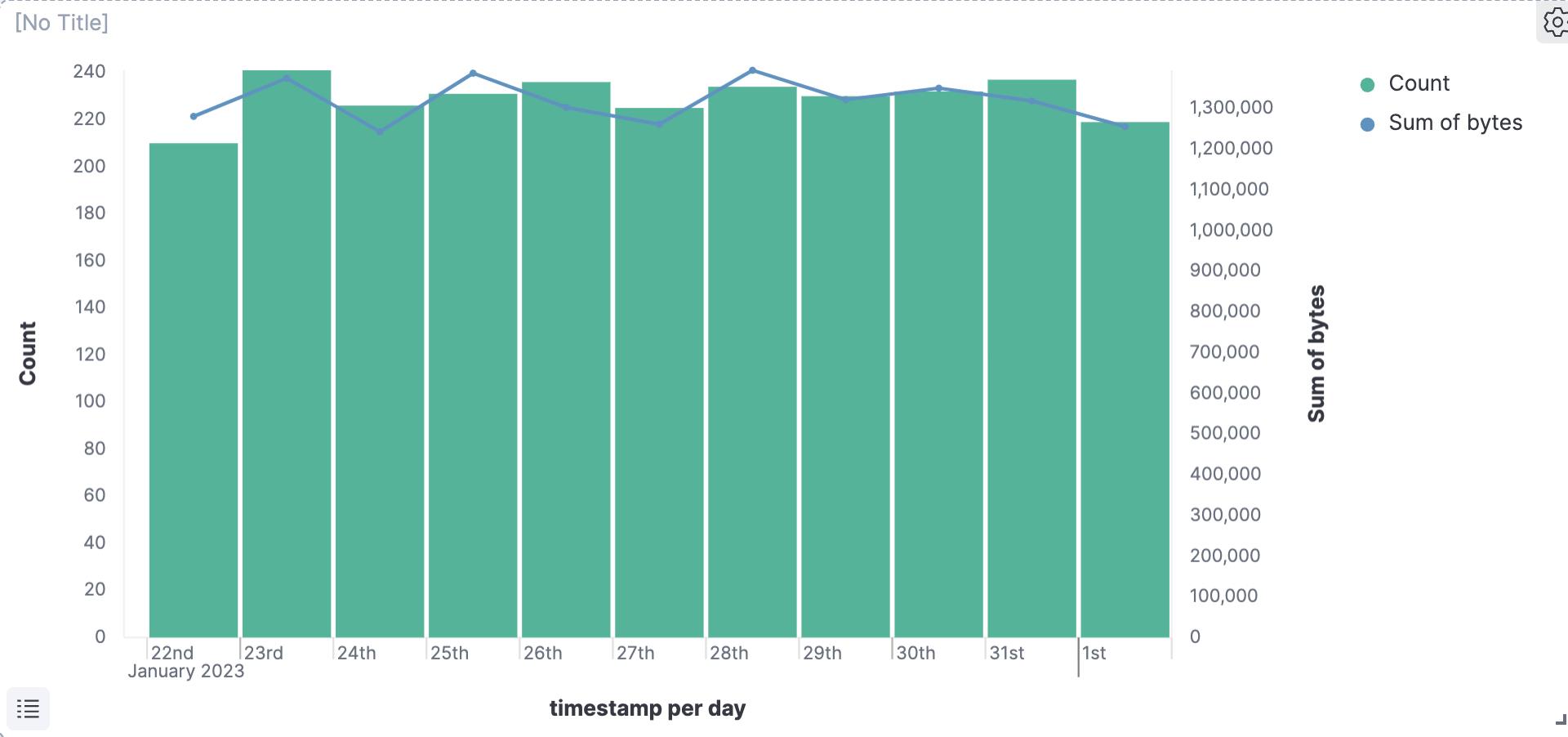

如果用图来表达的话就是:

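The result shows the number of documents for each day. The next question is: what if we want the total number of downloaded bytes in each bucket?

We can use the following aggregation: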

GET kibana_sample_data_logs/_search?filter_path=aggregations
{
  "size": 0,
  "aggs": {
    "count_per_day": {
      "date_histogram": {
        "field": "@timestamp",
        "calendar_interval": "day"
      },
      "aggs": {
        "total_bytes_per_day": {
          "sum": {
            "field": "bytes"
          }
        }
      }
    }
  }
}

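Above, for each daily bucket we sum the bytes field of the documents it contains, which tells us how much data was downloaded each day. The result of this aggregation is: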

{
  "aggregations": {
    "count_per_day": {
      "buckets": [
        { "key_as_string": "2023-01-22T00:00:00.000Z", "key": 1674345600000, "doc_count": 249, "total_bytes_per_day": { "value": 1531493 } },
        { "key_as_string": "2023-01-23T00:00:00.000Z", "key": 1674432000000, "doc_count": 231, "total_bytes_per_day": { "value": 1272610 } },
        { "key_as_string": "2023-01-24T00:00:00.000Z", "key": 1674518400000, "doc_count": 230, "total_bytes_per_day": { "value": 1265206 } },
        { "key_as_string": "2023-01-25T00:00:00.000Z", "key": 1674604800000, "doc_count": 236, "total_bytes_per_day": { "value": 1408912 } },
        { "key_as_string": "2023-01-26T00:00:00.000Z", "key": 1674691200000, "doc_count": 230, "total_bytes_per_day": { "value": 1308255 } },
        { "key_as_string": "2023-01-27T00:00:00.000Z", "key": 1674777600000, "doc_count": 230, "total_bytes_per_day": { "value": 1259611 } },
        { "key_as_string": "2023-01-28T00:00:00.000Z", "key": 1674864000000, "doc_count": 229, "total_bytes_per_day": { "value": 1354113 } },
        { "key_as_string": "2023-01-29T00:00:00.000Z", "key": 1674950400000, "doc_count": 231, "total_bytes_per_day": { "value": 1344454 } },
        { "key_as_string": "2023-01-30T00:00:00.000Z", "key": 1675036800000, "doc_count": 230, "total_bytes_per_day": { "value": 1352342 } },
        { "key_as_string": "2023-01-31T00:00:00.000Z", "key": 1675123200000, "doc_count": 230, "total_bytes_per_day": { "value": 1262058 } },
        { "key_as_string": "2023-02-01T00:00:00.000Z", "key": 1675209600000, "doc_count": 230, "total_bytes_per_day": { "value": 1347502 } },
        { "key_as_string": "2023-02-02T00:00:00.000Z", "key": 1675296000000, "doc_count": 230, "total_bytes_per_day": { "value": 1250818 } },
        { "key_as_string": "2023-02-03T00:00:00.000Z", "key": 1675382400000, "doc_count": 230, "total_bytes_per_day": { "value": 1354851 } },
        { "key_as_string": "2023-02-04T00:00:00.000Z", "key": 1675468800000, "doc_count": 230, "total_bytes_per_day": { "value": 1215747 } },
        { "key_as_string": "2023-02-05T00:00:00.000Z", "key": 1675555200000, "doc_count": 230, "total_bytes_per_day": { "value": 1405353 } },
        { "key_as_string": "2023-02-06T00:00:00.000Z", "key": 1675641600000, "doc_count": 230, "total_bytes_per_day": { "value": 1334883 } },
        { "key_as_string": "2023-02-07T00:00:00.000Z", "key": 1675728000000, "doc_count": 230, "total_bytes_per_day": { "value": 1382164 } },
        { "key_as_string": "2023-02-08T00:00:00.000Z", "key": 1675814400000, "doc_count": 229, "total_bytes_per_day": { "value": 1326963 } },
        { "key_as_string": "2023-02-09T00:00:00.000Z", "key": 1675900800000, "doc_count": 231, "total_bytes_per_day": { "value": 1309513 } },
        { "key_as_string": "2023-02-10T00:00:00.000Z", "key": 1675987200000, "doc_count": 230, "total_bytes_per_day": { "value": 1197116 } },
        { "key_as_string": "2023-02-11T00:00:00.000Z", "key": 1676073600000, "doc_count": 230, "total_bytes_per_day": { "value": 1372478 } },
        { "key_as_string": "2023-02-12T00:00:00.000Z", "key": 1676160000000, "doc_count": 230, "total_bytes_per_day": { "value": 1321734 } },
        { "key_as_string": "2023-02-13T00:00:00.000Z", "key": 1676246400000, "doc_count": 230, "total_bytes_per_day": { "value": 1289226 } },
        { "key_as_string": "2023-02-14T00:00:00.000Z", "key": 1676332800000, "doc_count": 230, "total_bytes_per_day": { "value": 1325093 } },
        { "key_as_string": "2023-02-15T00:00:00.000Z", "key": 1676419200000, "doc_count": 230, "total_bytes_per_day": { "value": 1250263 } },
        { "key_as_string": "2023-02-16T00:00:00.000Z", "key": 1676505600000, "doc_count": 230, "total_bytes_per_day": { "value": 1294967 } },
        { "key_as_string": "2023-02-17T00:00:00.000Z", "key": 1676592000000, "doc_count": 230, "total_bytes_per_day": { "value": 1289010 } },
        { "key_as_string": "2023-02-18T00:00:00.000Z", "key": 1676678400000, "doc_count": 230, "total_bytes_per_day": { "value": 1285469 } },
        { "key_as_string": "2023-02-19T00:00:00.000Z", "key": 1676764800000, "doc_count": 229, "total_bytes_per_day": { "value": 1327017 } },
        { "key_as_string": "2023-02-20T00:00:00.000Z", "key": 1676851200000, "doc_count": 231, "total_bytes_per_day": { "value": 1368197 } },
        { "key_as_string": "2023-02-21T00:00:00.000Z", "key": 1676937600000, "doc_count": 173, "total_bytes_per_day": { "value": 1030140 } },
        { "key_as_string": "2023-02-22T00:00:00.000Z", "key": 1677024000000, "doc_count": 230, "total_bytes_per_day": { "value": 1269612 } },
        { "key_as_string": "2023-02-23T00:00:00.000Z", "key": 1677110400000, "doc_count": 230, "total_bytes_per_day": { "value": 1266399 } },
        { "key_as_string": "2023-02-24T00:00:00.000Z", "key": 1677196800000, "doc_count": 230, "total_bytes_per_day": { "value": 1188422 } },
        { "key_as_string": "2023-02-25T00:00:00.000Z", "key": 1677283200000, "doc_count": 229, "total_bytes_per_day": { "value": 1240567 } },
        { "key_as_string": "2023-02-26T00:00:00.000Z", "key": 1677369600000, "doc_count": 231, "total_bytes_per_day": { "value": 1350170 } },
        { "key_as_string": "2023-02-27T00:00:00.000Z", "key": 1677456000000, "doc_count": 230, "total_bytes_per_day": { "value": 1327906 } },
        { "key_as_string": "2023-02-28T00:00:00.000Z", "key": 1677542400000, "doc_count": 229, "total_bytes_per_day": { "value": 1324946 } },
        { "key_as_string": "2023-03-01T00:00:00.000Z", "key": 1677628800000, "doc_count": 231, "total_bytes_per_day": { "value": 1327983 } },
        { "key_as_string": "2023-03-02T00:00:00.000Z", "key": 1677715200000, "doc_count": 230, "total_bytes_per_day": { "value": 1371387 } },
        { "key_as_string": "2023-03-03T00:00:00.000Z", "key": 1677801600000, "doc_count": 230, "total_bytes_per_day": { "value": 1335187 } },
        { "key_as_string": "2023-03-04T00:00:00.000Z", "key": 1677888000000, "doc_count": 329, "total_bytes_per_day": { "value": 1558542 } },
        { "key_as_string": "2023-03-05T00:00:00.000Z", "key": 1677974400000, "doc_count": 231, "total_bytes_per_day": { "value": 1298767 } },
        { "key_as_string": "2023-03-06T00:00:00.000Z", "key": 1678060800000, "doc_count": 230, "total_bytes_per_day": { "value": 1285049 } },
        { "key_as_string": "2023-03-07T00:00:00.000Z", "key": 1678147200000, "doc_count": 230, "total_bytes_per_day": { "value": 1376259 } },
        { "key_as_string": "2023-03-08T00:00:00.000Z", "key": 1678233600000, "doc_count": 230, "total_bytes_per_day": { "value": 1283288 } },
        { "key_as_string": "2023-03-09T00:00:00.000Z", "key": 1678320000000, "doc_count": 230, "total_bytes_per_day": { "value": 1318528 } },
        { "key_as_string": "2023-03-10T00:00:00.000Z", "key": 1678406400000, "doc_count": 230, "total_bytes_per_day": { "value": 1290236 } },
        { "key_as_string": "2023-03-11T00:00:00.000Z", "key": 1678492800000, "doc_count": 230, "total_bytes_per_day": { "value": 1286164 } },
        { "key_as_string": "2023-03-12T00:00:00.000Z", "key": 1678579200000, "doc_count": 230, "total_bytes_per_day": { "value": 1316296 } },
        { "key_as_string": "2023-03-13T00:00:00.000Z", "key": 1678665600000, "doc_count": 230, "total_bytes_per_day": { "value": 1229930 } },
        { "key_as_string": "2023-03-14T00:00:00.000Z", "key": 1678752000000, "doc_count": 230, "total_bytes_per_day": { "value": 1345543 } },
        { "key_as_string": "2023-03-15T00:00:00.000Z", "key": 1678838400000, "doc_count": 230, "total_bytes_per_day": { "value": 1238545 } },
        { "key_as_string": "2023-03-16T00:00:00.000Z", "key": 1678924800000, "doc_count": 230, "total_bytes_per_day": { "value": 1320320 } },
        { "key_as_string": "2023-03-17T00:00:00.000Z", "key": 1679011200000, "doc_count": 230, "total_bytes_per_day": { "value": 1304937 } },
        { "key_as_string": "2023-03-18T00:00:00.000Z", "key": 1679097600000, "doc_count": 230, "total_bytes_per_day": { "value": 1311265 } },
        { "key_as_string": "2023-03-19T00:00:00.000Z", "key": 1679184000000, "doc_count": 230, "total_bytes_per_day": { "value": 1274538 } },
        { "key_as_string": "2023-03-20T00:00:00.000Z", "key": 1679270400000, "doc_count": 230, "total_bytes_per_day": { "value": 1332873 } },
        { "key_as_string": "2023-03-21T00:00:00.000Z", "key": 1679356800000, "doc_count": 230, "total_bytes_per_day": { "value": 1354188 } },
        { "key_as_string": "2023-03-22T00:00:00.000Z", "key": 1679443200000, "doc_count": 230, "total_bytes_per_day": { "value": 1175987 } },
        { "key_as_string": "2023-03-23T00:00:00.000Z", "key": 1679529600000, "doc_count": 205, "total_bytes_per_day": { "value": 1184297 } }
      ]
    }
  }
}

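From the result above, each bucket now carries a total of downloaded bytes in addition to its document count. Expressed as a visualization, that is:

If you look closely (see the figure below), the total_bytes_per_day aggregation is created as a child of the parent count_per_day aggregation. Its enclosing aggs block sits at the same level as date_histogram.

The result of this kind of aggregation is a new set of results generated inside the existing buckets (here, a single sum value per bucket). The figure below shows this result. As you can see in the figure above, the total_bytes_per_day aggregation produces new buckets tucked under the main date_histogram buckets.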
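The nesting can be mimicked in plain Python as a rough illustration. This sketch does not call Elasticsearch; the `docs` list and the function name `parent_style` are hypothetical, and only the output keys are modelled on the response shown earlier.

```python
from collections import defaultdict

# Hypothetical documents as (day, bytes) pairs; not taken from the sample index.
docs = [
    ("2023-01-22", 100),
    ("2023-01-22", 250),
    ("2023-01-23", 400),
]


def parent_style(documents):
    """Bucket documents by day, then compute a sum inside every bucket."""
    grouped = defaultdict(list)
    for day, size in documents:
        grouped[day].append(size)
    return [
        {
            "key_as_string": day,
            "doc_count": len(sizes),
            # The nested metric lives inside the bucket it was computed for.
            "total_bytes_per_day": {"value": sum(sizes)},
        }
        for day, sizes in sorted(grouped.items())
    ]


buckets = parent_style(docs)
for bucket in buckets:
    print(bucket["key_as_string"], bucket["doc_count"], bucket["total_bytes_per_day"]["value"])
```

The point of the sketch is the shape of the output: the sum is computed once per bucket and stored inside that bucket, so the number of sums always equals the number of buckets.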

Sibling aggregations

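Sibling aggregations are those that produce a new aggregation at the same level as their sibling aggregation. The code in the following listing creates an aggregation containing two queries at the same level (hence we call them siblings).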

GET kibana_sample_data_logs/_search?filter_path=aggregations
{
  "size": 0,
  "aggs": {
    "count_per_day": {
      "date_histogram": {
        "field": "@timestamp",
        "calendar_interval": "day"
      }
    },
    "totoal_bytes_of_download": {
      "sum": {
        "field": "bytes"
      }
    }
  }
}

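Above, the count_per_day and totoal_bytes_of_download aggregations are defined at the same level. If we take a snapshot of the query with the aggregations collapsed, we can see this graphically, as in the figure below.

When we execute a sibling query, a new set of buckets is produced. However, unlike a parent aggregation, which creates buckets and adds them to the existing buckets, a sibling aggregation creates its new aggregations or buckets at the root aggregation level. The query in the previous listing produces the aggregation result in the figure below, which contains newly created buckets for each sibling aggregation.

Hopefully this article has given you a better understanding of parent aggregations and sibling aggregations.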
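For contrast, the sibling layout can be sketched the same way in plain Python. Again this does not call Elasticsearch; `docs` and `sibling_style` are hypothetical names, and the output keys simply reuse the aggregation names from the listing above.

```python
from collections import Counter

# Hypothetical documents as (day, bytes) pairs; not taken from the sample index.
docs = [
    ("2023-01-22", 100),
    ("2023-01-22", 250),
    ("2023-01-23", 400),
]


def sibling_style(documents):
    """Compute two independent aggregations over the same set of documents."""
    per_day = Counter(day for day, _ in documents)
    return {
        "count_per_day": {
            "buckets": [
                {"key_as_string": day, "doc_count": count}
                for day, count in sorted(per_day.items())
            ]
        },
        # A single value for all documents, placed next to count_per_day
        # rather than inside any of its buckets.
        "totoal_bytes_of_download": {"value": sum(size for _, size in documents)},
    }


aggregations = sibling_style(docs)
print(aggregations["totoal_bytes_of_download"]["value"])
```

Here the sum appears once at the top level, and the daily buckets contain only `key_as_string` and `doc_count`.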
