❤️ ❤️ ❤️ Wow: Quickly Build a Machine Learning Dataset-Splitting App with ChatGPT + Streamlit!!!

Posted by AI算法蒋同学

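Is ChatGPT useful for Python programmers, especially those of us who use Python for data processing, data cleaning, and building machine learning models? Let's try it and find out.

What is OpenAI's ChatGPT? I'll assume you already know. With the hype all over the internet, I don't think it needs an introduction. If you happen not to know what ChatGPT is, go look it up right away (or people will laugh at you), then come back and continue.

Conversations with ChatGPT can be fun, even enlightening. But let's see how much it actually helps.

Let's see what ChatGPT can do when writing code to specifications we provide. We'll start simple and build from there.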

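I. A Simple Dataset Processing Script

I think it's best to start with some kind of data processing script. Since I plan to have ChatGPT write some machine learning scripts using various Python libraries later, this seems like a reasonable starting point.

1. Goal

First, I want to try loading a dataset; ensuring all dataset values are numeric, or converting them to numeric; checking for missing values; and splitting the dataset into training and test sets.

2. User Prompt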

Write a Python script that does the following:

- loads a dataset from csv on disk to Pandas DataFrame

- ensures that dataset is entirely numeric; converts string values to numeric and tracks this conversion in a dictionary to reference later

- checks for missing values in the DataFrame and imputes missing values for any record that has one missing value; entirely drops a record that has more than one missing value

- splits the dataset into test and training sets

3. Code Returned by ChatGPT

```python
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split

# Load the dataset from csv to a Pandas DataFrame
df = pd.read_csv("dataset.csv")

# Ensure the dataset is entirely numeric
# Convert string values to numeric and track this conversion in a dictionary to reference later
string_columns = df.columns[df.dtypes == 'object']
string_to_numeric = {}
for column in string_columns:
    # dropna() keeps missing strings as NaN so the missing-value step still sees them
    unique_values = df[column].dropna().unique()
    mapping = {value: i for i, value in enumerate(unique_values)}
    string_to_numeric[column] = mapping
    df[column] = df[column].map(mapping)

# Check for missing values in the DataFrame (isna().sum() counts them per column)
missing_values = df.isna().sum()

# Impute missing values for any record that has one missing value; entirely drop a record that has more than one missing value
for column, missing_value_count in missing_values.items():
    if missing_value_count == 1:
        # Assign the result back; fillna(inplace=True) on a single column is unreliable under Copy-on-Write
        df[column] = df[column].fillna(df[column].mean())
    elif missing_value_count > 1:
        df.dropna(subset=[column], inplace=True)

# Split the dataset into test and training sets
train_data, test_data, train_target, test_target = train_test_split(
    df.drop('target', axis=1), df['target'], test_size=0.2, random_state=0)
```

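Note: This script assumes that the target variable is stored in a column named target in the DataFrame. You'll need to modify this code based on the structure of your dataset.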
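To make the last line of the script concrete, here is a minimal sketch on a made-up 10-row DataFrame (the column names feature and target and all values are assumptions for illustration). train_test_split receives the features (every column except target) and the labels (the target column) as separate arguments; with test_size=0.2, 2 of the 10 rows should land in the test set.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data: one feature column plus a "target" column
df = pd.DataFrame({"feature": range(10), "target": [0, 1] * 5})

# Features = every column except "target"; labels = the "target" column
train_data, test_data, train_target, test_target = train_test_split(
    df.drop("target", axis=1), df["target"], test_size=0.2, random_state=0
)

print(len(train_data), len(test_data))  # 8 2
```

The four returned objects keep the original row index, so train_data and train_target stay aligned row by row.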

4. Analysis

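ChatGPT produced a useful script. It picked a dataset file name (dataset.csv) and a train/test split ratio (test_size=0.2), and its note points out what you may need to change for your own data. Overall, mission accomplished.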
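One caveat: the prompt asks for per-record handling (impute when a row has one missing value, drop the row when it has more), but the generated code counts missing values per column. Below is a minimal sketch of the per-record rule, assuming column-mean imputation for numeric columns; the function name handle_missing_per_record, its max_missing parameter, and the sample data are hypothetical. With max_missing=1 it matches this first prompt, and the same parameter covers the per-record threshold requested in Section III.

```python
import numpy as np
import pandas as pd

def handle_missing_per_record(df, max_missing=1):
    """Drop rows with more than max_missing missing values,
    then fill the remaining gaps with each column's mean."""
    # Count missing values in each row (axis=1), not each column
    missing_per_row = df.isna().sum(axis=1)
    # Keep only rows at or below the threshold
    kept = df[missing_per_row <= max_missing].copy()
    # Impute the remaining gaps with the column means of the kept rows
    return kept.fillna(kept.mean(numeric_only=True))

df = pd.DataFrame({
    "a": [1.0, np.nan, 3.0, np.nan],
    "b": [4.0, 5.0, np.nan, np.nan],
    "c": [7.0, 8.0, 9.0, 10.0],
})
result = handle_missing_per_record(df)
print(result)
```

Here the last row (two missing values) is dropped, while the two rows with a single gap are kept and filled.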

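II. Refactoring Our Code

1. Goal

Now that we have a script that does something, let's refactor it a bit. A cornerstone of good coding is using functions to organize code logically, promote reuse, and make sure you don't repeat yourself.

2. User Prompt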

Rewrite the above script to use a series of functions that make sense


3. Code Returned by ChatGPT

Here’s the script refactored into a series of functions that make sense:

```python
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split

def load_dataset(filepath):
    """Loads a dataset from a csv file to a Pandas DataFrame"""
    return pd.read_csv(filepath)

def convert_to_numeric(df):
    """Ensures the dataset is entirely numeric and converts string values to numeric"""
    string_columns = df.columns[df.dtypes == 'object']
    string_to_numeric = {}
    for column in string_columns:
        # dropna() keeps missing strings as NaN so the missing-value step still sees them
        unique_values = df[column].dropna().unique()
        mapping = {value: i for i, value in enumerate(unique_values)}
        string_to_numeric[column] = mapping
        df[column] = df[column].map(mapping)
    return df, string_to_numeric

def handle_missing_values(df):
    """Handles missing values by imputing a mean for records with one missing value, and dropping records with more than one missing value"""
    # Note: isna().sum() counts missing values per column, not per record
    missing_values = df.isna().sum()
    for column, missing_value_count in missing_values.items():
        if missing_value_count == 1:
            df[column] = df[column].fillna(df[column].mean())
        elif missing_value_count > 1:
            df.dropna(subset=[column], inplace=True)
    return df

def split_dataset(df, target_column, test_size=0.2, random_state=0):
    """Splits the dataset into test and training sets"""
    train_data, test_data, train_target, test_target = train_test_split(
        df.drop(target_column, axis=1), df[target_column],
        test_size=test_size, random_state=random_state)
    return train_data, test_data, train_target, test_target

# Load the dataset from csv to a Pandas DataFrame
df = load_dataset("dataset.csv")

# Convert string values to numeric and handle missing values
df, string_to_numeric = convert_to_numeric(df)
df = handle_missing_values(df)

# Split the dataset into test and training sets
train_data, test_data, train_target, test_target = split_dataset(df, "target")
```

Note: This script assumes that the target variable is stored in a column named target in the DataFrame. You’ll need to modify this code based on the structure of your dataset.

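Here, ChatGPT used four functions:

1. load_dataset(filepath): loads the csv dataset.

2. convert_to_numeric(df): converts string values to numeric values.

3. handle_missing_values(df): handles missing values in the dataset.

4. split_dataset(df, target_column, test_size=0.2, random_state=0): splits the dataset into test and training sets.

The code at the bottom of the script uses these functions to load, convert, and clean the dataset, then split it into test and training sets.

The dataset path and the test ratio are inputs to these functions.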
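convert_to_numeric returns the string_to_numeric dictionary alongside the DataFrame; that is what the first prompt meant by tracking the conversion "to reference later". The minimal sketch below shows one way to use it: invert each mapping and decode the numeric codes back into the original strings. The color and size columns and their values are made up, and the function is a simplified copy of the generated one that selects non-numeric columns with pandas.api.types.is_numeric_dtype, so it also picks up pandas' dedicated string dtypes rather than only object columns.

```python
import pandas as pd
from pandas.api.types import is_numeric_dtype

def convert_to_numeric(df):
    """Label-encode non-numeric columns and record each column's mapping."""
    string_to_numeric = {}
    for column in df.columns:
        if is_numeric_dtype(df[column]):
            continue  # already numeric, nothing to encode
        # Codes follow order of first appearance; NaN stays NaN
        mapping = {value: i for i, value in enumerate(df[column].dropna().unique())}
        string_to_numeric[column] = mapping
        df[column] = df[column].map(mapping)
    return df, string_to_numeric

df = pd.DataFrame({"color": ["red", "blue", "red"], "size": [1, 2, 3]})
df, string_to_numeric = convert_to_numeric(df)
print(string_to_numeric)

# Invert each {string: code} mapping into {code: string} to decode values later
numeric_to_string = {
    column: {code: value for value, code in mapping.items()}
    for column, mapping in string_to_numeric.items()
}
decoded = df["color"].map(numeric_to_string["color"])
print(decoded.tolist())
```

Keeping the inverse mapping around is handy when you later want to show model predictions or feature values in their original, human-readable form.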

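4. Analysis

ChatGPT's first script already had some very obvious logical blocks of code that could be wrapped into functions by purpose. ChatGPT did what I expected it to do. Once again, it produced a competent script.

III. Extending Our Code

1. Goal

Our script looks a bit better now that it uses some functions. Next, let's extend it slightly so that the user can specify some parameters when running it.

2. User Prompt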

Rewrite the above program to solicit the following from the command line in the form of arguments, and use them in the program:

- the path/name of the dataset csv file

- the train/test split size

- the threshold for the number of missing values per record to drop after which to drop entire record, instead of imputing the missing values

3. Code Returned by ChatGPT

Here’s the script refactored to solicit the arguments from the command line:

```python
import pandas as pd
import numpy as np
import argparse
from sklearn.model_selection import train_test_split

def load_dataset(filepath):
    """Loads a dataset from a csv file to a Pandas DataFrame"""
    return pd.read_csv(filepath)

def convert_to_numeric(df):
    """Ensures the dataset is entirely numeric and converts string values to numeric"""
    string_columns = df.columns[df.dtypes == 'object']
    string_to_numeric = {}
    for column in string_columns:
        # dropna() keeps missing strings as NaN so the missing-value step still sees them
        unique_values = df[column].dropna().unique()
        mapping = {value: i for i, value in enumerate(unique_values)}
        string_to_numeric[column] = mapping
        df[column] = df[column].map(mapping)
    return df, string_to_numeric

def handle_missing_values(df, missing_value_threshold):
    """Imputes the column mean when a column has at most missing_value_threshold
    missing values; otherwise drops the rows that are missing that column"""
    missing_values = df.isna().sum()  # per-column counts
    for column, missing_value_count in missing_values.items():
        if missing_value_count <= missing_value_threshold:
            df[column] = df[column].fillna(df[column].mean())
        else:
            df.dropna(subset=[column], inplace=True)
    return df

def split_dataset(df, target_column, test_size=0.2, random_state=0):
    """Splits the dataset into test and training sets"""
    train_data, test_data, train_target, test_target = train_test_split(
        df.drop(target_column, axis=1), df[target_column],
        test_size=test_size, random_state=random_state)
    return train_data, test_data, train_target, test_target

if __name__ == '__main__':
    # Define the command line arguments
    parser = argparse.ArgumentParser(description='Handles missing values and splits a dataset into test and training sets')
    parser.add_argument('filepath', type=str, help='The path/name of the dataset csv file')
    parser.add_argument('test_size', type=float, help='The test/train split size')
    parser.add_argument('missing_value_threshold', type=int, help='The number of missing values per record after which to drop the entire record, instead of imputing the missing values')
    args = parser.parse_args()

    # Load the dataset from csv to a Pandas DataFrame
    df = load_dataset(args.filepath)

    # Convert string values to numeric and handle missing values
    df, string_to_numeric = convert_to_numeric(df)
    df = handle_missing_values(df, args.missing_value_threshold)

    # Split the dataset into test and training sets (the last column is the target)
    train_data, test_data, train_target, test_target = split_dataset(df, df.columns[-1], test_size=args.test_size)
```

This script can be run from the command line with the following command format:

```shell
python script_name.py filepath test_size missing_value_threshold
```
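Because the script reads its settings with argparse, the parser can be checked without a shell by passing an explicit list to parse_args, which otherwise reads sys.argv. A minimal sketch that rebuilds the same three positional arguments; the helper name build_parser and the values dataset.csv, 0.2 and 1 are placeholders:

```python
import argparse

def build_parser():
    """Recreate the three positional arguments used by the script above."""
    parser = argparse.ArgumentParser(
        description="Handles missing values and splits a dataset into test and training sets"
    )
    parser.add_argument("filepath", type=str, help="The path/name of the dataset csv file")
    parser.add_argument("test_size", type=float, help="The test/train split size")
    parser.add_argument("missing_value_threshold", type=int,
                        help="Missing values per record after which the record is dropped")
    return parser

# Equivalent to running: python script_name.py dataset.csv 0.2 1
args = build_parser().parse_args(["dataset.csv", "0.2", "1"])
print(args.filepath, args.test_size, args.missing_value_threshold)  # dataset.csv 0.2 1
```

The type=float and type=int converters turn the raw command-line strings into numbers before they reach the processing functions.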

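4. Analysis

Honestly, there isn't much to analyze here, since ChatGPT's comments already explain what the code does. It even tells us how to run it! One thing worth noticing: this version uses the last column of the CSV as the target (df.columns[-1]) rather than a column named target.

IV. Turning Our Code into a Streamlit App

1. Goal

Now, suppose we want to use this code in an application. Let's have ChatGPT wrap these functions in a Streamlit app.

2. User Prompt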

Rewrite the above program as a Streamlit app, allowing for the user to provide the same arguments as in the command line args above


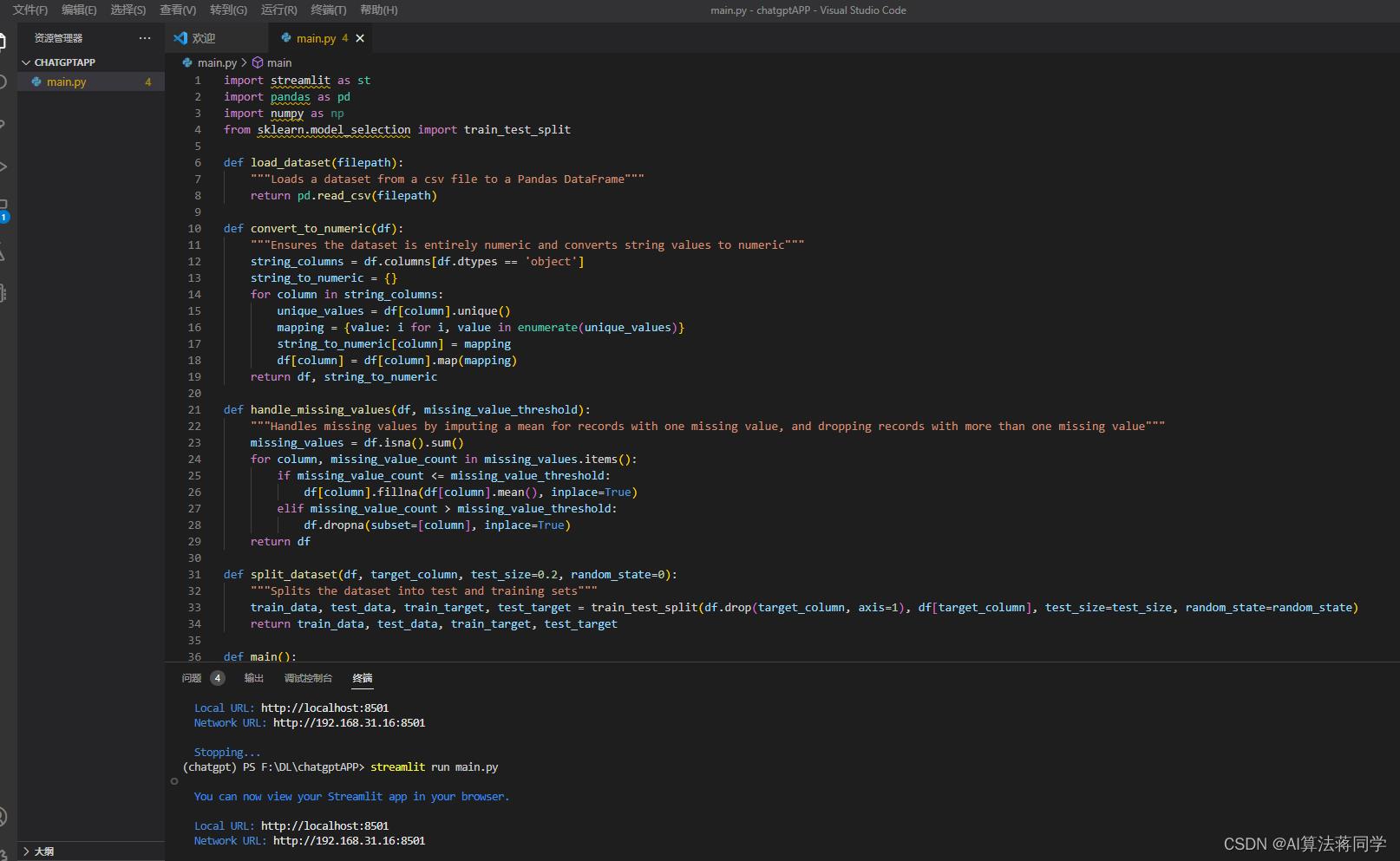

3. Code Returned by ChatGPT

Here’s the script refactored as a Streamlit app:

```python
import streamlit as st
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split

def load_dataset(filepath):
    """Loads a dataset from a csv file to a Pandas DataFrame"""
    return pd.read_csv(filepath)

def convert_to_numeric(df):
    """Ensures the dataset is entirely numeric and converts string values to numeric"""
    string_columns = df.columns[df.dtypes == 'object']
    string_to_numeric = {}
    for column in string_columns:
        # dropna() keeps missing strings as NaN so the missing-value step still sees them
        unique_values = df[column].dropna().unique()
        mapping = {value: i for i, value in enumerate(unique_values)}
        string_to_numeric[column] = mapping
        df[column] = df[column].map(mapping)
    return df, string_to_numeric

def handle_missing_values(df, missing_value_threshold):
    """Imputes the column mean when a column has at most missing_value_threshold
    missing values; otherwise drops the rows that are missing that column"""
    missing_values = df.isna().sum()  # per-column counts
    for column, missing_value_count in missing_values.items():
        if missing_value_count <= missing_value_threshold:
            df[column] = df[column].fillna(df[column].mean())
        else:
            df.dropna(subset=[column], inplace=True)
    return df

def split_dataset(df, target_column, test_size=0.2, random_state=0):
    """Splits the dataset into test and training sets"""
    train_data, test_data, train_target, test_target = train_test_split(
        df.drop(target_column, axis=1), df[target_column],
        test_size=test_size, random_state=random_state)
    return train_data, test_data, train_target, test_target

def main():
    """Main function for the Streamlit app"""
    st.title("Dataset Preprocessing App")

    # Get the file path, test size, and missing value threshold from the user
    file_path = st.text_input("Enter the path/name of the dataset csv file:")
    # Keep the split strictly between 0 and 1; train_test_split rejects 0.0 and 1.0
    test_size = st.slider("Enter the test/train split size:", 0.05, 0.95, 0.2)
    missing_value_threshold = st.slider("Enter the number of missing values per record after which to drop the entire record, instead of imputing the missing values:", 0, 100, 1)

    # Streamlit reruns the script on every interaction; wait until a path is entered
    if not file_path:
        st.info("Enter a CSV file path to start.")
        return

    # Load the dataset and convert string values to numeric
    df = load_dataset(file_path)
    df, string_to_numeric = convert_to_numeric(df)

    # Handle missing values
    df = handle_missing_values(df, missing_value_threshold)

    # Split the dataset into test and training sets (the last column is the target)
    train_data, test_data, train_target, test_target = split_dataset(df, df.columns[-1], test_size=test_size)

    # Show the final processed data
    st.write("Processed Dataset:")
    st.write(df)
    st.write("Train Data:")
    st.write(train_data)
    st.write("Train Target:")
    st.write(train_target)
    st.write("Test Data:")
    st.write(test_data)
    st.write("Test Target:")
    st.write(test_target)

if __name__ == '__main__':
    main()
```

To run the Streamlit app, save the above code in a .py file and run it from the command line with the following command:

```shell
streamlit run file_name.py
```
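If you don't have a CSV at hand, a small synthetic file can exercise every step: a string column to encode, a few missing values, and a target in the last column (which the command-line and Streamlit versions treat as the target). This is a minimal sketch; the function name make_sample_csv, the file name sample.csv, and all column names and values are made up for illustration.

```python
import numpy as np
import pandas as pd

def make_sample_csv(path="sample.csv", n_rows=20, seed=0):
    """Write a small hypothetical dataset with a string column, gaps and a target.
    n_rows must be at least 10 because rows 1, 5 and 9 get missing values."""
    rng = np.random.default_rng(seed)
    df = pd.DataFrame({
        "age": rng.integers(18, 70, size=n_rows).astype(float),
        "city": rng.choice(["Beijing", "Shanghai", "Shenzhen"], size=n_rows),
        "income": rng.normal(10_000, 2_000, size=n_rows).round(2),
    })
    # Punch a few holes so the missing-value step has something to do
    df.loc[[1, 5], "age"] = np.nan
    df.loc[[5, 9], "income"] = np.nan  # row 5 now has two missing values
    # Put the target last, since the CLI and Streamlit versions use df.columns[-1]
    df["target"] = rng.integers(0, 2, size=n_rows)
    df.to_csv(path, index=False)
    return df
```

For example, call make_sample_csv() once, then enter sample.csv in the app's path field.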

4. Analysis

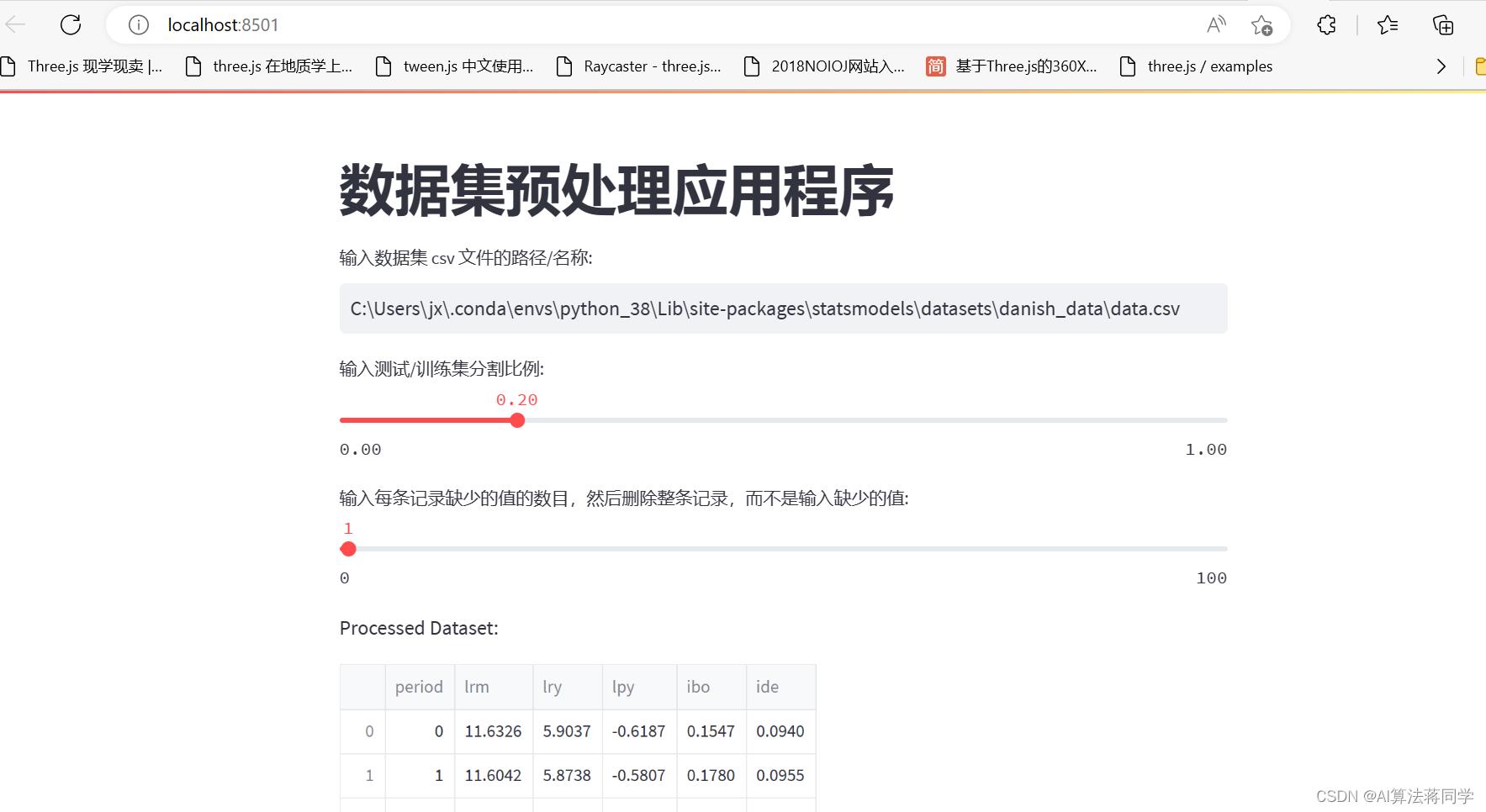

It does what it's supposed to. ChatGPT took our functions and built a simple Streamlit web app.

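V. Summary

After completing the four steps above, let's check that the code actually runs. Here's what to do:

1. Install the streamlit library

```shell
pip install streamlit
```

2. Install the scikit-learn library

```shell
pip install scikit-learn
```

3. Run it and enjoy

```shell
streamlit run main.py
```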

[Screenshot: the code in VS Code]

[Screenshot: the running web app]

Done! ChatGPT is seriously impressive!
