Backing Up Elasticsearch Snapshots to HDFS 2.6 (CDH 5.6.0)

Posted by 洽洽老大


Download location for the repository-hdfs plugin packages: https://oss.sonatype.org/content/repositories/snapshots/org/elasticsearch/elasticsearch-repository-hdfs/

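Background

For business reasons we needed to upgrade from ES 2.2.1 to ES 2.3.2 and migrate the existing indices to the new version. After reading through a lot of material, I decided to use Elasticsearch's snapshot/restore module for the index migration.

I use the HDFS that ships with CDH 5.6.0 as the file system for storing snapshots, which requires installing the repository-hdfs plugin in ES.

The HDFS address is: hdfs://192.168.10.236:8020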

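Pitfalls of the online installation (failed)

According to the repository-hdfs plugin's documentation, the plugin can be installed online with:

bin/plugin install elasticsearch/elasticsearch-repository-hdfs/2.3.2

However, this installation kept failing. On inspection, the cause was the version set in plugin-descriptor.properties: the package published for ES 2.3.2 actually corresponded to 2.3.1. Installing the 2.3.1 package instead succeeded:

bin/plugin install elasticsearch/elasticsearch-repository-hdfs/2.3.1

The plugin documentation lists the following repository settings: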

    repositories:
      hdfs:
        uri: "hdfs://<host>:<port>/"     # optional - Hadoop file-system URI
        path: "some/path"                # required - path with the file-system where data is stored/loaded
        load_defaults: "true"            # optional - whether to load the default Hadoop configuration (default) or not
        conf_location: "extra-cfg.xml"   # optional - Hadoop configuration XML to be loaded (use commas for multi values)
        conf.<key> : "<value>"           # optional - 'inlined' key=value added to the Hadoop configuration
        concurrent_streams: 5            # optional - the number of concurrent streams (defaults to 5)
        compress: "false"                # optional - whether to compress the metadata or not (default)
        user: "foobar"                   # optional - user name for creating the file system. Typically used along-side the uri for `SIMPLE` authentication
        ## Kerberos specific settings
        user_keytab: "other/path"        # optional - user keytab
        user_principal: "buckethead"     # optional - user's Kerberos principal name
        user_principal_hostname: "gw"    # optional - hostname replacing the user's Kerberos principal $host

Based on that, I appended the following to elasticsearch.yml:

    repositories.hdfs:
      uri: "hdfs://192.168.10.236:8020"
      path: "/es"
      load_defaults: "true"
      concurrent_streams: 5
      conf_location: "core-site.xml,hdfs-site.xml"
      compress: "true"

I then copied hdfs-site.xml and core-site.xml from /etc/hadoop/conf/ on the CDH 5.6.0 HDFS cluster to /elasticsearch-2.3.2/conf/ on every ES node and restarted the ES cluster.

Finally, I created the repository by running:

```
# Register an HDFS-backed snapshot repository named "backup"
curl -XPUT '192.168.10.225:9201/_snapshot/backup?pretty' -d '
{
  "type": "hdfs",
  "settings": {
    "uri": "hdfs://192.168.10.236:8020",
    "path": "/es",
    "load_defaults": "true"
  }
}'
```

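This failed with the error "Server IPC version 9 cannot communicate with client version 4". The Hadoop jars bundled with the online-installed plugin are too old: IPC version 4 is what a Hadoop 1.x client speaks, while the Hadoop 2.6 NameNode in CDH 5.6.0 speaks version 9. The online installation was therefore a dead end.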
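
One way to confirm this kind of mismatch is to look at the Hadoop jars that sit in the plugin's directory. The following is only a sketch: the `PLUGIN_DIR` path and the helper names `hadoop_major` and `check_hadoop_jars` are assumptions made for illustration, and it assumes the plugin keeps its Hadoop client jars in its own plugin directory with the version in the file name.

```shell
#!/usr/bin/env bash
# Assumed plugin location; adjust it to your own installation.
PLUGIN_DIR="${PLUGIN_DIR:-/elasticsearch-2.3.2/plugins/repository-hdfs}"

# Print the major version embedded in a jar file name such as
# hadoop-core-1.2.1.jar or hadoop-common-2.7.1.jar (prints nothing if absent).
hadoop_major() {
  basename "$1" | sed -n 's/^hadoop-[a-z-]*-\([0-9][0-9]*\)\..*\.jar$/\1/p'
}

# Report every hadoop-*.jar in the given directory.
# A Hadoop 1.x client jar cannot talk to an HDFS 2.x NameNode.
check_hadoop_jars() {
  local dir="$1" jar name major
  for jar in "$dir"/hadoop-*.jar; do
    [ -e "$jar" ] || continue
    name="$(basename "$jar")"
    major="$(hadoop_major "$jar")"
    case "$major" in
      1)  echo "too old for HDFS 2.x: $name" ;;
      "") echo "unknown version: $name" ;;
      *)  echo "ok: $name" ;;
    esac
  done
}
```

Running `check_hadoop_jars "$PLUGIN_DIR"` on a node lists the bundled Hadoop jars and flags any 1.x client jar.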

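Offline installation of the repository-hdfs plugin (succeeded)

1. Download the plugin build that matches your version from the repository-hdfs plugin repository. I downloaded elasticsearch-repository-hdfs-2.3.2.BUILD-20160516.063716-13-hadoop2.zip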

2. Copy the zip to every ES node with scp

scp download/elasticsearch-repository-hdfs-2.3.2.BUILD-20160516.063716-13-hadoop2.zip qiaqia@192.168.10.225:/opt

3. Install the plugin on each node, then configure it

bin/plugin install file:///opt/elasticsearch-repository-hdfs-2.3.2.BUILD-20160516.063716-13-hadoop2.zip

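Append the following to elasticsearch.yml (required; otherwise you will run into permission problems):

    # Disable the Java Security Manager (JSM) so that snapshots can be written to HDFS
    security.manager.enabled: false

The settings below are optional in elasticsearch.yml; they can be added here or supplied through the REST API instead:

    repositories.hdfs:
      uri: "hdfs://192.168.10.236:8020"
      path: "/es"
      load_defaults: "true"
      concurrent_streams: 5
      conf_location: "core-site.xml,hdfs-site.xml"
      compress: "true"

Restart every node.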

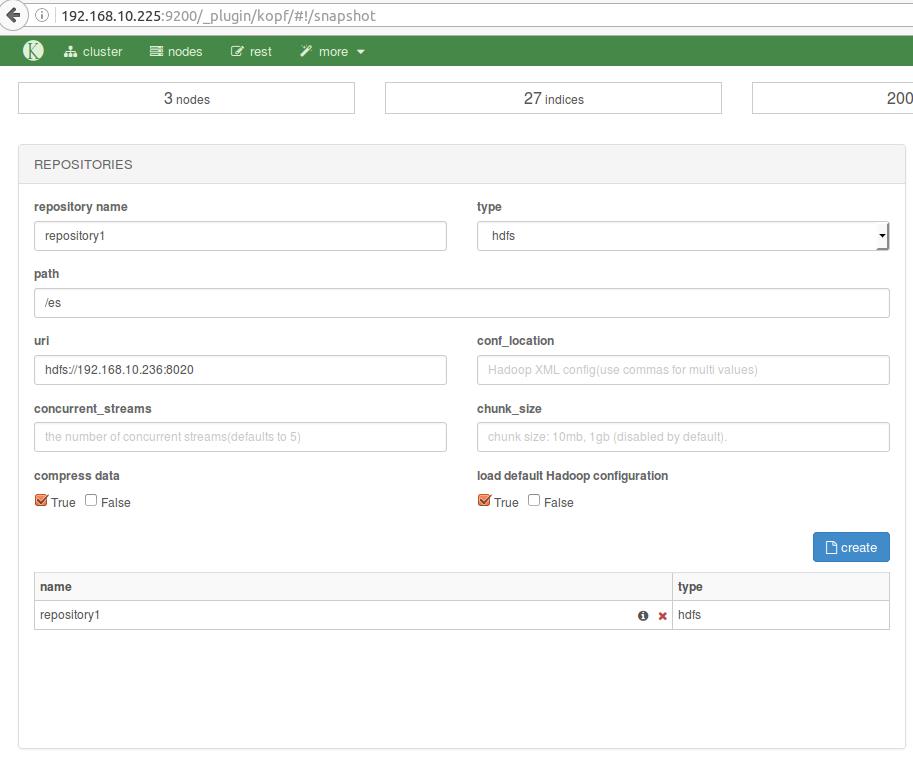

4. Create the repository (using the kopf plugin)

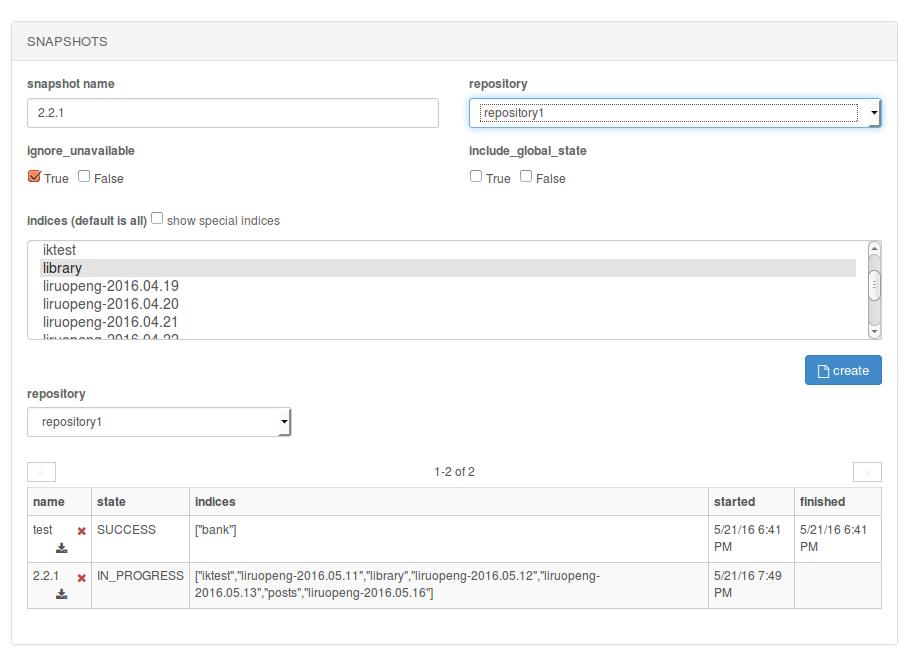
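
For readers without kopf, the same repository can be registered and checked through the snapshot REST API. This is a minimal sketch that reuses the node address, repository name and HDFS settings from this post; the helper names `repo_url` and `repo_body` are hypothetical, and the curl calls are left commented out because they need a running cluster.

```shell
#!/usr/bin/env bash
# Node address and repository name as used earlier in this post.
ES_HOST="${ES_HOST:-192.168.10.225:9201}"
REPO="${REPO:-backup}"

# URL of the snapshot repository endpoint.
repo_url() {
  printf 'http://%s/_snapshot/%s' "$ES_HOST" "$REPO"
}

# JSON body for an HDFS repository: $1 = HDFS URI, $2 = path inside HDFS.
repo_body() {
  cat <<EOF
{
  "type": "hdfs",
  "settings": {
    "uri": "$1",
    "path": "$2",
    "load_defaults": "true"
  }
}
EOF
}

# Register the repository, read it back, then ask all nodes to verify access:
# curl -XPUT  "$(repo_url)?pretty" -d "$(repo_body hdfs://192.168.10.236:8020 /es)"
# curl -XGET  "$(repo_url)?pretty"
# curl -XPOST "$(repo_url)/_verify?pretty"
```

The `_verify` call is useful after the restart because it checks that every node, not just the one receiving the request, can reach the repository.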

5. Create a snapshot

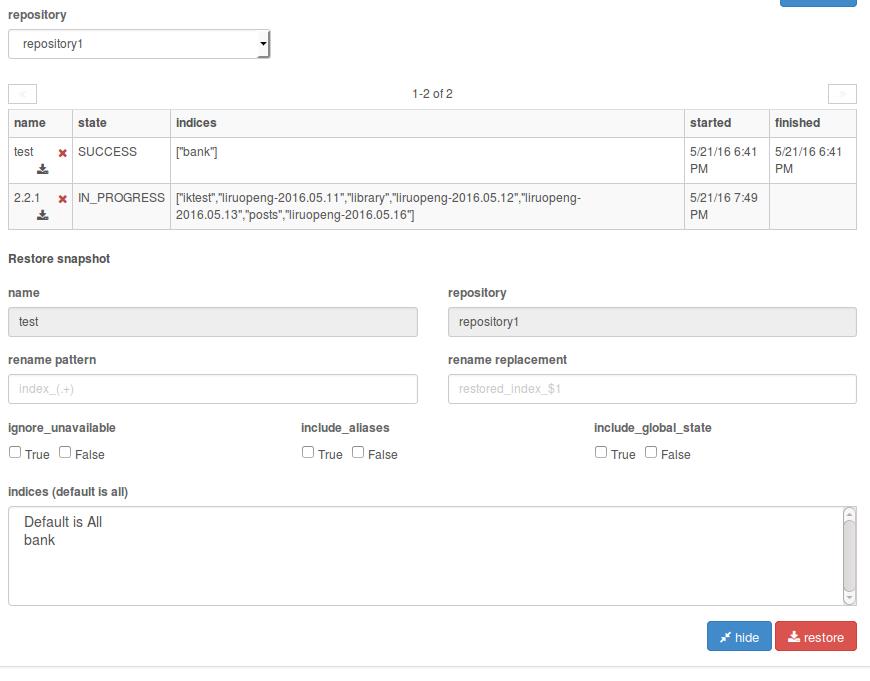
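
The screenshot shows the kopf UI; a rough command-line equivalent uses the snapshot API directly. In this sketch the snapshot name `snapshot_1`, the index names and the helpers `snapshot_url` and `snapshot_body` are hypothetical, and the curl calls are commented out because they need a running cluster.

```shell
#!/usr/bin/env bash
# Node address and repository name as used earlier in this post.
ES_HOST="${ES_HOST:-192.168.10.225:9201}"
REPO="${REPO:-backup}"

# URL of a single snapshot: $1 = snapshot name.
snapshot_url() {
  printf 'http://%s/_snapshot/%s/%s' "$ES_HOST" "$REPO" "$1"
}

# Request body limiting the snapshot to a comma-separated list of indices
# and leaving the cluster-wide state out of it.
snapshot_body() {
  printf '{"indices": "%s", "ignore_unavailable": true, "include_global_state": false}' "$1"
}

# Take the snapshot and wait for it to finish, then inspect its status:
# curl -XPUT "$(snapshot_url snapshot_1)?wait_for_completion=true" -d "$(snapshot_body 'index_1,index_2')"
# curl -XGET "$(snapshot_url snapshot_1)?pretty"
```

Omitting the request body snapshots all open indices, which may be simpler when the whole cluster is being migrated.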

6. Restore the snapshot
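
A restore can be triggered through the `_restore` endpoint of the snapshot API. The sketch below assumes the target cluster has the same `backup` repository registered and that the snapshot is called `snapshot_1`; that name, the index name and the helpers `restore_url` and `restore_body` are hypothetical. Point `ES_HOST` at a node of the cluster you are restoring into.

```shell
#!/usr/bin/env bash
# Address of a node in the target cluster (defaults to the node used in this post).
ES_HOST="${ES_HOST:-192.168.10.225:9201}"
REPO="${REPO:-backup}"

# URL of the restore endpoint: $1 = snapshot name.
restore_url() {
  printf 'http://%s/_snapshot/%s/%s/_restore' "$ES_HOST" "$REPO" "$1"
}

# Request body restoring only the listed indices, without the cluster-wide state.
restore_body() {
  printf '{"indices": "%s", "include_global_state": false}' "$1"
}

# Restore one index from the snapshot:
# curl -XPOST "$(restore_url snapshot_1)?pretty" -d "$(restore_body 'index_1')"
```

An index that already exists and is open on the target cluster has to be closed or deleted before it can be restored.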
