LeNet Neural Network - PyTorch Implementation

Posted TOPthemaster


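Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers an implementation of the LeNet neural network in PyTorch, and we hope it is a useful reference.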

LeNet

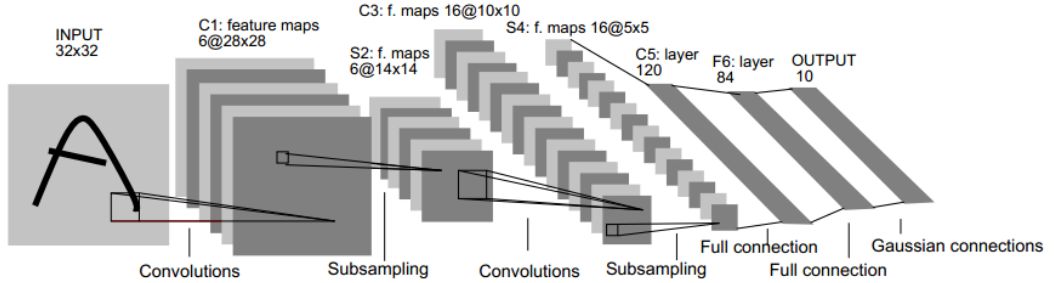

1. Network Architecture

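Setting aside SVMs (support vector machines) and MLPs (multilayer perceptrons), this is the first CNN architecture I have worked with.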

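As the figure shows, the structure is:

The input 2-D image passes through two convolution + pooling stages, then through three fully connected layers, and a softmax classifier forms the output layer.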
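In PyTorch the softmax output layer is usually not written as a separate layer: `nn.CrossEntropyLoss` takes the raw scores (logits) and applies log-softmax plus negative log-likelihood internally. The pure-Python sketch below (function names are illustrative, not part of any library) shows what that output stage computes for a single sample.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target]).

    Uses the log-sum-exp trick, i.e. log-softmax followed by
    negative log-likelihood, which is what nn.CrossEntropyLoss combines.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.1]  # hypothetical logits for 3 classes
    print([round(p, 3) for p in softmax(scores)])
    print(round(cross_entropy(scores, 0), 4))
```

Because the loss already contains the softmax, the network's last layer should return plain logits; adding an explicit softmax before `nn.CrossEntropyLoss` would apply it twice.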

2. Network Design in PyTorch

Network construction code:

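```python
import torch.nn.functional as F
from torch import nn


class LeNet5(nn.Module):
    def __init__(self):
        super().__init__()
        # 1 input channel -> 6 feature maps, 5x5 kernel; padding=2 keeps 28x28
        self.conv1 = nn.Conv2d(1, 6, 5, padding=2)
        # 6 -> 16 feature maps, 5x5 kernel, no padding (14x14 -> 10x10)
        self.conv2 = nn.Conv2d(6, 16, 5)
        # 16 * 5 * 5 is the flattened size after the second pooling layer
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # conv -> ReLU -> 2x2 max pooling: 28x28 -> 14x14
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # conv -> ReLU -> 2x2 max pooling: 10x10 -> 5x5
        x = F.max_pool2d(F.relu(self.conv2(x)), (2, 2))
        # Flatten to (batch, 16 * 5 * 5)
        x = x.view(x.shape[0], -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        # Raw logits; the softmax is applied inside nn.CrossEntropyLoss
        return self.fc3(x)
```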
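The `16 * 5 * 5` input size of `fc1` follows from the layer arithmetic for a 28x28 MNIST image. This pure-Python sketch (the helper names are illustrative, not PyTorch functions) traces the spatial size through both conv + pool stages and counts the trainable parameters; `summary` from torchsummary, called on the model with input size `(1, 28, 28)`, should report the same total.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of max pooling without padding."""
    return (size - kernel) // stride + 1


def conv_params(c_in, c_out, k):
    """Weights (c_out * c_in * k * k) plus one bias per output channel."""
    return c_out * c_in * k * k + c_out


def linear_params(n_in, n_out):
    """Weight matrix plus bias vector of a fully connected layer."""
    return n_in * n_out + n_out


def lenet5_trace(input_size=28):
    """Return (final spatial size, flattened features, total parameters)."""
    s = pool_out(conv_out(input_size, 5, padding=2))  # conv1 + pool
    s = pool_out(conv_out(s, 5))                       # conv2 + pool
    flat = 16 * s * s
    params = (conv_params(1, 6, 5) + conv_params(6, 16, 5)
              + linear_params(flat, 120) + linear_params(120, 84)
              + linear_params(84, 10))
    return s, flat, params


print(lenet5_trace())  # (5, 400, 61706)
```

The model as written therefore expects 28x28 inputs: a 32x32 image (the size used in the original LeNet-5 paper) would produce 16 * 6 * 6 = 576 features, and `fc1` would reject it.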

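3. Testing the Network

For testing I used the MNIST dataset. To stay closer to a real-world setting (and, mainly, to make it easier for me to understand), I converted the downloaded dataset into image files, which is more intuitive. The dataset is split into a train part and a test part, and the test needs the following setup: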
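The post does not show how the downloaded files were turned into images. As a minimal sketch, assuming the standard MNIST IDX files (a big-endian header with magic number 2051 for images or 2049 for labels, the item count, and for images the row and column counts, followed by unsigned bytes), the raw data can be decoded with the standard library alone. The function names are hypothetical.

```python
import struct


def read_idx_labels(data: bytes):
    """Decode an IDX1 label file: magic 2049, count, then one byte per label."""
    magic, count = struct.unpack(">II", data[:8])
    if magic != 2049:
        raise ValueError(f"not an IDX label file (magic={magic})")
    return list(data[8:8 + count])


def read_idx_images(data: bytes):
    """Decode an IDX3 image file into row-major pixel lists (values 0-255)."""
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != 2051:
        raise ValueError(f"not an IDX image file (magic={magic})")
    size = rows * cols
    pixels = data[16:16 + count * size]
    images = [list(pixels[i * size:(i + 1) * size]) for i in range(count)]
    return rows, cols, images
```

The `data` argument would come from something like `open(path, "rb").read()`. Each pixel list could then be turned into a PIL image with `Image.frombytes("L", (cols, rows), bytes(pixels))` and saved as a PNG file.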

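1. Importing dependencies

`draw_tool` is a plotting library I wrote myself for drawing the loss and accuracy curves; `device` uses the GTX 1050 Ti graphics card in my computer.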

```python
import torch
import torch.nn.functional as F
import torch.optim as optim
from torch import nn
from torch.utils.data import Dataset, DataLoader
from torchsummary import summary  # optional: prints a per-layer summary of the model
from torchvision import transforms
from PIL import Image

import draw_tool  # the author's own plotting helper (not included in the post)

root = "F:/pycharm/dataset/mnist/MNIST/"

# Use the first CUDA GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

draw = draw_tool.draw_tool()
```
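Since `draw_tool` is not published with the post, here is a hypothetical stand-in that exposes the same method names the script calls (`new_data`, `draw`, `show`), for readers who want to run the code without it. The meaning of the fourth argument the script passes to `new_data` (the value 2) is unknown, so this sketch simply ignores it.

```python
class DrawTool:
    """Hypothetical stand-in for the author's draw_tool.draw_tool class.

    Records per-epoch loss, train accuracy and test accuracy, prints the
    latest values on draw(), and plots the full history on show().
    """

    def __init__(self):
        self.loss, self.train_acc, self.test_acc = [], [], []

    def new_data(self, loss, train_acc, test_acc, *unused):
        # The original call passes an extra argument of unknown meaning; ignored here.
        self.loss.append(loss)
        self.train_acc.append(train_acc)
        self.test_acc.append(test_acc)

    def draw(self):
        # Print the latest epoch instead of refreshing a live plot.
        print(f"epoch {len(self.loss)}: loss={self.loss[-1]:.4f} "
              f"train_acc={self.train_acc[-1]:.2f}% test_acc={self.test_acc[-1]:.2f}%")

    def show(self):
        import matplotlib.pyplot as plt  # imported lazily so recording works without matplotlib
        epochs = range(1, len(self.loss) + 1)
        _, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))
        ax_loss.plot(epochs, self.loss)
        ax_loss.set_xlabel("epoch")
        ax_loss.set_ylabel("average loss")
        ax_acc.plot(epochs, self.train_acc, label="train acc")
        ax_acc.plot(epochs, self.test_acc, label="test acc")
        ax_acc.set_xlabel("epoch")
        ax_acc.set_ylabel("accuracy (%)")
        ax_acc.legend()
        plt.show()
```

With this class, `draw = DrawTool()` would replace `draw = draw_tool.draw_tool()`, and the rest of the script stays unchanged.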

2. Loading and labelling the dataset

```python
# Load an image as single-channel grayscale ('L') to match conv1's 1 input channel
def default_loader(path):
    return Image.open(path).convert('L')


# Dataset built from an index file with one "<image path> <label>" pair per line
class MyDataset(Dataset):
    def __init__(self, txt, transform=None, target_transform=None, loader=default_loader):
        imgs = []
        with open(txt, 'r') as fh:
            for line in fh:
                words = line.split()
                if not words:  # skip blank lines
                    continue
                imgs.append((words[0], int(words[1])))
        self.imgs = imgs
        self.transform = transform
        self.target_transform = target_transform
        self.loader = loader

    def __getitem__(self, index):
        fn, label = self.imgs[index]
        img = self.loader(fn)
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            label = self.target_transform(label)
        return img, label

    def __len__(self):
        return len(self.imgs)


# Convert to a tensor in [0, 1], then normalize with the commonly used MNIST mean/std
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,)),
])

train_data = MyDataset(txt=root + 'rawtrain.txt', transform=transform)
test_data = MyDataset(txt=root + 'rawtest.txt', transform=transform)

train_loader = DataLoader(dataset=train_data, batch_size=31, shuffle=True)
test_loader = DataLoader(dataset=test_data, batch_size=31, shuffle=False)
```
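`MyDataset` reads a text index file in which each line holds an image path and an integer label separated by whitespace. The post does not show how `rawtrain.txt` and `rawtest.txt` were produced. Assuming a hypothetical layout in which each split keeps its images in one sub-directory per digit (for example `train/7/00042.png`), such a file could be generated like this:

```python
from pathlib import Path


def write_index(image_dir, index_path, pattern="*.png"):
    """Write '<image path> <label>' lines for every image under image_dir.

    Assumes the hypothetical layout image_dir/<label>/<file>.png, where the
    sub-directory name is the integer class label. Paths must not contain
    spaces, because MyDataset splits each line on whitespace.
    Returns the number of lines written.
    """
    lines = []
    for label_dir in sorted(Path(image_dir).iterdir()):
        if not label_dir.is_dir():
            continue
        label = int(label_dir.name)
        for img in sorted(label_dir.glob(pattern)):
            lines.append(f"{img.as_posix()} {label}")
    Path(index_path).write_text("".join(line + "\n" for line in lines))
    return len(lines)
```

Under that assumed layout, `write_index(root + 'train', root + 'rawtrain.txt')` would produce the training index.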

3. Training setup

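```python
# Create the model and move it to the GPU (if available) before building the optimizer
model = LeNet5().to(device)

# CrossEntropyLoss applies log-softmax internally, so the model outputs raw logits
criterion = torch.nn.CrossEntropyLoss()

# Stochastic gradient descent with learning rate 0.01 and momentum 0.5
# (no L2 regularization is applied; that would require weight_decay=...)
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
```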
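To show what `lr` and `momentum` do, here is a pure-Python sketch of the update rule described in the PyTorch `optim.SGD` documentation, for the default `dampening=0` and `nesterov=False`, applied to plain lists of scalars. The function name is illustrative; the `weight_decay` argument shows where an L2 term would enter if it were used.

```python
def sgd_momentum_step(params, grads, buffers, lr=0.01, momentum=0.5, weight_decay=0.0):
    """One SGD step with momentum (dampening=0, no Nesterov), elementwise.

    buffers holds the momentum buffer per parameter (None before the first step).
    Returns (new_params, new_buffers).
    """
    new_params, new_buffers = [], []
    for p, g, b in zip(params, grads, buffers):
        g = g + weight_decay * p                  # L2 regularization term (off by default)
        b = g if b is None else momentum * b + g  # first step: buffer = gradient
        new_params.append(p - lr * b)
        new_buffers.append(b)
    return new_params, new_buffers
```

With a constant gradient g, the buffer approaches g / (1 - momentum), so `momentum=0.5` roughly doubles the effective step size compared with plain SGD.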

4. Training

After every training epoch, the average loss, the training accuracy and the test accuracy are computed and plotted.

```python
def train(epoch):
    model.train()
    running_loss = 0.0
    num_correct = 0
    total = 0
    for batch_idx, (inputs, target) in enumerate(train_loader):
        inputs = inputs.to(device)
        target = target.to(device)
        optimizer.zero_grad()

        # forward + backward + update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        predicted = outputs.argmax(dim=1)
        total += target.size(0)
        num_correct += (predicted == target).sum().item()

    # Average of the per-batch mean losses over this epoch
    running_loss /= len(train_loader)
    print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss))

    train_acc = 100 * num_correct / total
    print('Accuracy on train set: %.2f %%' % train_acc)
    test_acc = test()

    # Record and plot this epoch's loss, train accuracy and test accuracy
    draw.new_data(running_loss, train_acc, test_acc, 2)
    draw.draw()


def test():
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in test_loader:
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            predicted = outputs.argmax(dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    test_acc = 100 * correct / total
    print('Accuracy on test set: %.2f %%' % test_acc)
    return test_acc
```
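`train` reports the mean of the per-batch mean losses (`running_loss / len(train_loader)`). Assuming the standard 60,000-image MNIST training split, `batch_size=31` gives 1,935 full batches plus a final batch of 15 images, so this differs slightly from a true per-sample average. The pure-Python sketch below (the helper name is hypothetical) computes both, together with the accuracy.

```python
def epoch_metrics(batches):
    """Aggregate per-batch results into epoch-level numbers.

    batches: iterable of (mean_batch_loss, num_correct, batch_size).
    Returns (loss averaged over batches, loss averaged over samples, accuracy %).
    """
    n_batches = 0
    loss_sum_batches = 0.0
    loss_sum_samples = 0.0
    correct = 0
    total = 0
    for loss, n_correct, size in batches:
        n_batches += 1
        loss_sum_batches += loss
        loss_sum_samples += loss * size  # undo the per-batch mean
        correct += n_correct
        total += size
    return (loss_sum_batches / n_batches,
            loss_sum_samples / total,
            100.0 * correct / total)
```

With about 1,936 batches the difference is tiny, so the author's simpler average is fine for plotting; the per-sample version only matters when the last batch is large relative to the epoch.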

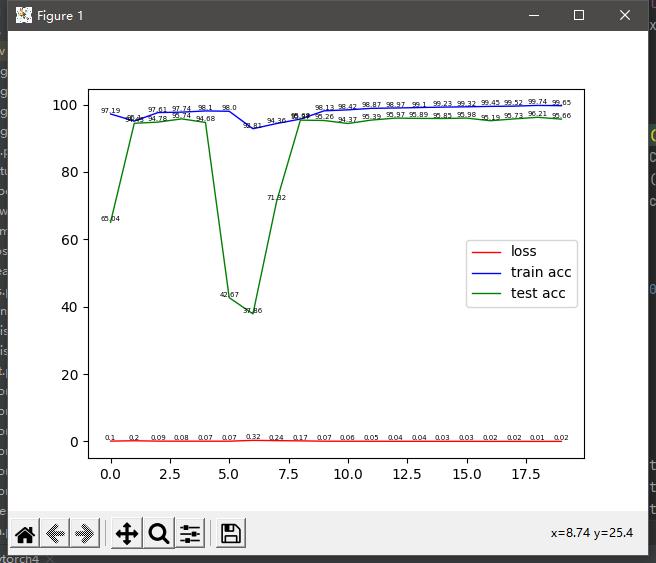

5. Results

```python
if __name__ == '__main__':
    for epoch in range(20):
        train(epoch)
    torch.save(model.state_dict(), "minist_last.pth")
    draw.show()
```

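The plots show that as training proceeds the loss keeps falling, and both train acc and test acc gradually converge, ending at train acc = 97% and test acc = 94%. The curves are still improving at the end, however, which suggests that 20 epochs were not quite enough.

I therefore kept the trained parameters and continued training from them to see how the results developed.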

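During the continued training the test accuracy suddenly dropped at one point, and I do not yet know why. Even so, the best accuracy reached 96.21%, which is a reasonably good result.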
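Because test accuracy can fall during further training, a common practice is to keep the checkpoint with the best accuracy rather than only the last one (the script above saves `minist_last.pth`). The pure-Python sketch below (class and attribute names are hypothetical) tracks the best epoch and adds simple patience-based early stopping. Strictly speaking, this selection should use a validation split held out from the training data, because choosing the epoch by test accuracy makes the reported test number optimistic. Also note that `model.state_dict()` does not include the optimizer's momentum buffers, so resuming from it restarts momentum from zero unless `optimizer.state_dict()` is saved as well.

```python
class BestTracker:
    """Track the best accuracy seen so far and signal early stopping."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best_acc = float("-inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def update(self, epoch, acc):
        """Record one epoch; return True if this epoch is a new best."""
        if acc > self.best_acc:
            self.best_acc, self.best_epoch = acc, epoch
            self.epochs_without_improvement = 0
            return True
        self.epochs_without_improvement += 1
        return False

    @property
    def should_stop(self):
        """True once `patience` epochs have passed without improvement."""
        return self.epochs_without_improvement >= self.patience
```

In the training loop, one would call `tracker.update(epoch, test_acc)` after each epoch, save `model.state_dict()` to a separate "best" file whenever it returns True, and break out of the loop once `tracker.should_stop` is true. This requires `train` to return the test accuracy, which the original function does not do.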
