Spark环境搭建(CentOS7)

Posted 莫问今朝

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Spark环境搭建(CentOS7)相关的知识,希望对你有一定的参考价值。

1. 首先要安装java8,参考

【Linux】Linux服务器(centos7)环境搭建java/python3/nginx

2. 然后安装scala

首先下载scala, 解压,然后

vim /etc/profile

在文件末尾添加, 把路径换成自己的解压路径

export PATH=$PATH:/usr/local/scala-2.12.6/bin

然后加载新的环境变量并检查是否安装成功

source /etc/profile [root@localhost local]# scala -version Scala code runner version 2.12.6 -- Copyright 2002-2018, LAMP/EPFL and Lightbend, Inc. [root@localhost local]#

3.下载spark,解压

在刚才那个环境变量配置文件 /etc/profile 中添加

export SPARK_HOME=/usr/local/spark-2.3.1-bin-hadoop2.7 export PATH=$SPARK_HOME/bin:$PATH

然后source加载, 到安装目录的bin目录下启动

[root@localhost local]# cd /usr/local/spark-2.3.1-bin-hadoop2.7/bin [root@localhost bin]# ./spark-shell 2018-09-13 21:40:51 WARN Utils:66 - Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 192.168.0.150 instead (on interface ens33) 2018-09-13 21:40:51 WARN Utils:66 - Set SPARK_LOCAL_IP if you need to bind to another address 2018-09-13 21:40:51 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 2018-09-13 21:40:58 WARN Utils:66 - Service \'SparkUI\' could not bind on port 4040. Attempting port 4041. Spark context Web UI available at http://192.168.0.150:4041 Spark context available as \'sc\' (master = local[*], app id = local-1536846059114). Spark session available as \'spark\'. Welcome to ____ __ / __/__ ___ _____/ /__ _\\ \\/ _ \\/ _ `/ __/ \'_/ /___/ .__/\\_,_/_/ /_/\\_\\ version 2.3.1 /_/ Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181) Type in expressions to have them evaluated. Type :help for more information. scala>

4. 克隆3个虚拟机,并把4个虚拟机的ip固定,参考:【Linux】CentOS操作和问题汇总

这样我们一共就有4个虚拟机用于搭建集群

5. 修改主机名, 查看主机名

[root@localhost ~]# hostname localhost.localdomain

或者

[root@localhost ~]# hostnamectl Static hostname: localhost.localdomain Icon name: computer-vm Chassis: vm Machine ID: e44f84b669ba4711b250a7cd48d7c30f Boot ID: 7eeae3e7a7d549ccb2480523f0b887b8 Virtualization: vmware Operating System: CentOS Linux 7 (Core) CPE OS Name: cpe:/o:centos:centos:7 Kernel: Linux 3.10.0-862.el7.x86_64 Architecture: x86-64

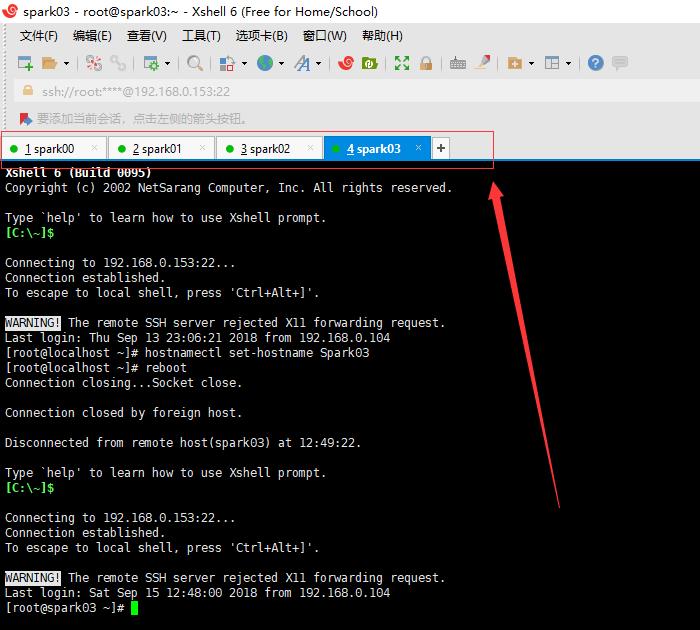

修改主机名并重启虚拟机

[root@localhost ~]# hostnamectl set-hostname Spark00 [root@localhost ~]# reboot

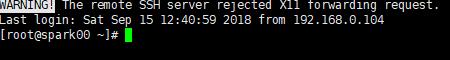

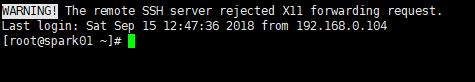

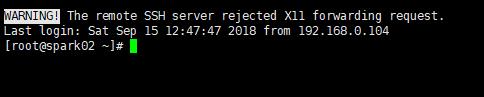

重连后 @localhost变成了 @Spark00

Last login: Sat Sep 15 12:05:22 2018 from 192.168.0.104 [root@spark00 ~]#

同样的方法把另外三台分别改称spark01, spark02, spark03, 如下

XShell命名改成一样的便于管理

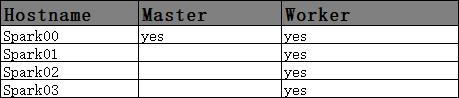

集群角色安排

以上是关于Spark环境搭建(CentOS7)的主要内容,如果未能解决你的问题,请参考以下文章