Adding nginx logs to Logstash

Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers adding nginx logs to Logstash, and we hope it is of some use to you.

Pick the category that matches your log format. Without further ado, here are the screenshots:

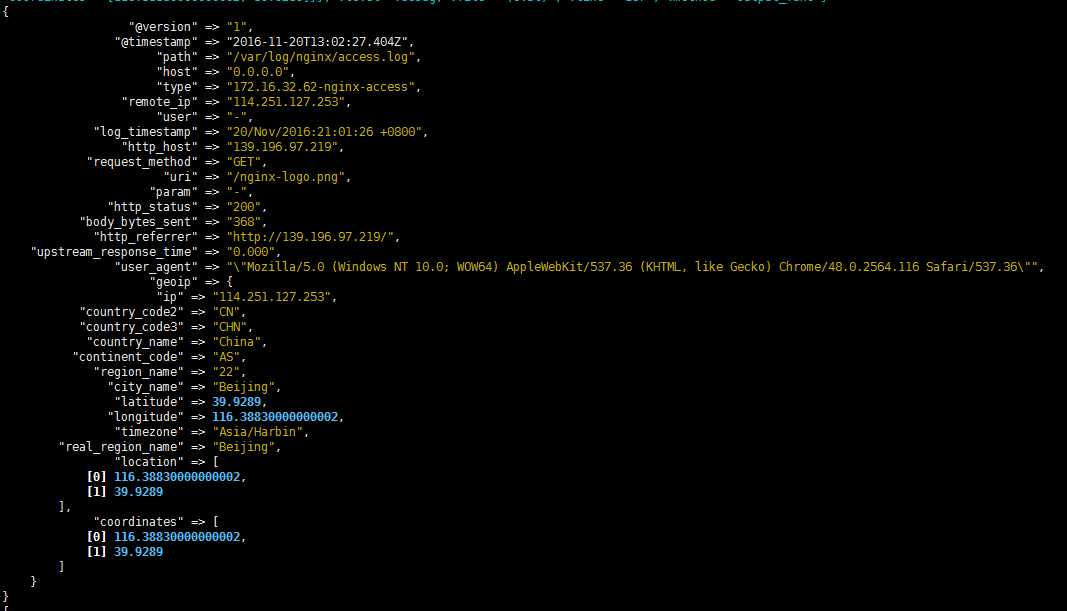

Type 1 (the first image):

2. The nginx log format that goes with this image:

    log_format main '$remote_addr - $remote_user [$time_local] $http_host $request_method "$uri" "$query_string" '
                    '$status $body_bytes_sent "$http_referer" $upstream_status $upstream_addr $request_time $upstream_response_time '
                    '"$http_user_agent" "$http_cdn_src_ip" "$http_x_forwarded_for"';

3. Where do the regexes go?

In the directory where Logstash was unpacked, create a file named patterns/nginx with the following content:

    URIPARM1 [A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]]*
    URIPATH1 (?:/[A-Za-z0-9$.+!*'(){},~:;=@#%&_\- ]*)+
    URI1 (%{URIPROTO}://)?(?:%{USER}(?::[^@]*)?@)?(?:%{URIHOST})?(?:%{URIPATHPARAM})?
    HOSTPORT %{IPORHOST}:%{POSINT}
    NGINXACCESS %{IPORHOST:remote_ip} - (%{USERNAME:user}|-) \[%{HTTPDATE:log_timestamp}\] %{HOSTNAME:http_host} %{WORD:request_method} \"%{URIPATH1:uri}\" \"%{URIPARM1:param}\" %{BASE10NUM:http_status} (?:%{BASE10NUM:body_bytes_sent}|-) \"(?:%{URI1:http_referrer}|-)\" (%{BASE10NUM:upstream_status}|-) (?:%{HOSTPORT:upstream_addr}|-) (%{BASE16FLOAT:request_time}|-) (%{BASE16FLOAT:upstream_response_time}|-) (?:%{QUOTEDSTRING:user_agent}|-) \"(%{IPV4:client_ip}|-)\" \"(%{DATA:x_forword_for}|-)\"
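
Roughly speaking, grok treats each line of this file as `NAME regex`, expands every `%{NAME}` or `%{NAME:field}` reference recursively, and turns `:field` into a named capture. That is how HOSTPORT can reuse IPORHOST and POSINT, and how NGINXACCESS can reuse URIPATH1, URIPARM1 and URI1. The Python sketch below imitates that expansion for a few patterns. It only illustrates the idea and is not Logstash's implementation: the IPORHOST and POSINT regexes are simplified stand-ins for the built-in ones, `load_patterns` and `expand` are hypothetical helper names, and grok's optional type suffix (as in `%{NUMBER:bytes:int}`) is not handled.

```python
import re

# Lines in the same "NAME regex" format as patterns/nginx.
# IPORHOST and POSINT are SIMPLIFIED stand-ins, not the real built-in grok definitions.
PATTERN_FILE = r"""
IPORHOST [A-Za-z0-9._-]+
POSINT [1-9][0-9]*
HOSTPORT %{IPORHOST}:%{POSINT}
"""

# Matches %{NAME} or %{NAME:field}; a third ":type" part is not supported here.
REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def load_patterns(text: str) -> dict:
    """Parse 'NAME regex' lines, skipping blank lines and # comments."""
    patterns = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, regex = line.split(" ", 1)
        patterns[name] = regex
    return patterns


def expand(regex: str, patterns: dict, depth: int = 0) -> str:
    """Recursively replace %{NAME} / %{NAME:field} references with Python regex groups."""
    if depth > 20:
        raise ValueError("pattern nesting too deep (recursive definition?)")

    def substitute(m):
        name, field = m.group(1), m.group(2)
        inner = expand(patterns[name], patterns, depth + 1)
        # %{NAME:field} becomes a named capture, %{NAME} a non-capturing group
        return f"(?P<{field}>{inner})" if field else f"(?:{inner})"

    return REF.sub(substitute, regex)


if __name__ == "__main__":
    pats = load_patterns(PATTERN_FILE)
    regex = expand("%{HOSTPORT:upstream_addr}", pats)
    print(regex)  # (?P<upstream_addr>(?:[A-Za-z0-9._-]+):(?:[1-9][0-9]*))
    print(re.fullmatch(regex, "10.0.0.5:8080").group("upstream_addr"))  # 10.0.0.5:8080
```

Printing the expanded regex for a field is a quick way to see what a composed pattern actually matches before debugging a `_grokparsefailure`.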

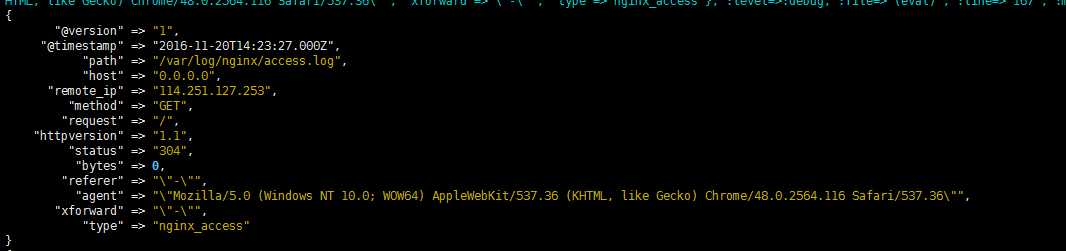

Type 2, nginx's default log format:

1. Log format

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

2. patterns/nginx

    WZ ([^ ]*)
    NGINXACCESS %{IP:remote_ip} \- \- \[%{HTTPDATE:timestamp}\] "%{WORD:method} %{WZ:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}

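3. A short Logstash config. Its field names (remote_ip, timestamp, status, bytes) are the ones produced by the type-2 pattern: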

    input {
      file {
        path => [ "/var/log/nginx/access.log" ]
        start_position => "beginning"   # read a file from the start the first time it is seen
        ignore_older => 0
      }
    }

    filter {
      grok {
        # directory holding the patterns/nginx file; adjust to where Logstash is unpacked
        patterns_dir => [ "./patterns" ]
        match => { "message" => "%{NGINXACCESS}" }
      }
      geoip {
        source => "remote_ip"
        target => "geoip"
        database => "/etc/logstash/GeoLiteCity.dat"
        # collect [longitude, latitude] into one field for map visualizations
        add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
        add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
      }
      mutate {
        convert => [ "[geoip][coordinates]", "float" ]
        convert => [ "status", "integer" ]
        convert => [ "bytes", "integer" ]
        replace => { "type" => "nginx_access" }
        remove_field => "message"
      }
      date {
        # use the nginx request time as the event's @timestamp
        match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
      mutate {
        remove_field => "timestamp"
      }
    }

    output {
      elasticsearch {
        hosts => ["elk01:9200","elk02:9200","elk03:9200"]
        index => "logstash-nginx-access-%{+YYYY.MM.dd}"
      }
      stdout { codec => rubydebug }   # only needed while debugging
    }
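
The type-2 filter chain can be modelled in a few lines of Python, which is handy for checking sample lines before pointing Logstash at them. This is a sketch under stated assumptions, not Logstash code: the `IP`, `HTTPDATE` and `QS` regexes are simplified stand-ins for the built-in grok patterns, the helpers `grok`, `post_process` and `index_name` are hypothetical, and the geoip coordinates are made-up values. It also shows two behaviours worth knowing. The literal `\- \-` in the type-2 pattern rejects lines with a non-empty `$remote_user`, and `%{+YYYY.MM.dd}` is formatted from `@timestamp` in UTC, so a request logged at 01:30 +0800 lands in the previous day's index.

```python
import re
from datetime import datetime, timezone

# Simplified stand-ins for the grok pieces in the type-2 NGINXACCESS pattern.
# They approximate, but are NOT identical to, the built-in grok definitions.
IP = r"[0-9A-Fa-f:.]+"          # ~ %{IP}
HTTPDATE = r"[^\]]+"            # ~ %{HTTPDATE}
QS = r'"(?:[^"\\]|\\.)*"'       # ~ %{QS}; the capture keeps the quotes, as grok does
WZ = r"[^ ]*"                   # WZ from patterns/nginx

NGINXACCESS = re.compile(
    rf"(?P<remote_ip>{IP}) - - \[(?P<timestamp>{HTTPDATE})\] "
    rf'"(?P<method>\w+) (?P<request>{WZ}) HTTP/(?P<httpversion>[0-9.]+)" '
    rf"(?P<status>\d+) (?P<bytes>\d+) (?P<referer>{QS}) (?P<agent>{QS}) (?P<xforward>{QS})"
)


def grok(message: str):
    """grok step: return the captured fields, or None if the line does not match."""
    m = NGINXACCESS.match(message)
    return m.groupdict() if m else None


def post_process(event: dict) -> dict:
    """date + mutate steps, applied to a copy of the event."""
    out = dict(event)
    # date { match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"] }, then drop "timestamp"
    ts = datetime.strptime(out.pop("timestamp"), "%d/%b/%Y:%H:%M:%S %z")
    out["@timestamp"] = ts.astimezone(timezone.utc)
    # convert the numeric type-2 fields to integers
    out["status"] = int(out["status"])
    out["bytes"] = int(out["bytes"])
    # [geoip][coordinates] as [longitude, latitude] floats, if geoip data is present
    if "geoip" in out:
        geo = dict(out["geoip"])
        geo["coordinates"] = [float(geo["longitude"]), float(geo["latitude"])]
        out["geoip"] = geo
    out["type"] = "nginx_access"
    out.pop("message", None)
    return out


def index_name(event: dict) -> str:
    """Model of logstash-nginx-access-%{+YYYY.MM.dd}: the date comes from @timestamp in UTC."""
    return "logstash-nginx-access-" + event["@timestamp"].strftime("%Y.%m.%d")


if __name__ == "__main__":
    line = ('192.0.2.10 - - [11/Oct/2023:01:30:00 +0800] '
            '"GET /index.html?x=1 HTTP/1.1" 200 612 "-" "Mozilla/5.0" "-"')
    event = {"message": line, **grok(line)}
    # Hypothetical geoip output; real values come from the GeoIP database.
    event["geoip"] = {"longitude": 116.39, "latitude": 39.91}
    done = post_process(event)
    print(done["status"], done["request"], index_name(done))
    # prints: 200 /index.html?x=1 logstash-nginx-access-2023.10.10
```

Note that `request` holds only the path and query string, because WZ stops at the first space.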

Both setups above have been tested.
