Uploading AWS S3 Log Files to ELK via a Server


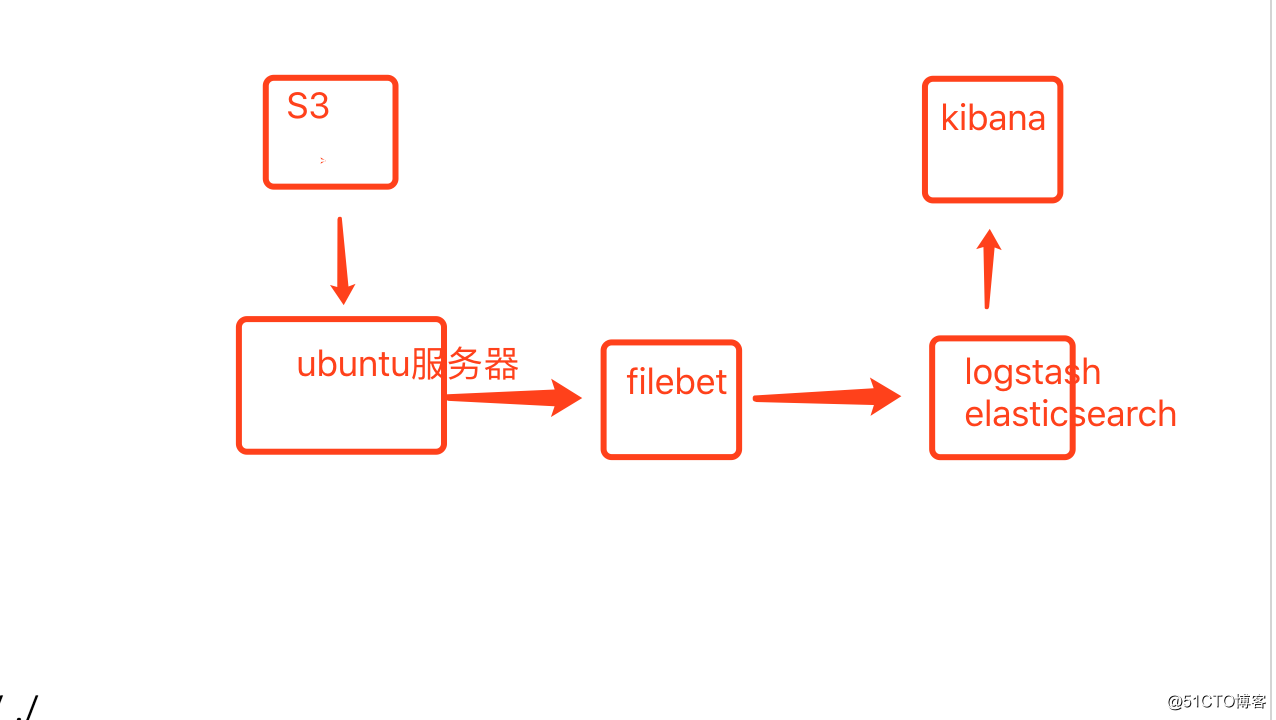

Viewing the large volume of logs produced by S3 in ELK

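First, the overall approach

Use the s3cmd command to sync the logs down from S3, write them into a single file on the server, and then display that file through ELK.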

1. Install the s3cmd command

Installing s3cmd and basic usage:

Reference documents

https://www.cnblogs.com/xd502djj/p/3604783.html
https://github.com/s3tools/s3cmd/releases

First download the s3cmd package from GitHub

mkdir -p /home/tools/ && cd /home/tools/
wget https://github.com/s3tools/s3cmd/releases/download/v2.0.1/s3cmd-2.0.1.tar.gz
tar xf s3cmd-2.0.1.tar.gz
mv s3cmd-2.0.1 /usr/local/
mv /usr/local/s3cmd-2.0.1 /usr/local/s3cmd
ln -s /usr/local/s3cmd/s3cmd /usr/bin/s3cmd

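Once it is installed, run s3cmd --configure to set the keys.

The important entries are the access key and the secret key. When configuration finishes, it generates the config file shown below.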

cat /root/.s3cfg
[default]
access_key = AKIAI4Q3PTOQ5xxxxxxx    <- AWS S3 access key (required)
access_token =
add_encoding_exts =
add_headers =
bucket_location = US
ca_certs_file =
cache_file =
check_ssl_certificate = True
check_ssl_hostname = True
cloudfront_host = cloudfront.amazonaws.com
default_mime_type = binary/octet-stream
delay_updates = False
delete_after = False
delete_after_fetch = False
delete_removed = False
dry_run = False
enable_multipart = True
encrypt = False
expiry_date =
expiry_days =
expiry_prefix =
follow_symlinks = False
force = False
get_continue = False
gpg_command = /usr/bin/gpg
gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_passphrase = aviagames
guess_mime_type = True
host_base = s3.amazonaws.com
host_bucket = %(bucket)s.s3.amazonaws.com
human_readable_sizes = False
invalidate_default_index_on_cf = False
invalidate_default_index_root_on_cf = True
invalidate_on_cf = False
kms_key =
limit = -1
limitrate = 0
list_md5 = False
log_target_prefix =
long_listing = False
max_delete = -1
mime_type =
multipart_chunk_size_mb = 15
multipart_max_chunks = 10000
preserve_attrs = True
progress_meter = True
proxy_host =
proxy_port = 0
put_continue = False
recursive = False
recv_chunk = 65536
reduced_redundancy = False
requester_pays = False
restore_days = 1
restore_priority = Standard
secret_key = 0uoniJrn9qQhAnxxxxxxCZxxxxxxxxxxxx    <- AWS S3 secret key (required)
send_chunk = 65536
server_side_encryption = False
signature_v2 = False
signurl_use_https = False
simpledb_host = sdb.amazonaws.com
skip_existing = False
socket_timeout = 300
stats = False
stop_on_error = False
storage_class =
urlencoding_mode = normal
use_http_expect = False
use_https = False
use_mime_magic = True
verbosity = WARNING
website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/
website_error =
website_index = index.html
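Only the two entries marked as required in the file above are specific to your account. The sketch below writes a config containing just those two keys and restricts its permissions. It assumes s3cmd falls back to its built-in defaults for every setting missing from the file, which is my understanding but worth checking against your version. The helper name write_min_s3cfg and the placeholder key values are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper (not part of s3cmd): write a minimal config file that
# holds only the two required keys.
# $1 = target path, $2 = access key, $3 = secret key
write_min_s3cfg() {
    local path=$1 access=$2 secret=$3
    printf '[default]\naccess_key = %s\nsecret_key = %s\n' "$access" "$secret" > "$path"
    chmod 600 "$path"   # the file holds credentials, so keep it owner-only
}

# Demo with placeholder values, written to a scratch file rather than ~/.s3cfg.
demo_cfg=$(mktemp)
write_min_s3cfg "$demo_cfg" "AKIAxxxxxxxxxxxxxxxx" "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
cat "$demo_cfg"
rm -f "$demo_cfg"
```

To try it for real, pass /root/.s3cfg (or the path given to s3cmd with -c) as the first argument, and back up any existing file first.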

2. With s3cmd installed, write the script

#!/bin/bash

# Enter the S3 sync directory

mkdir /server/s3dir/logs/ -p && cd /server/s3dir/logs/

# Every 5 minutes, write the S3 log listing to S3.log

#while true

#do

/usr/bin/s3cmd ls s3://bigbearsdk/logs/ >S3.log

# Run the sync command so the logs on the server match those in S3

/usr/bin/s3cmd sync --skip-existing s3://bigbearsdk/logs/ ./

#done

# Sort today's log entries and write their file names to date.log

grep $(date +%F) S3.log |sort -nk1,2 |awk -F [/] '{print $NF}' > date.log

sed -i 's#\_#\\_#g' date.log

sed -i 's#<#\\\<#g' date.log

sed -i 's#\ #\\ #g' date.log

sed -i 's#>#\\\>#g' date.log

##[ -f ELK.log ] &&

#{

# cat ELK.log >> ELK_$(date +%F).log

# echo > ELK.log

# find /home/tools/ -name ELK*.log -mtime +7 |xargs rm -f

#}

# Append the contents of each log file to the combined log that ELK reads

while read line

do

echo "$line"|sed 's#(#\\\(#g'|sed 's#)#\\\)#g'| sed 's#\_#\\_#g'|sed 's#<#\\\<#g'|sed 's#>#\\\>#g'|sed 's#\ #\\ #g' >while.log

head -1 while.log |xargs cat >> /server/s3dir/s3elk.log

done < date.log

With this, the contents of all the S3 logs end up in the single file s3elk.log, which ELK then monitors.
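The last two steps of the script (picking one day's file names out of the listing, then concatenating those files) can also be sketched without the sed escaping. The escaping is only needed because xargs splits its input on whitespace and interprets quotes and backslashes; reading names with `IFS= read -r` and quoting the variable sidesteps that. This is a sketch under assumptions, not the author's script: the function names and the sample data are hypothetical, and the listing is assumed to have the usual `s3cmd ls` layout of date, time, size, and URI.

```shell
#!/bin/bash
# Sketch only: function names and sample data below are hypothetical.

# Print the file names (last path component) of the listing entries that
# mention one day, oldest first.
# $1 = date as YYYY-MM-DD, $2 = file holding `s3cmd ls` output
names_for_day() {
    grep -F -- "$1" "$2" | LC_ALL=C sort -k1,2 | awk -F/ '{print $NF}'
}

# Append each named file to one output file. Names are read literally from
# stdin, so spaces, parentheses, < and > need no escaping.
# $1 = directory with the synced files, $2 = output file
append_named_files() {
    local dir=$1 out=$2 name
    while IFS= read -r name; do
        [ -n "$name" ] || continue            # ignore blank lines
        if [ -f "$dir/$name" ]; then
            cat -- "$dir/$name" >> "$out"
        else
            echo "skipped (not found): $name" >&2
        fi
    done
}

# Demo on made-up data in a scratch directory.
work=$(mktemp -d)
printf 'first\n'  > "$work/2018-06-02-00-05 (a)"
printf 'second\n' > "$work/2018-06-02-00-10_<b>"
cat > "$work/S3.log" <<'EOF'
2018-06-02 00:10       7   s3://bigbearsdk/logs/2018-06-02-00-10_<b>
2018-06-01 23:50       9   s3://bigbearsdk/logs/2018-06-01-23-50-old
2018-06-02 00:05       6   s3://bigbearsdk/logs/2018-06-02-00-05 (a)
EOF
names_for_day 2018-06-02 "$work/S3.log" | append_named_files "$work" "$work/s3elk.log"
cat "$work/s3elk.log"
rm -rf "$work"
```

In the real setup the call would look like `names_for_day "$(date +%F)" S3.log | append_named_files /server/s3dir/logs /server/s3dir/s3elk.log`. Like the original grep, the filter matches the date anywhere in the line, so a file whose name contains today's date is also picked up.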

To be continued...

If you are interested, add me on WeChat: Dellinger_blue
