配置两个不同kerberos认证中心的集群间的互信

Posted

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了配置两个不同kerberos认证中心的集群间的互信相关的知识,希望对你有一定的参考价值。

参考技术A 两个Hadoop集群开启Kerberos验证后,集群间不能够相互访问,需要实现Kerberos之间的互信,使用Hadoop集群A的客户端访问Hadoop集群B的服务(实质上是使用Kerberos Realm A上的Ticket实现访问Realm B的服务)。先决条件:

1)两个集群(XDF.COM和HADOOP.COM)均开启Kerberos认证

2)Kerberos的REALM分别设置为XDF.COM和HADOOP.COM

步骤如下:

实现 DXDF.COM 和 HADOOP.COM 之间的跨域互信,例如使用 XDF.COM 的客户端访问 HADOOP.COM 中的服务,两个REALM需要共同拥有名为krbtgt/ HADOOP.COM@XDF.COM 的principal,两个Keys需要保证密码,version number和加密方式一致。默认情况下互信是单向的, HADOOP.COM 的客户端访问 XDF.COM 的服务,两个REALM需要有krbtgt/ XDF.COM@HADOOP.COM 的principal。

向两个集群中添加krbtgt principal

要验证两个entries具有匹配的kvno和加密type,查看命令使用getprinc <principal_name>

使用hadoop org.apache.hadoop.security.HadoopKerberosName <principal-name>来实现验证,例如:

第一种方式是配置shared hierarchy of names,这个是默认及比较简单的方式,第二种方式是在krb5.conf文件中改变capaths,复杂但是比较灵活,这里采用第二种方式。

在两个集群的节点的/etc/krb5.conf文件配置domain和realm的映射关系,例如:在XDF cluster中配置:

在HADOOP Cluster中配置:

配置成'.'是表示没有intermediate realms

为了是XDF 可以访问HADOOP的KDC,需要将HADOOP的KDC Server配置到XDF cluster中,如下,反之相同:

在domain_realm中,一般配置成'.XDF.COM'和'XDF.COM'的格式,'.'前缀保证kerberos将所有的XDF.COM的主机均映射到XDF.COM realm。但是如果集群中的主机名不是以XDF.COM为后缀的格式,那么需要在domain_realm中配置主机与realm的映射关系,例XDF.nn.local映射为XDF.COM,需要增加XDF.nn.local = XDF.COM。

重启kerberos服务

在hdfs-site.xml,设置允许的realms

在hdfs-site.xml中设置dfs.namenode.kerberos.principal.pattern为"*"

这个是客户端的匹配规则用于控制允许的认证realms,如果该参数不配置,会有下面的异常:

1)使用hdfs命令测试XDF 和HADOOP 集群间的数据访问,例如在XDF Cluster中kinit admin@XDF.CON,然后运行hdfs命令:

在HADOOP.COM中进行相同的操作

2)运行distcp程序将XDF的数据复制到HADOOP集群,命令如下:

两集群的/etc/krb5.conf完整文件内容如下:

大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败

大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败

1 前言

大家好,我是明哥!

本文是大数据问题排查系列 的 kerberos问题排查子序列博文之一,讲述大数据集群开启 kerberos 安全认证后,hive作业执行失败的根本原因,解决方法与背后的原理和机制。

以下是正文。

2 问题现象

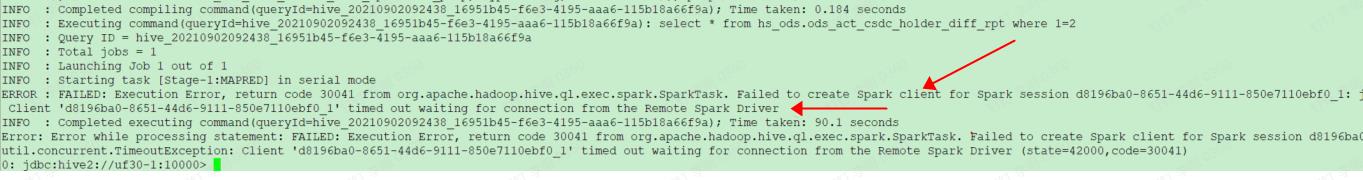

大数据集群开启 kerberos 安全认证后,HIVE ON SPARK 作业执行失败。通过客户端 beeline 提交作业,报错 spark client 创建失败,其报错信息是:

Failed to create spark client for spark session xxx: java.util.concurrent.TimeoutException: client xxx timedout waiting for connection from the remote spark driver

或者是:

Failed to create spark client for spark session xxx: java.lang.RuntimeException: spark-submit

客户端 beeline 的报错信息截图如下图所示:

3 问题分析

按照问题排查的常规思路,我们首先查看 hiveserver2 的日志,能发现核心报错信息 “Error while waiting for Remote Spark Driver to connect back to HiveServer2”,hiveserver2 的完整相关日志如下所示:

2021-09-02 11:01:29,496 ERROR org.apache.hive.spark.client.SparkClientImpl: [HiveServer2-Background-Pool: Thread-135]: Error while waiting for Remote Spark Driver to connect back to HiveServer2.

java.util.concurrent.ExecutionException: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41) ~[netty-common-4.1.17.Final.jar:4.1.17.Final]

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.access$600(SQLOperation.java:92) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:345) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_201]

at javax.security.auth.Subject.doAs(Subject.java:422) [?:1.8.0_201]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875) [hadoop-common-3.0.0-cdh6.3.2.jar:?]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:357) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_201]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_201]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_201]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_201]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_201]

Caused by: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at org.apache.hive.spark.client.SparkClientImpl$2.run(SparkClientImpl.java:495) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

... 1 more

2021-09-02 11:01:29,505 ERROR org.apache.hadoop.hive.ql.exec.spark.SparkTask: [HiveServer2-Background-Pool: Thread-135]: Failed to execute Spark task "Stage-1"

org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session f43a158c-168a-4117-8993-8f1780913715_0: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.getHiveException(SparkSessionImpl.java:286) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:135) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.access$600(SQLOperation.java:92) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:345) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.security.AccessController.doPrivileged(Native Method) ~[?以上是关于配置两个不同kerberos认证中心的集群间的互信的主要内容,如果未能解决你的问题,请参考以下文章

kafka kerberos 认证访问与非认证访问共存下的ACL问题

关于hadoop登陆kerberos时设置环境变量问题的思考