How to set up an HBase development environment with Eclipse and Maven


This article was compiled by the editors of 小常识网 (cha138.com) and covers how to set up an HBase development environment with Eclipse and Maven.

The steps are as follows:

1: Copy the HBase deployment files from the HBase cluster into a directory on the development machine (for example /app/hadoop/hbase096).

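2: In Eclipse, create a new Java project named HBase, open the project properties, choose Libraries > Add External JARs..., and select the relevant JARs under /app/hadoop/hbase096/lib. If the project is only for testing, the simplest option is to select all of the JARs.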

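3: Add a folder named conf to the HBase project and copy the cluster's configuration file hbase-site.xml into it. Then, in the project properties, choose Libraries > Add Class Folder and select the new conf folder.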

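4: Add a package named chapter12 to the HBase project, add a class named HBaseTestCase to it, then paste in the code from Chapter 12 of 《Hadoop实战》 (2nd edition) with a few modifications. The code is as follows: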

package chapter12;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTestCase {

    // Shared static configuration; HBaseConfiguration.create() loads hbase-site.xml from the classpath (the conf folder)
    static Configuration cfg = HBaseConfiguration.create();

    // Create a table through HBaseAdmin and HTableDescriptor
    public static void create(String tablename, String columnFamily) throws Exception {
        HBaseAdmin admin = new HBaseAdmin(cfg);
        try {
            if (admin.tableExists(tablename)) {
                // Table already exists: report it and leave it unchanged
                System.out.println("table Exists!");
            } else {
                HTableDescriptor tableDesc = new HTableDescriptor(tablename);
                tableDesc.addFamily(new HColumnDescriptor(columnFamily));
                admin.createTable(tableDesc);
                System.out.println("create table success!");
            }
        } finally {
            admin.close();
        }
    }

    // Add one cell to an existing table through HTable and Put
    public static void put(String tablename, String row, String columnFamily, String column, String data) throws Exception {
        HTable table = new HTable(cfg, tablename);
        try {
            Put p1 = new Put(Bytes.toBytes(row));
            p1.add(Bytes.toBytes(columnFamily), Bytes.toBytes(column), Bytes.toBytes(data));
            table.put(p1);
            System.out.println("put '" + row + "','" + columnFamily + ":" + column + "','" + data + "'");
        } finally {
            table.close();
        }
    }

    // Read one row through HTable and Get
    public static void get(String tablename, String row) throws IOException {
        HTable table = new HTable(cfg, tablename);
        try {
            Get g = new Get(Bytes.toBytes(row));
            Result result = table.get(g);
            System.out.println("Get: " + result);
        } finally {
            table.close();
        }
    }

    // Print every row of an existing table through HTable and Scan
    public static void scan(String tablename) throws Exception {
        HTable table = new HTable(cfg, tablename);
        try {
            ResultScanner rs = table.getScanner(new Scan());
            try {
                for (Result r : rs) {
                    System.out.println("Scan: " + r);
                }
            } finally {
                rs.close();
            }
        } finally {
            table.close();
        }
    }

    // Disable, then delete a table (HBase only deletes disabled tables); returns false on failure
    public static boolean delete(String tablename) throws IOException {
        HBaseAdmin admin = new HBaseAdmin(cfg);
        try {
            if (admin.tableExists(tablename)) {
                try {
                    admin.disableTable(tablename);
                    admin.deleteTable(tablename);
                } catch (Exception ex) {
                    ex.printStackTrace();
                    return false;
                }
            }
            return true;
        } finally {
            admin.close();
        }
    }

    public static void main(String[] args) {
        String tablename = "hbase_tb";
        String columnFamily = "cf";
        try {
            HBaseTestCase.create(tablename, columnFamily);
            HBaseTestCase.put(tablename, "row1", columnFamily, "cl1", "data");
            HBaseTestCase.get(tablename, "row1");
            HBaseTestCase.scan(tablename);
            /* if (HBaseTestCase.delete(tablename)) {
                System.out.println("Delete table: " + tablename + " success!");
            } */
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
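To picture what the put, get and scan calls in HBaseTestCase operate on, here is a simplified in-memory model of an HBase table in plain Java, with no HBase dependency. It is only a sketch under stated assumptions: `MiniTable` and its methods are hypothetical names, each cell keeps just its latest value (real HBase also stores timestamps and possibly several versions), and keys are compared as Java strings, which matches HBase's byte order only for ASCII keys.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, simplified model of an HBase table (no versions, no timestamps):
// row key -> ("family:qualifier" -> value), both levels kept sorted by TreeMap.
final class MiniTable {
    private final TreeMap<String, TreeMap<String, String>> rows = new TreeMap<>();

    // Roughly what Put.add(family, qualifier, value) + table.put(put) does:
    // create the row if needed and overwrite the cell's value.
    void put(String row, String family, String qualifier, String value) {
        rows.computeIfAbsent(row, k -> new TreeMap<>()).put(family + ":" + qualifier, value);
    }

    // Roughly what table.get(new Get(row)) returns: a copy of all cells in the row,
    // or an empty map if the row does not exist.
    Map<String, String> get(String row) {
        TreeMap<String, String> copy = new TreeMap<>();
        TreeMap<String, String> cells = rows.get(row);
        if (cells != null) {
            copy.putAll(cells);
        }
        return copy;
    }

    // Roughly what table.delete(new Delete(row)) does for a whole row.
    void deleteRow(String row) {
        rows.remove(row);
    }

    // Roughly what iterating a full Scan yields: row keys in sorted order.
    List<String> scanRowKeys() {
        return new ArrayList<>(rows.keySet());
    }

    public static void main(String[] args) {
        MiniTable table = new MiniTable();
        table.put("row1", "cf", "cl1", "data");
        System.out.println("Get: " + table.get("row1"));    // Get: {cf:cl1=data}
        System.out.println("Scan: " + table.scanRowKeys()); // Scan: [row1]
    }
}
```

The nested sorted maps are the point of the design: HBase stores rows sorted by key and, within a row, cells sorted by column, which is why a Scan returns rows in key order rather than insertion order.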

5: Create a run configuration and run the program. Start the HBase cluster before running it.

6: Verify the result: checking with the hbase shell shows that the table hbase_tb has been created.

Environment requirements

Eclipse version: eclipse-jee-mars-1

Operating system: Ubuntu 15.04

Hadoop:1.2.1

HBase:0.94.13

Setup process

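Start Eclipse and create a new Java project named "hbaseTest". Right-click the project root and choose "Properties" > "Java Build Path" > "Libraries" > "Add External JARs", then add hbase-0.94.13-security.jar and hbase-0.94.13-security-tests.jar from the root of the extracted HBase directory, plus every JAR in its lib subdirectory, to the project's classpath.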

Create a folder named conf under the project root and copy hbase-site.xml from the conf folder of the extracted HBase directory into it.
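HBaseConfiguration.create() loads hbase-site.xml as a classpath resource, so copying the file into conf only helps if that folder is actually on the runtime classpath; otherwise the client typically falls back to the defaults from hbase-default.xml (such as a ZooKeeper quorum of localhost). The sketch below, a hypothetical `ConfigLocator` helper that uses only the JDK, asks the class loader where, if anywhere, it finds the file. Running it from the same Eclipse launch configuration as the HBase program is an assumed way to check whether the conf folder was picked up.

```java
import java.net.URL;

// Hypothetical helper: reports where the class loader resolves a resource such as
// hbase-site.xml, i.e. whether the conf folder is really on the classpath.
final class ConfigLocator {

    // Returns the URL the loader resolves for the resource, or null if it is not visible.
    static URL locate(ClassLoader loader, String resourceName) {
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        URL url = locate(loader, "hbase-site.xml");
        if (url == null) {
            System.out.println("hbase-site.xml NOT found on the classpath");
        } else {
            System.out.println("hbase-site.xml loaded from: " + url);
        }
    }
}
```

If the printed location is not the project's conf folder (or the file is not found), the classpath setup from the previous step is the first thing to check.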

甘道夫 (Gandalf): Setting up an HBase development environment with Eclipse + Maven, with an HBaseDAO code example

Environment:

Windows 7 64-bit

Eclipse Version: Kepler Service Release 1

java version "1.7.0_40"

Step 1: Create a new Maven project in Eclipse, then edit pom.xml and update the project so that Maven downloads the JARs.

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>fulong.bigdata</groupId>
    <artifactId>myHbase</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>0.96.2-hadoop2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.2.0</version>
        </dependency>
        <dependency>
            <groupId>jdk.tools</groupId>
            <artifactId>jdk.tools</artifactId>
            <version>1.7</version>
            <scope>system</scope>
            <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
        </dependency>
    </dependencies>
</project>
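The jdk.tools entry above is a system-scoped dependency on the JDK's tools.jar, so the build only works if that file exists at the configured path. Here is a small sketch that checks it, assuming `${JAVA_HOME}` in the pom resolves to the JAVA_HOME environment variable; `ToolsJarCheck` is a hypothetical helper name. Keep in mind that tools.jar ships only with JDK 8 and earlier (it was removed in JDK 9), so this dependency fits a JDK such as the 1.7 used here, and JAVA_HOME must point at a JDK rather than a JRE.

```java
import java.io.File;

// Hypothetical check: does <JAVA_HOME>/lib/tools.jar exist, as the pom's
// <systemPath> expects? (Assumes ${JAVA_HOME} maps to the environment variable.)
final class ToolsJarCheck {

    // The file the <systemPath> element points at for a given JDK home
    static File toolsJar(String javaHome) {
        return new File(new File(javaHome, "lib"), "tools.jar");
    }

    // True only when JAVA_HOME is set and the file is really there
    static boolean hasToolsJar(String javaHome) {
        return javaHome != null && toolsJar(javaHome).isFile();
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        if (hasToolsJar(javaHome)) {
            System.out.println("OK: " + toolsJar(javaHome));
        } else {
            System.out.println("tools.jar not found; JAVA_HOME=" + javaHome);
        }
    }
}
```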

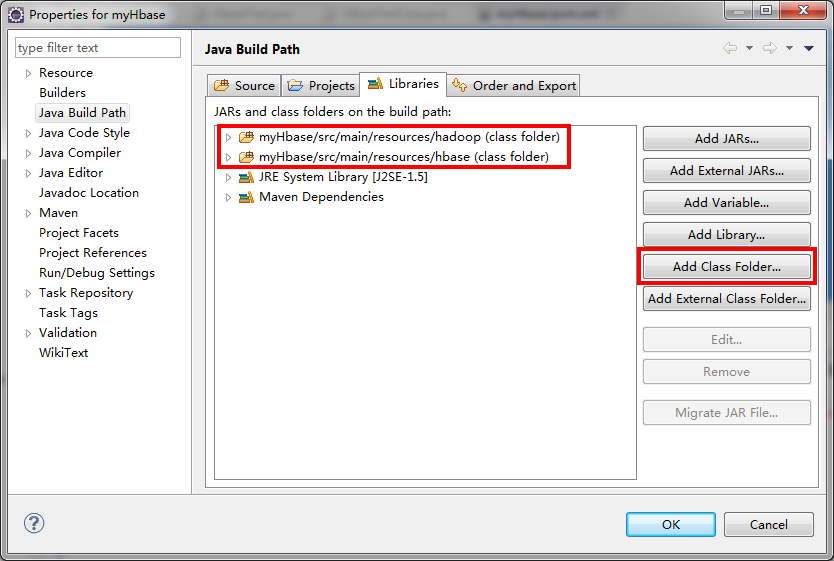

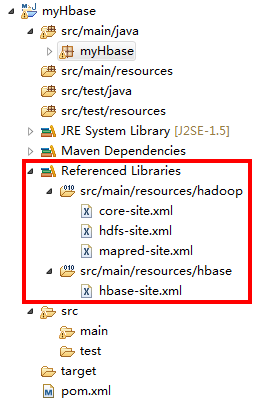

Step 2: Copy the target cluster's Hadoop and HBase configuration files into the project

This lets the project find ZooKeeper and the HBase Master.
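The HBase client locates the cluster through ZooKeeper, using properties such as hbase.zookeeper.quorum and hbase.zookeeper.property.clientPort from hbase-site.xml, so it is worth confirming what the copied files actually contain. The following JDK-only sketch parses a Hadoop-style *-site.xml (a `<configuration>` element holding `<property>` entries with `<name>` and `<value>`). `SiteXmlReader` and its default path are hypothetical, and this is not how HBase itself loads configuration; that is done by Hadoop's `Configuration` class.

```java
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical reader for Hadoop-style *-site.xml files:
// <configuration><property><name>..</name><value>..</value></property>...</configuration>
final class SiteXmlReader {

    // Returns name -> value in document order; a later duplicate name overwrites an earlier one.
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList properties = doc.getElementsByTagName("property");
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                String name = childText(property, "name");
                if (name != null) {
                    props.put(name, childText(property, "value"));
                }
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a valid *-site.xml document", e);
        }
    }

    // Trimmed text of the first <tag> element under parent, or null if absent
    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical default: the hbase folder from the project layout in this step
        String path = args.length > 0 ? args[0] : "src/main/resources/hbase/hbase-site.xml";
        String xml = new String(Files.readAllBytes(Paths.get(path)), StandardCharsets.UTF_8);
        Map<String, String> props = parse(xml);
        System.out.println("hbase.zookeeper.quorum = " + props.get("hbase.zookeeper.quorum"));
    }
}
```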

The configuration files go under these paths in the project:

/src/main/resources/hadoop

/src/main/resources/hbase

Then add these two folders to the project's classpath.

The final folder structure is as follows:

Step 3: Add the following to hbase-site.xml:

<property>
    <name>fs.hdfs.impl</name>
    <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
</property>

Step 4: Write a Java program that calls the HBase API

This code covers some of the most commonly used HBase APIs.

package myHbase;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.Filter;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseDAO {

    // Loads hbase-site.xml (and the Hadoop configuration) from the classpath
    static Configuration conf = HBaseConfiguration.create();

    /**
     * Create a table: table_name(columnFamily)
     * @param tablename
     * @param columnFamily
     * @throws Exception
     */
    public static void createTable(String tablename, String columnFamily) throws Exception {
        HBaseAdmin admin = new HBaseAdmin(conf);
        try {
            if (admin.tableExists(tablename)) {
                System.out.println("Table exists!");
            } else {
                HTableDescriptor tableDesc = new HTableDescriptor(TableName.valueOf(tablename));
                tableDesc.addFamily(new HColumnDescriptor(columnFamily));
                admin.createTable(tableDesc);
                System.out.println("create table success!");
            }
        } finally {
            admin.close();
        }
    }

    /**
     * Delete a table. Caution: dangerous, all of its data is lost!
     * @param tablename
     * @return true if the table was deleted or did not exist, false if deletion failed
     * @throws IOException
     */
    public static boolean deleteTable(String tablename) throws IOException {
        HBaseAdmin admin = new HBaseAdmin(conf);
        try {
            if (admin.tableExists(tablename)) {
                try {
                    // A table must be disabled before it can be deleted
                    admin.disableTable(tablename);
                    admin.deleteTable(tablename);
                } catch (Exception e) {
                    e.printStackTrace();
                    return false;
                }
            }
            return true;
        } finally {
            admin.close();
        }
    }

    /**
     * Put one cell into the row identified by rowKey, columnFamily and identifier
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @param rowKey
     * @param columnFamily
     * @param identifier column qualifier
     * @param data
     * @throws Exception
     */
    public static void putCell(HTable table, String rowKey, String columnFamily, String identifier, String data) throws Exception {
        Put p1 = new Put(Bytes.toBytes(rowKey));
        p1.add(Bytes.toBytes(columnFamily), Bytes.toBytes(identifier), Bytes.toBytes(data));
        table.put(p1);
        System.out.println("put '" + rowKey + "', '" + columnFamily + ":" + identifier + "', '" + data + "'");
    }

    /**
     * Get the row identified by rowKey
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @param rowKey
     * @throws Exception
     */
    public static Result getRow(HTable table, String rowKey) throws Exception {
        Get get = new Get(Bytes.toBytes(rowKey));
        Result result = table.get(get);
        System.out.println("Get: " + result);
        return result;
    }

    /**
     * Delete the row identified by rowKey
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @param rowKey
     * @throws Exception
     */
    public static void deleteRow(HTable table, String rowKey) throws Exception {
        Delete delete = new Delete(Bytes.toBytes(rowKey));
        table.delete(delete);
        System.out.println("Delete row: " + rowKey);
    }

    /**
     * Return all rows of a table; the caller must close the returned scanner
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @throws Exception
     */
    public static ResultScanner scanAll(HTable table) throws Exception {
        Scan s = new Scan();
        return table.getScanner(s);
    }

    /**
     * Return the rows from startrow (inclusive) to endrow (exclusive); the caller must close the scanner
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @param startrow first row key, inclusive
     * @param endrow stop row key, exclusive
     * @throws Exception
     */
    public static ResultScanner scanRange(HTable table, String startrow, String endrow) throws Exception {
        Scan s = new Scan(Bytes.toBytes(startrow), Bytes.toBytes(endrow));
        return table.getScanner(s);
    }

    /**
     * Return the rows from startrow onward that pass the given filter; the caller must close the scanner
     * @param table an open table, e.g. HTable table = new HTable(conf, "tablename")
     * @param startrow first row key, inclusive
     * @param filter
     * @throws Exception
     */
    public static ResultScanner scanFilter(HTable table, String startrow, Filter filter) throws Exception {
        Scan s = new Scan(Bytes.toBytes(startrow), filter);
        return table.getScanner(s);
    }

    public static void main(String[] args) throws Exception {
        // HBaseDAO.createTable("apitable", "testcf");
        HTable table = new HTable(conf, "apitable");
        try {
            // HBaseDAO.putCell(table, "100001", "testcf", "name", "liyang");
            // HBaseDAO.putCell(table, "100003", "testcf", "name", "leon");
            // HBaseDAO.deleteRow(table, "100002");
            // HBaseDAO.getRow(table, "100003");
            // ResultScanner rs = HBaseDAO.scanRange(table, "2013-07-10*", "2013-07-11*");
            // ResultScanner rs = HBaseDAO.scanRange(table, "100001", "100003");
            ResultScanner rs = HBaseDAO.scanAll(table);
            try {
                for (Result r : rs) {
                    System.out.println("Scan: " + r);
                }
            } finally {
                rs.close();
            }
        } finally {
            table.close();
        }
        // HBaseDAO.deleteTable("apitable");
    }
}
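scanRange and the commented-out calls in main depend on how HBase orders row keys: rows are sorted by their raw bytes, and a Scan covers startRow inclusive up to stopRow exclusive. So scanRange(table, "100001", "100003") returns 100001 and 100002 but not 100003, and the `*` in "2013-07-10*" is an ordinary byte (0x2A), not a wildcard. The sketch below models that behavior in plain Java without HBase. `RowRange`, `scanPrefix` and `prefixStopRow` are hypothetical names, and the prefix trick (drop trailing 0xFF bytes, then increment the last remaining byte) is one common way to turn a row-key prefix into a stop row.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.TreeMap;

// Hypothetical model of HBase row-key range semantics: keys are compared as
// unsigned bytes, and a range scan covers [startRow, stopRow).
final class RowRange {

    // Unsigned lexicographic byte comparison, the order HBase keeps row keys in
    static int compare(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int d = (a[i] & 0xff) - (b[i] & 0xff);
            if (d != 0) {
                return d;
            }
        }
        return a.length - b.length;
    }

    private final TreeMap<byte[], String> rows = new TreeMap<>(RowRange::compare);

    private static byte[] utf8(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    void put(String rowKey) {
        rows.put(utf8(rowKey), rowKey);
    }

    // Start inclusive, stop exclusive; an empty stop row means "to the end of the table"
    List<String> scan(byte[] start, byte[] stop) {
        Collection<String> hits = stop.length == 0
                ? rows.tailMap(start, true).values()
                : rows.subMap(start, true, stop, false).values();
        return new ArrayList<>(hits);
    }

    List<String> scan(String startRow, String stopRow) {
        return scan(utf8(startRow), utf8(stopRow));
    }

    // Smallest key greater than every key that starts with prefix:
    // drop trailing 0xFF bytes, then increment the last remaining byte.
    // Returns an empty array when no such key exists (scan to the end).
    static byte[] prefixStopRow(byte[] prefix) {
        for (int i = prefix.length - 1; i >= 0; i--) {
            if (prefix[i] != (byte) 0xff) {
                byte[] stop = Arrays.copyOf(prefix, i + 1);
                stop[i]++;
                return stop;
            }
        }
        return new byte[0];
    }

    // All rows whose key starts with prefix
    List<String> scanPrefix(String prefix) {
        byte[] p = utf8(prefix);
        return scan(p, prefixStopRow(p));
    }

    public static void main(String[] args) {
        RowRange table = new RowRange();
        for (String k : new String[] {"100001", "100002", "100003"}) {
            table.put(k);
        }
        System.out.println(table.scan("100001", "100003")); // [100001, 100002]
    }
}
```

With a real table, the same stop row can be passed as the second argument of `new Scan(startRow, stopRow)` to fetch all rows for one day, for example every key beginning with 2013-07-10.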
