Web API: Chunked Upload of Large Files from an HTML5 Client

Posted by 冰封的心


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers chunked uploading of large files from an HTML5 client to Web API. We hope you find it useful.

http://www.cnblogs.com/OneDirection/articles/7285739.html

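I searched through a lot of material without finding a suitable solution. MultipartFormDataStreamProvider was not a good fit either and kept running into problems, so I abandoned it and went with the traditional approach: read each chunk from the request InputStream, save it, and merge the chunks once all of them have arrived.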

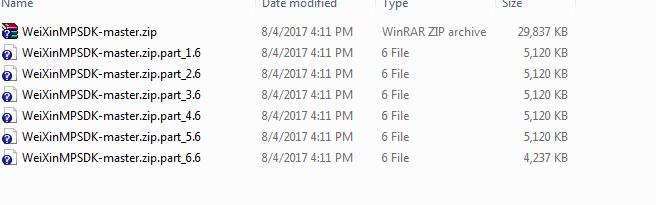

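Front-end chunking rules: chunk file names must follow a strict convention, and each request passes the total number of chunks (as part of the name) so the server can tell when every chunk has arrived and perform the merge. The merge must follow the chunk order, and the chunk files are deleted once the merge succeeds.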

```javascript
var FilePartName = file.name + ".part_" + PartCount + "." + TotalParts;
```
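To make the convention concrete, here is a small JavaScript sketch that builds a part name and parses it back into the same three tokens the server-side MergeFile method extracts (base name, part number N, total X). The helpers buildPartName and parsePartName are hypothetical, not part of the original code; the parser uses the last ".part_" occurrence so an original file name that happens to contain ".part_" still parses.

```javascript
// Hypothetical helpers illustrating the "<name>.part_<N>.<total>" convention.
const PART_TOKEN = ".part_";

// Build the name of part `partNumber` (1-based) out of `totalParts`.
function buildPartName(fileName, partNumber, totalParts) {
  return fileName + PART_TOKEN + partNumber + "." + totalParts;
}

// Split a part name back into base name, part number and total; null if malformed.
function parsePartName(partName) {
  const at = partName.lastIndexOf(PART_TOKEN);
  if (at < 0) return null;
  const [index, total] = partName.slice(at + PART_TOKEN.length).split(".");
  const partNumber = Number(index);
  const totalParts = Number(total);
  if (!Number.isInteger(partNumber) || !Number.isInteger(totalParts) ||
      partNumber < 1 || partNumber > totalParts) {
    return null;
  }
  return { baseName: partName.slice(0, at), partNumber, totalParts };
}

const partName = buildPartName("sample.zip", 2, 6);
console.log(partName);                // sample.zip.part_2.6
console.log(parsePartName(partName)); // { baseName: 'sample.zip', partNumber: 2, totalParts: 6 }
```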

Front-end JS code:

```html
<script>
    $(document).ready(function () {
        $('#btnUpload').click(function () {
            var files = $('#uploadfile')[0].files;
            for (var i = 0; i < files.length; i++) {
                UploadFile(files[i]);
            }
        });
    });

    // Send one chunk as multipart/form-data together with the order metadata
    function UploadFileChunk(Chunk, FileName) {
        var fd = new FormData();
        fd.append('file', Chunk, FileName);
        fd.append('UserName', 'ming.lu@genewiz.com');
        fd.append('BusinessLine', '1');
        fd.append('ServiceItemType', '109');
        fd.append('Comment', 'This is order comment for GA order');
        fd.append('UserId', '43F0FEDF-E9AF-4289-B71B-54807BCB8CD9');

        $.ajax({
            type: "POST",
            url: 'http://localhost:50821/api/customer/GA/SaveSangerCameraOrder',
            contentType: false, // let the browser set the multipart boundary
            processData: false, // send the FormData object as-is
            data: fd,
            success: function (data) {
                alert(data);
            },
            error: function (data) {
                alert(data.status + " : " + data.statusText + " : " + data.responseText);
            }
        });
    }

    function UploadFile(TargetFile) {
        // array that stores the chunks (Blob slices) of the file
        var FileChunk = [];
        // the file object itself that we will work with
        var file = TargetFile;
        // chunk size: MaxFileSizeMB megabytes
        var MaxFileSizeMB = 5;
        var BufferChunkSize = MaxFileSizeMB * (1024 * 1024);
        var FileStreamPos = 0;
        // end offset (exclusive) of the first chunk
        var EndPos = BufferChunkSize;
        var Size = file.size;

        // add to the FileChunk array until we get to the end of the file
        while (FileStreamPos < Size) {
            // "slice" the file from the start offset up to (not including) EndPos;
            // slice() clamps EndPos to the file size for the last chunk
            FileChunk.push(file.slice(FileStreamPos, EndPos));
            FileStreamPos = EndPos; // jump by the amount read
            EndPos = FileStreamPos + BufferChunkSize; // end offset of the next chunk
        }

        // total number of chunks ("files") we will be sending
        var TotalParts = FileChunk.length;
        var PartCount = 0;
        var chunk;

        // loop through, removing the first item from the array each time and sending it
        while ((chunk = FileChunk.shift()) !== undefined) {
            PartCount++;
            // file name convention: <name>.part_<N>.<total>
            var FilePartName = file.name + ".part_" + PartCount + "." + TotalParts;
            // send the chunk (asynchronously; requests may complete in any order)
            UploadFileChunk(chunk, FilePartName);
        }
    }
</script>
```

The MaxFileSizeMB variable sets the chunk size; the test example uses 5 MB.
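The slicing loop produces ceil(size / (MaxFileSizeMB × 1024 × 1024)) chunks, and only the last one can be shorter. The JavaScript sketch below reproduces the [start, end) offsets that loop hands to file.slice; chunkRanges is a hypothetical helper written for illustration, not part of the original page.

```javascript
// Hypothetical helper: [start, end) byte offsets produced by the slicing loop in UploadFile.
function chunkRanges(fileSize, maxFileSizeMB) {
  const chunkSize = maxFileSizeMB * 1024 * 1024;
  const ranges = [];
  for (let start = 0; start < fileSize; start += chunkSize) {
    // Blob.slice clamps `end` to the file size, so the last range is clamped here too
    ranges.push([start, Math.min(start + chunkSize, fileSize)]);
  }
  return ranges;
}

// A 12 MB file with 5 MB chunks gives 3 parts: 5 MB, 5 MB, 2 MB
const MB = 1024 * 1024;
const ranges = chunkRanges(12 * MB, 5);
console.log(ranges.length);                        // 3
console.log(ranges.map(([s, e]) => (e - s) / MB)); // [ 5, 5, 2 ]
```

An empty file yields no ranges, so the original loop would send nothing at all for it; a guard for size 0 may be worth adding.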

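chunk = FileChunk.shift() removes each chunk from the array as it is handed to UploadFileChunk, that is, before the upload request completes, not after the chunk has been uploaded successfully. The requests are asynchronous and can finish in any order, which is why the server sorts the parts by part number before merging.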
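If a chunk should leave the queue only after the server has accepted it (and the parts should arrive in order), one option is to send them one at a time with promises. This is a sketch under assumptions, not the author's code: sendChunk is a hypothetical stand-in for UploadFileChunk rewritten to return a Promise (for example the jqXHR returned by $.ajax, or fetch), and uploadSequentially and maxRetries are likewise hypothetical names.

```javascript
// Sketch: upload chunks strictly one after another (hypothetical helper).
// `sendChunk(chunk, partName)` is assumed to return a Promise that resolves
// when the server accepted the part and rejects on failure.
async function uploadSequentially(fileName, chunks, sendChunk, maxRetries = 2) {
  const queue = chunks.slice(); // copy, so the caller's array is left intact
  const total = queue.length;
  let partNumber = 0;
  while (queue.length > 0) {
    const partName = fileName + ".part_" + (partNumber + 1) + "." + total;
    for (let attempt = 0; ; attempt++) {
      try {
        await sendChunk(queue[0], partName);
        break; // accepted by the server
      } catch (err) {
        if (attempt >= maxRetries) throw err; // give up; the chunk stays queued
      }
    }
    queue.shift(); // remove the chunk only after it was uploaded successfully
    partNumber++;
  }
  return partNumber; // number of parts sent
}

// Usage with a fake sender that fails once on part 2
const sent = [];
let failedOnce = false;
function fakeSend(chunk, partName) {
  if (partName.includes(".part_2.") && !failedOnce) {
    failedOnce = true;
    return Promise.reject(new Error("network"));
  }
  sent.push(partName);
  return Promise.resolve("ok");
}
uploadSequentially("a.bin", ["c1", "c2", "c3"], fakeSend).then((n) => {
  console.log(n);               // 3
  console.log(sent.join(", ")); // a.bin.part_1.3, a.bin.part_2.3, a.bin.part_3.3
});
```

Sending sequentially gives up the parallelism of the original loop in exchange for ordered arrival and simple retries; which trade-off fits depends on file sizes and network conditions.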

HTML code:

```html
<h2>Test Multiple Chunk Upload</h2>
<p>
    <input type="file" name="uploadfile" id="uploadfile" multiple="multiple" />
    <br />
    <a href="#" id="btnUpload" class="btn btn-primary">Upload file</a>
</p>
```

Back-end Web API controller code:

```csharp
/// <summary>
/// Upload sanger camera order
/// </summary>
/// <remarks>The UserId and UserName (user email) form fields are required.</remarks>
/// <returns>API_SUCCESS, or API_FAILED when the request contains no file</returns>
[HttpPost]
[Route("api/customer/GA/SaveSangerCameraOrder")]
[MimeMultipart]
public HttpResponseMessage SaveSangerCameraOrder()
{
    var request = HttpContext.Current.Request;
    var files = request.Files;
    if (files.Count <= 0)
    {
        return new HttpResponseMessage()
        {
            StatusCode = System.Net.HttpStatusCode.OK,
            Content = new StringContent(ConstantStringHelper.API_FAILED)
        };
    }

    // receive the request form parameters
    var userName = request.Form["UserName"];
    var businessLine = request.Form["BusinessLine"];
    var serviceItemType = request.Form["ServiceItemType"];
    var comment = request.Form["Comment"];
    var userId = request.Form["UserId"];
    if (string.IsNullOrEmpty(userName)) userName = "UnknownUser";

    // chunks are stored under <storage>/<user>/<yyyy-MM-dd>/
    string dateTimeTicket = string.Format("{0:yyyy-MM-dd}", System.DateTime.Now);
    var storagePath = ConfigurationManager.AppSettings["SangerOrderStorageLocation"].ToString();
    var fileSavePath = storagePath + "/" + userName + "/" + dateTimeTicket + "/";

    foreach (string file in files)
    {
        var FileDataContent = request.Files[file];
        if (FileDataContent != null && FileDataContent.ContentLength > 0)
        {
            // save the input stream to the upload folder using the posted "name.part_N.X" name
            var stream = FileDataContent.InputStream;
            var fileName = Path.GetFileName(FileDataContent.FileName);
            var UploadPath = request.MapPath(fileSavePath);
            Directory.CreateDirectory(UploadPath);
            string path = Path.Combine(UploadPath, fileName);

            // write to a temporary name first and rename when complete, so a concurrent
            // MergeFile call never counts (and merges) a half-written chunk
            string tempPath = Path.Combine(UploadPath, "~" + System.Guid.NewGuid().ToString("N") + ".uploading");
            using (var fileStream = System.IO.File.Create(tempPath))
            {
                stream.CopyTo(fileStream);
            }
            if (System.IO.File.Exists(path))
                System.IO.File.Delete(path);
            System.IO.File.Move(tempPath, path);

            // once the file part is saved, see if we have enough to merge it
            Utils UT = new Utils();
            var isMergedSuccess = UT.MergeFile(path);
            if (isMergedSuccess)
            {
                // generate a txt document containing the customer comment
                string timeTicket = string.Format("{0:yyyyMMddHHmmss}", System.DateTime.Now);
                string commentPath = Path.Combine(UploadPath, timeTicket + "OrderComment.txt");
                // the using block flushes and closes the stream; Comment may be absent
                using (FileStream fs = new FileStream(commentPath, FileMode.Create))
                {
                    byte[] data = System.Text.Encoding.Default.GetBytes(comment ?? string.Empty);
                    fs.Write(data, 0, data.Length);
                }
            }
        }
    }

    return new HttpResponseMessage()
    {
        StatusCode = System.Net.HttpStatusCode.OK,
        Content = new StringContent(ConstantStringHelper.API_SUCCESS)
    };
}
```
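After each part is saved, the controller asks Utils.MergeFile whether the upload is complete. MergeFile only compares the number of matching part files with the total X; its own comment suggests also confirming that every part 1..X is present and in sequence. The JavaScript sketch below shows that stricter check plus the ordered concatenation, working on in-memory Buffers rather than files for illustration; planMerge is a hypothetical name.

```javascript
// Sketch (hypothetical helper): decide whether all parts "<base>.part_N.X" have
// arrived and, if so, concatenate them in part-number order.
const PART_MARK = ".part_";

// parts: array of { name: "<base>.part_N.X", data: Buffer }
// Returns the merged Buffer, or null while parts are still missing.
function planMerge(parts) {
  const byNumber = new Map();
  let total = null;
  for (const { name, data } of parts) {
    const at = name.lastIndexOf(PART_MARK);
    if (at < 0) continue; // not a part file
    const [n, x] = name.slice(at + PART_MARK.length).split(".").map(Number);
    if (!Number.isInteger(n) || !Number.isInteger(x) || n < 1 || n > x) {
      throw new Error("bad part name: " + name);
    }
    if (total === null) total = x;
    else if (x !== total) throw new Error("inconsistent total in: " + name);
    byNumber.set(n, data);
  }
  if (total === null) return null; // nothing to merge
  for (let n = 1; n <= total; n++) {
    if (!byNumber.has(n)) return null; // still waiting for part n
  }
  const ordered = [];
  for (let n = 1; n <= total; n++) ordered.push(byNumber.get(n));
  return Buffer.concat(ordered);
}

// Parts arriving out of order are merged by part number
const merged = planMerge([
  { name: "a.txt.part_2.2", data: Buffer.from("world") },
  { name: "a.txt.part_1.2", data: Buffer.from("hello ") },
]);
console.log(merged.toString()); // hello world
console.log(planMerge([{ name: "a.txt.part_1.2", data: Buffer.from("x") }])); // null
```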

Utility class code:

Remember to close the streams.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

public class Utils
{
    public string FileName { get; set; }
    public string TempFolder { get; set; }
    public int MaxFileSizeMB { get; set; }
    public List<String> FileParts { get; set; }

    public Utils()
    {
        FileParts = new List<string>();
    }

    /// <summary>
    /// original name + ".part_N.X" (N = file part number, X = total files)
    /// Objective = enumerate files in folder, look for all matching parts of split file. If found, merge and return true.
    /// </summary>
    /// <param name="FileName">full path of the chunk that was just saved</param>
    /// <returns>true if this call merged the file</returns>
    public bool MergeFile(string FileName)
    {
        bool rslt = false;
        // parse out the different tokens from the filename according to the convention;
        // LastIndexOf, so ".part_" inside the original name or folder does not confuse the parse
        string partToken = ".part_";
        int partIndex = FileName.LastIndexOf(partToken);
        if (partIndex < 0)
            return false; // not a chunk file
        string baseFileName = FileName.Substring(0, partIndex);
        string trailingTokens = FileName.Substring(partIndex + partToken.Length);
        int FileIndex = 0;
        int FileCount = 0;
        int.TryParse(trailingTokens.Substring(0, trailingTokens.IndexOf(".")), out FileIndex);
        int.TryParse(trailingTokens.Substring(trailingTokens.IndexOf(".") + 1), out FileCount);

        // get a list of all file parts in the folder
        string Searchpattern = Path.GetFileName(baseFileName) + partToken + "*";
        string[] FilesList = Directory.GetFiles(Path.GetDirectoryName(FileName), Searchpattern);

        // only proceed if we have received all the file chunks
        // (improvement: also confirm parts 1..X are each present; a security check would also be important)
        if (FilesList.Length == FileCount)
        {
            // the singleton stops overlapping merges; AddFile returns false if another request is merging
            if (MergeFileManager.Instance.AddFile(baseFileName))
            {
                try
                {
                    // collect the parts with their part numbers so they can be put back in order
                    List<SortedFile> MergeList = new List<SortedFile>();
                    foreach (string file in FilesList)
                    {
                        string tokens = file.Substring(file.LastIndexOf(partToken) + partToken.Length);
                        int.TryParse(tokens.Substring(0, tokens.IndexOf(".")), out FileIndex);
                        MergeList.Add(new SortedFile { FileName = file, FileOrder = FileIndex });
                    }

                    // sort by the file-part number to ensure we merge back in the correct order
                    var MergeOrder = MergeList.OrderBy(s => s.FileOrder).ToList();

                    // FileMode.Create overwrites an earlier merged file with the same name
                    using (FileStream FS = new FileStream(baseFileName, FileMode.Create))
                    {
                        // merge each file chunk back into one contiguous file stream
                        foreach (var chunk in MergeOrder)
                        {
                            using (FileStream fileChunk = new FileStream(chunk.FileName, FileMode.Open))
                            {
                                fileChunk.CopyTo(FS);
                            } // the using block closes the chunk stream
                        }
                    }

                    // delete the chunk files only after the merged file has been written
                    foreach (var item in FilesList)
                    {
                        if (File.Exists(item))
                            File.Delete(item);
                    }
                    rslt = true;
                }
                finally
                {
                    // unlock the file even if the merge throws (the exception propagates unchanged)
                    MergeFileManager.Instance.RemoveFile(baseFileName);
                }
            }
        }
        return rslt;
    }

    /// <summary>
    /// Split FileName into 5 MB parts named original name + ".part_N.X" inside TempFolder.
    /// </summary>
    public List<string> SplitFiles()
    {
        string BaseFileName = Path.GetFileName(FileName);
        // set the size of file chunk we are going to split into
        int BufferChunkSize = 5 * (1024 * 1024); // 5MB
        // set a buffer size and an array to store the buffer data as we read it
        const int READBUFFER_SIZE = 1024;
        byte[] FSBuffer = new byte[READBUFFER_SIZE];

        // open the file to read it into chunks
        using (FileStream FS = new FileStream(FileName, FileMode.Open, FileAccess.Read, FileShare.Read))
        {
            // number of files that will be created: ceil(length / chunk size) in integer math, at least 1
            int TotalFileParts = (int)Math.Max(1, (FS.Length + BufferChunkSize - 1) / BufferChunkSize);
            int FilePartCount = 0;

            // scan through the file, and each time we get enough data to fill a chunk, write out that file
            while (FS.Position < FS.Length)
            {
                string FilePartName = String.Format("{0}.part_{1}.{2}",
                    BaseFileName, FilePartCount + 1, TotalFileParts);
                FilePartName = Path.Combine(TempFolder, FilePartName);
                FileParts.Add(FilePartName);

                using (FileStream FilePart = new FileStream(FilePartName, FileMode.Create))
                {
                    int bytesRemaining = BufferChunkSize;
                    int bytesRead = 0;
                    while (bytesRemaining > 0 && (bytesRead = FS.Read(FSBuffer, 0,
                        Math.Min(bytesRemaining, READBUFFER_SIZE))) > 0)
                    {
                        FilePart.Write(FSBuffer, 0, bytesRead);
                        bytesRemaining -= bytesRead;
                    }
                }
                // file written, loop for next chunk
                FilePartCount++;
            }
        }
        return FileParts;
    }
}

public struct SortedFile
{
    public int FileOrder { get; set; }
    public String FileName { get; set; }
}

/// <summary>
/// Thread-safe registry of files currently being merged.
/// It only coordinates requests inside one worker process, not across a web garden or farm.
/// </summary>
public class MergeFileManager
{
    // created eagerly, so initialization is thread-safe
    private static readonly MergeFileManager instance = new MergeFileManager();
    private readonly HashSet<string> MergeFileList = new HashSet<string>();
    private readonly object syncRoot = new object();

    private MergeFileManager() { }

    public static MergeFileManager Instance
    {
        get { return instance; }
    }

    /// <summary>Registers the file; returns false if it is already being merged.</summary>
    public bool AddFile(string BaseFileName)
    {
        lock (syncRoot)
        {
            return MergeFileList.Add(BaseFileName);
        }
    }

    public bool InUse(string BaseFileName)
    {
        lock (syncRoot)
        {
            return MergeFileList.Contains(BaseFileName);
        }
    }

    public bool RemoveFile(string BaseFileName)
    {
        lock (syncRoot)
        {
            return MergeFileList.Remove(BaseFileName);
        }
    }
}
```
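MergeFileManager acts as an in-process lock keyed by the base file name. The same idea in JavaScript, as a hedged sketch: a Set of keys, acquired with a single check-and-add step and always released in finally, so a failed merge does not leave the name locked forever. Like the C# singleton, it only coordinates callers within one process; withMergeLock and mergesInProgress are hypothetical names.

```javascript
// Sketch of a per-file merge guard (hypothetical helper, not the original API).
const mergesInProgress = new Set();

// Runs `work()` unless a merge for `baseFileName` is already running.
// Resolves to { ran: false } if skipped, otherwise { ran: true, value }.
async function withMergeLock(baseFileName, work) {
  if (mergesInProgress.has(baseFileName)) return { ran: false };
  mergesInProgress.add(baseFileName); // check and add run in one synchronous step
  try {
    return { ran: true, value: await work() };
  } finally {
    mergesInProgress.delete(baseFileName); // released even if work() throws
  }
}

// Two overlapping merge requests for the same file: only the first one runs
const slowMerge = () => new Promise((resolve) => setTimeout(() => resolve("merged"), 10));
Promise.all([withMergeLock("a.txt", slowMerge), withMergeLock("a.txt", slowMerge)])
  .then(([first, second]) => {
    console.log(first.ran, second.ran); // true false
  });
```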

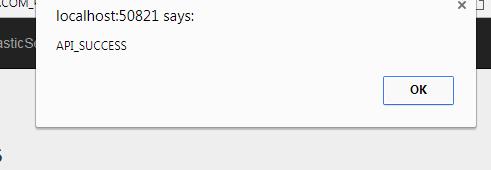

If a file is uploaded in six chunks, the success alert pops up six times; adapt this to your own business logic.
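To show one notification per file instead of one per chunk, one option is to wrap each chunk request in a Promise and wait for all of them. A hedged sketch: sendChunk is a hypothetical Promise-returning version of UploadFileChunk, onProgress is an optional callback for something like a progress bar, and uploadAllThenNotify is a hypothetical name. Parts are still sent in parallel, as in the original loop.

```javascript
// Sketch: send all parts in parallel but report once (hypothetical helper).
// `sendChunk(chunk, partName)` is assumed to return a Promise.
function uploadAllThenNotify(fileName, chunks, sendChunk, onProgress) {
  const total = chunks.length;
  let done = 0;
  const requests = chunks.map((chunk, i) =>
    sendChunk(chunk, fileName + ".part_" + (i + 1) + "." + total).then((result) => {
      done++;
      if (onProgress) onProgress(done, total); // e.g. update a progress bar
      return result;
    })
  );
  // resolves with all server responses once every part succeeded,
  // rejects as soon as any part fails
  return Promise.all(requests);
}

// Usage with a fake sender: six parts, one final message instead of six alerts
const fakeSender = (chunk, partName) => Promise.resolve("ok " + partName);
uploadAllThenNotify("big.zip", [1, 2, 3, 4, 5, 6], fakeSender, (d, t) => console.log(d + "/" + t))
  .then((responses) => console.log("upload complete:", responses.length, "parts"));
// prints 1/6 ... 6/6, then: upload complete: 6 parts
```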
