Building a highly available load-balancing cluster with nginx + keepalived


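Preface: this article was compiled by the editors of 小常识网 (cha138.com). It describes how to build a highly available load-balancing cluster with nginx + keepalived; we hope it serves as a useful reference.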

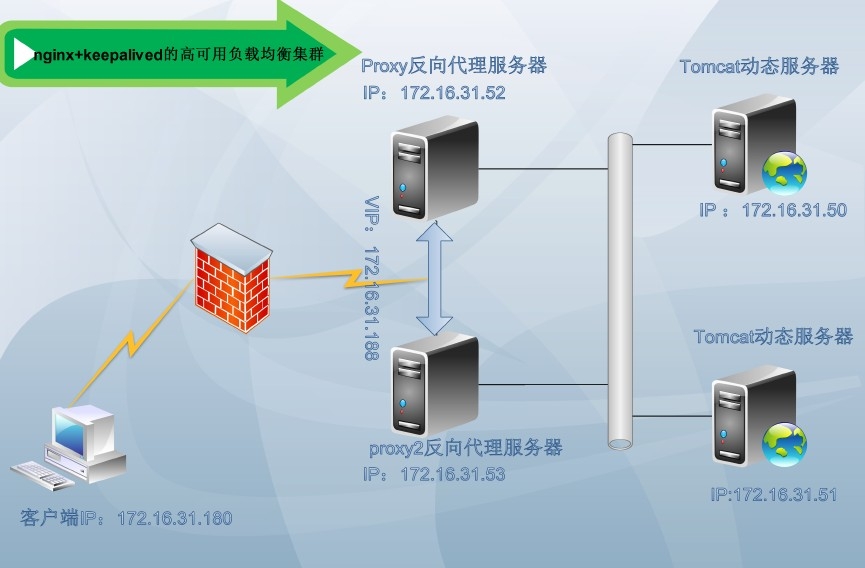

Lab architecture diagram:

Lab environment

How Nginx and Keepalived work

Reference blog: http://467754239.blog.51cto.com/4878013/1541421

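1. nginx

nginx uses a master + worker multi-process model and manages its child processes very reliably. The master process never handles requests itself; it only manages the workers and hands out work, which keeps the master process highly reliable. All control signals to the worker processes come from the master, and worker tasks that time out are terminated by the master; request handling follows a non-blocking model.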

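2. keepalived

Keepalived is a high-availability component for Linux that implements VRRP (Virtual Router Redundancy Protocol) router backup. Services built on Keepalived can hand the IP address from a failed master server to the backup server almost instantly. Its roles:

It is mainly used for health-checking the RealServers and for failover between the load-balancer (MASTER) host and the BACKUP host.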

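3. Single point of failure

Nginx is a powerful proxy, but a single nginx instance is a single point of failure. Keepalived solves this problem, and its failover time is very short.

With nginx + keepalived on two machines, the nginx reverse proxy becomes highly available: if one nginx fails, neither the application nor the internal network's access to the outside is affected.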

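4. Requirements this architecture must handle

1) While the Master is up, the Master holds the VIP and nginx runs on the Master

2) If the Master goes down, the backup takes over the VIP and runs the nginx service

3) If the nginx service on the master fails, the VIP moves to the backup server

4) The health of the backend servers must be checked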

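5. Notes

nginx runs on both the Master and the Backup. Whichever node's keepalived service stops, the VIP floats to the node whose keepalived is still running.

If the VIP should also float to the other node when nginx itself fails, this has to be controlled by a script, or by a shell command in the keepalived configuration file.

Note first that in this architecture keepalived cannot check the health of the backend servers; backend health is judged by nginx. nginx's check mechanism has a limitation: after a backend server goes down, nginx still sends requests to it; when the server fails to respond within a certain time, nginx passes the request on to another server and then stops sending new requests to the failed server for a while. Once that period has passed, however, nginx sends requests to the failed server again.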
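The passive behaviour described above is governed by the `max_fails` and `fail_timeout` parameters of the `server` directive in an nginx `upstream` block (defaults: 1 failure and 10 seconds). As a rough illustration only, the bash function below models that rule under a simplifying assumption: a backend is skipped while it has at least `max_fails` recorded failures and its last failure is no more than `fail_timeout` seconds old, and `max_fails=0` disables the accounting. It is not nginx code, and the name `peer_available` is hypothetical.

```bash
#!/usr/bin/env bash
# Simplified model of nginx's passive upstream health check (not nginx source code).
# peer_available FAILS MAX_FAILS LAST_FAIL_TS NOW FAIL_TIMEOUT
# Returns 0 if the backend would be considered for a new request, 1 if it is skipped.
peer_available() {
  local fails=$1 max_fails=$2 last_fail=$3 now=$4 fail_timeout=$5
  # max_fails=0 turns the failure accounting off: the backend is always eligible
  if (( max_fails == 0 )); then
    return 0
  fi
  # Too many recent failures: skip the backend until fail_timeout has elapsed
  if (( fails >= max_fails && now - last_fail <= fail_timeout )); then
    return 1
  fi
  return 0
}

# A backend that failed once at t=100, with the defaults max_fails=1 and fail_timeout=10
for t in 105 110 111; do
  if peer_available 1 1 100 "$t" 10; then
    echo "t=$t: eligible"
  else
    echo "t=$t: skipped"
  fi
done
```

Once the window has passed the backend is tried again; if that request fails as well, a new failure is recorded and the window starts over, which matches the "nginx still sends requests to the dead server later" effect described above.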

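Implementation:

Prerequisites for building the HA cluster:

1. The clocks of nodes proxy and proxy2 must be synchronized;

using NTP is recommended;

Reference blog: http://sohudrgon.blog.51cto.com/3088108/1598314

2. The nodes must be able to reach each other by host name;

using the hosts file is recommended;

the name used must match the name shown by the "uname -n" command on that node;

[root@proxy ~]# cat /etc/hosts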

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.1 server.magelinux.com server

172.16.31.52 proxy.stu31.com proxy

172.16.31.53 proxy2.stu31.com proxy2

172.16.31.50 tom1.stu31.com tom1

172.16.31.51 tom2.stu31.com tom2
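Prerequisite 2 can be checked on each node before going further. A minimal sketch, assuming bash and awk: the hypothetical helper `name_in_hosts` succeeds if a name appears as the canonical host name or an alias on a non-comment line of a hosts file, and would be called with the output of `uname -n`.

```bash
#!/usr/bin/env bash
# name_in_hosts NAME [FILE]: succeed if NAME is listed in the hosts file as a
# canonical name or alias (comments ignored). FILE defaults to /etc/hosts.
name_in_hosts() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    { sub(/#.*/, "") }                                      # strip comments, re-split fields
    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }    # field 1 is the IP address
    END { exit !found }
  ' "$file"
}

# Typical use on proxy or proxy2:
# if name_in_hosts "$(uname -n)"; then echo "ok"; else echo "missing: $(uname -n)"; fi
```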

3. The root users on the nodes must be able to log in to each other with SSH keys;

Node proxy:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub proxy2

Node proxy2:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub proxy

Test password-less SSH:

[root@proxy ~]# date ; ssh proxy2 date

Fri Jan 16 15:38:36 CST 2015

Fri Jan 16 15:38:36 CST 2015
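Comparing `date` output by eye only shows whole seconds side by side. A slightly more explicit check, sketched under the assumption that the key-based SSH from step 3 works, compares epoch seconds and flags a skew above a tolerance; `clock_skew`, `skew_ok` and the 1-second tolerance are illustrative choices, not part of the original setup.

```bash
#!/usr/bin/env bash
# clock_skew LOCAL_EPOCH REMOTE_EPOCH: print the absolute difference in seconds
clock_skew() {
  local d=$(( $1 - $2 ))
  echo $(( d < 0 ? -d : d ))
}

# skew_ok LOCAL_EPOCH REMOTE_EPOCH MAX: succeed if the clocks differ by at most MAX seconds
skew_ok() {
  (( $(clock_skew "$1" "$2") <= $3 ))
}

# Usage on node proxy (requires password-less SSH to proxy2):
# local_t=$(date +%s); remote_t=$(ssh proxy2 date +%s)
# skew_ok "$local_t" "$remote_t" 1 || echo "clock skew too large, check ntpd"
```

The SSH round trip is included in the measured difference, so a skew of about one second can be measurement noise rather than clock drift.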

I. Install nginx

1. Install nginx on both nodes

# yum install nginx-1.6.2-1.el6.ngx.x86_64.rpm

2.分别在两台机器上创建不同的测试页面[为了测试]

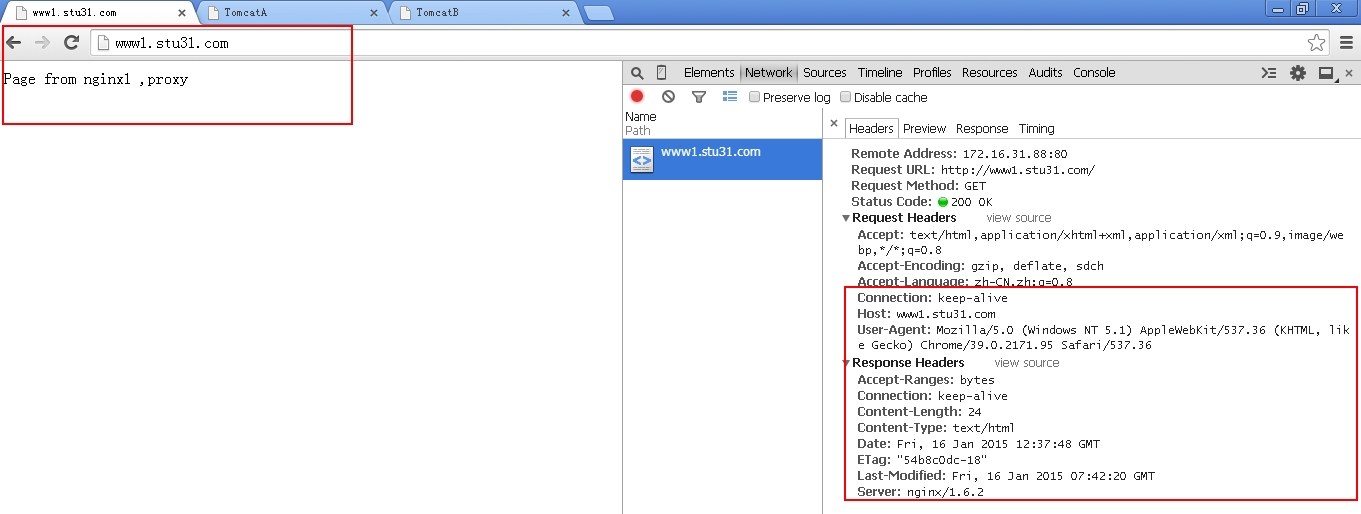

[[email protected] ~]# echo "Page from nginx1 ,proxy" >/usr/share/nginx/html/index.html

[[email protected] ~]# echo "Page from nginx2 ,proxy2" >/usr/share/nginx/html/index.html

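3. Configure nginx

Configure nginx to reverse-proxy dynamic content to the backend tomcat server group, while static content is served directly by the local nginx;

Define the backend tomcat server group:

[root@proxy ~]# vim /etc/nginx/nginx.conf

#Add the following backend server group (inside the http block)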

upstream tcsrvs {

ip_hash;

server 172.16.31.50:8080;

server 172.16.31.51:8080;

}
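`ip_hash` keeps each client on the same tomcat, which matters here because the test pages store data in the session. As a rough illustration only (a simplified model written for this article that ignores weights, failed backends and nginx's fallback after repeated misses), nginx hashes the first three octets of the client's IPv4 address, so all clients in the same /24 land on the same backend. The constants 89, 113 and 6271 follow the nginx `ngx_http_upstream_ip_hash_module` source as understood by the author of this sketch and should be treated as an assumption; `ip_hash_index` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# ip_hash_index CLIENT_IP N_SERVERS: 0-based index of the upstream server a client
# maps to, assuming every server is up and has weight 1 (simplified model).
ip_hash_index() {
  local ip=$1 n=$2 hash=89 i
  local -a o
  IFS=. read -r -a o <<< "$ip"
  # only the first three octets of an IPv4 address take part in the hash
  for i in 0 1 2; do
    hash=$(( (hash * 113 + o[i]) % 6271 ))
  done
  echo $(( hash % n ))
}

servers=(172.16.31.50:8080 172.16.31.51:8080)   # the tcsrvs group above
for client in 172.16.31.100 172.16.31.200 172.16.30.7; do
  echo "$client -> ${servers[$(ip_hash_index "$client" ${#servers[@]})]}"
done
```

Because only three octets are hashed, every client behind one /24 (for example one office NAT range) is pinned to the same tomcat, which can make the load uneven in small tests.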

Define the reverse proxy:

[root@proxy nginx]# pwd

/etc/nginx

[root@proxy nginx]# vim conf.d/default.conf

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log /var/log/nginx/log/host.access.log main;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

location ~* \.(jsp|do)$ {

proxy_pass http://tcsrvs;

}

}
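With this split, `location /` serves everything from the local html directory unless the URI ends in `.jsp` or `.do`; in that case the regex location takes precedence over the `/` prefix location and the request goes to `tcsrvs`. The `~*` modifier makes the match case-insensitive, and nginx matches against the URI path without the query string. A small bash sketch of that routing decision for checking URIs before testing in a browser; `route_for` is a hypothetical helper that models only these two locations and needs bash 4 or later.

```bash
#!/usr/bin/env bash
# route_for URI: print which of the two locations above would handle URI
route_for() {
  local path=${1%%\?*}            # nginx matches the path, without the query string
  local re='\.(jsp|do)$'          # the regex from "location ~* \.(jsp|do)$"
  path=${path,,}                  # lower-case it to emulate the case-insensitive ~* modifier
  if [[ $path =~ $re ]]; then
    echo tcsrvs
  else
    echo local
  fi
}

for uri in /index.html /index.jsp /INDEX.JSP '/login.do?user=a' /doc/readme.txt; do
  printf '%-20s %s\n' "$uri" "$(route_for "$uri")"
done
```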

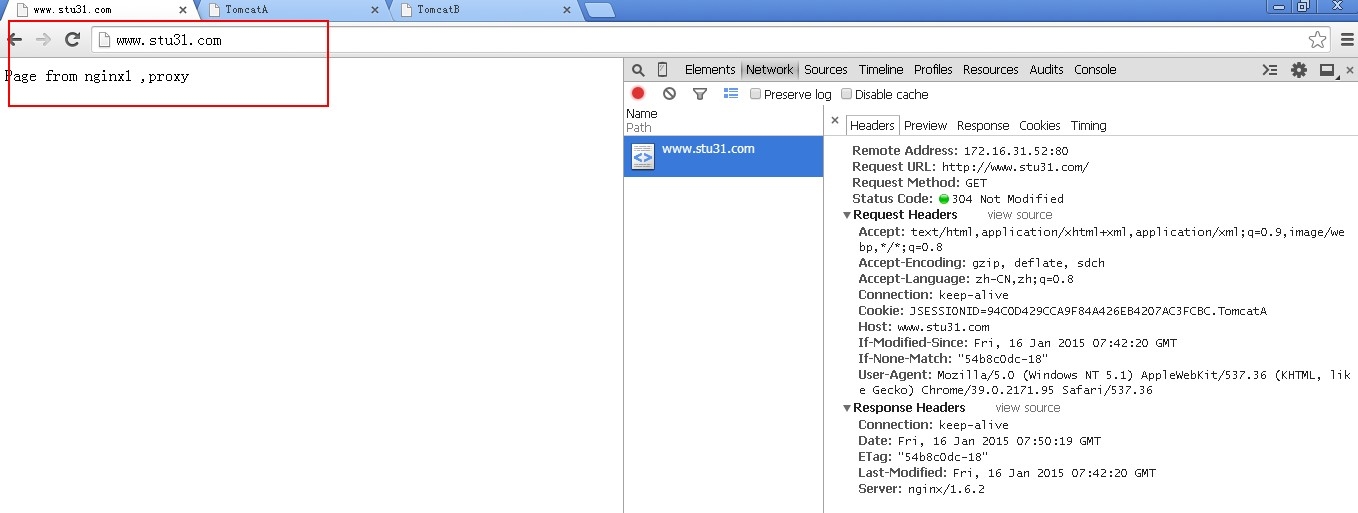

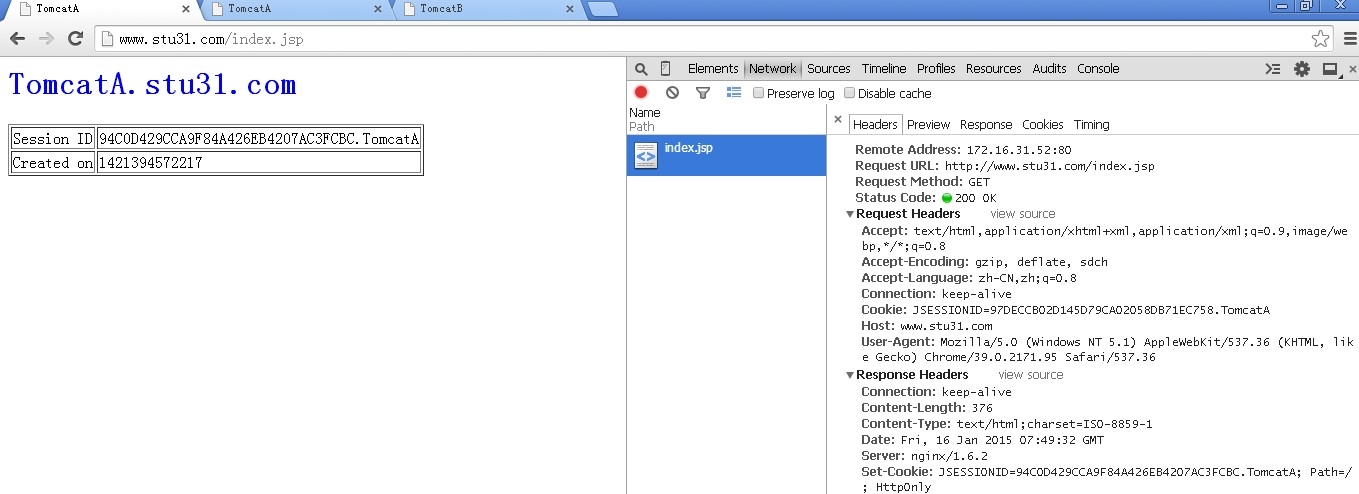

4. Start nginx and test access

Static content is the page served by the local nginx:

Dynamic pages are passed to the backend tomcat servers:

Create the tomcat test pages on nodes tom1 and tom2:

Node tom1:

[root@tom1 testapp]# pwd

/usr/local/tomcat/webapps/ROOT

[root@tom1 testapp]# vim index.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="red">TomcatA.stu31.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("stu31.com","stu31.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

Node tom2:

[root@tom2 testapp]# pwd

/usr/local/tomcat/webapps/ROOT

[root@tom2 testapp]# vim index.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatB </title></head>

<body>

<h1><font color="red">TomcatB.stu31.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("stu31.com","stu31.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>
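To see which tomcat answered without opening a browser, one option is to request the page repeatedly and print only its `<title>`. A sketch, assuming `curl` is installed on the client and using proxy's address 172.16.31.52 as an example URL; `page_title` is a hypothetical helper. Because of `ip_hash`, repeated requests from one client should keep returning the same title (TomcatA or TomcatB).

```bash
#!/usr/bin/env bash
# page_title: read HTML on stdin and print the text of the first <title> element
page_title() {
  sed -n 's/.*<title>\([^<]*\)<\/title>.*/\1/p' | head -n 1
}

# Usage (needs curl and a reachable nginx):
# for i in 1 2 3 4 5; do curl -s http://172.16.31.52/index.jsp | page_title; done
```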

Copy the configuration files to node proxy2:

[root@proxy nginx]# scp nginx.conf proxy2:/etc/nginx/

nginx.conf 100% 740 0.7KB/s 00:00

[root@proxy nginx]# scp conf.d/default.conf proxy2:/etc/nginx/conf.d/

default.conf 100% 1167 1.1KB/s 00:00

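II. Install and configure keepalived

CentOS 6.6 ships keepalived 1.2.13, which is sufficient here; the newest keepalived release at the time of writing was 1.2.15;

1. Install keepalived on both nodes

# yum install -y keepalived

2. Configure keepalived

Edit the keepalived configuration file

The file is /etc/keepalived/keepalived.conf

MASTER node: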

! Configuration File for keepalived #global definitions

global_defs {

notification_email { #addresses keepalived emails on events (e.g. a failover); several allowed, one per line

}

notification_email_from [email protected]

smtp_server 127.0.0.1 #SMTP server used to send the emails

smtp_connect_timeout 30

router_id LVS_DEVEL #identifier of the machine running keepalived

}

vrrp_instance VI_1 {

state MASTER #this node is the primary server

interface eth0 #local network interface VRRP runs on

virtual_router_id 100 #must be identical on MASTER and BACKUP

mcast_src_ip 172.16.31.52 #source address of the VRRP adverts: the primary nginx's IP

priority 100 #the node with the higher priority becomes MASTER

advert_int 1 #VRRP advertisement (heartbeat) interval in seconds

authentication {

auth_type PASS #VRRP authentication type

auth_pass oracle #VRRP password

}

virtual_ipaddress { #VRRP HA virtual address(es); put each additional VIP on its own line

172.16.31.188/16 dev eth0

}

}

BACKUP node:

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 100

mcast_src_ip 172.16.31.53

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass oracle

}

virtual_ipaddress {

172.16.31.188/16 dev eth0

}

}
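Settings such as `virtual_router_id`, `advert_int`, the authentication block and the VIP list must agree on both nodes, and keepalived 1.2.x may silently ignore a misspelled keyword (for example `virutal_ipaddress` instead of `virtual_ipaddress`), leaving the instance with no VIP. A rough consistency check, sketched with awk under the assumption that each setting sits on its own line as in the files above; `conf_value`, `conf_vips` and `compare_vrrp` are hypothetical helpers, and the parser is deliberately naive (first occurrence only, one vrrp_instance per file).

```bash
#!/usr/bin/env bash
# conf_value KEY FILE: print the value following the first occurrence of KEY
conf_value() {
  awk -v k="$1" '$1 == k { print $2; exit }' "$2"
}

# conf_vips FILE: print the addresses listed inside the virtual_ipaddress { } block
conf_vips() {
  awk '
    /^[[:space:]]*virtual_ipaddress[[:space:]]*[{]/ { inb = 1; next }
    inb && /[}]/ { inb = 0 }
    inb && NF    { print $1 }
  ' "$1"
}

# compare_vrrp MASTER_CONF BACKUP_CONF: report settings that differ; return 1 if any do
compare_vrrp() {
  local a=$1 b=$2 rc=0 key
  for key in virtual_router_id advert_int auth_type auth_pass; do
    if [ "$(conf_value "$key" "$a")" != "$(conf_value "$key" "$b")" ]; then
      echo "mismatch: $key"
      rc=1
    fi
  done
  if [ "$(conf_vips "$a")" != "$(conf_vips "$b")" ]; then
    echo "mismatch: virtual_ipaddress"
    rc=1
  fi
  return "$rc"
}

# Usage from node proxy:
# scp proxy2:/etc/keepalived/keepalived.conf /tmp/backup.conf
# compare_vrrp /etc/keepalived/keepalived.conf /tmp/backup.conf
```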

3. Start keepalived

Enable keepalived at boot (on both nodes):

# chkconfig keepalived on

Start keepalived on both nodes:

[root@proxy ~]# service keepalived start ; ssh proxy2 "service keepalived start"

4. Check the VIP

#First check the VIP on the master node

[root@proxy keepalived]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:3b:23:60 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.52/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.188/16 scope global secondary eth0

inet6 fe80::a00:27ff:fe3b:2360/64 scope link

valid_lft forever preferred_lft forever

#Then check the backup node, which does not hold the VIP

[root@proxy2 keepalived]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:6e:bd:28 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.53/16 brd 172.16.255.255 scope global eth0

inet6 fe80::a00:27ff:fe6e:bd28/64 scope link

valid_lft forever preferred_lft forever
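Instead of reading the whole `ip addr` listing, a script can ask directly whether the VIP is configured on the interface. A sketch, assuming iproute2's `ip` command; `vip_present` is a hypothetical helper that reads `ip addr` output on stdin, so it can also be tried on saved output such as the listings above.

```bash
#!/usr/bin/env bash
# vip_present VIP: succeed if VIP appears as an "inet" address in `ip addr` output on stdin
vip_present() {
  awk -v vip="$1" '
    { for (i = 1; i < NF; i++)
        if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == vip) found = 1 } }
    END { exit !found }
  '
}

# Usage on either node:
# if ip -o -4 addr show dev eth0 | vip_present 172.16.31.188; then echo "holds VIP"; else echo "no VIP"; fi
```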

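5. Check the logs of the VIP election after keepalived starts:

Log on the MASTER node:

When keepalived starts, the nodes elect the master according to the priorities in the configuration files; the log shows that 172.16.31.52 became master. The "receive an invalid ip number count associated with VRID!" and "bogus VRRP packet" lines mean that this node received VRRP adverts for the same virtual_router_id whose number of virtual addresses differs from its own configuration (for example from a peer whose virtual_ipaddress block is missing or misspelled, or from another VRRP router on the segment using the same ID); keepalived drops such packets.

[root@proxy keepalived]# tail -f /var/log/messages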

Jan 16 16:31:06 proxy Keepalived[5807]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 16:31:06 proxy Keepalived[5809]: Starting Healthcheck child process, pid=5811

Jan 16 16:31:06 proxy Keepalived[5809]: Starting VRRP child process, pid=5812

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Registering Kernel netlink reflector

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Registering Kernel netlink command channel

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Registering Kernel netlink reflector

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Registering Kernel netlink command channel

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Registering gratuitous ARP shared channel

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Configuration is using : 62912 Bytes

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: Using LinkWatch kernel netlink reflector...

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Configuration is using : 7455 Bytes

Jan 16 16:31:06 proxy Keepalived_healthcheckers[5811]: Using LinkWatch kernel netlink reflector...

Jan 16 16:31:06 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 16:31:07 proxy Keepalived_healthcheckers[5811]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: receive an invalid ip number count associated with VRID!

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: bogus VRRP packet received on eth0 !!!

Jan 16 16:31:07 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Dropping received VRRP packet...

Jan 16 16:31:08 proxy Keepalived_vrrp[5812]: receive an invalid ip number count associated with VRID!

Jan 16 16:31:08 proxy Keepalived_vrrp[5812]: bogus VRRP packet received on eth0 !!!

Jan 16 16:31:08 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Dropping received VRRP packet...

Jan 16 16:31:09 proxy Keepalived_vrrp[5812]: receive an invalid ip number count associated with VRID!

Jan 16 16:31:09 proxy Keepalived_vrrp[5812]: bogus VRRP packet received on eth0 !!!

Jan 16 16:31:09 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Dropping received VRRP packet...

Jan 16 16:31:12 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Log on the BACKUP node:

Because its priority is lower, it becomes the backup node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 16:31:09 proxy2 Keepalived[2176]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 16:31:09 proxy2 Keepalived[2178]: Starting Healthcheck child process, pid=2180

Jan 16 16:31:09 proxy2 Keepalived[2178]: Starting VRRP child process, pid=2181

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Registering Kernel netlink reflector

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Registering Kernel netlink command channel

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Registering Kernel netlink reflector

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Registering Kernel netlink command channel

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Registering gratuitous ARP shared channel

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Configuration is using : 7455 Bytes

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Configuration is using : 62912 Bytes

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: Using LinkWatch kernel netlink reflector...

Jan 16 16:31:09 proxy2 Keepalived_healthcheckers[2180]: Using LinkWatch kernel netlink reflector...

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 16:31:09 proxy2 Keepalived_vrrp[2181]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
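Most of these lines are start-up noise; the election itself shows up in the `VRRP_Instance(...) Entering ... STATE` lines. A small filter, sketched with sed for the syslog format shown above (`vrrp_states` is a hypothetical helper), prints only the timestamp, the instance and the state it enters.

```bash
#!/usr/bin/env bash
# vrrp_states: read syslog lines on stdin, print "TIME INSTANCE STATE" for each state entry
vrrp_states() {
  sed -n 's/^\([A-Z][a-z]* *[0-9]* [0-9:]*\) .*VRRP_Instance(\([^)]*\)) Entering \([A-Z]*\) STATE.*/\1 \2 \3/p'
}

# Usage on either node:
# tail -f /var/log/messages | vrrp_states
```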

6. Stop keepalived on the MASTER node; the BACKUP node then becomes the master.

Stop keepalived on the master:

[root@proxy keepalived]# service keepalived stop

Stopping keepalived: [ OK ]

Follow the automatic failover in the logs:

The VIP is removed from the master automatically:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 16:37:33 proxy Keepalived[5809]: Stopping Keepalived v1.2.13 (10/15,2014)

Jan 16 16:37:33 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) sending 0 priority

Jan 16 16:37:33 proxy Keepalived_vrrp[5812]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 16 16:37:33 proxy Keepalived_healthcheckers[5811]: Netlink reflector reports IP 172.16.31.188 removed

The backup is elected master automatically and takes over the VIP:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 16:37:34 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 16:37:35 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 16:37:35 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 16:37:35 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 16:37:35 proxy2 Keepalived_healthcheckers[2180]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 16:37:40 proxy2 Keepalived_vrrp[2181]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

7. Start keepalived on the master again and measure the failover time:

[root@proxy keepalived]# service keepalived start

Starting keepalived: [ OK ]

Measured from the physical host with a continuous ping, the switchover interrupts traffic for about one second (one lost reply):

C:\Users\GuoGang>ping -t 172.16.31.188

Pinging 172.16.31.188 with 32 bytes of data:

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Request timed out.

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Reply from 172.16.31.188: bytes=32 time<1ms TTL=64

Ping statistics for 172.16.31.188:

Packets: Sent = 9, Received = 8, Lost = 1 (11% loss),
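On a Linux client the same measurement can be scripted: probe the VIP once per interval and record the longest run of consecutive failures, which approximates the failover gap in probe intervals. The helper `longest_outage` and the `PROBE_INTERVAL` variable are hypothetical; the probe command is passed in as arguments so that any check (ping, curl, ...) can be used.

```bash
#!/usr/bin/env bash
# longest_outage N CMD [ARGS...]: run CMD N times, PROBE_INTERVAL seconds apart
# (default 1), and print the longest run of consecutive failures.
longest_outage() {
  local n=$1; shift
  local i run=0 max=0
  for (( i = 0; i < n; i++ )); do
    if "$@" >/dev/null 2>&1; then
      run=0
    else
      run=$(( run + 1 ))
      if (( run > max )); then max=$run; fi
    fi
    sleep "${PROBE_INTERVAL:-1}"
  done
  echo "$max"
}

# Usage on a Linux client while keepalived is stopped/started on the master
# (iputils ping: -c 1 sends one packet, -W 1 waits at most 1 second for the reply):
# longest_outage 30 ping -c 1 -W 1 172.16.31.188
```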

8. Point the www record in DNS at the virtual IP

For building the DNS server, see the blog post: http://sohudrgon.blog.51cto.com/3088108/1588344

# vim /var/named/stu31.com.zone

$TTL 600

$ORIGIN stu31.com.

@ IN SOA ns1.stu31.com. root.stu31.com. (

2014121801

1D

5M

1W

1H)

@ IN NS ns1.stu31.com.

ns1 IN A 172.16.31.52

www IN A 172.16.31.188
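The zone above carries the SOA serial `2014121801` in the common YYYYMMDDNN form. When a zone is edited, the serial is normally increased so that secondary name servers (if any) pick up the change; with a single name server, reloading named is enough. A sketch of the usual bump rule, allowing up to 99 changes per day; `next_serial` is a hypothetical helper, and today's date is passed in as an argument so it can be tested.

```bash
#!/usr/bin/env bash
# next_serial CURRENT TODAY: next YYYYMMDDNN serial for a zone changed on TODAY (YYYYMMDD)
next_serial() {
  local cur=$1 today=$2
  if [ "${cur:0:8}" = "$today" ]; then
    # already changed today: increase the two-digit counter (10# avoids octal for 08/09)
    printf '%s%02d\n' "$today" $(( 10#${cur:8:2} + 1 ))
  elif (( 10#$today > 10#${cur:0:8} )); then
    echo "${today}01"
  else
    # serial is already ahead of today's date: just increment it
    echo $(( cur + 1 ))
  fi
}

# Usage: next_serial 2014121801 "$(date +%Y%m%d)"
```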

Restart the named service;

9. Access test:

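III. Make keepalived fail over based on the state of nginx

1. By default keepalived does not monitor nginx: master and backup switch only when the keepalived service itself dies, and a failed nginx does not trigger a switch. Our goal is to fail over when either nginx or keepalived dies.

There are two ways to do this:

A. The keepalived configuration file can run shell scripts, so write a check for the nginx service there

B. Write a standalone script that monitors nginx and keepalived

keepalived ships sample configuration files that show how to check whether a service is healthy:

[root@proxy keepalived]# ls /usr/share/doc/keepalived-1.2.13/samples/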

keepalived.conf.fwmark keepalived.conf.track_interface

keepalived.conf.HTTP_GET.port keepalived.conf.virtualhost

keepalived.conf.inhibit keepalived.conf.virtual_server_group

keepalived.conf.IPv6 keepalived.conf.vrrp

keepalived.conf.misc_check keepalived.conf.vrrp.localcheck

keepalived.conf.misc_check_arg keepalived.conf.vrrp.lvs_syncd

keepalived.conf.quorum keepalived.conf.vrrp.routes

keepalived.conf.sample keepalived.conf.vrrp.scripts

keepalived.conf.SMTP_CHECK keepalived.conf.vrrp.static_ipaddress

keepalived.conf.SSL_GET keepalived.conf.vrrp.sync

keepalived.conf.status_code sample.misccheck.smbcheck.sh

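The sample keepalived.conf.vrrp.localcheck describes all the ways of checking whether a service is healthy;

2. For the first approach, add the following nginx check to the keepalived configuration file.

It is needed on both the master and the backup:

vrrp_script chk_nginx { #there are many ways to check nginx, e.g. by process or with a script

script "killall -0 nginx" #signal 0 only tests whether an nginx process exists

interval 1 #run the check every second

weight -2 #while nginx is not running, lower this node's priority by 2

fall 2 #consecutive failures before the check counts as failed

rise 1 #consecutive successes before it counts as healthy again

}

Then reference the script by name in the vrrp_instance section:

track_script {

chk_nginx #reference the script defined by vrrp_script above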

}
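How the `weight` turns into a failover can be checked with simple arithmetic. keepalived adjusts the advertised priority by `weight` according to the script result: a negative weight is applied while the script is failing, a positive weight while it succeeds. With MASTER 100 and BACKUP 99, a failing nginx check on the master gives 100 - 2 = 98 < 99, so the backup wins. A sketch of that calculation; `effective_priority` and `elect` are hypothetical helpers that ignore `fall`/`rise` timing and the VRRP tie-break on IP address.

```bash
#!/usr/bin/env bash
# effective_priority BASE WEIGHT RESULT: priority advertised for a vrrp_script RESULT (ok|fail)
effective_priority() {
  local base=$1 weight=$2 result=$3
  if (( weight < 0 )) && [ "$result" = fail ]; then
    echo $(( base + weight ))        # negative weight applies while the check fails
  elif (( weight > 0 )) && [ "$result" = ok ]; then
    echo $(( base + weight ))        # positive weight applies while the check succeeds
  else
    echo "$base"
  fi
}

# elect NAME1 PRIO1 NAME2 PRIO2: the higher priority becomes MASTER (ties not modelled)
elect() {
  if (( $2 > $4 )); then echo "$1"; else echo "$3"; fi
}

master=$(effective_priority 100 -2 fail)   # nginx stopped on proxy
backup=$(effective_priority 99 -2 ok)      # nginx running on proxy2
echo "proxy=$master proxy2=$backup -> MASTER: $(elect proxy "$master" proxy2 "$backup")"
```

The design point is that the absolute weight must be larger than the priority gap between the nodes: with a gap of 1, `weight -2` is enough, but if the priorities were 100 and 90 the same check would lower the master to 98 and never move the VIP.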

Test:

Configuration file on the MASTER node:

[root@proxy keepalived]# cat keepalived.conf

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

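vrrp_script chk_nginx { #there are many ways to check nginx, e.g. by process or with a script

script "killall -0 nginx" #signal 0 only tests whether an nginx process exists

interval 1 #run the check every second

weight -2 #while nginx is not running, lower this node's priority by 2

fall 2 #consecutive failures before the check counts as failed

rise 1 #consecutive successes before it counts as healthy again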

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 100

mcast_src_ip 172.16.31.52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass oracle

}

virtual_ipaddress {

172.16.31.188/16 dev eth0

}

track_script {

chk_nginx #reference the script defined by vrrp_script above

}

}

Configuration file on the BACKUP node:

[root@proxy2 keepalived]# cat keepalived.conf

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

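vrrp_script chk_nginx { #there are many ways to check nginx, e.g. by process or with a script

script "killall -0 nginx" #signal 0 only tests whether an nginx process exists

interval 1 #run the check every second

weight -2 #while nginx is not running, lower this node's priority by 2

fall 2 #consecutive failures before the check counts as failed

rise 1 #consecutive successes before it counts as healthy again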

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 100

mcast_src_ip 172.16.31.53

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass oracle

}

virtual_ipaddress {

172.16.31.188/16 dev eth0

}

track_script {

chk_nginx #reference the script defined by vrrp_script above

}

}

Restart keepalived, then stop nginx on the master and check whether keepalived fails over to the backup node automatically:

Restart the service:

[root@proxy keepalived]# service keepalived restart ; ssh proxy2 "service keepalived restart"

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

The logs show that the nginx check is now running:

Startup log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 17:18:02 proxy Keepalived[6098]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 17:18:02 proxy Keepalived[6100]: Starting Healthcheck child process, pid=6102

Jan 16 17:18:02 proxy Keepalived[6100]: Starting VRRP child process, pid=6104

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Registering Kernel netlink reflector

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Registering Kernel netlink reflector

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Registering Kernel netlink command channel

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Registering Kernel netlink command channel

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Registering gratuitous ARP shared channel

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Configuration is using : 7495 Bytes

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Configuration is using : 65170 Bytes

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: Using LinkWatch kernel netlink reflector...

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 17:18:02 proxy Keepalived_healthcheckers[6102]: Using LinkWatch kernel netlink reflector...

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: VRRP_Script(chk_nginx) succeeded

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 17:18:02 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Jan 16 17:18:03 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 17:18:03 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 17:18:03 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 17:18:03 proxy Keepalived_healthcheckers[6102]: Netlink reflector reports IP 172.16.31.188 added

Startup log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 17:18:03 proxy2 Keepalived[25883]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 17:18:03 proxy2 Keepalived[25885]: Starting Healthcheck child process, pid=25887

Jan 16 17:18:03 proxy2 Keepalived[25885]: Starting VRRP child process, pid=25888

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Registering Kernel netlink reflector

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Registering Kernel netlink reflector

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Registering Kernel netlink command channel

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Registering Kernel netlink command channel

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Registering gratuitous ARP shared channel

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Configuration is using : 7495 Bytes

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Configuration is using : 65170 Bytes

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: Using LinkWatch kernel netlink reflector...

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 17:18:03 proxy2 Keepalived_healthcheckers[25887]: Using LinkWatch kernel netlink reflector...

Jan 16 17:18:03 proxy2 Keepalived_vrrp[25888]: VRRP_Script(chk_nginx) succeeded

At this point only the nginx service is checked;

Stop nginx on the MASTER node and observe:

[root@proxy keepalived]# service nginx stop

Stopping nginx: [ OK ]

In the logs, the master's check fails and it removes the VIP:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 17:21:20 proxy Keepalived_vrrp[6104]: VRRP_Script(chk_nginx) failed

Jan 16 17:21:22 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Received higher prio advert

Jan 16 17:21:22 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 17:21:22 proxy Keepalived_vrrp[6104]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 16 17:21:22 proxy Keepalived_healthcheckers[6102]: Netlink reflector reports IP 172.16.31.188 removed

The backup's nginx check succeeds, so it takes over the VIP automatically:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 17:21:22 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) forcing a new MASTER election

Jan 16 17:21:22 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) forcing a new MASTER election

Jan 16 17:21:23 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 17:21:24 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 17:21:24 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 17:21:24 proxy2 Keepalived_healthcheckers[25887]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 17:21:24 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 17:21:29 proxy2 Keepalived_vrrp[25888]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

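This completes the setup that uses keepalived's built-in check script.

3. For the second approach, write a standalone script that checks whether the nginx process is running.

Keep this separate from the previous setup: if you use the standalone script, the built-in check above is not needed.

# vim nginxpidcheck.sh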

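#!/bin/bash

# Loop forever, checking every 5 seconds whether an nginx process exists

while :

do

nginxpid=$(ps -C nginx --no-header | wc -l)

if [ "$nginxpid" -eq 0 ];then

# nginx is not running: try to start it (adjust this path to your nginx binary;
# the nginx.org RPM installed above puts it at /usr/sbin/nginx)

/usr/local/nginx/sbin/nginx

sleep 5

nginxpid=$(ps -C nginx --no-header | wc -l)

echo "$nginxpid"

if [ "$nginxpid" -eq 0 ];then

# nginx still not running: stop keepalived so that the backup takes over the VIP

/etc/init.d/keepalived stop

fi

fi

sleep 5

done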

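The script can be started as a scheduled task; for this test we simply run it in the background:

This is an infinite loop, placed on the primary nginx machine (since that machine is currently serving traffic). Every 5 seconds it uses ps -C to count the nginx processes. If the count is 0 (nginx has died), it tries to start nginx; if the count is still 0 (nginx failed to start), it stops the local keepalived process, so the backup takes over the VIP and the backup's nginx serves the site. This keeps the nginx service highly available.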

Test:

Place the script in the /etc/keepalived directory on both nodes:

[root@proxy keepalived]# ls

keepalived.conf nginxpidcheck.sh

Run it in the background on both nodes:

#nohup sh /etc/keepalived/nginxpidcheck.sh &

Stop nginx on the master:

[root@proxy keepalived]# service nginx stop

Stopping nginx: [ OK ]

Check the logs to see whether the failover succeeded:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 17:33:37 proxy Keepalived[7221]: Stopping Keepalived v1.2.13 (10/15,2014)

Jan 16 17:33:37 proxy Keepalived_vrrp[7225]: VRRP_Instance(VI_1) sending 0 priority

Jan 16 17:33:37 proxy Keepalived_vrrp[7225]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 16 17:33:37 proxy Keepalived_healthcheckers[7224]: Netlink reflector reports IP 172.16.31.188 removed

The VIP has been removed.

The backup node takes over the VIP automatically:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 17:33:37 proxy2 Keepalived_vrrp[26984]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 17:33:38 proxy2 Keepalived_vrrp[26984]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 17:33:38 proxy2 Keepalived_vrrp[26984]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 17:33:38 proxy2 Keepalived_healthcheckers[26983]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 17:33:38 proxy2 Keepalived_vrrp[26984]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 17:33:43 proxy2 Keepalived_vrrp[26984]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

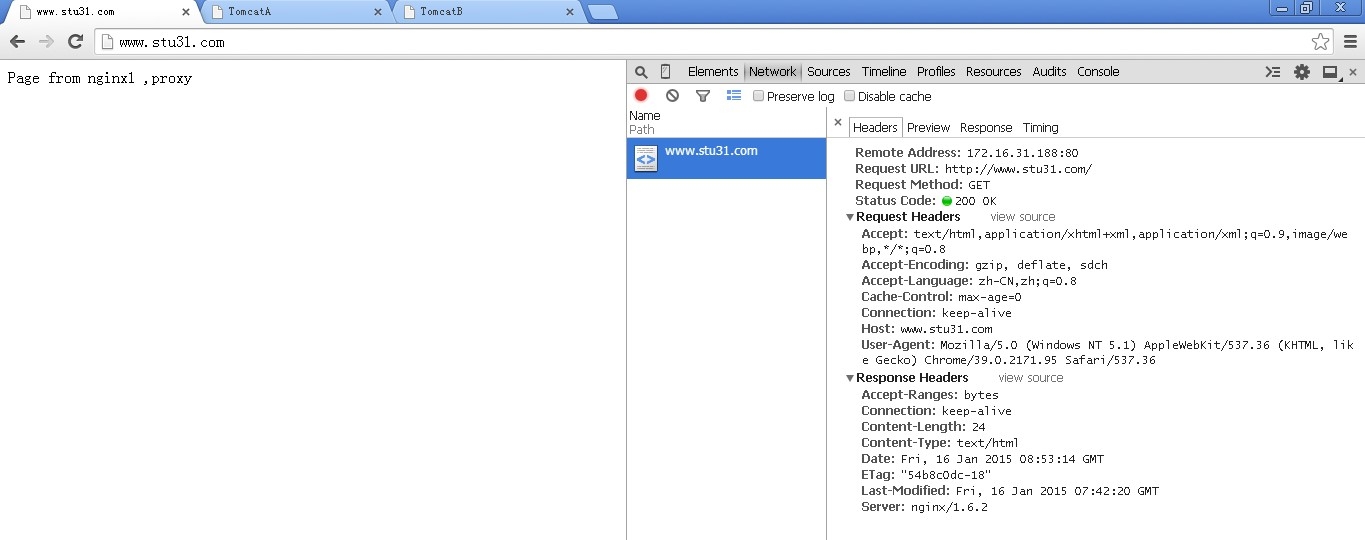

Access test: static content is now served by node proxy2:

4. A failover script with email notification

Create the notification script in /etc/keepalived/ on both nodes:

[root@proxy keepalived]# vim notify.sh

#!/bin/bash

vip=172.16.31.188

contact='[email protected]'

notify() {

mailsubject="$(hostname) to be $1: $vip floating"

mailbody="$(date '+%F %H:%M:%S'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case "$1" in

master)

notify master

/etc/rc.d/init.d/nginx start

exit 0

;;

backup)

notify backup

/etc/rc.d/init.d/nginx stop

exit 0

;;

fault)

notify fault

/etc/rc.d/init.d/nginx stop

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac

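When the node becomes MASTER the script starts nginx; when it becomes BACKUP it stops nginx; when the node enters the fault state it also stops nginx.

Call the script from the keepalived configuration file:

MASTER node:

[root@proxy keepalived]# cat keepalived.conf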

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

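vrrp_script chk_maintance_down { #if the file /etc/keepalived/down exists, the check fails and the priority drops by 5

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1 #run the check every second

weight -5 #while the check fails (down file present), lower this node's priority by 5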

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 100

mcast_src_ip 172.16.31.52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass oracle

}

virtual_ipaddress {

172.16.31.188/16 dev eth0

}

track_script {

chk_maintance_down #reference the script defined by vrrp_script above

}

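#when this node becomes master: email the administrator and start nginx

notify_master "/etc/keepalived/notify.sh master"

#when this node becomes backup: email the administrator and stop nginx

notify_backup "/etc/keepalived/notify.sh backup"

#when this node enters the fault state: email the administrator and stop nginx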

notify_fault "/etc/keepalived/notify.sh fault"

}

Configure the BACKUP node the same way:

[root@proxy2 keepalived]# cat keepalived.conf

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_maintance_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -5

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 100

mcast_src_ip 172.16.31.53

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass oracle

}

virtual_ipaddress {

172.16.31.188/16 dev eth0

}

track_script {

chk_maintance_down

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}
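`chk_maintance_down` turns the presence of a flag file into a planned failover: creating `/etc/keepalived/down` on the master lowers its priority from 100 to 95, below the backup's 99, so the VIP moves; removing the file lets the master take it back. A small sketch for toggling the flag; `maint` and `check_maint` are hypothetical helpers, `MAINT_FLAG` is an assumption added so the sketch can be tried outside /etc/keepalived, and `check_maint` reproduces the test from the `script` line of the configuration.

```bash
#!/usr/bin/env bash
MAINT_FLAG=${MAINT_FLAG:-/etc/keepalived/down}

# maint on|off|status: create or remove the flag file watched by chk_maintance_down
maint() {
  case "$1" in
    on)     touch "$MAINT_FLAG" ;;
    off)    rm -f "$MAINT_FLAG" ;;
    status) [[ -f $MAINT_FLAG ]] && echo "down (priority lowered by 5)" || echo "normal" ;;
    *)      echo "Usage: maint {on|off|status}" >&2; return 1 ;;
  esac
}

# Same test as the vrrp_script line: exit 1 while the flag file exists, 0 otherwise
check_maint() {
  [[ -f $MAINT_FLAG ]] && return 1 || return 0
}
```

Usage on the master before maintenance: `maint on`, wait for the backup's log to show it entering MASTER state, do the work, then `maint off`.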

Restart keepalived and watch the election:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 18:09:36 proxy Keepalived[10991]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 18:09:36 proxy Keepalived[10993]: Starting Healthcheck child process, pid=10996

Jan 16 18:09:36 proxy Keepalived[10993]: Starting VRRP child process, pid=10997

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Registering Kernel netlink reflector

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Registering Kernel netlink command channel

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Configuration is using : 7599 Bytes

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Registering Kernel netlink reflector

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Registering Kernel netlink command channel

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Registering gratuitous ARP shared channel

Jan 16 18:09:36 proxy Keepalived_healthcheckers[10996]: Using LinkWatch kernel netlink reflector...

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Configuration is using : 65356 Bytes

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: Using LinkWatch kernel netlink reflector...

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

#No down file found, so the check succeeds:

Jan 16 18:09:36 proxy Keepalived_vrrp[10997]: VRRP_Script(chk_maintance_down) succeeded

Jan 16 18:09:37 proxy Keepalived_vrrp[10997]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 18:09:38 proxy Keepalived_vrrp[10997]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 18:09:38 proxy Keepalived_vrrp[10997]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 18:09:38 proxy Keepalived_vrrp[10997]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 18:09:38 proxy Keepalived_healthcheckers[10996]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 18:09:43 proxy Keepalived_vrrp[10997]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 18:09:36 proxy2 Keepalived[29190]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 18:09:36 proxy2 Keepalived[29192]: Starting Healthcheck child process, pid=29194

Jan 16 18:09:36 proxy2 Keepalived[29192]: Starting VRRP child process, pid=29195

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Registering Kernel netlink reflector

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Registering Kernel netlink command channel

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Registering Kernel netlink reflector

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Registering Kernel netlink command channel

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Registering gratuitous ARP shared channel

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Configuration is using : 7599 Bytes

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Configuration is using : 65356 Bytes

Jan 16 18:09:36 proxy2 Keepalived_healthcheckers[29194]: Using LinkWatch kernel netlink reflector...

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: Using LinkWatch kernel netlink reflector...

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 18:09:36 proxy2 Keepalived_vrrp[29195]: VRRP_Script(chk_maintance_down) succeeded

Jan 16 18:09:43 proxy2 Keepalived[29192]: Stopping Keepalived v1.2.13 (10/15,2014)

Check the mail:

Mail on the MASTER node:

[root@proxy keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 2 messages 2 new

>N 1 root Fri Jan 16 18:08 18/696 "proxy.stu31.com to be master: 172.16.31.188 floating"

N 2 root Fri Jan 16 18:09 18/696 "proxy.stu31.com to be master: 172.16.31.188 floating"

& 2

Message 2:

From [email protected] Fri Jan 16 18:09:38 2015

Return-Path: <[email protected]>

X-Original-To: [email protected]

Delivered-To: [email protected]

Date: Fri, 16 Jan 2015 18:09:38 +0800

Subject: proxy.stu31.com to be master: 172.16.31.188 floating

User-Agent: Heirloom mailx 12.4 7/29/08

Content-Type: text/plain; charset=us-ascii

From: [email protected] (root)

Status: R

2015-01-16 18:09:38: vrrp transition, proxy.stu31.com changed to be master

Mail on the backup node:

[root@proxy2 keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 2 messages 2 new

>N 1 root Fri Jan 16 18:08 18/703 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

N 2 root Fri Jan 16 18:09 18/703 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

& 2

Message 2:

From [email protected] Fri Jan 16 18:09:36 2015

Return-Path: <[email protected]>

X-Original-To: [email protected]

Delivered-To: [email protected]

Date: Fri, 16 Jan 2015 18:09:36 +0800

Subject: proxy2.stu31.com to be backup: 172.16.31.188 floating

User-Agent: Heirloom mailx 12.4 7/29/08

Content-Type: text/plain; charset=us-ascii

From: [email protected] (root)

Status: R

2015-01-16 18:09:36: vrrp transition, proxy2.stu31.com changed to be backup

Now create a file named down in /etc/keepalived/ on the master node and watch whether the master fails over to the backup node:

[root@proxy keepalived]# touch down
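The chk_maintance_down check that reacts to this file is defined earlier in the configuration and is not reproduced in this section. A check of this kind only has to exit non-zero while the flag file exists; the sketch below assumes exactly that behaviour, and its function name, file name and argument handling are illustrative rather than the author's script:

```shell
#!/bin/sh
# Hypothetical maintenance-flag check for a keepalived vrrp_script.
# Exit status 0 = healthy (no flag file), 1 = node put into maintenance.
# While the check fails, keepalived applies the script's negative weight,
# which pushes the master's priority below the backup's.
chk_maintenance_down() {
    flag="${1:-/etc/keepalived/down}"   # assumed default flag path
    if [ -f "$flag" ]; then
        return 1    # flag present: report failure
    fi
    return 0        # no flag: report success
}

# When executed with the flag path as argument, the script's exit status
# is the check result; with no argument it does nothing (so it can be sourced).
if [ "$#" -gt 0 ]; then
    chk_maintenance_down "$1"
fi
```

Saved as, say, /etc/keepalived/chk_down.sh (a hypothetical path), it could be referenced as `script "/etc/keepalived/chk_down.sh /etc/keepalived/down"`. Deleting the file (`rm -f /etc/keepalived/down`) makes the check pass again; since keepalived preempts by default (unless `nopreempt` is set), the original master should then take the VIP back.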

Check the logs:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 19:09:10 proxy Keepalived_vrrp[20675]: VRRP_Script(chk_maintance_down) failed

Jan 16 19:09:12 proxy Keepalived_vrrp[20675]: VRRP_Instance(VI_1) Received higher prio advert

Jan 16 19:09:12 proxy Keepalived_vrrp[20675]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 19:09:12 proxy Keepalived_vrrp[20675]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 16 19:09:12 proxy Keepalived_healthcheckers[20674]: Netlink reflector reports IP 172.16.31.188 removed

Log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 19:09:12 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) forcing a new MASTER election

Jan 16 19:09:12 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) forcing a new MASTER election

Jan 16 19:09:13 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 19:09:14 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 19:09:14 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 19:09:14 proxy2 Keepalived_healthcheckers[2319]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 19:09:14 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 19:09:19 proxy2 Keepalived_vrrp[2320]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Check the mail:

The former master node has become the backup:

[root@proxy keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 4 messages 2 new 3 unread

U 1 root Fri Jan 16 18:08 19/706 "proxy.stu31.com to be master: 172.16.31.188 floating"

2 root Fri Jan 16 18:09 19/707 "proxy.stu31.com to be master: 172.16.31.188 floating"

>N 3 root Fri Jan 16 19:06 18/696 "proxy.stu31.com to be master: 172.16.31.188 floating"

N 4 root Fri Jan 16 19:09 18/696 "proxy.stu31.com to be backup: 172.16.31.188 floating"

& 4

Message 4:

From [email protected] Fri Jan 16 19:09:12 2015

Return-Path: <[email protected]>

X-Original-To: [email protected]

Delivered-To: [email protected]

Date: Fri, 16 Jan 2015 19:09:12 +0800

Subject: proxy.stu31.com to be backup: 172.16.31.188 floating

User-Agent: Heirloom mailx 12.4 7/29/08

Content-Type: text/plain; charset=us-ascii

From: [email protected] (root)

Status: R

2015-01-16 19:09:12: vrrp transition, proxy.stu31.com changed to be backup

& quit

The former backup node has become the master:

[root@proxy2 keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 5 messages 3 new 4 unread

U 1 root Fri Jan 16 18:08 19/713 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

2 root Fri Jan 16 18:09 19/714 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

>N 3 root Fri Jan 16 19:06 18/703 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

N 4 root Fri Jan 16 19:06 18/703 "proxy2.stu31.com to be backup: 172.16.31.188 floating"

N 5 root Fri Jan 16 19:09 18/703 "proxy2.stu31.com to be master: 172.16.31.188 floating"

& 5

Message 5:

From [email protected] Fri Jan 16 19:09:14 2015

Return-Path: <[email protected]>

X-Original-To: [email protected]

Delivered-To: [email protected]

Date: Fri, 16 Jan 2015 19:09:14 +0800

Subject: proxy2.stu31.com to be master: 172.16.31.188 floating

User-Agent: Heirloom mailx 12.4 7/29/08

Content-Type: text/plain; charset=us-ascii

From: [email protected] (root)

Status: R

2015-01-16 19:09:14: vrrp transition, proxy2.stu31.com changed to be master

& quit

Next, check the state of the nginx service:

nginx is stopped on the former master node:

[root@proxy keepalived]# service nginx status

nginx is stopped

nginx is running on the former backup node:

[root@proxy2 keepalived]# service nginx status

nginx (pid 2679) is running...
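The notify.sh script called through notify_master, notify_backup and notify_fault is not shown in this section. The mail subjects and bodies above, together with nginx being stopped on the node that dropped to backup, suggest that it mails root and starts or stops nginx on each transition. The following is a hypothetical reconstruction along those lines, not the author's file; the VIP, the recipient address and the `service nginx` calls are assumptions, and `DRY_RUN=1` makes it print what it would do instead of acting:

```shell
#!/bin/sh
# Hypothetical /etc/keepalived/notify.sh, reconstructed from the mails above.
# Usage: notify.sh master|backup|fault   (set DRY_RUN=1 to only print)
VIP="${VIP:-172.16.31.188}"           # assumed floating address
CONTACT="${CONTACT:-root@localhost}"  # assumed mail recipient

# Subject and body in the same format as the mails shown above.
notify_subject() { echo "$(uname -n) to be $1: $VIP floating"; }
notify_body() {
    echo "$(date '+%F %H:%M:%S'): vrrp transition, $(uname -n) changed to be $1"
}

# Send the mail, or print subject and body in dry-run mode.
notify() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        notify_subject "$1"
        notify_body "$1"
    else
        notify_body "$1" | mail -s "$(notify_subject "$1")" "$CONTACT"
    fi
}

# Run "service ..." for real, or just echo the command in dry-run mode.
svc() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "service $*"
    else
        service "$@"
    fi
}

main() {
    case "$1" in
        master) notify master; svc nginx start ;;
        backup) notify backup; svc nginx stop ;;
        fault)  notify fault ;;   # assumption: mail only, nginx untouched
        *) echo "Usage: $0 {master|backup|fault}" >&2; return 1 ;;
    esac
}

# Act only when called with an argument, so the file can also be sourced.
if [ "$#" -gt 0 ]; then
    main "$@"
fi
```

For example, `DRY_RUN=1 sh /etc/keepalived/notify.sh backup` prints the subject, the body and `service nginx stop`. Stopping nginx on backup fits the master/backup layout only: in the dual-master configuration that follows, VI_1 still calls notify.sh, and a script that stops nginx on backup would then stop it on proxy2 as soon as proxy2 enters BACKUP for VI_1, so a notify script for that layout should probably only send mail.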

This completes the experiment of building a master/backup load-balancing proxy with nginx+keepalived.

IV. Building a dual-master setup

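In this mode the two keepalived nodes back each other up: each node is MASTER for one VRRP instance (and its VIP) and BACKUP for the other.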

Instance configuration files:

proxy node:

[root@proxy keepalived]# cat keepalived.conf

global_defs {

notification_email { # notification email addresses

}

notification_email_from [email protected]

smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30

router_id LVS_DEVEL

}

#

vrrp_script chk_nginx {

script "killall -0 nginx" # service probe; exit status 0 means nginx is running

interval 1 # probe once every second

weight -2 # if nginx is down, lower the priority by 2

}

#

vrrp_instance VI_1 { # dual-master instance 1

state MASTER # proxy (172.16.31.52) is master, proxy2 (172.16.31.53) is backup

interface eth0

virtual_router_id 88 # VRID of instance 1 is 88

garp_master_delay 1

priority 100 # master (172.16.31.52) has priority 100, backup (172.16.31.53) has 99

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

#

virtual_ipaddress {

172.16.31.88/16 dev eth0 # VIP of instance 1

}

track_interface {

eth0

}

#

track_script { # track the check script

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state BACKUP # instance 2 is backup on proxy (172.16.31.52) and master on proxy2 (172.16.31.53)

interface eth0

virtual_router_id 188 # VRID of instance 2 is 188

garp_master_delay 1

priority 200 # instance 2 has priority 200 on proxy and 201 on proxy2

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

#

virtual_ipaddress {

172.16.31.188/16 dev eth0 # VIP of instance 2

}

track_interface {

eth0

}

track_script { # track the check script

chk_nginx

}

}
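`killall -0 nginx` sends signal 0 to every process named nginx: nothing is actually delivered, but the exit status shows whether such a process exists and may be signalled, which is all the vrrp_script needs. On CentOS killall comes from the psmisc package; if it is unavailable, a similar check can be built on `kill -0` and nginx's pid file. The sketch below assumes the pid file lives at /var/run/nginx.pid (it must match the `pid` directive in nginx.conf), and its function names are illustrative:

```shell
#!/bin/sh
# Hypothetical pidfile-based replacement for "killall -0 nginx".
# pid_alive PID: succeed if a process with that PID exists and can be signalled.
pid_alive() {
    [ -n "$1" ] && kill -0 "$1" 2>/dev/null
}

# chk_nginx_pidfile [PIDFILE]: succeed if the PID recorded in PIDFILE is alive.
chk_nginx_pidfile() {
    pidfile="${1:-/var/run/nginx.pid}"   # assumed path; check nginx.conf
    [ -r "$pidfile" ] || return 1        # no pid file: treat nginx as down
    pid_alive "$(cat "$pidfile")"
}

# When executed with a pid file argument, the exit status is the check result.
if [ "$#" -gt 0 ]; then
    chk_nginx_pidfile "$1"
fi
```

It could be referenced as `script "/etc/keepalived/chk_nginx.sh /var/run/nginx.pid"` (hypothetical path). Two caveats apply to any `kill -0` check: it fails with a permission error when the caller may not signal the target, so it should run as root, as keepalived's check scripts do in this setup; and a stale pid file whose PID has been reused by another process would be reported as alive.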

Configuration file on the proxy2 node:

[root@proxy2 keepalived]# cat keepalived.conf

global_defs {

notification_email { # notification email addresses

}

notification_email_from [email protected]

smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30

router_id LVS_DEVEL

}

#

vrrp_script chk_nginx {

script "killall -0 nginx" # service probe; exit status 0 means nginx is running

interval 1 # probe once every second

weight -2 # if nginx is down, lower the priority by 2

}

#

vrrp_instance VI_1 { # dual-master instance 1

state BACKUP # proxy (172.16.31.52) is master, proxy2 (172.16.31.53) is backup

interface eth0

virtual_router_id 88 # VRID of instance 1 is 88

garp_master_delay 1

priority 99 # master (172.16.31.52) has priority 100, backup (172.16.31.53) has 99

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

#

virtual_ipaddress {

172.16.31.88/16 dev eth0 # VIP of instance 1

}

track_interface {

eth0

}

#

track_script { # track the check script

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state MASTER # instance 2 is backup on proxy (172.16.31.52) and master on proxy2 (172.16.31.53)

interface eth0

virtual_router_id 188 # VRID of instance 2 is 188

garp_master_delay 1

priority 201 # instance 2 has priority 200 on proxy and 201 on proxy2

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

#

virtual_ipaddress {

172.16.31.188/16 dev eth0 # VIP of instance 2

}

track_interface {

eth0

}

track_script { # track the check script

chk_nginx

}

}
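With `weight -2`, keepalived lowers an instance's priority by 2 while chk_nginx fails, and the node advertising the higher effective priority holds that instance's VIP (keepalived preempts by default). The sketch below only replays that arithmetic for the priorities configured above; the function names are illustrative, and VRRP's tie-break on equal priority (the higher primary IP address wins) is simply reported as a tie:

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_OK
#   CHECK_OK=1: check passing, CHECK_OK=0: check failing.
#   A positive weight is added while the check passes,
#   a negative weight is added while the check fails.
effective_priority() {
    base=$1 weight=$2 ok=$3
    if [ "$weight" -gt 0 ] && [ "$ok" -eq 1 ]; then
        echo $((base + weight))
    elif [ "$weight" -lt 0 ] && [ "$ok" -eq 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# elect NAME1 PRIO1 NAME2 PRIO2: print the node expected to become MASTER.
elect() {
    if [ "$2" -gt "$4" ]; then
        echo "$1"
    elif [ "$4" -gt "$2" ]; then
        echo "$3"
    else
        echo "tie"   # real VRRP would compare primary IP addresses here
    fi
}
```

For instance, `elect proxy "$(effective_priority 200 -2 1)" proxy2 "$(effective_priority 201 -2 0)"` prints proxy: when proxy2's nginx is down, its VI_2 priority drops to 199, below proxy's 200, which is exactly what the startup log below shows for 172.16.31.188. A weight of magnitude 2 is enough because each pair of priorities differs by only 1; if nginx fails on both nodes, both drop by 2 and the original owner keeps the VIP.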

Start keepalived on both nodes:

[root@proxy keepalived]# service keepalived start ; ssh proxy2 "service keepalived start"

Starting keepalived: [ OK ]

Starting keepalived: [ OK ]

Check the startup logs:

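The proxy node's log shows that it has taken over 172.16.31.188, the VIP that should belong to proxy2 (instance VI_2), which suggests something is wrong on proxy2; we check that next: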

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 20:19:06 proxy Keepalived[25249]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 20:19:06 proxy Keepalived[25251]: Starting Healthcheck child process, pid=25254

Jan 16 20:19:06 proxy Keepalived[25251]: Starting VRRP child process, pid=25255

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Netlink reflector reports IP 172.16.31.52 added

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Registering Kernel netlink reflector

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Registering Kernel netlink command channel

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Registering gratuitous ARP shared channel

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Registering Kernel netlink reflector

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Registering Kernel netlink command channel

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Configuration is using : 72628 Bytes

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Configuration is using : 7886 Bytes

Jan 16 20:19:06 proxy Keepalived_healthcheckers[25254]: Using LinkWatch kernel netlink reflector...

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: Using LinkWatch kernel netlink reflector...

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Entering BACKUP STATE

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 20:19:06 proxy Keepalived_vrrp[25255]: VRRP_Script(chk_nginx) succeeded

Jan 16 20:19:07 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 16 20:19:08 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 16 20:19:08 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 16 20:19:08 proxy Keepalived_healthcheckers[25254]: Netlink reflector reports IP 172.16.31.88 added

Jan 16 20:19:08 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.88

Jan 16 20:19:09 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.88

Jan 16 20:19:10 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) forcing a new MASTER election

Jan 16 20:19:10 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) forcing a new MASTER election

Jan 16 20:19:11 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Transition to MASTER STATE

Jan 16 20:19:12 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Entering MASTER STATE

Jan 16 20:19:12 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) setting protocol VIPs.

Jan 16 20:19:12 proxy Keepalived_healthcheckers[25254]: Netlink reflector reports IP 172.16.31.188 added

# proxy2's log shows that the chk_nginx check failed, so nginx is probably stopped there; we start nginx and then check the logs again:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 20:19:06 proxy2 Keepalived[7034]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 16 20:19:06 proxy2 Keepalived[7036]: Starting Healthcheck child process, pid=7038

Jan 16 20:19:06 proxy2 Keepalived[7036]: Starting VRRP child process, pid=7039

Jan 16 20:19:06 proxy2 Keepalived_vrrp[7039]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 20:19:06 proxy2 Keepalived_vrrp[7039]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 20:19:06 proxy2 Keepalived_vrrp[7039]: Registering Kernel netlink reflector

Jan 16 20:19:06 proxy2 Keepalived_vrrp[7039]: Registering Kernel netlink command channel

Jan 16 20:19:06 proxy2 Keepalived_vrrp[7039]: Registering gratuitous ARP shared channel

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Netlink reflector reports IP 172.16.31.53 added

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Registering Kernel netlink reflector

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Registering Kernel netlink command channel

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: Configuration is using : 72628 Bytes

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: Using LinkWatch kernel netlink reflector...

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Configuration is using : 7886 Bytes

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 16 20:19:07 proxy2 Keepalived_healthcheckers[7038]: Using LinkWatch kernel netlink reflector...

Jan 16 20:19:07 proxy2 Keepalived_vrrp[7039]: VRRP_Script(chk_nginx) succeeded

Jan 16 20:19:08 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Transition to MASTER STATE

Jan 16 20:19:08 proxy2 Keepalived_vrrp[7039]: VRRP_Script(chk_nginx) failed

Jan 16 20:19:09 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Entering MASTER STATE

Jan 16 20:19:09 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) setting protocol VIPs.

Jan 16 20:19:09 proxy2 Keepalived_healthcheckers[7038]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 20:19:09 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 20:19:10 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 20:19:10 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Received higher prio advert

Jan 16 20:19:10 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Entering BACKUP STATE

Jan 16 20:19:10 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) removing protocol VIPs.

Jan 16 20:19:10 proxy2 Keepalived_healthcheckers[7038]: Netlink reflector reports IP 172.16.31.188 removed

Start nginx on the proxy2 node:

[root@proxy2 keepalived]# service nginx status

nginx is stopped

[root@proxy2 keepalived]# service nginx start

Starting nginx: [ OK ]

Watch the logs on both nodes:

The proxy node hands back the VIP that belongs to proxy2:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 16 20:19:12 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 20:19:13 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 20:23:28 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Received higher prio advert

Jan 16 20:23:28 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) Entering BACKUP STATE

Jan 16 20:23:28 proxy Keepalived_vrrp[25255]: VRRP_Instance(VI_2) removing protocol VIPs.

Jan 16 20:23:28 proxy Keepalived_healthcheckers[25254]: Netlink reflector reports IP 172.16.31.188 removed

proxy2 successfully takes its VIP back:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 16 20:23:27 proxy2 Keepalived_vrrp[7039]: VRRP_Script(chk_nginx) succeeded

Jan 16 20:23:28 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) forcing a new MASTER election

Jan 16 20:23:28 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) forcing a new MASTER election

Jan 16 20:23:29 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Transition to MASTER STATE

Jan 16 20:23:30 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Entering MASTER STATE

Jan 16 20:23:30 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) setting protocol VIPs.

Jan 16 20:23:30 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 16 20:23:30 proxy2 Keepalived_healthcheckers[7038]: Netlink reflector reports IP 172.16.31.188 added

Jan 16 20:23:31 proxy2 Keepalived_vrrp[7039]: VRRP_Instance(VI_2) Sending gratuitous ARPs on eth0 for 172.16.31.188
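To confirm which node currently holds each VIP without reading the logs, look for the address on the interface. keepalived adds VIPs as secondary addresses, which plain `ifconfig` does not list, so iproute2's `ip addr` is the tool to use. A small sketch (function names are illustrative); the parser reads `ip -o -4 addr show` output from stdin so it can be tried without a live interface:

```shell
#!/bin/sh
# has_vip VIP: succeed if VIP appears as an "inet" address in
# "ip -o -4 addr show" output read from stdin.
has_vip() {
    awk -v vip="$1" '
        {
            for (i = 1; i < NF; i++)
                if ($i == "inet") {
                    split($(i + 1), a, "/")   # strip the /prefix length
                    if (a[1] == vip) found = 1
                }
        }
        END { exit (found ? 0 : 1) }'
}

# report_vips DEV VIP...: print which of the given VIPs are on DEV here.
report_vips() {
    dev=$1
    shift
    out=$(ip -o -4 addr show dev "$dev")
    for v in "$@"; do
        if printf '%s\n' "$out" | has_vip "$v"; then
            echo "$(uname -n) holds $v"
        fi
    done
}

if [ "$#" -gt 0 ]; then
    report_vips "$@"
fi
```

Running `sh vipcheck.sh eth0 172.16.31.88 172.16.31.188` (hypothetical file name) on each node should, at this point, report 172.16.31.88 on proxy and 172.16.31.188 on proxy2.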

On the DNS server, add a name that resolves to the second virtual IP, 172.16.31.88 (www already points to 172.16.31.188):

[root@proxy keepalived]# vim /var/named/stu31.com.zone

$TTL 600

$ORIGIN stu31.com.

@ IN SOA ns1.stu31.com. root.stu31.com. (

2014121801

1D

5M

1W

1H)

@ IN NS ns1.stu31.com.

ns1 IN A 172.16.31.52

www IN A 172.16.31.188

www1 IN A 172.16.31.88

Restart named, then test access from a client:
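The client test itself is not reproduced in this section. One way to run it from a shell is to request the name several times and count which backend answered. The sketch assumes each backend (tom1, tom2) serves a page whose first line identifies it; `tally` and `probe` are hypothetical helper names:

```shell
#!/bin/sh
# tally: count identical lines read from stdin and print "<line> <count>"
# in a stable (C-locale) order; empty lines are shown as "(empty)".
tally() {
    awk '{ k = ($0 == "" ? "(empty)" : $0); c[k]++ }
         END { for (k in c) print k, c[k] }' | LC_ALL=C sort
}

# probe URL [N]: fetch URL N times (default 10) and tally the first
# line of each response body.
probe() {
    n=${2:-10}
    i=0
    while [ "$i" -lt "$n" ]; do
        # Command substitution drops trailing newlines; printf adds exactly one.
        printf '%s\n' "$(curl -s "$1" | head -n 1)"
        i=$((i + 1))
    done | tally
}

if [ "$#" -gt 0 ]; then
    probe "$@"
fi
```

For example, once `dig +short www1.stu31.com` returns 172.16.31.88, `sh probe.sh http://www1.stu31.com/ 10` should list both backends if the nginx upstream spreads requests across them; repeating it after stopping nginx or keepalived on the node that holds the VIP shows whether failover is transparent to clients.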

With that, the dual-master nginx+keepalived high-availability load-balancing cluster is up and running.

This article comes from the blog “眼眸刻着你的微笑”; please keep this source attribution: http://dengaosky.blog.51cto.com/9215128/1965348
