2023/03/25 NCRE Level 2 Python comprehensive problem: using the jieba library and reading and writing files

Posted by 阿白啥也不会


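Problem description (main content reconstructed):

data.txt contains a passage of text (entirely in Chinese). The example used in this post is 《新一代人工智能伦理规范》 (Ethical Norms for New Generation Artificial Intelligence), released on September 25, 2021 by 国家新一代人工智能治理专业委员会 (the National Governance Committee for New Generation Artificial Intelligence).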

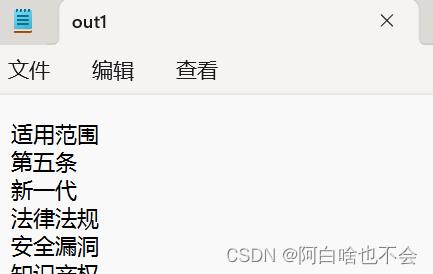

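1. Use the jieba library to extract the keywords that are at least 3 characters long (order does not matter) and write them to out1.txt, one per line.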

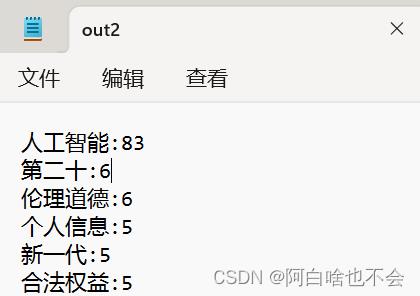

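2. Write each keyword and its number of occurrences to out2.txt (highest frequency first; keywords with the same frequency may appear in any order).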
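The two output layouts can be sketched without jieba at all. This is a minimal sketch, not the exam's reference answer: the helper names `write_keywords` and `write_counts` are hypothetical, the sample counts are made up by hand, and the `keyword:count` separator is an assumption that follows the solution code later in this post (the problem statement does not fix a separator). The files go to a temporary folder so the sketch runs anywhere.

```python
import os
import tempfile

def write_keywords(path, keywords):
    """Write one keyword per line (the out1.txt layout)."""
    with open(path, 'w', encoding='utf-8') as fh:
        for word in keywords:
            fh.write(word + '\n')

def write_counts(path, counts):
    """Write 'keyword:count' lines, highest count first (the out2.txt layout)."""
    ordered = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    with open(path, 'w', encoding='utf-8') as fh:
        for word, n in ordered:
            fh.write('%s:%d\n' % (word, n))

# Hypothetical, hand-written sample data (not real jieba output)
sample = {'人工智能': 3, '委员会': 2, '新一代': 1}

folder = tempfile.mkdtemp()  # temporary folder instead of the Desktop
out1 = os.path.join(folder, 'out1.txt')
out2 = os.path.join(folder, 'out2.txt')

write_keywords(out1, sample)  # iterating a dict yields its keys
write_counts(out2, sample)

with open(out2, encoding='utf-8') as fh:
    print(fh.read())
```

Passing `encoding='utf-8'` explicitly when writing keeps the Chinese text readable regardless of the system's default encoding.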

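1. The jieba library

① pip install

When I saw this in the exam I was stumped: I had honestly never heard of jieba. No need to panic, though, because we can learn it on the spot with help. The exam environment is already configured with jieba installed, so to reproduce the problem I will first cover how to install it.

Installing the jieba library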
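Before installing anything, it can help to check whether the package is already importable. The following is a minimal sketch; `is_installed` is a hypothetical helper name, and the install command it prints (`pip install jieba`) is meant to be run in a terminal, not at the Python prompt.

```python
import importlib.util

def is_installed(package_name):
    """Return True if the import system can locate the named top-level package."""
    return importlib.util.find_spec(package_name) is not None

if is_installed('jieba'):
    print('jieba is available')
else:
    # The command below belongs in a terminal (cmd, PowerShell, bash)
    print('jieba is missing; install it with: pip install jieba')
```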

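② help

Knowing how to use help is practically like being allowed to cheat (those who know, know). From the problem statement we can guess that we need a function from the jieba library, so we call help on the package to see what it contains. Looking mainly at the FUNCTIONS section, both cut and cut_for_search could solve the problem; I chose the latter. Its description reads: method of Tokenizer instance, Finer segmentation for search engines.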
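The full help output is long, so a shorter overview can be useful when time is tight. Here is a minimal sketch that lists a module's public callables with the first line of each docstring. `public_functions` is a hypothetical helper, and the standard-library module `json` is used only so that the sketch runs without jieba; in the exam one would pass `'jieba'` instead.

```python
import importlib
import inspect

def public_functions(module_name):
    """Map each public, non-class callable of a module to the first line of its docstring."""
    module = importlib.import_module(module_name)
    result = {}
    for name in dir(module):
        if name.startswith('_'):
            continue  # skip private and dunder names
        obj = getattr(module, name)
        if callable(obj) and not inspect.isclass(obj):
            doc = inspect.getdoc(obj) or ''
            result[name] = doc.splitlines()[0] if doc else ''
    return result

# 'json' stands in for 'jieba' here because it ships with Python
for name, summary in public_functions('json').items():
    print(name, '-', summary)
```

Once a promising name turns up, `help(module.name)` on that single function gives its full description without the rest of the package listing.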

>>> import jieba as j

>>> help(j)

Help on package jieba:

NAME

jieba

PACKAGE CONTENTS

__main__

_compat

analyse (package)

finalseg (package)

lac_small (package)

posseg (package)

CLASSES

builtins.object

Tokenizer

class Tokenizer(builtins.object)

| Tokenizer(dictionary=None)

|

| Methods defined here:

|

| __init__(self, dictionary=None)

| Initialize self. See help(type(self)) for accurate signature.

|

| __repr__(self)

| Return repr(self).

|

| add_word(self, word, freq=None, tag=None)

| Add a word to dictionary.

|

| freq and tag can be omitted, freq defaults to be a calculated value

| that ensures the word can be cut out.

|

| calc(self, sentence, DAG, route)

|

| check_initialized(self)

|

| cut(self, sentence, cut_all=False, HMM=True, use_paddle=False)

| The main function that segments an entire sentence that contains

| Chinese characters into separated words.

|

| Parameter:

| - sentence: The str(unicode) to be segmented.

| - cut_all: Model type. True for full pattern, False for accurate pattern.

| - HMM: Whether to use the Hidden Markov Model.

|

| cut_for_search(self, sentence, HMM=True)

| Finer segmentation for search engines.

|

| del_word(self, word)

| Convenient function for deleting a word.

|

| get_DAG(self, sentence)

|

| get_dict_file(self)

|

| initialize(self, dictionary=None)

|

| lcut(self, *args, **kwargs)

|

| lcut_for_search(self, *args, **kwargs)

|

| load_userdict(self, f)

| Load personalized dict to improve detect rate.

|

| Parameter:

| - f : A plain text file contains words and their ocurrences.

| Can be a file-like object, or the path of the dictionary file,

| whose encoding must be utf-8.

|

| Structure of dict file:

| word1 freq1 word_type1

| word2 freq2 word_type2

| ...

| Word type may be ignored

|

| set_dictionary(self, dictionary_path)

|

| suggest_freq(self, segment, tune=False)

| Suggest word frequency to force the characters in a word to be

| joined or splitted.

|

| Parameter:

| - segment : The segments that the word is expected to be cut into,

| If the word should be treated as a whole, use a str.

| - tune : If True, tune the word frequency.

|

| Note that HMM may affect the final result. If the result doesn't change,

| set HMM=False.

|

| tokenize(self, unicode_sentence, mode='default', HMM=True)

| Tokenize a sentence and yields tuples of (word, start, end)

|

| Parameter:

| - sentence: the str(unicode) to be segmented.

| - mode: "default" or "search", "search" is for finer segmentation.

| - HMM: whether to use the Hidden Markov Model.

|

| ----------------------------------------------------------------------

| Static methods defined here:

|

| gen_pfdict(f)

|

| ----------------------------------------------------------------------

| Data descriptors defined here:

|

| __dict__

| dictionary for instance variables (if defined)

|

| __weakref__

| list of weak references to the object (if defined)

FUNCTIONS

add_word(word, freq=None, tag=None) method of Tokenizer instance

Add a word to dictionary.

freq and tag can be omitted, freq defaults to be a calculated value

that ensures the word can be cut out.

calc(sentence, DAG, route) method of Tokenizer instance

cut(sentence, cut_all=False, HMM=True, use_paddle=False) method of Tokenizer instance

The main function that segments an entire sentence that contains

Chinese characters into separated words.

Parameter:

- sentence: The str(unicode) to be segmented.

- cut_all: Model type. True for full pattern, False for accurate pattern.

- HMM: Whether to use the Hidden Markov Model.

cut_for_search(sentence, HMM=True) method of Tokenizer instance

Finer segmentation for search engines.

del_word(word) method of Tokenizer instance

Convenient function for deleting a word.

disable_parallel()

enable_parallel(processnum=None)

Change the module's `cut` and `cut_for_search` functions to the

parallel version.

Note that this only works using dt, custom Tokenizer

instances are not supported.

get_DAG(sentence) method of Tokenizer instance

get_FREQ lambda k, d=None

get_dict_file() method of Tokenizer instance

initialize(dictionary=None) method of Tokenizer instance

lcut(*args, **kwargs) method of Tokenizer instance

lcut_for_search(*args, **kwargs) method of Tokenizer instance

load_userdict(f) method of Tokenizer instance

Load personalized dict to improve detect rate.

Parameter:

- f : A plain text file contains words and their ocurrences.

Can be a file-like object, or the path of the dictionary file,

whose encoding must be utf-8.

Structure of dict file:

word1 freq1 word_type1

word2 freq2 word_type2

...

Word type may be ignored

log(...)

log(x, [base=math.e])

Return the logarithm of x to the given base.

If the base not specified, returns the natural logarithm (base e) of x.

md5 = openssl_md5(...)

Returns a md5 hash object; optionally initialized with a string

setLogLevel(log_level)

set_dictionary(dictionary_path) method of Tokenizer instance

suggest_freq(segment, tune=False) method of Tokenizer instance

Suggest word frequency to force the characters in a word to be

joined or splitted.

Parameter:

- segment : The segments that the word is expected to be cut into,

If the word should be treated as a whole, use a str.

- tune : If True, tune the word frequency.

Note that HMM may affect the final result. If the result doesn't change,

set HMM=False.

tokenize(unicode_sentence, mode='default', HMM=True) method of Tokenizer instance

Tokenize a sentence and yields tuples of (word, start, end)

Parameter:

- sentence: the str(unicode) to be segmented.

- mode: "default" or "search", "search" is for finer segmentation.

- HMM: whether to use the Hidden Markov Model.

DATA

DEFAULT_DICT = None

DEFAULT_DICT_NAME = 'dict.txt'

DICT_WRITING = {}

PY2 = False

__license__ = 'MIT'

absolute_import = _Feature((2, 5, 0, 'alpha', 1), (3, 0, 0, 'alpha', 0...

check_paddle_install = {'is_paddle_installed': False}

default_encoding = 'utf-8'

default_logger = <Logger jieba (DEBUG)>

dt = <Tokenizer dictionary=None>

log_console = <StreamHandler <stderr> (NOTSET)>

pool = None

re_eng = re.compile('[a-zA-Z0-9]')

re_han_default = re.compile('([一-\u9fd5a-zA-Z0-9+#&\\._%\\-]+)')

re_skip_default = re.compile('(\r\n|\\s)')

re_userdict = re.compile('^(.+?)( [0-9]+)?( [a-z]+)?$')

string_types = (<class 'str'>,)

unicode_literals = _Feature((2, 6, 0, 'alpha', 2), (3, 0, 0, 'alpha', ...

user_word_tag_tab = {}

VERSION

0.42.1

FILE

d:\anaconda\lib\site-packages\jieba\__init__.py

cut(sentence, cut_all=False, HMM=True, use_paddle=False) method of Tokenizer instance

The main function that segments an entire sentence that contains

Chinese characters into separated words.

Parameter:

- sentence: The str(unicode) to be segmented.

- cut_all: Model type. True for full pattern, False for accurate pattern.

- HMM: Whether to use the Hidden Markov Model.

cut_for_search(sentence, HMM=True) method of Tokenizer instance

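Finer segmentation for search engines.

2. Solution

The approach is fairly simple, so I won't go over it in detail; the code is commented. The run output follows the code.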

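import jieba as j

# Read the text into one long string, dropping the line breaks
with open(r"C:\Users\hqh\Desktop\data.txt", 'r', encoding='utf-8') as f:
    g = ''
    for i in f:
        g += i.strip('\n')

c = list(j.cut_for_search(g))  # every token produced by the segmentation

# 1: write each keyword of length >= 3 on its own line
s = set()  # a set stores each keyword only once
for i in c:
    if len(i) >= 3:
        s.add(i)

with open(r"C:\Users\hqh\Desktop\out1.txt", 'w', encoding='utf-8') as out1:
    for i in s:
        out1.write(i)
        out1.write('\n')

# 2: count each keyword and write "keyword:count", most frequent first
d = {}
for i in s:
    d[i] = c.count(i)

# Sort by value; the result is a list of (keyword, count) tuples
v = sorted(d.items(), key=lambda x: x[1], reverse=True)

with open(r"C:\Users\hqh\Desktop\out2.txt", 'w', encoding='utf-8') as out2:
    for i in v:
        out2.write('%s:%d\n' % (i[0], i[1]))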

>>>

============= RESTART: C:/Users/hqh/Desktop/python自学/94jieba.py =============

Building prefix dict from the default dictionary ...

Loading model from cache C:\Users\hqh\AppData\Local\Temp\jieba.cache

Loading model cost 0.532 seconds.

Prefix dict has been built successfully.
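The counting step in the solution calls `c.count(i)` once per keyword, which rescans the whole token list each time. As an alternative (not the author's method), `collections.Counter` can tally everything in a single pass. A minimal sketch follows; `keyword_counts` is a hypothetical helper, and the token list is written by hand for illustration rather than produced by jieba.

```python
from collections import Counter

def keyword_counts(tokens, min_len=3):
    """Count tokens of at least min_len characters, most frequent first."""
    counts = Counter(t for t in tokens if len(t) >= min_len)
    return counts.most_common()  # list of (token, count), highest count first

# Hypothetical tokens standing in for list(jieba.cut_for_search(text))
tokens = ['人工智能', '伦理', '人工智能', '治理', '委员会', '新一代', '委员会', '人工智能']

for word, n in keyword_counts(tokens):
    print('%s:%d' % (word, n))
```

The keys of the resulting counts are exactly the distinct keywords, so the same structure covers both out1.txt (keywords only) and out2.txt (keywords with counts).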
