Hands-On: Installing and Deploying a Ceph Jewel Cluster on CentOS 7.3


I. Environment Preparation

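1. Install three CentOS 7.3 virtual machines

Downloads from the official site and from network drives are both very slow, so here is the Sohu mirror hosted in China: http://mirrors.sohu.com/centos/7.3.1611/isos/x86_64/CentOS-7-x86_64-DVD-1611.iso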

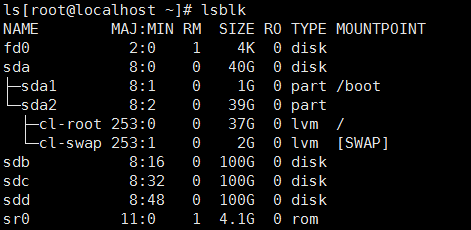

2. Add three 100 GB disks to each of the three installed virtual machines, as shown in the figure.

3. Configure the IP addresses

| ceph-1 | ceph-2 | ceph-3 |
|---|---|---|
| 192.168.42.200 | 192.168.42.201 | 192.168.42.202 |

Use the configuration file below as a reference when making the change (shown here for ceph-1):

    [root@ceph-1 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
    TYPE=Ethernet
    BOOTPROTO=none
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    NAME=ens33
    DEVICE=ens33
    ONBOOT=yes
    IPADDR=192.168.42.200
    GATEWAY=192.168.42.2
    NETMASK=255.255.255.0
    DNS1=8.8.8.8
    DNS2=8.8.4.4
    [root@ceph-1 ~]# systemctl restart network
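Since the three nodes differ only in `IPADDR`, the file can also be generated rather than edited by hand. The following is a minimal sketch, not the author's method: `render_ifcfg` is a hypothetical helper, and the gateway, netmask, and DNS values are simply copied from the example above.

```bash
#!/usr/bin/env bash
# render_ifcfg is a hypothetical helper (not part of CentOS).
# It prints a static ifcfg file for the given IP and, optionally, device name.
render_ifcfg() {
    local ip="$1" dev="${2:-ens33}"
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=none
DEFROUTE=yes
PEERDNS=yes
PEERROUTES=yes
NAME=${dev}
DEVICE=${dev}
ONBOOT=yes
IPADDR=${ip}
GATEWAY=192.168.42.2
NETMASK=255.255.255.0
DNS1=8.8.8.8
DNS2=8.8.4.4
EOF
}

# Preview the file for ceph-2. To apply it on the node, redirect the output
# to /etc/sysconfig/network-scripts/ifcfg-ens33 and restart the network service.
render_ifcfg 192.168.42.201
```

Printing to standard output first makes it easy to review the result before overwriting the real file.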

4. Change the yum repositories. The official repositories can be very slow, so you can switch to the Aliyun mirrors.

    [root@ceph-1 ~]# yum clean all
    [root@ceph-1 ~]# curl http://mirrors.aliyun.com/repo/Centos-7.repo >/etc/yum.repos.d/CentOS-Base.repo
    [root@ceph-1 ~]# curl http://mirrors.aliyun.com/repo/epel-7.repo >/etc/yum.repos.d/epel.repo
    [root@ceph-1 ~]# sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo
    [root@ceph-1 ~]# sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo
    [root@ceph-1 ~]# yum makecache

5. Set the hostname and install some packages

    [root@ceph-1 ~]#
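The source does not show the commands for this step. As a hedged sketch only: on CentOS 7 the hostname is normally set with `hostnamectl set-hostname`, and later steps use `wget` and `vim`, so those two packages are a plausible guess for "some packages". The helper names below are hypothetical, and the script only prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Assumption: the article omits these commands, so everything here is illustrative.

# Map a node IP to its hostname, following the /etc/hosts entries in step 6.
hostname_for_ip() {
    case "$1" in
        192.168.42.200) echo ceph-1 ;;
        192.168.42.201) echo ceph-2 ;;
        192.168.42.202) echo ceph-3 ;;
        *) return 1 ;;
    esac
}

# Print (not run) the setup commands for one node.
# The package list (wget, vim) is a guess based on tools used later in the article.
setup_cmds() {
    local name
    name="$(hostname_for_ip "$1")" || { echo "unknown IP: $1" >&2; return 1; }
    echo "hostnamectl set-hostname ${name}"
    echo "yum -y install wget vim"
}

setup_cmds 192.168.42.200
```

Run the printed commands as root on the matching node once they look right.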

6. As a final step, add the IP address of every host to /etc/hosts on each machine

    [root@ceph-1 ~]# vim /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.42.200 ceph-1
    192.168.42.201 ceph-2
    192.168.42.202 ceph-3
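Because the same three lines have to be added on every node, a scripted, repeatable version may be more convenient than editing in `vim`. This is a sketch under the assumption that the entries should be appended only when they are not already present; `add_cluster_hosts` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# add_cluster_hosts is a hypothetical helper: it appends each cluster entry
# to the given file only if that exact line is not there yet.
add_cluster_hosts() {
    local file="${1:-/etc/hosts}" entry
    for entry in "192.168.42.200 ceph-1" \
                 "192.168.42.201 ceph-2" \
                 "192.168.42.202 ceph-3"; do
        grep -qxF "$entry" "$file" || echo "$entry" >> "$file"
    done
}

# Try it on a scratch file first; call it with no argument (as root)
# to edit the real /etc/hosts.
scratch="$(mktemp)"
add_cluster_hosts "$scratch"
add_cluster_hosts "$scratch"   # the second call adds nothing
cat "$scratch"
```

Running the function twice is safe, which matters when a deployment is retried after a failure.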

7. Reboot the servers so that the changes take effect.

II. Cluster Setup

1. The cluster layout is as follows:

| Host | IP | Roles |
|---|---|---|
| ceph-1 | 192.168.42.200 | deploy, 1 mon, 3 OSDs |
| ceph-2 | 192.168.42.201 | 1 mon, 3 OSDs |
| ceph-3 | 192.168.42.202 | 1 mon, 3 OSDs |

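2. Clean up the environment

If a previous deployment failed, there is no need to uninstall the Ceph client or rebuild the virtual machines. Running the following commands on every node returns the environment to the state it was in right after the Ceph client was installed. Cleaning up is strongly recommended before building on top of an old cluster; otherwise all kinds of errors can occur.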

    [root@ceph-1 cluster]# ps aux|grep ceph |awk '{print $2}'|xargs kill -9
    [root@ceph-1 cluster]# ps aux|grep ceph    # make sure all processes have exited
    ps -ef|grep ceph
    # Make sure every ceph process is stopped at this point! If some are still running, repeat the commands above.
    umount /var/lib/ceph/osd/*
    rm -rf /var/lib/ceph/osd/*
    rm -rf /var/lib/ceph/mon/*
    rm -rf /var/lib/ceph/mds/*
    rm -rf /var/lib/ceph/bootstrap-mds/*
    rm -rf /var/lib/ceph/bootstrap-osd/*
    rm -rf /var/lib/ceph/bootstrap-rgw/*
    rm -rf /var/lib/ceph/tmp/*
    rm -rf /etc/ceph/*
    rm -rf /var/run/ceph/*

3. Configure the yum repositories and install Ceph

Run the following commands on every host:

    yum clean all
    rm -rf /etc/yum.repos.d/*.repo
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
    sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo
    sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo
    sed -i 's/$releasever/7/g' /etc/yum.repos.d/CentOS-Base.repo

4. Add the Ceph repository

    vim /etc/yum.repos.d/ceph.repo

Add the following content:

    [ceph]
    name=ceph
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/
    gpgcheck=0
    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/
    gpgcheck=0
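The repository file can also be written non-interactively, which is handy when the step is repeated on all three hosts. A minimal sketch, assuming the same two repository sections as above; `write_ceph_repo` is a hypothetical helper, and the demo writes to a temporary file rather than to /etc/yum.repos.d.

```bash
#!/usr/bin/env bash
# write_ceph_repo is a hypothetical helper that writes the Jewel repository
# definition to the given path (default: /etc/yum.repos.d/ceph.repo).
write_ceph_repo() {
    local target="${1:-/etc/yum.repos.d/ceph.repo}"
    cat > "$target" <<'EOF'
[ceph]
name=ceph
baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
EOF
}

# Write to a scratch file and show it; call write_ceph_repo with no argument
# (as root) to create the real repository file.
preview="$(mktemp)"
write_ceph_repo "$preview"
cat "$preview"
```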

5. Install the Ceph client:

    yum makecache
    yum install ceph ceph-radosgw rdate -y

Disable SELinux and firewalld:

    sed -i 's/SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld
    systemctl disable firewalld

Synchronize the time on all nodes:

    yum -y install rdate
    rdate -s time-a.nist.gov
    echo rdate -s time-a.nist.gov >> /etc/rc.d/rc.local
    chmod +x /etc/rc.d/rc.local
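One detail of the `sed` command above: the pattern `SELINUX=.*` is not anchored, so it also rewrites any comment line that happens to contain `SELINUX=`. That is harmless, but an anchored variant leaves comments alone. The sketch below demonstrates this on a sample file; `disable_selinux_in` is a hypothetical helper and the sample content is made up for the demonstration.

```bash
#!/usr/bin/env bash
# disable_selinux_in is a hypothetical helper: it sets SELINUX=disabled in the
# given file, touching only lines that start with "SELINUX=".
disable_selinux_in() {
    local file="$1" tmp
    tmp="$(mktemp)"
    # Write through a temp file, then copy back so the original file's
    # permissions and ownership are kept.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstrate on a sample file instead of the real /etc/selinux/config.
sample="$(mktemp)"
printf '%s\n' \
    '# SELINUX= can take one of these three values:' \
    'SELINUX=enforcing' \
    'SELINUXTYPE=targeted' > "$sample"
disable_selinux_in "$sample"
cat "$sample"
```

As in the original steps, the config change only applies after a reboot; `setenforce 0` covers the running system in the meantime.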

6. Start the deployment

Install ceph-deploy on the deployment node (ceph-1). From here on, "the deployment node" always means ceph-1:

    [root@ceph-1 ~]# yum -y install ceph-deploy
    [root@ceph-1 ~]# ceph-deploy --version
    1.5.34
    [root@ceph-1 ~]# ceph -v
    ceph version 10.2.2 (45107e21c568dd033c2f0a3107dec8f0b0e58374)

7. Create a deployment directory on the deployment node and begin deploying:

    [root@ceph-1 ~]# cd
    [root@ceph-1 ~]# mkdir cluster
    [root@ceph-1 ~]# cd cluster/
    [root@ceph-1 cluster]# ceph-deploy new ceph-1 ceph-2 ceph-3

If you have not already run ssh-copy-id for each node, you will be prompted for the passwords. The log looks like this:

    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (1.5.34): /usr/bin/ceph-deploy new ceph-1 ceph-2 ceph-3
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] func : <function new at 0x7f91781f96e0>
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f917755ca28>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] ssh_copykey : True
    [ceph_deploy.cli][INFO ] mon : ['ceph-1', 'ceph-2', 'ceph-3']
    ..
    ..
    [ceph_deploy.new][WARNIN] could not connect via SSH
    [ceph_deploy.new][INFO ] will connect again with password prompt
    The authenticity of host 'ceph-2 (192.168.57.223)' can't be established.
    ECDSA key fingerprint is ef:e2:3e:38:fa:47:f4:61:b7:4d:d3:24:de:d4:7a:54.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'ceph-2,192.168.57.223' (ECDSA) to the list of known hosts.
    root@ceph-2's password:
    [ceph-2][DEBUG ] connected to host: ceph-2
    ..
    ..
    [ceph_deploy.new][DEBUG ] Resolving host ceph-3
    [ceph_deploy.new][DEBUG ] Monitor ceph-3 at 192.168.57.224
    [ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-1', 'ceph-2', 'ceph-3']
    [ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.57.222', '192.168.57.223', '192.168.57.224']
    [ceph_deploy.new][DEBUG ] Creating a random mon key...
    [ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...
    [ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...
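The password prompts in the log can be avoided by distributing an SSH key from the deployment node beforehand. The following sketch only prints the commands it would run; logging in as root and using an RSA key without a passphrase are assumptions, and `ssh_setup_cmds` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# ssh_setup_cmds is a hypothetical helper: it prints the commands that would
# set up key-based SSH from the deployment node to every node named in "$@".
# Assumptions: root login, RSA key with an empty passphrase.
ssh_setup_cmds() {
    local node
    echo "ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa"
    for node in "$@"; do
        echo "ssh-copy-id root@${node}"
    done
}

ssh_setup_cmds ceph-1 ceph-2 ceph-3
```

Each `ssh-copy-id` call asks for that node's password once; after that, `ceph-deploy` can connect without prompting.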

At this point the directory contains:

    [root@ceph-1 cluster]# ls
    ceph.conf  ceph-deploy-ceph.log  ceph.mon.keyring

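8. Add public_network to ceph.conf according to your own IP configuration, and slightly widen the clock drift allowed between monitors (the default is 0.05 s; it is set to 2 s here). The output shown in this and the following steps was captured on a 192.168.57.0/24 network; for the addresses used in this guide the value would be 192.168.42.0/24.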

    [root@ceph-1 cluster]# echo public_network=192.168.57.0/24 >> ceph.conf
    [root@ceph-1 cluster]# echo mon_clock_drift_allowed = 2 >> ceph.conf
    [root@ceph-1 cluster]# cat ceph.conf
    [global]
    fsid = 0248817a-b758-4d6b-a217-11248b098e10
    mon_initial_members = ceph-1, ceph-2, ceph-3
    mon_host = 192.168.57.222,192.168.57.223,192.168.57.224
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network=192.168.57.0/24
    mon_clock_drift_allowed = 2
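To adapt the `public_network` value to your own addresses, the /24 network can be derived from any node IP. A minimal sketch, assuming a 255.255.255.0 netmask as in the ifcfg example; `subnet24` and `set_conf_option` are hypothetical helpers, and the demo edits a temporary file rather than the real ceph.conf.

```bash
#!/usr/bin/env bash
# subnet24 (hypothetical): derive the /24 network from a node address.
# Assumes a 255.255.255.0 netmask.
subnet24() {
    echo "${1%.*}.0/24"
}

# set_conf_option (hypothetical): append "key = value" to a config file
# unless a line for that key already exists.
set_conf_option() {
    local file="$1" key="$2" value="$3"
    grep -q "^${key}[ =]" "$file" || echo "${key} = ${value}" >> "$file"
}

# Demonstrate on a scratch file that stands in for ceph.conf.
conf="$(mktemp)"
echo "[global]" > "$conf"
set_conf_option "$conf" public_network "$(subnet24 192.168.42.200)"
set_conf_option "$conf" mon_clock_drift_allowed 2
cat "$conf"
```

Skipping keys that are already present avoids duplicate lines when the step is repeated after a failed attempt.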

9. Deploy the monitors:

    [root@ceph-1 cluster]# ceph-deploy mon create-initial
    ..
    .. (log output omitted)
    [root@ceph-1 cluster]# ls
    ceph.bootstrap-mds.keyring  ceph.bootstrap-rgw.keyring  ceph.conf             ceph.mon.keyring
    ceph.bootstrap-osd.keyring  ceph.client.admin.keyring   ceph-deploy-ceph.log
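A quick way to confirm that this step succeeded is to check that the keyrings from the listing above were actually gathered. This is a sketch; `missing_keyrings` is a hypothetical helper, and the file names are taken from the `ls` output in the article.

```bash
#!/usr/bin/env bash
# missing_keyrings (hypothetical): print the name of every keyring that
# should exist in the deployment directory after `mon create-initial`
# but does not. Prints nothing when all of them are present.
missing_keyrings() {
    local dir="${1:-.}" f
    for f in ceph.client.admin.keyring \
             ceph.bootstrap-osd.keyring \
             ceph.bootstrap-mds.keyring \
             ceph.bootstrap-rgw.keyring; do
        [ -f "${dir}/${f}" ] || echo "$f"
    done
}

# Check the deployment directory used in this article.
missing_keyrings "$HOME/cluster"
```

If any name is printed, the monitors most likely did not form a quorum, and the OSD steps that follow will fail.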

10. Check the cluster status:

    [root@ceph-1 cluster]# ceph -s
        cluster 0248817a-b758-4d6b-a217-11248b098e10
         health HEALTH_ERR
                no osds
                Monitor clock skew detected
         monmap e1: 3 mons at {ceph-1=192.168.57.222:6789/0,ceph-2=192.168.57.223:6789/0,ceph-3=192.168.57.224:6789/0}
                election epoch 6, quorum 0,1,2 ceph-1,ceph-2,ceph-3
         osdmap e1: 0 osds: 0 up, 0 in
                flags sortbitwise
          pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects
                0 kB used, 0 kB / 0 kB avail
                      64 creating

11. Deploy the OSDs:

    ceph-deploy --overwrite-conf osd prepare \
        ceph-1:/dev/sdb ceph-1:/dev/sdc ceph-1:/dev/sdd \
        ceph-2:/dev/sdb ceph-2:/dev/sdc ceph-2:/dev/sdd \
        ceph-3:/dev/sdb ceph-3:/dev/sdc ceph-3:/dev/sdd \
        --zap-disk
    ceph-deploy --overwrite-conf osd activate \
        ceph-1:/dev/sdb1 ceph-1:/dev/sdc1 ceph-1:/dev/sdd1 \
        ceph-2:/dev/sdb1 ceph-2:/dev/sdc1 ceph-2:/dev/sdd1 \
        ceph-3:/dev/sdb1 ceph-3:/dev/sdc1 ceph-3:/dev/sdd1
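The long `HOST:DEVICE` lists follow a regular pattern (three hosts, three disks each), so they can be generated rather than typed. A sketch that only prints the resulting command lines; `osd_targets` is a hypothetical helper, and the host and disk names are the ones used in this article.

```bash
#!/usr/bin/env bash
# osd_targets (hypothetical): print HOST:/dev/DISK pairs for every
# host/disk combination, with an optional partition suffix such as "1".
osd_targets() {
    local suffix="$1" host disk list=""
    for host in ceph-1 ceph-2 ceph-3; do
        for disk in sdb sdc sdd; do
            list+="${host}:/dev/${disk}${suffix} "
        done
    done
    echo "${list% }"   # drop the trailing space
}

# Preview the two command lines instead of running them.
echo "ceph-deploy --overwrite-conf osd prepare $(osd_targets '') --zap-disk"
echo "ceph-deploy --overwrite-conf osd activate $(osd_targets 1)"
```

Previewing first is worthwhile here because `--zap-disk` wipes the listed devices.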

I ran into a small problem during deployment: one OSD failed to come up. Redeploying that OSD after all the others had finished solved it. If nothing goes wrong, the cluster status should look like this:

    [root@ceph-1 cluster]# ceph -s
        cluster 0248817a-b758-4d6b-a217-11248b098e10
         health HEALTH_WARN
                too few PGs per OSD (21 < min 30)
         monmap e1: 3 mons at {ceph-1=192.168.57.222:6789/0,ceph-2=192.168.57.223:6789/0,ceph-3=192.168.57.224:6789/0}
                election epoch 22, quorum 0,1,2 ceph-1,ceph-2,ceph-3
         osdmap e45: 9 osds: 9 up, 9 in
                flags sortbitwise
          pgmap v82: 64 pgs, 1 pools, 0 bytes data, 0 objects
                273 MB used, 16335 GB / 16336 GB avail
                      64 active+clean

12. To clear this warning, simply increase the number of PGs in the rbd pool:

    [root@ceph-1 cluster]# ceph osd pool set rbd pg_num 128
    set pool 0 pg_num to 128
    [root@ceph-1 cluster]# ceph osd pool set rbd pgp_num 128
    set pool 0 pgp_num to 128
    [root@ceph-1 cluster]# ceph -s
        cluster 0248817a-b758-4d6b-a217-11248b098e10
         health HEALTH_ERR
                19 pgs are stuck inactive for more than 300 seconds
                12 pgs peering
                19 pgs stuck inactive
         monmap e1: 3 mons at {ceph-1=192.168.57.222:6789/0,ceph-2=192.168.57.223:6789/0,ceph-3=192.168.57.224:6789/0}
                election epoch 22, quorum 0,1,2 ceph-1,ceph-2,ceph-3
         osdmap e49: 9 osds: 9 up, 9 in
                flags sortbitwise
          pgmap v96: 128 pgs, 1 pools, 0 bytes data, 0 objects
                308 MB used, 18377 GB / 18378 GB avail
                     103 active+clean
                      12 peering
                       9 creating
                       4 activating
    # The new PGs are still being created and peered; check again shortly afterwards.
    [root@ceph-1 cluster]# ceph -s
        cluster 0248817a-b758-4d6b-a217-11248b098e10
         health HEALTH_OK
         monmap e1: 3 mons at {ceph-1=192.168.57.222:6789/0,ceph-2=192.168.57.223:6789/0,ceph-3=192.168.57.224:6789/0}
                election epoch 22, quorum 0,1,2 ceph-1,ceph-2,ceph-3
         osdmap e49: 9 osds: 9 up, 9 in
                flags sortbitwise
          pgmap v99: 128 pgs, 1 pools, 0 bytes data, 0 objects
                310 MB used, 18377 GB / 18378 GB avail
                     128 active+clean
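The figure in the warning (21 < min 30) can be reproduced with simple arithmetic: PG count times replica count, divided by the number of OSDs. The sketch below assumes the rbd pool keeps 3 replicas, which the article does not state; `pgs_per_osd` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# pgs_per_osd (hypothetical): estimate PG replicas per OSD as
# pg_num * replicas / osd_count, using integer division.
# Assumption: replicas defaults to 3 (not stated in the article).
pgs_per_osd() {
    local pg_num="$1" osds="$2" replicas="${3:-3}"
    echo $(( pg_num * replicas / osds ))
}

pgs_per_osd 64 9     # before the change: 64 PGs on 9 OSDs
pgs_per_osd 128 9    # after the change: 128 PGs on 9 OSDs
```

Under that assumption, 64 PGs give 21 per OSD, matching the warning, while 128 PGs give 42, which is above the minimum of 30.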

At this point the cluster deployment is complete.

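13. Pushing the configuration

Do not edit /etc/ceph/ceph.conf directly on an individual node. Instead, edit the copy in the deployment directory on the deployment node (here ceph-1:/root/cluster/ceph.conf). Once a cluster grows to dozens of nodes, editing each one by hand is impractical; pushing the file is fast and safe.

After editing, run the following command to push the conf file to every node:

    [root@ceph-1 cluster]# ceph-deploy --overwrite-conf config push ceph-1 ceph-2 ceph-3

The monitor service on each node then has to be restarted; see the next section.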

14. Starting and stopping mon and osd services

    # monitor start/stop/restart
    # ceph-1 is the hostname of the node the monitor runs on.
    systemctl start ceph-mon@ceph-1.service
    systemctl restart ceph-mon@ceph-1.service
    systemctl stop ceph-mon@ceph-1.service

    # OSD start/stop/restart
    # 0 is the id of an OSD on this node; look it up with `ceph osd tree`.
    systemctl start ceph-osd@0.service
    systemctl restart ceph-osd@0.service
    systemctl stop ceph-osd@0.service

    [root@ceph-1 cluster]# ceph osd tree
    ID WEIGHT   TYPE NAME       UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 17.94685 root default
    -2  5.98228     host ceph-1
     0  1.99409         osd.0        up  1.00000          1.00000
     1  1.99409         osd.1        up  1.00000          1.00000
     8  1.99409         osd.2        up  1.00000          1.00000
    -3  5.98228     host ceph-2
     2  1.99409         osd.3        up  1.00000          1.00000
     3  1.99409         osd.4        up  1.00000          1.00000
     4  1.99409         osd.5        up  1.00000          1.00000
    -4  5.98228     host ceph-3
     5  1.99409         osd.6        up  1.00000          1.00000
     6  1.99409         osd.7        up  1.00000          1.00000
     7  1.99409         osd.8        up  1.00000          1.00000
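After a config push, the monitors on all three nodes need a restart, and each unit name contains its own hostname. The sketch below prints one command per node from the deployment node; root SSH access to every node is an assumption, and `mon_restart_cmds` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# mon_restart_cmds (hypothetical): print one restart command per monitor
# node. The unit name follows the ceph-mon@<hostname>.service pattern.
# Assumption: the deployment node can SSH to each node as root.
mon_restart_cmds() {
    local node
    for node in "$@"; do
        echo "ssh root@${node} systemctl restart ceph-mon@${node}.service"
    done
}

mon_restart_cmds ceph-1 ceph-2 ceph-3
```

Restarting the monitors one at a time, and checking `ceph -s` in between, keeps a quorum available throughout.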
