OpenCV Project in Practice 20: Recognizing Digits 0 to 5 with One Hand

Posted by 夏天是冰红茶


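0. Project Introduction

Today's project recognizes the digits 0 to 5 shown with one hand and displays the matching picture and number in a window in real time.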

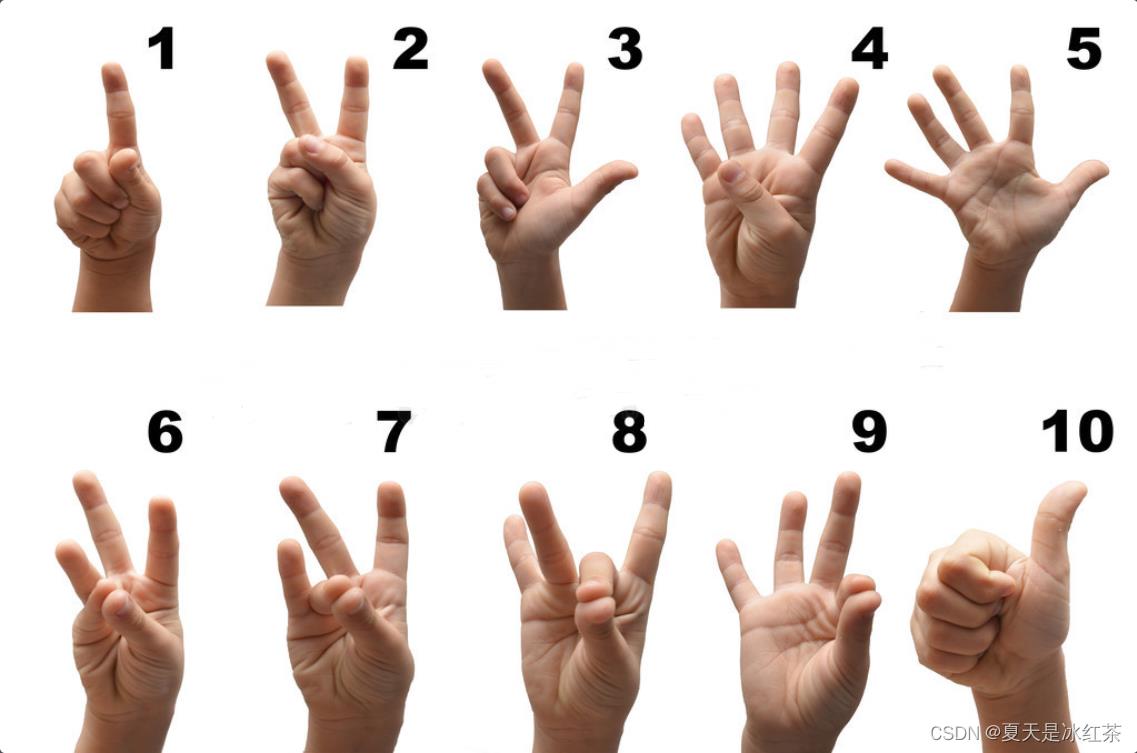

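I spent a long time online looking for a picture of hand gestures for numbers. To keep this project simple I only show 0 to 5; if you are interested you can add the rest yourself, since the principle is simple.

1. Results

One-hand recognition of the digits 0 to 5 works, and the real-time display is responsive.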

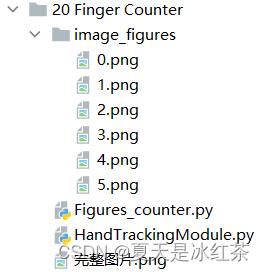

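2. Project Setup

In the image_figures folder, I manually cropped 完整图片.png into separate images for 0 to 5, each 220×300 pixels (width × height). You do not have to crop them to a uniform size as I did; a way around that is described later.

3. Project Code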

HandTrackingModule.py

import cv2
import mediapipe as mp
import math
import time

class handDetector:
    def __init__(self, mode=False, maxHands=2, detectionCon=0.5, minTrackCon=0.5):
        self.mode = mode
        self.maxHands = maxHands
        self.detectionCon = detectionCon
        self.minTrackCon = minTrackCon

        self.mpHands = mp.solutions.hands
        self.hands = self.mpHands.Hands(static_image_mode=self.mode, max_num_hands=self.maxHands,
                                        min_detection_confidence=self.detectionCon,
                                        min_tracking_confidence=self.minTrackCon)
        self.mpDraw = mp.solutions.drawing_utils
        # Landmark indices of the five fingertips: thumb, index, middle, ring, pinky
        self.tipIds = [4, 8, 12, 16, 20]
        self.fingers = []
        self.lmList = []

    def findHands(self, img, draw=True):
        # MediaPipe expects RGB input, while OpenCV frames are BGR
        imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        self.results = self.hands.process(imgRGB)
        # print(results.multi_hand_landmarks)

        if self.results.multi_hand_landmarks:
            for handLms in self.results.multi_hand_landmarks:
                if draw:
                    self.mpDraw.draw_landmarks(img, handLms,
                                               self.mpHands.HAND_CONNECTIONS)
        return img

    def findPosition(self, img, handNo=0, draw=True):
        self.lmList = []
        bbox = 0  # stays 0 when no hand is detected
        if self.results.multi_hand_landmarks:
            myHand = self.results.multi_hand_landmarks[handNo]
            xList = []
            yList = []
            for id, lm in enumerate(myHand.landmark):
                # print(id, lm)
                # Landmarks are normalized to [0, 1]; convert them to pixel coordinates
                h, w, c = img.shape
                cx, cy = int(lm.x * w), int(lm.y * h)
                xList.append(cx)
                yList.append(cy)
                # print(id, cx, cy)
                self.lmList.append([id, cx, cy])
                if draw:
                    cv2.circle(img, (cx, cy), 5, (255, 0, 255), cv2.FILLED)
            xmin, xmax = min(xList), max(xList)
            ymin, ymax = min(yList), max(yList)
            bbox = xmin, ymin, xmax, ymax

            if draw:
                cv2.rectangle(img, (xmin - 20, ymin - 20), (xmax + 20, ymax + 20),
                              (0, 255, 0), 2)
        return self.lmList, bbox

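    def fingersUp(self):
        fingers = []
        # Thumb: compare the x coordinate of the tip with the joint below it.
        # The direction of this test depends on which hand is shown and on
        # whether the frame is mirrored.
        if self.lmList[self.tipIds[0]][1] > self.lmList[self.tipIds[0] - 1][1]:
            fingers.append(1)
        else:
            fingers.append(0)
        # Other four fingers: a finger is up when its tip is above
        # (has a smaller y than) the joint two landmarks below it.
        for id in range(1, 5):
            if self.lmList[self.tipIds[id]][2] < self.lmList[self.tipIds[id] - 2][2]:
                fingers.append(1)
            else:
                fingers.append(0)
        # totalFingers = fingers.count(1)
        return fingers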

    def findDistance(self, p1, p2, img=None):
        x1, y1 = self.lmList[p1][1:]
        x2, y2 = self.lmList[p2][1:]
        cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
        length = math.hypot(x2 - x1, y2 - y1)
        info = (x1, y1, x2, y2, cx, cy)
        if img is not None:
            cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)
            cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)
            cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)
            cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)
            return length, info, img
        else:
            return length, info

def main():
    pTime = 0
    cTime = 0
    cap = cv2.VideoCapture(0)
    detector = handDetector()
    while True:
        success, img = cap.read()
        if not success:  # stop if the camera returns no frame
            break
        img = detector.findHands(img)
        lmList, bbox = detector.findPosition(img)
        if len(lmList) != 0:
            print(lmList[4])

        cTime = time.time()
        fps = 1 / (cTime - pTime)
        pTime = cTime

        cv2.putText(img, str(int(fps)), (10, 70), cv2.FONT_HERSHEY_PLAIN, 3,
                    (255, 0, 255), 3)

        cv2.imshow("Image", img)
        k = cv2.waitKey(1)
        if k == 27:  # Esc key
            break
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
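
The rule used by fingersUp can be tried without a camera. The sketch below is a standalone re-statement of that rule, not part of the module: the function name fingers_up and the synthetic landmark values are made up for illustration, and the landmark list follows the [id, x, y] layout that findPosition produces.

```python
# Landmark indices of the five fingertips (thumb, index, middle, ring, pinky),
# the same values as tipIds in handDetector.
TIP_IDS = [4, 8, 12, 16, 20]


def fingers_up(lm_list):
    """Return five 0/1 flags from a list of [id, x, y] landmarks."""
    fingers = []
    # Thumb: tip (4) compared with the joint below it (3) along x.
    fingers.append(1 if lm_list[TIP_IDS[0]][1] > lm_list[TIP_IDS[0] - 1][1] else 0)
    # Other fingers: tip compared with the joint two landmarks below it along y.
    # Image y grows downward, so "up" means a smaller y.
    for tip in TIP_IDS[1:]:
        fingers.append(1 if lm_list[tip][2] < lm_list[tip - 2][2] else 0)
    return fingers


# Hypothetical landmarks: all 21 points at (100, 200), then the index and
# middle fingertips are moved up to y = 100.
landmarks = [[i, 100, 200] for i in range(21)]
landmarks[8][2] = 100
landmarks[12][2] = 100

flags = fingers_up(landmarks)
print(flags)           # [0, 1, 1, 0, 0]
print(flags.count(1))  # 2
```

The count of ones is what the counter script below uses to choose which digit image to show.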

Figures_counter.py

import os
import cv2
import mediapipe as mp
import time
import HandTrackingModule as htm

class fpsReader():
    def __init__(self):
        self.pTime = time.time()

    def FPS(self, img=None, pos=(20, 50), color=(255, 255, 0), scale=3, thickness=3):
        cTime = time.time()
        # Guard against a zero time delta rather than relying on a bare except,
        # so the return shape stays the same for the caller.
        elapsed = cTime - self.pTime
        fps = 1 / elapsed if elapsed > 0 else 0
        self.pTime = cTime
        if img is None:
            return fps
        else:
            cv2.putText(img, f'FPS: {int(fps)}', pos, cv2.FONT_HERSHEY_PLAIN,
                        scale, color, thickness)
            return fps, img

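fpsReader = fpsReader()
cap = cv2.VideoCapture(0)
Wcam, Hcam = 980, 980
cap.set(cv2.CAP_PROP_FRAME_WIDTH, Wcam)    # property id 3
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, Hcam)   # property id 4
cap.set(cv2.CAP_PROP_BRIGHTNESS, 150)      # property id 10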

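img_path = "image_figures"
# os.listdir does not guarantee any order, so sort the names to keep
# the list index aligned with the digit in each file name.
mulu = sorted(os.listdir(img_path))
print(mulu)
Laylist = []
for path in mulu:
    image = cv2.imread(f"{img_path}/{path}")
    Laylist.append(image)

detector = htm.handDetector(detectionCon=0.75)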

while 1:
    success, img = cap.read()
    if not success:  # stop if the camera returns no frame
        break
    detector.findHands(img)
    lmList, _ = detector.findPosition(img, draw=False)
    if len(lmList) != 0:
        fingerup = detector.fingersUp()
        print(fingerup)
        all_figures = fingerup.count(1)
        print(all_figures)
        # Paste the matching digit image into the top-left corner, sized by its own shape
        h, w, _ = Laylist[all_figures].shape
        img[0:h, 0:w] = Laylist[all_figures]
        # img[0:300,0:220]=Laylist[0]
        cv2.rectangle(img, (0, 350), (220, 550), (0, 255, 0), cv2.FILLED)
        cv2.putText(img, str(all_figures), (45, 510), cv2.FONT_HERSHEY_COMPLEX, 6, (0, 0, 255), 25)

    # Display the frame rate
    fps, img = fpsReader.FPS(img, pos=(880, 50))
    cv2.imshow("image", img)
    k = cv2.waitKey(1)
    if k == 27:  # Esc key
        break

cap.release()
cv2.destroyAllWindows()

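The HandTrackingModule.py file here is the same as in the previous installment and needs no changes.

Because I named the cropped images in order from 0 to 5, the indices of Laylist line up with the digits, so no further changes are needed. The size of each image can also be read directly from its shape, but I did not think of that at the time, which is why I cropped all the images to the same size.

4. Project Resources

GitHub: OpenCV Project in Practice 20: Recognizing Digits 0 to 5 with One Hand

5. Summary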
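
The index-matching idea depends on the file order, so here is a minimal sketch of two helpers that make it more robust. It assumes the images are named by their digit (for example 0.png to 5.png, which is my guess at the naming), and both function names are hypothetical, not part of the project.

```python
from pathlib import Path


def order_by_digit(file_names):
    """Sort file names by the integer in their stem, so '10.png' follows '2.png'."""
    return sorted(file_names, key=lambda name: int(Path(name).stem))


def paste_region(overlay_shape, frame_shape):
    """Return (h, w) of the overlay, clipped so it fits in the frame's top-left corner."""
    h = min(overlay_shape[0], frame_shape[0])
    w = min(overlay_shape[1], frame_shape[1])
    return h, w


# Assumed file names; a plain string sort would put '10.png' before '2.png'.
names = ["3.png", "10.png", "0.png", "2.png", "1.png"]
print(order_by_digit(names))  # ['0.png', '1.png', '2.png', '3.png', '10.png']

# A 300x220 overlay on a 480x640 frame fits as-is.
print(paste_region((300, 220, 3), (480, 640, 3)))  # (300, 220)
# A 600x220 overlay on the same frame is clipped to the frame height.
print(paste_region((600, 220, 3), (480, 640, 3)))  # (480, 220)
```

With the clipped size, the paste in the main loop would become img[0:h, 0:w] = overlay[0:h, 0:w], which works for images of any size.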

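Here are two ways to recognize more digits (0 to 10). The simplest is to use both hands: the detector already tracks up to two hands (maxHands=2), so most of the work is preparing the extra images. Note that findPosition reads one hand at a time through its handNo argument, so the finger counts of the two hands still have to be added together. The second way follows the picture at the top of this post: the variable fingerup is a list of length 5 such as [0,0,0,0,0]; print it for each gesture, then match the patterns with if conditions. Where I live the most common convention is the North China set of number gestures, but use whichever one you are used to.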
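
The pattern-matching approach can be sketched as a lookup table instead of a chain of if conditions. The table below is only an illustration: the patterns for 6 and 8 are my assumption about one common one-hand convention, the table is deliberately incomplete, and the names GESTURES and gesture_to_digit are hypothetical. Print fingerup for your own gestures and adjust the keys to match.

```python
# Keys are (thumb, index, middle, ring, pinky) flags in the order
# returned by fingersUp. These entries are illustrative assumptions.
GESTURES = {
    (0, 0, 0, 0, 0): 0,
    (0, 1, 0, 0, 0): 1,
    (0, 1, 1, 0, 0): 2,
    (0, 1, 1, 1, 0): 3,
    (0, 1, 1, 1, 1): 4,
    (1, 1, 1, 1, 1): 5,
    (1, 0, 0, 0, 1): 6,  # assumed: thumb and pinky extended
    (1, 1, 0, 0, 0): 8,  # assumed: thumb and index extended
}


def gesture_to_digit(fingers):
    """Look up a 5-flag finger list; return None for patterns not in the table."""
    return GESTURES.get(tuple(fingers))


print(gesture_to_digit([1, 0, 0, 0, 1]))  # 6
print(gesture_to_digit([0, 1, 1, 0, 0]))  # 2
print(gesture_to_digit([0, 0, 1, 0, 0]))  # None
```

Unlike fingerup.count(1), the lookup tells apart gestures that raise the same number of fingers, at the cost of returning None for any pattern that is not listed.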