通过OpenNI2获取数据并转至OpenCV格式及点云生成

Posted lwlv

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了通过OpenNI2获取数据并转至OpenCV格式及点云生成相关的知识,希望对你有一定的参考价值。

OpenNI简介

OpenNI(Open Natural Interaction)中文译为开放自然语言交互,用官方的表述来讲就是a standard framework for 3D sensing,用于3D感知的开发接口。OpenNI2是第二代版本(官网 http://structure.io/openni),相对于第一代更加专注于对3D设备的支持和数据的获取,移除了手势识别等中间件的方式,使用OpenNI2编程序,代码更简洁易懂。

简而言之OpenNI2就是一个RGBD相机的用户态驱动,对上提供统一的接口,方便用户获取RGBD的图像数据,对下提供统一的标准类,方便RGBD厂商进行适配。

目前OpenNI2支持的设备包括微软Kinect2(kinect一代所需要的驱动则为nite https://github.com/PrimeSense/NiTEControls、sensor https://github.com/avin2/SensorKinect),华硕Xtion,英特尔RealSense等设备,由于其清晰的代码结构,很容易对第三方设备进行适配。OpenNI2的源码地址为 https://github.com/OpenNI,下载后Linux和windows均可编译调用。

如果有华硕xtion设备,安装好openni2后,直接运行里面的SimpleViewer就可看到深度图像。关于其安装和环境变量的配置,可参见 Chenxin-小斤 的博客。

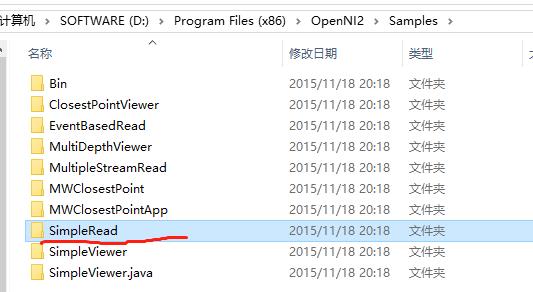

在windows下安装好OpenNI2后,可以在其目录Samples下查看到一些简单的例程 ,作为入门和学习用,如下图所示。

1、测试是否连接成功

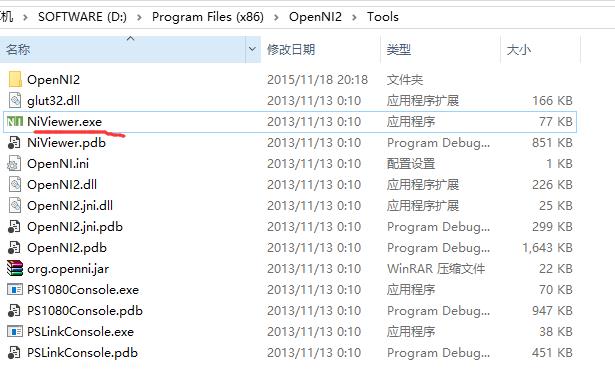

查看深度相机有没有连接成功且可驱动,最简单的方法就是用Tools里面的NiViewer工具,如果能正常显示彩色图像和深度图像则说明驱动成功。

下面附上测试连接成功的代码:

#include <stdio.h>

#include <OpenNI.h>

#include "OniSampleUtilities.h"

#define SAMPLE_READ_WAIT_TIMEOUT 2000 //2000ms

using namespace openni;

int main()

Status rc = OpenNI::initialize();

if (rc != STATUS_OK)

printf("Initialize failed\\n%s\\n", OpenNI::getExtendedError());

return 1;

Device device;

rc = device.open(ANY_DEVICE);

if (rc != STATUS_OK)

printf("Couldn't open device\\n%s\\n", OpenNI::getExtendedError());

return 2;

VideoStream depth;

if (device.getSensorInfo(SENSOR_DEPTH) != NULL)

rc = depth.create(device, SENSOR_DEPTH);

if (rc != STATUS_OK)

printf("Couldn't create depth stream\\n%s\\n", OpenNI::getExtendedError());

return 3;

rc = depth.start();

if (rc != STATUS_OK)

printf("Couldn't start the depth stream\\n%s\\n", OpenNI::getExtendedError());

return 4;

VideoFrameRef frame;

while (!wasKeyboardHit())

int changedStreamDummy;

VideoStream* pStream = &depth;

rc = OpenNI::waitForAnyStream(&pStream, 1, &changedStreamDummy, SAMPLE_READ_WAIT_TIMEOUT);

if (rc != STATUS_OK)

printf("Wait failed! (timeout is %d ms)\\n%s\\n", SAMPLE_READ_WAIT_TIMEOUT, OpenNI::getExtendedError());

continue;

rc = depth.readFrame(&frame);

if (rc != STATUS_OK)

printf("Read failed!\\n%s\\n", OpenNI::getExtendedError());

continue;

if (frame.getVideoMode().getPixelFormat() != PIXEL_FORMAT_DEPTH_1_MM && frame.getVideoMode().getPixelFormat() != PIXEL_FORMAT_DEPTH_100_UM)

printf("Unexpected frame format\\n");

continue;

DepthPixel* pDepth = (DepthPixel*)frame.getData();

int middleIndex = (frame.getHeight()+1)*frame.getWidth()/2;

printf("[%08llu] %8d\\n", (long long)frame.getTimestamp(), pDepth[middleIndex]);

depth.stop();

depth.destroy();

device.close();

OpenNI::shutdown();

return 0;

其代码参考于官方例程OpenNI2\\Samples\\SimpleRead,还是比较简单容易看懂的。其中OniSampleUtilities.h头文件也是在该文件夹下找到的。

2、图像转至OpenCV下的type

由上述例程可知,变量VideoStream depth用于从传感器中获取数据。如果要显示或者进行一些图像处理操作,一般又会用到OpenCV库,就要进行一些转换。代码如下:

#include <stdlib.h>

#include <iostream>

#include <string>

#include "OpenNI.h"

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

using namespace std;

using namespace cv;

using namespace openni;

//该函数用于将OpenNI数据转换为OpenCV下的格式

Mat oni2mat(VideoFrameRef &ocv);

int main()

/* initialize OpenNI2 */

Status result = OpenNI::initialize();

if (result != STATUS_OK)

printf("Initialize failed\\n%s\\n", OpenNI::getExtendedError());

return 1;

/* open device */

Device device;

result = device.open(ANY_DEVICE);

if (result != STATUS_OK)

printf("Couldn't open device\\n%s\\n", OpenNI::getExtendedError());

OpenNI::shutdown();

return 2;

/* create color stream */

VideoStream oniColorStream;

VideoFrameRef oniColorImg;

// set color video mode

VideoMode modeColor;

modeColor.setResolution(640,480);

modeColor.setFps(20);

modeColor.setPixelFormat(PIXEL_FORMAT_RGB888);

oniColorStream.setVideoMode(modeColor);

// start color stream

result = oniColorStream.create(device, SENSOR_COLOR);

if (result == STATUS_OK)

result = oniColorStream.start();

if (result != STATUS_OK)

printf("Couldn't start color stream:\\n%s\\n", OpenNI::getExtendedError());

oniColorStream.destroy();

else

printf("Couldn't find color stream:\\n%s\\n", OpenNI::getExtendedError());

/* create depth stream */

VideoStream oniDepthStream;

VideoFrameRef oniDepthImg;

// set depth video mode

VideoMode modeDepth;

modeDepth.setResolution(640 , 480);

modeDepth.setFps(25);

modeDepth.setPixelFormat(PIXEL_FORMAT_DEPTH_1_MM);

oniDepthStream.setVideoMode(modeDepth);

// start depth stream

result = oniDepthStream.create(device, SENSOR_DEPTH);

if (result == STATUS_OK)

result = oniDepthStream.start();

if (result != STATUS_OK)

printf("Couldn't start depth stream:\\n%s\\n", OpenNI::getExtendedError());

oniDepthStream.destroy();

else

printf("Couldn't find depth stream:\\n%s\\n", OpenNI::getExtendedError());

char key = 0;

//int rows = ocv.getHeight();

while (key != 27)

// read color frame

if (oniColorStream.readFrame(&oniColorImg) == STATUS_OK)

Mat cvRGBMat = oni2mat(oniColorImg);

IplImage *cvRGBImg = (&(IplImage)cvRGBMat);

cvNamedWindow("RGB Image", CV_WINDOW_NORMAL);

//cvMoveWindow("RGB Image", 600, 100);

cvShowImage("RGB Image", cvRGBImg);

// read depth frame

if (oniDepthStream.readFrame(&oniDepthImg) == STATUS_OK)

Mat cvDepthMat = oni2mat(oniDepthImg);

Mat cvFusionMat;

cvDepthMat.convertTo(cvFusionMat, CV_8U, 255.0 / (oniDepthStream.getMaxPixelValue()));

cvtColor(cvFusionMat, cvFusionMat, CV_GRAY2BGR);

IplImage *cvDepthImg = (&(IplImage)cvFusionMat);

cvNamedWindow("Depth Image", CV_WINDOW_NORMAL);

//cvMoveWindow("Depth Image", 0 , 1000);

cvShowImage("Depth Image", cvDepthImg);

key = waitKey(20);

//CV destroy

cvDestroyWindow("RGB Image");

cvDestroyWindow("Depth Image");

//NI destroy

oniDepthStream.destroy();

oniColorStream.destroy();

device.close();

OpenNI::shutdown();

return 0;

Mat oni2mat(VideoFrameRef &ocv)

Mat cv_frame;

//int rows = ocv.getHeight();

//int cols = ocv.getWidth();

if (ocv.getSensorType() == SENSOR_COLOR)

RGB888Pixel *dData = (RGB888Pixel*)ocv.getData();

cv_frame = Mat(ocv.getHeight(), ocv.getWidth(), CV_8UC3, dData).clone();

cvtColor(cv_frame, cv_frame, CV_RGB2BGR);

else if (ocv.getSensorType() == SENSOR_DEPTH)

DepthPixel *dData = (DepthPixel*)ocv.getData();

cv_frame = Mat(ocv.getHeight(), ocv.getWidth(), CV_16UC1, dData).clone();

else

throw runtime_error("Unsupported sensor type.");

return cv_frame;

此外,还可参考(Kinect开发教程八:OpenNI2显示深度、彩色及融合图像)的例子,关键代码如下:

// read frame

if (oniColorStream.readFrame(&oniColorImg) == STATUS_OK)

// convert data into OpenCV type

cv::Mat cvRGBMat(oniColorImg.getHeight(), oniColorImg.getWidth(), CV_8UC3, (void*)oniColorImg.getData());

cv::cvtColor(cvRGBMat, cvRGBMat, CV_RGB2BGR);

cv::Mat A(cvRGBMat, cv::Rect(50, 50, 2, 2));

std::cout << "A = " << std::endl << " " <<A << std::endl << std::endl;

cvRGBImg = (&(IplImage)cvRGBMat);

cvNamedWindow("image", CV_WINDOW_NORMAL);

cvShowImage("image", cvRGBImg);

if (oniDepthStream.readFrame(&oniDepthImg) == STATUS_OK)

cv::Mat cvRawImg16U(oniDepthImg.getHeight(), oniDepthImg.getWidth(), CV_16UC1, (void*)oniDepthImg.getData());

cv::Mat B(cvRawImg16U, cv::Rect(50, 50, 2, 2));

std::cout << "B = " << std::endl << " " << B << std::endl << std::endl;

cvOrgDepth = (&(IplImage)cvRawImg16U);

cvNamedWindow("org_depth", CV_WINDOW_NORMAL);

cvShowImage("org_depth", cvOrgDepth);

// convert depth info from 0~255

cv::Mat cvDepthMat;

cvRawImg16U.convertTo(cvDepthMat, CV_8U, 255.0 / (oniDepthStream.getMaxPixelValue()));

printf("max depth pixel = %d , %d\\n\\n", oniDepthImg.getHeight() , oniDepthImg.getWidth());

cv::Mat C(cvDepthMat, cv::Rect(50, 50, 2, 2));

std::cout << "C = " << std::endl << " " << C << std::endl << std::endl;

// convert depth image GRAY to BGR

cv::cvtColor(cvDepthMat, cvDepthMat, CV_GRAY2BGR);

cvFusionImg = (&(IplImage)cvDepthMat);

cvNamedWindow("depth", CV_WINDOW_NORMAL);

cvShowImage("depth", cvFusionImg);

3、保存点云pcd文件

说到点云不得不用到PCL库,Point Cloud Library,比较容易安装,其官网 http://pointclouds.org。

保存为.pcd文件的部分代码如下:

#include <iostream>

#include <pcl/io/pcd_io.h>

#include <pcl/point_types.h>

int main(int argc, char** argv)

pcl::PointCloud<pcl::PointXYZ> cloud;

// Fill in the cloud data

cloud.width = 5;

cloud.height = 1;

cloud.is_dense = false;

cloud.points.resize(cloud.width * cloud.height);

for (size_t i = 0; i < cloud.points.size(); ++i)

cloud.points[i].x = 1024 * rand() / (RAND_MAX + 1.0f);

cloud.points[i].y = 1024 * rand() / (RAND_MAX + 1.0f);

cloud.points[i].z = 1024 * rand() / (RAND_MAX + 1.0f);

pcl::io::savePCDFileASCII("test_pcd.pcd", cloud);

std::cerr << "Saved " << cloud.points.size() << " data points to test_pcd.pcd." << std::endl;

for (size_t i = 0; i < cloud.points.size(); ++i)

std::cerr << " " << cloud.points[i].x << " " << cloud.points[i].y << " " << cloud.points[i].z << std::endl;

return (0);

关于点云的转换及拼接,可参见高翔博士的博客:从图像到点云

对于生成的点云数据,可使用pcl_viewer来查看。当然也可以自己写方法来显示,一般用得比较多是OpenGL,如博客 关于OpenNI2和OpenCV2的那些事——获取三维点云数据并用OpenGL表示

Enjoy!

以上是关于通过OpenNI2获取数据并转至OpenCV格式及点云生成的主要内容,如果未能解决你的问题,请参考以下文章