Web Cluster Deployment (Nginx+Keepalived+Varnish+LAMP+NFS)


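I. Service Overview

1.1 Nginx

Nginx is a high-performance HTTP and reverse proxy server that can also proxy IMAP/POP3/SMTP. It supports both forward and reverse proxying of web traffic, with reverse proxying being its particular strength. It also provides caching, load balancing, the FastCGI protocol, and third-party modules. Reverse proxying web traffic is currently one of its most popular uses.

1.2 Keepalived

As the name suggests, Keepalived keeps a service alive: its purpose is to remove single points of failure by switching to another server automatically when one server goes down or fails. Keepalived is built on VRRP, a protocol designed for router redundancy. VRRP groups two or more routers into one virtual device that exposes one or more virtual router IPs, the floating VIPs. Users reach the web service through a VIP.

1.3 Varnish

Varnish Cache is a web application accelerator and a well-known caching reverse proxy. You can install it in front of any HTTP server and configure it to cache content. Varnish is very fast: a single proxy can speed up delivery by a factor of 300 to 1000, although this depends on your architecture.

1.4 Httpd

Httpd, better known as Apache, is a high-performance web server. It ships with a large number of modules and supports CGI (Common Gateway Interface), reverse proxying, load balancing, and many third-party modules. In this article Httpd serves both static content and PHP-based dynamic content.

1.5 MariaDB

MariaDB is a multi-user, multi-threaded SQL database server with a client/server (C/S) architecture. It includes the transactional Maria storage engine and uses Percona's XtraDB, a variant of InnoDB, and it performs very well. MariaDB is maintained by the open-source community, licensed under the GPL, and compatible with MySQL.

1.6 NFS

NFS (Network File System) was developed by Sun Microsystems. As the name suggests, it shares a local file system with other hosts over the network.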

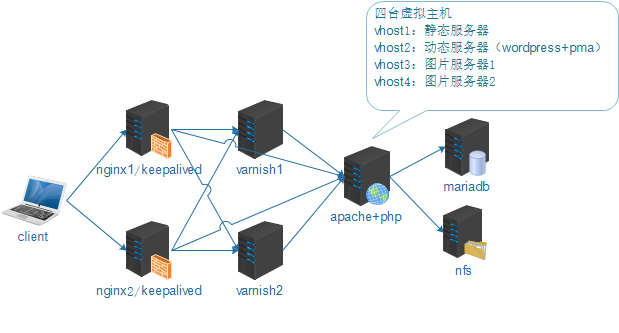

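II. Architecture Requirements

2.1 Architecture Requirements

1. Users can reach the sites listed below from a client over the Internet.

2. The client resolves the site names through its hosts file to the front-end Nginx proxy servers. The proxies distribute requests round-robin to the Varnish servers behind them. Dynamic content is sent directly to the LAMP web server; it can also be routed through Varnish to the dynamic server, in which case it is not cached.

3. Deploy www.huwho.com and blog.huwho.com on the LAMP web server.

4. When a user updates the WordPress blog, the web server writes the data to the MariaDB database server. (Note: MariaDB and the web server run on separate hosts, so remote access must be granted.)

5. When a user uploads images or attachments, the data passes through the web server to the back-end NFS storage and is not kept on the web server.

6. Set up the phpMyAdmin MySQL client so that developers can manage MySQL.

7. Install the Mycli database management tool for the database administrators.

8. Configure dual-master high availability for the Nginx load-balancing reverse proxies.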
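
As a rough illustration of requirement 2, the client-side hosts entries might look like the output of the sketch below. The two addresses are the Keepalived VIPs configured later in this article; which site name points at which VIP is my assumption, and `hosts_entries` is a hypothetical helper name.

```shell
#!/bin/bash
# Print hosts-file lines that point the two site names at the two VIPs.
# Assumption: one VIP per site name; the article does not state the mapping.
hosts_entries() {
    printf '%s %s\n' "10.0.0.200" "www.huwho.com"
    printf '%s %s\n' "10.0.0.201" "blog.huwho.com"
}

hosts_entries
```

On a Linux client the lines could be appended as root with `hosts_entries >> /etc/hosts`.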

2.2 System Version

OS: CentOS 7.3

Kernel: 3.10.0-514.el7.x86_64

Architecture: x86_64

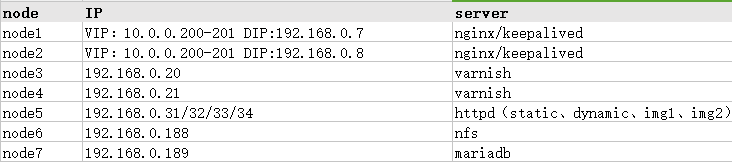

2.3 Deployment Environment

III. Checking the Linux System Environment

3.1 Server hardware information

dmidecode | grep "Product Name"

3.2 CPU model

lscpu | grep "Model name"

3.3 Number of CPUs

lscpu | grep "^CPU(s)"

3.4 Memory size

free -h | grep Mem | awk '{print $2}'

IV. System Initialization

#!/bin/bash

#CentOS7 initialization

if [[ "$(whoami)" != "root" ]]; then

echo "please run this script as root ." >&2

exit 1

fi

echo -e "\033[31m CentOS 7 initialization script, run with care! Press Ctrl+C to cancel \033[0m"

sleep 5

#update system pack

yum_update(){

yum -y install wget

cd /etc/yum.repos.d/

mkdir bak

mv ./*.repo bak

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all && yum makecache

yum -y install net-tools lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel expect

}

#set ntp

zone_time(){

cp /usr/share/zoneinfo/Asia/Chongqing /etc/localtime

printf 'ZONE="Asia/Chongqing"\nUTC=false\nARC=false' > /etc/sysconfig/clock

/usr/sbin/ntpdate pool.ntp.org

echo "*/5 * * * * /usr/sbin/ntpdate pool.ntp.org > /dev/null 2>&1" >> /var/spool/cron/root;chmod 600 /var/spool/cron/root

echo 'LANG="en_US.UTF-8"' > /etc/sysconfig/i18n

source /etc/sysconfig/i18n

}

#set ulimit

ulimit_config(){

echo "ulimit -SHn 102400" >> /etc/rc.local

cat >> /etc/security/limits.conf << EOF

* soft nofile 102400

* hard nofile 102400

* soft nproc 102400

* hard nproc 102400

EOF

}

#set ssh

sshd_config(){

sed -i 's/^GSSAPIAuthentication yes$/GSSAPIAuthentication no/' /etc/ssh/sshd_config

sed -i 's/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config

systemctl start crond

}

#set sysctl

sysctl_config(){

cp /etc/sysctl.conf /etc/sysctl.conf.bak

cat > /etc/sysctl.conf << EOF

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 262144

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

net.ipv4.tcp_fin_timeout = 1

net.ipv4.tcp_keepalive_time = 1200

net.ipv4.ip_local_port_range = 1024 65535

EOF

/sbin/sysctl -p

echo "sysctl set OK!!"

}

#disable selinux

selinux_config(){

sed -i 's@^SELINUX=enforcing@SELINUX=disabled@' /etc/selinux/config

setenforce 0

}

iptables_config(){

systemctl stop firewalld.service

systemctl disable firewalld.service

yum -y install iptables-services

cat > /etc/sysconfig/iptables << EOF

# Firewall configuration written by system-config-securitylevel

# Manual customization of this file is not recommended.

*filter

:INPUT DROP [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:syn-flood - [0:0]

-A INPUT -i lo -j ACCEPT

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 22 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

-A INPUT -p icmp -m limit --limit 100/sec --limit-burst 100 -j ACCEPT

-A INPUT -p icmp -m limit --limit 1/s --limit-burst 10 -j ACCEPT

-A INPUT -p tcp -m tcp --tcp-flags FIN,SYN,RST,ACK SYN -j syn-flood

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A syn-flood -p tcp -m limit --limit 3/sec --limit-burst 6 -j RETURN

-A syn-flood -j REJECT --reject-with icmp-port-unreachable

COMMIT

EOF

/sbin/service iptables restart

}

main(){

yum_update

zone_time

ulimit_config

sysctl_config

sshd_config

selinux_config

iptables_config

}

main

V. Deploying the Architecture

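5.1 What each server does

node1&node2

1. Configure Nginx as a reverse proxy that forwards user requests to the back-end LAMP server.

2. Configure dual-master Keepalived so the two Nginx proxies are highly available; when one Nginx proxy goes down, Keepalived moves its VIP to the BACKUP server.

3. Important files should be backed up with Rsync early every morning (not provided in this article yet; to be added later).

node3&node4

1. Configure the Varnish caching proxy servers.

2. Cache static files such as images, html, and css; do not cache dynamic files such as php and php5.

node5

1. Deploy the httpd service and use httpd virtual hosts to provide four web services.

2. Web1 holds static content (unused for now, for testing only); Web2 hosts WordPress and phpMyAdmin; Img1 and Img2 serve as image servers.

3. Mount the remote NFS share locally so that images are stored on the NFS server.

4. Important files are backed up with Rsync early every morning.

node6

1. Deploy the NFS service and distribute public keys with expect.

2. Real-time synchronization (not deployed yet).

3. Important files are backed up with Rsync early every morning.

node7

1. The database is separate from Httpd, so remote connections must be authorized.

2. Scheduled backups through Crond+Rsync (not provided yet).

3. Important files are backed up with Rsync early every morning.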
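
The nightly Rsync backup mentioned for several nodes is not provided in this article. A minimal sketch of what such a cron entry could look like follows. The 02:00 schedule, the source directory, and the destination are all placeholders of mine rather than the author's setup, and `backup_cron_line` is a hypothetical helper.

```shell
#!/bin/bash
# Print a crontab line that runs rsync once a day at 02:00.
# -a preserves permissions and timestamps, -z compresses data in transit.
# Source and destination are passed in; the values below are examples only.
backup_cron_line() {
    local src=$1 dest=$2
    echo "0 2 * * * /usr/bin/rsync -az ${src} ${dest} > /dev/null 2>&1"
}

backup_cron_line /etc/nginx/ node6:/backup/node1/nginx/
```

The printed line could be added to root's crontab with `crontab -e`. Pushing over SSH as shown relies on the key distribution described in section 5.2.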

5.2 Distributing the SSH key

The NFS server doubles as the bulk distribution server. For now the distribution is done by hand; I am not yet familiar with distribution tools, so this part is to be completed later.

ssh-keygen -t rsa -P ''
# Run ssh-copy-id once for each of the seven nodes; USER@NODE stands for that node's login and address
ssh-copy-id -i .ssh/id_rsa.pub USER@NODE
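
The repeated ssh-copy-id calls can be collapsed into a loop. The sketch below takes the node addresses from the /etc/hosts listing in section 5.3; logging in as root is my assumption, and `node_ips` and `push_keys` are hypothetical helper names.

```shell
#!/bin/bash
# Print the address of every node, one per line (addresses from section 5.3).
node_ips() {
    local prefix=192.168.0 octet
    for octet in 7 8 20 21 31 188 189; do
        echo "${prefix}.${octet}"
    done
}

# Copy the public key to every node; the login user defaults to root (assumed).
# A failure on one node is reported and the loop carries on with the next.
push_keys() {
    local user=${1:-root} ip
    for ip in $(node_ips); do
        ssh-copy-id -i ~/.ssh/id_rsa.pub "${user}@${ip}" || echo "failed: ${ip}" >&2
    done
}

# Usage (prompts for each node's password once):
#   push_keys root
```

Each call still prompts for a password, which is where a tool such as expect or Ansible would come in.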

5.3 Distributing /etc/hosts

Distribute the /etc/hosts file to every host.

vi FenfaHost.sh

#!/bin/bash
#
# Rewrite /etc/hosts locally, keeping the loopback entry, then copy it to the other nodes
cat > /etc/hosts << EOF
127.0.0.1 localhost localhost.localdomain
192.168.0.7 node1
192.168.0.8 node2
192.168.0.20 node3
192.168.0.21 node4
192.168.0.31 node5
192.168.0.188 node6
192.168.0.189 node7
EOF
IP=192.168.0
for I in 7 8 20 21 31 189; do
    scp /etc/hosts root@${IP}.$I:/etc/
done

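5.4 Package Selection

Everything here is installed with yum, although building from source is recommended. The automation tool Ansible is also worth a look: it is a lightweight way to manage application services in bulk through modules. (PS: I am still studying it and expect to have it working soon.)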

node1&node2

yum install -y keepalived ipvsadm nginx

Version:

Keepalived v1.2.13 (11/05,2016)

ipvsadm v1.27 2008/5/15 (compiled with popt and IPVS v1.2.1)

nginx version: nginx/1.10.2

node3&node4

yum install -y varnish

Version:

varnish 4.0.4

node5

yum install -y httpd php php-mysql php-mcrypt php-xcache php-mbstring nfs-utils

Server version:

Apache/2.4.6 (CentOS)

PHP 5.4.16 (cli) (built: Nov 6 2016 00:29:02)

phpMyAdmin-4.0.10.20-all-languages.tar.gz

wordpress-4.7.4-zh_CN

node6

yum install nfs-utils rpcbind -y

node7

yum install -y mariadb-server

Version: mariadb 5.5.52

5.5 node1&node2 Configuration

nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

upstream cachesrvs {

server 192.168.0.20:80;

server 192.168.0.21:80;

}

include /etc/nginx/conf.d/*.conf;

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name www.huwho.com;

# root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://cachesrvs;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

server {

listen 80;

server_name blog.huwho.com;

location / {

proxy_pass http://192.168.0.32:80;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

}

keepalived.conf (node2 differs slightly; the differences are noted in the comments)

! Configuration File for keepalived

#Global configuration section

global_defs {

notification_email {

root@localhost #placeholder recipient; set your own address

}

notification_email_from keepalived@localhost #placeholder sender; set your own address

smtp_server 127.0.0.1

smtp_connect_timeout 10

router_id node1 #on the other node change to: node2

vrrp_mcast_group4 224.0.0.223 #multicast address; must be identical on both nodes

}

#Keepalived health check

vrrp_script chk_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -5

}

#Nginx health check

vrrp_script chk_nginx {

script "killall -0 nginx && exit 0 || exit 1"

interval 1

weight -5

fall 2

rise 1

}

#First virtual router

vrrp_instance VI_1 {

state MASTER #on the other node change to: BACKUP

interface ens37

virtual_router_id 51

priority 100 #priority; on the other node change to: 90

advert_int 1

authentication {

auth_type PASS

auth_pass JzvnmfkY

}

virtual_ipaddress {

10.0.0.200/24 dev ens37

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

#Second virtual router

vrrp_instance VI_2 {

state BACKUP #on the other node change to: MASTER

interface ens37

virtual_router_id 52

priority 90 #on the other node change to: 100

advert_int 1

authentication {

auth_type PASS

auth_pass azvnmfkY

}

virtual_ipaddress {

10.0.0.201/24 dev ens37

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

notify.sh (place it in /etc/keepalived)

#!/bin/bash
#description: An example of notify script
#
# placeholder recipient; set your own address
contact='root@localhost'

# send a mail describing the VRRP state change
notify(){
    local mailsubject="$(hostname) to be $1 vip floating"
    local mailbody="$(date +'%F %T'):vrrp1 transition,$(hostname) changed to be $1"
    echo "$mailbody" | mail -s "$mailsubject" $contact
}

case $1 in
master)
    systemctl start nginx
    notify master
    ;;
backup)
    systemctl start nginx
    notify backup
    ;;
fault)
    systemctl stop nginx
    notify fault
    ;;
*)
    echo "Usage:$(basename $0) {master|backup|fault}"
    exit 1
    ;;
esac
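
Returning to the track_script checks in keepalived.conf: as far as I understand Keepalived, a failing vrrp_script with a negative weight lowers the instance's priority by that amount, and the node with the higher resulting priority holds the VIP. The arithmetic can be sketched as below. `effective_priority` is a hypothetical helper, and the figure of -20 is only an illustrative alternative to the configured -5.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT...
# Adds the (negative) weight of every failing check to the base priority.
effective_priority() {
    local prio=$1 w
    shift
    for w in "$@"; do
        prio=$((prio + w))
    done
    echo "$prio"
}

effective_priority 100 -5       # one failing check with weight -5
effective_priority 100 -5 -5    # both checks failing
effective_priority 100 -20      # one failing check with an illustrative weight of -20
```

With a base priority of 100 against a peer at 90, one failing check of weight -5 yields 95, which is still higher than the peer, while a weight of -20 yields 80. It is therefore worth confirming that the weight is larger than the priority gap between the two nodes if a single failed check is meant to move the VIP.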

5.6 node3&node4 Configuration

default.vcl

vcl 4.0;

import directors;

# probe

probe www_probe {

.url = "/";

}

# Default backend

backend default {

.host = "192.168.0.31";

.port = "80";

}

# backend server

backend web1 {

.host = "192.168.0.31";

.port = "80";

.connect_timeout = 2s;

.first_byte_timeout = 10s;

.between_bytes_timeout = 1s;

.probe = www_probe;

}

backend web2 {

.host = "192.168.0.32";

.port = "80";

.connect_timeout = 2s;

.first_byte_timeout = 10s;

.between_bytes_timeout = 1s;

.probe = www_probe;

}

backend img1 {

.host = "192.168.0.33";

.port = "80";

.connect_timeout = 2s;

.first_byte_timeout = 10s;

.between_bytes_timeout = 1s;

.probe = www_probe;

}

backend img2 {

.host = "192.168.0.34";

.port = "80";

.connect_timeout = 2s;

.first_byte_timeout = 10s;

.between_bytes_timeout = 1s;

.probe = www_probe;

}

# init

sub vcl_init {

new web_cluster = directors.round_robin();

web_cluster.add_backend(web1);

web_cluster.add_backend(web2);

new img_cluster = directors.random();

img_cluster.add_backend(img1, 10); # 2/3 to backend one

img_cluster.add_backend(img2, 5); # 1/3 to backend two

}

# acl purgers

acl purgers {

"127.0.0.1";

"192.168.0.0"/24;

}

# recv

sub vcl_recv {

if (req.url ~ "\.(php|asp|aspx|jsp|do|ashx|shtml)($|\?)") {

set req.backend_hint = web2;

}

if (req.url ~ "\.(bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)$") {

set req.backend_hint = img_cluster.backend();

}

if (req.url ~ "\.(html|htm|css|js)") {

set req.backend_hint = web1;

}

# allow PURGE from localhost and 192.168.0...

if (req.method == "PURGE") {

if (!client.ip ~ purgers) {

return (synth(405, "Purging not allowed for " + client.ip));

}

return (purge);

}

if (req.method != "GET" &&

req.method != "HEAD" &&

req.method != "PUT" &&

req.method != "POST" &&

req.method != "TRACE" &&

req.method != "OPTIONS" &&

req.method != "PATCH" &&

req.method != "DELETE") {

return (pipe);

}

if (req.method != "GET" && req.method != "HEAD") {

return (pass);

}

if (req.url ~ "\.(php|asp|aspx|jsp|do|ashx|shtml)($|\?)") {

return (pass);

}

if (req.http.Authorization) {

return (pass);

}

if (req.http.Accept-Encoding) {

if (req.url ~ "\.(bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)$") {

unset req.http.Accept-Encoding;

} elseif (req.http.Accept-Encoding ~ "gzip") {

set req.http.Accept-Encoding = "gzip";

} elseif (req.http.Accept-Encoding ~ "deflate") {

set req.http.Accept-Encoding = "deflate";

} else {

unset req.http.Accept-Encoding;

}

}

if (req.url ~ "\.(css|js|html|htm|bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)($|\?)") {

unset req.http.cookie;

return (hash);

if (req.restarts == 0) {

if (req.http.X-Forwarded-For) {

set req.http.X-Forwarded-For = req.http.X-Forwarded-For + "," + client.ip;

} else {

set req.http.X-Forwarded-For = client.ip;

}

}

}

}

# pass

sub vcl_pass {

return (fetch);

}

# backend fetch

sub vcl_backend_fetch {

return (fetch);

}

# hash

sub vcl_hash {

hash_data(req.url);

if (req.http.host) {

hash_data(req.http.host);

} else {

hash_data(server.ip);

}

return (lookup);

}

# hit

sub vcl_hit {

if (req.method == "PURGE") {

return (synth(200, "Purged."));

}

return (deliver);

}

# miss

sub vcl_miss {

if (req.method == "PURGE") {

return (synth(404, "Not in cache."));

}

return (fetch);

}

# backend response

sub vcl_backend_response {

set beresp.grace = 5m;

if (beresp.status == 499 || beresp.status == 404 || beresp.status == 502) {

set beresp.uncacheable = true;

}

if (beresp.http.content-type ~ "text") {

set beresp.do_gzip = true;

}

if (bereq.url ~ "\.(php|jsp)(\?|$)") {

set beresp.uncacheable = true;

} else { // set a custom cache lifetime (TTL) per file type

if (bereq.url ~ "\.(css|js|html|htm|bmp|png|gif|jpg|jpeg|ico)($|\?)") {

set beresp.ttl = 15m;

unset beresp.http.Set-Cookie;

} elseif (bereq.url ~ "\.(gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)($|\?)") {

set beresp.ttl = 30m;

unset beresp.http.Set-Cookie;

} else {

set beresp.ttl = 10m;

unset beresp.http.Set-Cookie;

}

return (deliver);

}

}

# deliver

sub vcl_deliver {

if (obj.hits > 0) {

set resp.http.X-Cache = "HIT";

set resp.http.X-Cache-Hits = obj.hits;

} else {

set resp.http.X-Cache = "MISS";

}

unset resp.http.X-Powered-By;

unset resp.http.Server;

unset resp.http.X-Drupal-Cache;

unset resp.http.Via;

unset resp.http.Link;

unset resp.http.X-Varnish;

set resp.http.xx_restarts_count = req.restarts;

set resp.http.xx_Age = resp.http.Age;

set resp.http.hit_count = obj.hits;

unset resp.http.Age;

return (deliver);

}

sub vcl_purge {

return (synth(200,"success"));

}

/* sub vcl_backend_error {

if (beresp.status == 500 ||

beresp.status == 501 ||

beresp.status == 502 ||

beresp.status == 503 ||

beresp.status == 504) {

return (retry);

}

}

*/

sub vcl_fini {

return (ok);

}

varnish.params

RELOAD_VCL=1
VARNISH_VCL_CONF=/etc/varnish/default.vcl
VARNISH_LISTEN_PORT=80
VARNISH_ADMIN_LISTEN_ADDRESS=127.0.0.1
VARNISH_ADMIN_LISTEN_PORT=6082
# Shared secret file for admin interface
VARNISH_SECRET_FILE=/etc/varnish/secret
VARNISH_STORAGE="malloc,512M"
# User and group for the varnishd worker processes
VARNISH_USER=varnish
VARNISH_GROUP=varnish
# Other options, see the man page varnishd(1)
#DAEMON_OPTS="-p thread_pool_min=5 -p thread_pool_max=500 -p thread_pool_timeout=300"

5.7 node5 Configuration

conf.d/http.conf

<VirtualHost 192.168.0.31:80>
    DocumentRoot "/apps/web/web1"
    DirectoryIndex index.html index.htm
    <Directory "/apps/web/web1">
        Options Indexes FollowSymLinks
        AllowOverride none
    </Directory>
    <Location />
        Require all granted
    </Location>
</VirtualHost>
<VirtualHost 192.168.0.32:80>
    DocumentRoot "/apps/web/web2"
    DirectoryIndex index.html index.php
    <Directory "/apps/web/web2">
        Options Indexes FollowSymLinks
        AllowOverride none
    </Directory>
    <Location />
        Require all granted
    </Location>
</VirtualHost>
<VirtualHost 192.168.0.33:80>
    DocumentRoot "/apps/web/img1"
    <Directory "/apps/web/img1">
        Options Indexes FollowSymLinks
        AllowOverride none
    </Directory>
    <Location />
        Require all granted
    </Location>
</VirtualHost>
<VirtualHost 192.168.0.34:80>
    DocumentRoot "/apps/web/img2"
    <Directory "/apps/web/img2">
        Options Indexes FollowSymLinks
        AllowOverride none
    </Directory>
    <Location />
        Require all granted
    </Location>
</VirtualHost>

config.inc.php (phpMyAdmin-4.0.10.20-all-languages)

$cfg['blowfish_secret'] = '1a8b72fcog6eeddddd'; # any random string; used for encryption
$cfg['Servers'][$i]['host'] = '192.168.0.189'; # change to the IP address of the database server

wp-config.php (WordPress)

define('DB_NAME', 'wordpress');
/** MySQL database user name */
define('DB_USER', 'michael');
/** MySQL database password */
define('DB_PASSWORD', 'password');
/** MySQL host */
define('DB_HOST', 'node7');

5.8 node6 Configuration

/etc/exports

/data 192.168.0.0/24(rw,sync,all_squash,anonuid=48,anongid=48)
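
The article does not show how node5 mounts this export. A minimal sketch follows, assuming the share is mounted over the WordPress uploads directory under the Web2 document root; that mount point is my guess, and `nfs_fstab_line` is a hypothetical helper.

```shell
#!/bin/bash
# Build the /etc/fstab line for an NFS mount.
# _netdev delays the mount until the network is up.
nfs_fstab_line() {
    local server=$1 export_path=$2 mount_point=$3
    echo "${server}:${export_path} ${mount_point} nfs defaults,_netdev 0 0"
}

# On node6, after editing /etc/exports (as root):
#   systemctl start rpcbind nfs-server
#   exportfs -r
# On node5 (as root), list the exports and mount the share:
#   showmount -e node6
#   mount -t nfs node6:/data /apps/web/web2/wp-content/uploads
nfs_fstab_line node6 /data /apps/web/web2/wp-content/uploads
```

The anonuid=48 and anongid=48 options in the export map every client user to uid and gid 48, which is the apache account on a stock CentOS install, so httpd on node5 can write to the share.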

5.9 node7 Configuration

Install Mycli, a MySQL client with auto-completion and syntax highlighting; I will cover it in a separate post.

mysql
create database wordpress;
grant all on wordpress.* to 'michael'@'192.168.0.%' identified by 'password';
flush privileges;
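
Because the web server and the database are on different hosts, the account has to be granted for the web server's network rather than for localhost. The sketch below generates the statements for a given database, user, host pattern, and password; `grant_sql` is a hypothetical helper, and the values shown are the ones used in wp-config.php.

```shell
#!/bin/bash
# Print the SQL that creates a remote-access grant for one database.
# The host pattern limits where the account may connect from.
grant_sql() {
    local db=$1 user=$2 host=$3 pass=$4
    printf "GRANT ALL ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" "$db" "$user" "$host" "$pass"
    printf "FLUSH PRIVILEGES;\n"
}

grant_sql wordpress michael '192.168.0.%' password

# Usage on node7:            grant_sql wordpress michael '192.168.0.%' password | mysql
# Check from node5 afterwards: mysql -h node7 -u michael -p wordpress -e 'SELECT 1'
```

A real deployment would of course use a stronger password than the placeholder shown.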

VI. Testing

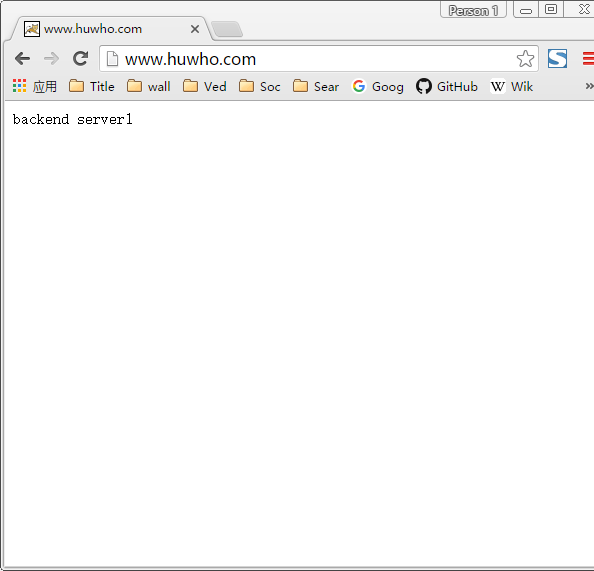

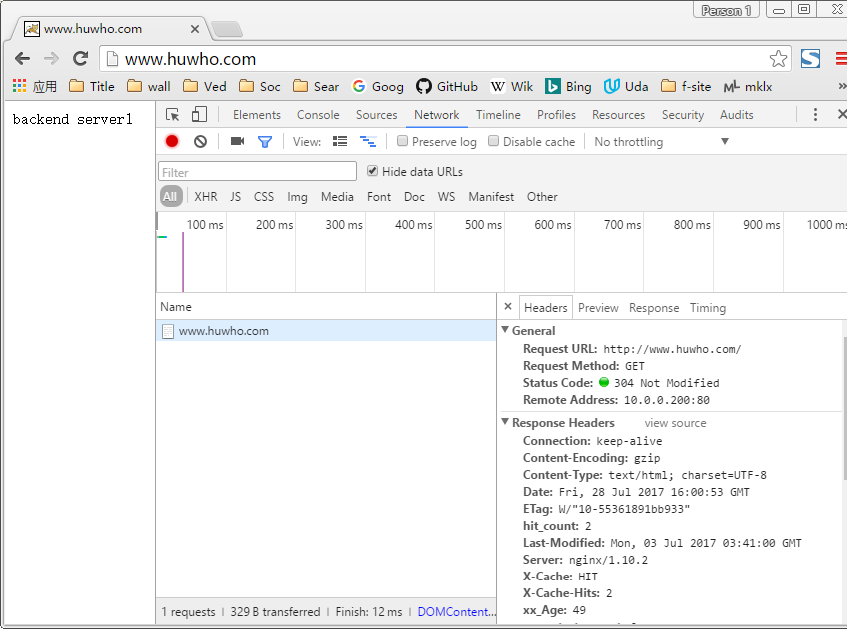

Test www.huwho.com. I had previously run an experiment with Tomcat, so because of the browser cache the Tomcat icon is shown here. This is actually an httpd web service.

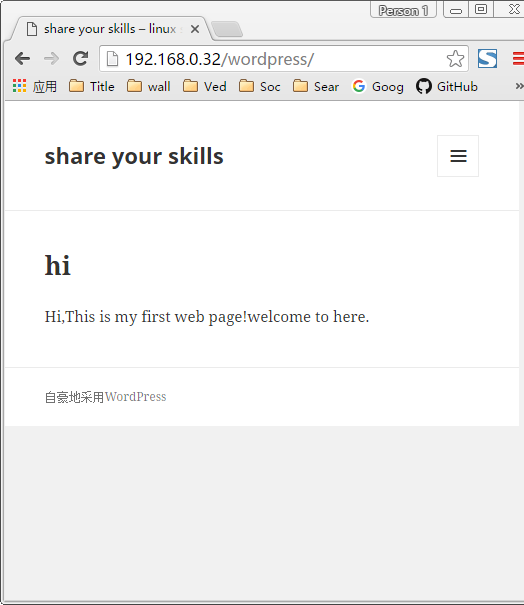

Test the dynamic site blog.huwho.com. Because my first visit used the IP address, the site redirects to the IP address here.

Test Varnish HTML caching: hit.

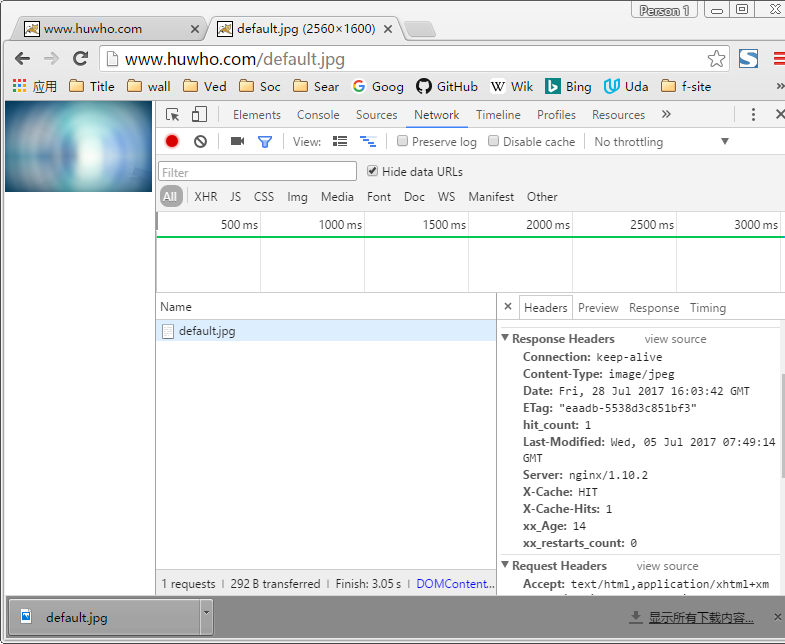

Test Varnish image caching: hit.

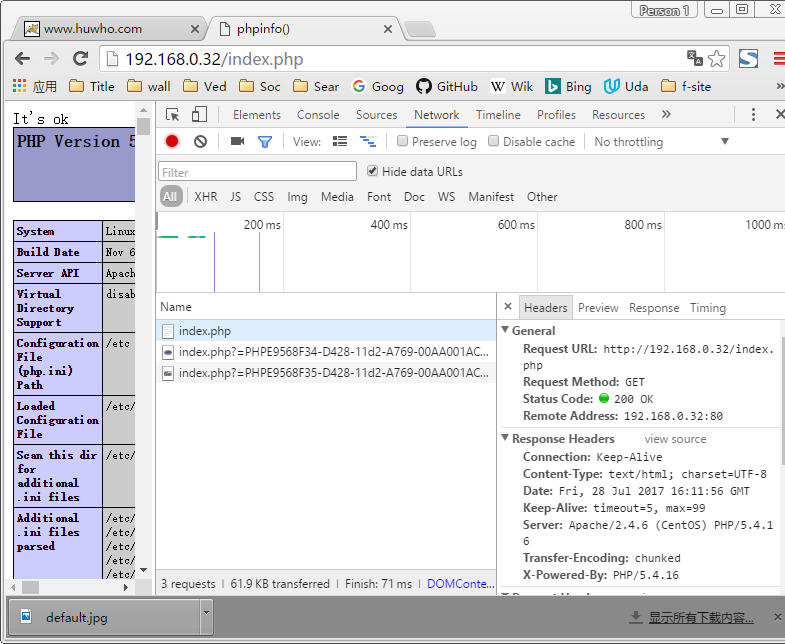

Test a PHP file: not cached.
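
The same checks can be made from the command line by reading the X-Cache header that vcl_deliver sets. The sketch below is one way to do it; `cache_status` and `check_url` are hypothetical helper names, and the paths in the usage lines are placeholders for files that exist on your site.

```shell
#!/bin/bash
# Read HTTP response headers on stdin and print the X-Cache value (HIT or MISS).
cache_status() {
    tr -d '\r' | awk -F': ' 'tolower($1) == "x-cache" { print $2 }'
}

# check_url ADDRESS HOSTNAME PATH
# Request PATH from ADDRESS with the given Host header and report the cache status.
check_url() {
    curl -s -o /dev/null -D - -H "Host: $2" "http://$1$3" | cache_status
}

# Usage, run twice per URL because the first request normally populates the cache:
#   check_url 10.0.0.200 www.huwho.com /index.html
#   check_url 10.0.0.200 www.huwho.com /index.php
```

With the VCL above, a cacheable file should report HIT on the second request, while a PHP URL should keep reporting MISS.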

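(PS: This is an architecture I built some time ago. I was short on time, so many details went unrecorded and many of the services and configuration files have not been tuned yet, but it does at least run. My knowledge is limited, so corrections are welcome.)

This article comes from the “有趣灵魂” blog; please retain this source link: http://powermichael.blog.51cto.com/12450987/1951870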
