Spark in practice: is running beyond physical memory limits. Current usage: xx GB of xx GB physical memory

Posted by xiaopihaierletian


I. Background

A Spark job failed because one of its YARN containers used more memory than it was allowed (OOM) and was killed.

II. Problem

Application application_xxx_xxxx failed 2 times due to AM Container for appattempt_xxxx_xxxx_xxxx exited with exitCode: -104

Failing this attempt.Diagnostics: Container [pid=78835,containerID=container_e14_1611819047508_2623322_02_000003] is running beyond physical memory limits. Current usage: 6.6 GB of 6.6 GB physical memory used; 11.9 GB of 32.3 TB virtual memory used. Killing container.

Dump of the process-tree for container_e14_1611819047508_2623322_02_000003 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
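The exit code in the log identifies the cause. In Hadoop YARN, exit status -104 is `ContainerExitStatus.KILLED_EXCEEDED_PMEM`: the NodeManager killed the container because the physical memory used by its process tree reached the container's limit (here 6.6 GB of 6.6 GB). When several failed containers need triage, a small helper can extract those numbers from the diagnostics text. The sketch below is hypothetical (`parse_pmem_usage` and `killed_for_pmem` are not part of Spark or YARN), and it assumes the message format shown above.

```python
import re

# Hadoop YARN exit status for a container killed for exceeding its
# physical-memory limit (ContainerExitStatus.KILLED_EXCEEDED_PMEM).
KILLED_EXCEEDED_PMEM = -104

# Binary multipliers for the units that appear in the message.
_UNITS = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3,
          "TB": 1024**4, "PB": 1024**5}

# Matches e.g. "Current usage: 6.6 GB of 6.6 GB physical memory used"
_PMEM_RE = re.compile(
    r"Current usage: ([\d.]+) ([KMGTP]?B) of ([\d.]+) ([KMGTP]?B) physical memory used"
)


def killed_for_pmem(exit_code):
    """True if a YARN exit code means 'killed for exceeding physical memory'."""
    return exit_code == KILLED_EXCEEDED_PMEM


def parse_pmem_usage(diagnostics):
    """Return (used_bytes, limit_bytes) parsed from a YARN diagnostics string, or None."""
    m = _PMEM_RE.search(diagnostics)
    if m is None:
        return None
    used = float(m.group(1)) * _UNITS[m.group(2)]
    limit = float(m.group(3)) * _UNITS[m.group(4)]
    return used, limit


diag = ("Current usage: 6.6 GB of 6.6 GB physical memory used; "
        "11.9 GB of 32.3 TB virtual memory used. Killing container.")
used, limit = parse_pmem_usage(diag)
print(f"{used / 1024**3:.1f} GiB used of {limit / 1024**3:.1f} GiB limit")
```

The virtual-memory figure (11.9 GB of 32.3 TB) is far below its limit, so in this log only the physical-memory check matters.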

III. Analysis

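1. Spark OOMs mainly occur in two places: the Driver and the Executors.

Driver: mostly caused by operations such as collect and show, which bring results back to the driver.

Executor: mostly caused by things such as caching RDDs.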

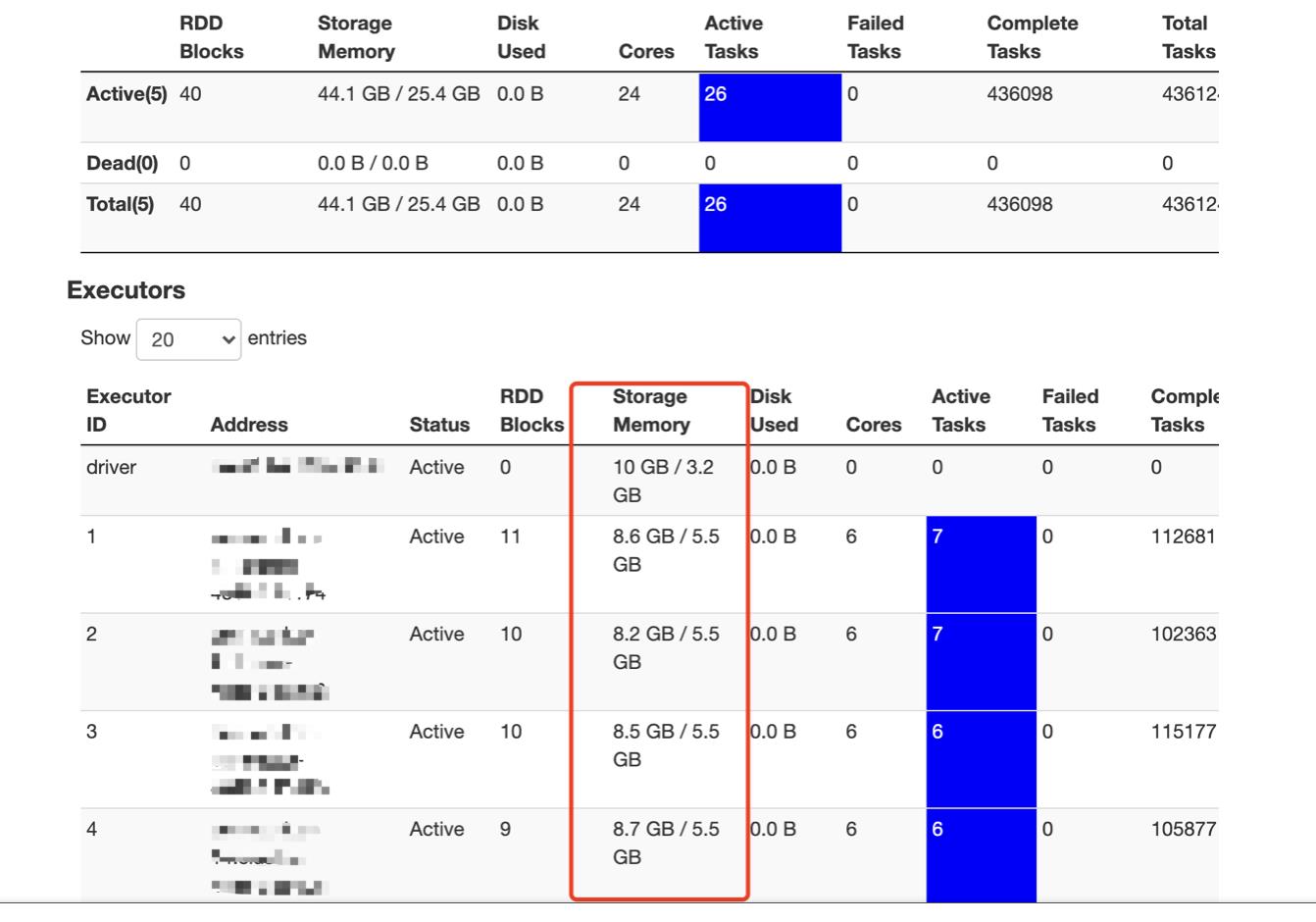
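To make the driver case concrete: `collect()` sends every partition's rows back to the driver. The result must therefore fit under `spark.driver.maxResultSize` (default `1g`; Spark aborts the job if the total serialized results exceed it, and `0` disables the check) and also inside the driver heap. The back-of-envelope check below uses a hypothetical helper, `collect_fits`. Its `expansion` factor for deserialized objects is a made-up assumption, not a measured value.

```python
GIB = 1024**3


def collect_fits(num_rows, avg_row_bytes, driver_heap_bytes,
                 max_result_size_bytes=1 * GIB, expansion=3.0):
    """Rough, hypothetical estimate of whether collect() fits on the driver.

    num_rows * avg_row_bytes approximates the serialized result size, which is
    compared with spark.driver.maxResultSize (default 1g, 0 = unlimited).
    `expansion` is an assumed blow-up factor for deserialized objects on the heap.
    """
    serialized = num_rows * avg_row_bytes
    if max_result_size_bytes > 0 and serialized > max_result_size_bytes:
        return False  # Spark would abort the job with a maxResultSize error
    return serialized * expansion < driver_heap_bytes


# 1 million rows of ~200 bytes each, with a 4 GiB driver heap
print(collect_fits(1_000_000, 200, 4 * GIB))
# 10 million rows: ~2 GB serialized, over the default 1g maxResultSize
print(collect_fits(10_000_000, 200, 4 * GIB))
```

If the check fails, alternatives such as `take(n)`, or writing the result to storage instead of collecting it, avoid pulling the whole dataset onto the driver.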

2. Check the Spark UI.
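The Executors tab of the Spark UI shows per-executor storage memory. The same data is available from Spark's monitoring REST API at `/api/v1/applications/<app-id>/executors`, where each entry has fields such as `id`, `memoryUsed` and `maxMemory`. The sketch below ranks entries by storage-memory usage, which helps with the cached-RDD case. These fields cover storage (cache) memory only, not the container's total physical memory that YARN enforces. The host name, application ID and sample JSON are made up for illustration.

```python
import json


def executors_url(ui_base, app_id):
    """Spark monitoring REST API endpoint listing the driver and executors."""
    return f"{ui_base.rstrip('/')}/api/v1/applications/{app_id}/executors"


def storage_memory_report(executors_json):
    """Rank entries by memoryUsed / maxMemory (storage memory only), highest first."""
    rows = []
    for e in json.loads(executors_json):
        max_mem = e.get("maxMemory", 0)
        ratio = e.get("memoryUsed", 0) / max_mem if max_mem else 0.0
        rows.append((e["id"], ratio))
    return sorted(rows, key=lambda r: r[1], reverse=True)


# Live use (not run here), with a hypothetical host and application ID:
#   from urllib.request import urlopen
#   data = urlopen(executors_url("http://driver-host:4040", "app-id")).read()
#   print(storage_memory_report(data))
sample = ('[{"id": "driver", "memoryUsed": 0, "maxMemory": 100},'
          ' {"id": "1", "memoryUsed": 90, "maxMemory": 100},'
          ' {"id": "2", "memoryUsed": 30, "maxMemory": 100}]')
for executor_id, ratio in storage_memory_report(sample):
    print(f"{executor_id}: {ratio:.0%} of storage memory used")
```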

3. Tune the relevant parameters:

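executor-memory (spark-submit `--executor-memory`, i.e. `spark.executor.memory`): heap size of each executor

num-executors (`--num-executors`, i.e. `spark.executor.instances`): number of executors

driver-memory (`--driver-memory`, i.e. `spark.driver.memory`): heap size of the driver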
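These parameters relate to the limit in the error as follows. On YARN, each executor (and, in cluster mode, the driver) runs in a container sized at roughly the JVM heap (`executor-memory` / `driver-memory`) plus a memory overhead (`spark.executor.memoryOverhead` / `spark.driver.memoryOverhead`). By default the overhead is max(10% of the heap, 384 MiB). The sketch below estimates that container size and the total memory requested by `num-executors` executors. It assumes the default overhead and a scheduler that rounds requests up to a multiple of `yarn.scheduler.minimum-allocation-mb` (1024 MiB by default). It ignores off-heap and PySpark memory settings, so real clusters may differ. The function names are hypothetical.

```python
import math


def container_request_mib(heap_mib, overhead_mib=None, min_alloc_mib=1024):
    """Approximate the YARN container size (MiB) for one executor or cluster-mode driver.

    Assumptions (not read from any cluster): the default overhead is
    max(int(0.10 * heap), 384) MiB, and the scheduler rounds each request
    up to a multiple of min_alloc_mib. Off-heap and PySpark memory are ignored.
    """
    if overhead_mib is None:
        overhead_mib = max(int(heap_mib * 0.10), 384)
    requested = heap_mib + overhead_mib
    # Round up to the scheduler's allocation granularity.
    return math.ceil(requested / min_alloc_mib) * min_alloc_mib


def total_request_mib(num_executors, heap_mib, **kwargs):
    """Total memory requested from YARN by num_executors identical executors."""
    return num_executors * container_request_mib(heap_mib, **kwargs)


# e.g. spark-submit --executor-memory 6g --num-executors 10 ...
print(container_request_mib(6 * 1024))
print(total_request_mib(10, 6 * 1024))
```

Under these assumptions, `--executor-memory 6g` yields a 7168 MiB request per executor, and `--num-executors 10` asks YARN for 71680 MiB in total. Raising the heap or the overhead raises the per-container limit that the error message reports, at the cost of more total cluster memory.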
