7. Flink Source: collection-based, file-based, socket-based, custom Source (random orders), custom Source (MySQL)


7. Source

7.1. Collection-based

7.2. File-based

7.3. Socket-based

7.4. Custom Source: random orders

7.4.1. Custom Source

7.5. Custom Source: MySQL

7. Source

7.1. Collection-based

Collection-based sources

These are typically used to fabricate data for learning and testing.

env.fromElements(varargs);

env.fromCollection(any collection);

env.generateSequence(from, to);

env.fromSequence(from, to);

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;

/**
 * Collection-based sources.
 *
 * @author tuzuoquan
 * @date 2022/4/1 21:52
 */
public class SourceDemo01_Collection {

    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source
        DataStream<String> ds1 = env.fromElements("hadoop spark flink", "hadoop spark flink");
        DataStream<String> ds2 = env.fromCollection(Arrays.asList("hadoop spark flink", "hadoop spark flink"));
        // generateSequence is deprecated in recent Flink releases; fromSequence is its replacement
        DataStream<Long> ds3 = env.generateSequence(1, 100);
        DataStream<Long> ds4 = env.fromSequence(1, 100);

        //TODO 2.transformation

        //TODO 3.sink
        ds1.print();
        ds2.print();
        ds3.print();
        ds4.print();

        //TODO 4.execute
        env.execute();
    }
}

7.2. File-based

These are also typically used to fabricate data for learning and testing.

env.readTextFile(local or HDFS file/directory); // compressed files work too

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class SourceDemo02_File {

    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source
        // a single file, a directory, and a gzip-compressed file
        DataStream<String> ds1 = env.readTextFile("data/input/words.txt");
        DataStream<String> ds2 = env.readTextFile("data/input/dir");
        DataStream<String> ds3 = env.readTextFile("data/input/wordcount.txt.gz");

        //TODO 2.transformation

        //TODO 3.sink
        ds1.print();
        ds2.print();
        ds3.print();

        //TODO 4.execute
        env.execute();
    }
}

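7.3. Socket-based

Requirements:

1. On node1, run nc -lk 9999 to listen on port 9999 and send typed lines to whoever connects.

nc is short for netcat, a general-purpose network utility. Here it is used to send data to a port.

If the command is not available, install it first:

yum install -y nc

2. Write a Flink streaming application that counts words in real time.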

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

/**
 * @author tuzuoquan
 * @date 2022/4/1 23:49
 */
public class SourceDemo03_Socket {

    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source
        DataStream<String> lines = env.socketTextStream("node1", 9999);

        //TODO 2.transformation
        /* Two-step version: split each line into words, then map each word to (word, 1).
        SingleOutputStreamOperator<String> words = lines.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> out) throws Exception {
                String[] arr = value.split(" ");
                for (String word : arr) {
                    out.collect(word);
                }
            }
        });
        words.map(new MapFunction<String, Tuple2<String, Integer>>() {
            @Override
            public Tuple2<String, Integer> map(String value) throws Exception {
                return Tuple2.of(value, 1);
            }
        });*/

        // Note: the code below merges the two steps above into one.
        // It splits each line into words and emits (word, 1) directly.
        SingleOutputStreamOperator<Tuple2<String, Integer>> wordAndOne =
                lines.flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
                    @Override
                    public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {
                        String[] arr = value.split(" ");
                        for (String word : arr) {
                            out.collect(Tuple2.of(word, 1));
                        }
                    }
                });

        // group by the word (field f0) and sum the counts (field index 1)
        SingleOutputStreamOperator<Tuple2<String, Integer>> result =
                wordAndOne.keyBy(t -> t.f0).sum(1);

        //TODO 3.sink
        result.print();

        //TODO 4.execute
        env.execute();
    }
}
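To see what this job should print for a given input without starting a cluster, the split-then-running-sum logic can be reproduced in plain Java. The sketch below is not Flink code: the class name RunningWordCount and the HashMap standing in for keyed state are illustrative assumptions. It assumes the job runs in streaming mode, where sum(1) emits an updated count for every incoming word.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java illustration of split + keyBy(word).sum(1); hypothetical helper, not Flink API. */
class RunningWordCount {

    /** Returns one "(word,count)" string per input word, in arrival order. */
    static List<String> process(List<String> lines) {
        Map<String, Integer> counts = new HashMap<>(); // stands in for per-key state
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                // add 1 to this word's running total and emit the new total
                int updated = counts.merge(word, 1, Integer::sum);
                emitted.add("(" + word + "," + updated + ")");
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        // Simulates typing two lines into the nc session
        process(List.of("hadoop spark flink", "flink flink")).forEach(System.out::println);
    }
}
```

Each word produces one output record carrying its count so far, so a word typed three times shows up three times with counts 1, 2 and 3. In the real job every printed line is additionally prefixed with the index of the print subtask.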

7.4. Custom Source: random orders

Note: the Order class below uses Lombok, which requires this dependency:

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.2</version>

<scope>provided</scope>

</dependency>

package demo3;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

/**
 * @author tuzuoquan
 * @date 2022/4/2 0:02
 */
@Data
@AllArgsConstructor
@NoArgsConstructor
public class Order {
    private String id;
    private Integer userId;
    private Integer money;
    private Long createTime;
}

7.4.1. Custom Source

Generating random data

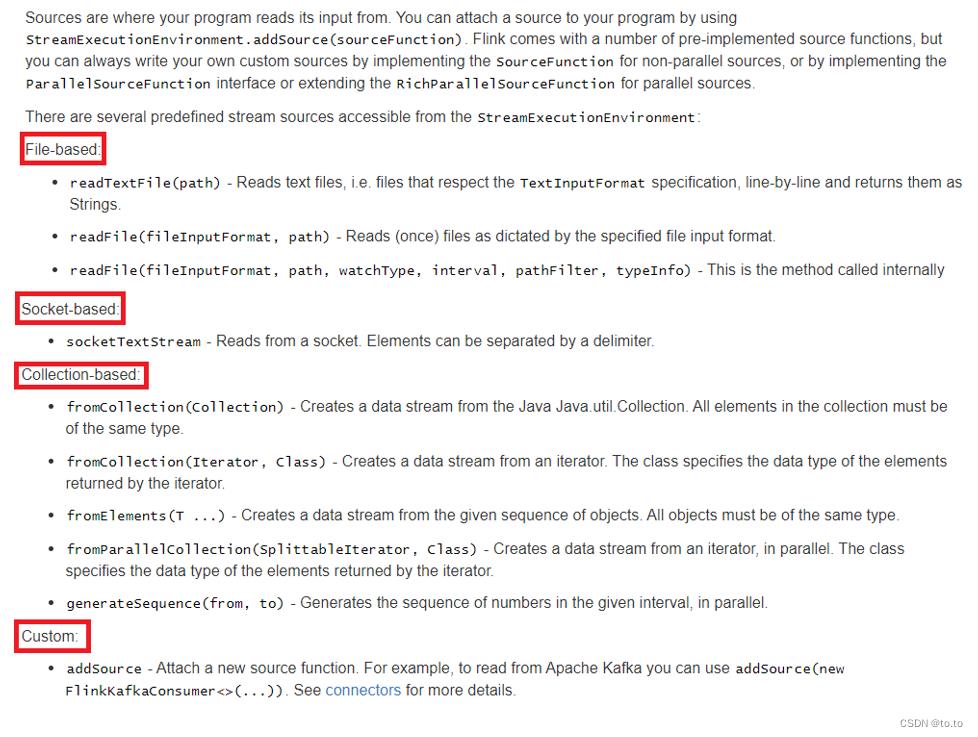

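Flink also provides source interfaces. Implementing one of them gives you a custom source. The interfaces differ in capability:

SourceFunction: non-parallel source (parallelism must be 1)

RichSourceFunction: rich non-parallel source (parallelism must be 1), with open/close lifecycle methods and access to the runtime context

ParallelSourceFunction: parallel source (parallelism can be >= 1)

RichParallelSourceFunction: rich parallel source (parallelism can be >= 1)

Requirement

Generate one random order per second (order ID, user ID, order amount, timestamp).

Constraints:

- Order ID: random (UUID)

- User ID: random (0 - 2)

- Order amount: random (0 - 100)

- Timestamp: the current system time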
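The ranges above map onto java.util.Random with exclusive upper bounds, which is easy to get off by one. The following plain-Java sketch shows only the field generation. The class and method names are illustrative assumptions, not part of the original demo.

```java
import java.util.Random;
import java.util.UUID;

/** Plain-Java sketch of the field generation only; all names here are hypothetical. */
class OrderFieldSketch {

    /** Random order ID in canonical UUID text form (36 characters). */
    static String nextOrderId() {
        return UUID.randomUUID().toString();
    }

    /** nextInt(3) draws from 0, 1, 2: the bound itself is excluded. */
    static int nextUserId(Random random) {
        return random.nextInt(3);
    }

    /** nextInt(101) draws from 0..100, so 100 is a possible amount. */
    static int nextMoney(Random random) {
        return random.nextInt(101);
    }

    /** True if every one of the sampled draws falls inside the required ranges. */
    static boolean rangesHold(long seed, int samples) {
        Random random = new Random(seed);
        for (int i = 0; i < samples; i++) {
            int userId = nextUserId(random);
            int money = nextMoney(random);
            if (userId < 0 || userId > 2 || money < 0 || money > 100) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Random random = new Random();
        System.out.println(nextOrderId() + " user=" + nextUserId(random)
                + " money=" + nextMoney(random) + " ts=" + System.currentTimeMillis());
        System.out.println("ranges hold: " + rangesHold(42L, 10_000));
    }
}
```

Using nextInt(100) instead would silently exclude an amount of exactly 100.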


package demo3;

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

import java.util.Random;
import java.util.UUID;

/**
 * Custom source that emits one random order per second.
 *
 * @author tuzuoquan
 * @date 2022/4/2 0:13
 */
public class SourceDemo04_Customer {

    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source
        // two parallel source instances, each emitting one order per second
        DataStream<Order> orderDS = env.addSource(new MyOrderSource()).setParallelism(2);

        //TODO 2.transformation

        //TODO 3.sink
        orderDS.print();

        //TODO 4.execute
        env.execute();
    }

    public static class MyOrderSource extends RichParallelSourceFunction<Order> {

        // volatile: cancel() is called from a different thread than run()
        private volatile boolean flag = true;

        // Runs the source and generates data
        @Override
        public void run(SourceContext<Order> sourceContext) throws Exception {
            Random random = new Random();
            while (flag) {
                String oid = UUID.randomUUID().toString();
                int userId = random.nextInt(3);    // 0 - 2
                int money = random.nextInt(101);   // 0 - 100
                long createTime = System.currentTimeMillis();
                sourceContext.collect(new Order(oid, userId, money, createTime));
                Thread.sleep(1000);
            }
        }

        // Called when the job receives a cancel command
        @Override
        public void cancel() {
            flag = false;
        }
    }
}

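Output (the source runs with parallelism 2, so two orders are produced each second; the N> prefix is the index of the print subtask):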

1> Order(id=20783def-ede5-4fd3-88b8-79023f936bf2, userId=2, money=13, createTime=1648830057754)

3> Order(id=3c1daa2f-ff8e-42fc-bdfa-b88de08df592, userId=0, money=86, createTime=1648830057754)

2> Order(id=e55ee291-6ecf-4545-b85e-ff9a22726e59, userId=1, money=93, createTime=1648830058765)

4> Order(id=60aafc7f-39a1-4911-8d02-bb3f0de99024, userId=0, money=60, createTime=1648830058765)

3> Order(id=77ccdb4f-3e67-46f0-9920-b285c9c78fd3, userId=2, money=36, createTime=1648830059775)

5> Order(id=c1635e13-b134-424f-90b8-d2f738a7e3bc, userId=0, money=42, createTime=1648830059776)

6> Order(id=e4cbc59c-a3ab-4969-8b7c-828f5cb72a38, userId=0, money=59, createTime=1648830060778)
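The run()/cancel() pair in the source relies on one thread looping while another thread flips a flag. The sketch below isolates that pattern in plain Java, assuming (as with Flink sources) that cancel is invoked from a different thread than the loop. CancellableLoop and its methods are hypothetical names used only for illustration, and the 10 ms sleep replaces the 1 second pause to keep the demo quick.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Plain-Java sketch of the run()/cancel() flag pattern; not Flink API. */
class CancellableLoop implements Runnable {

    // volatile makes the write in cancel() visible to the thread executing run()
    private volatile boolean running = true;
    private final AtomicInteger emitted = new AtomicInteger();

    @Override
    public void run() {
        while (running) {
            emitted.incrementAndGet(); // stands in for sourceContext.collect(...)
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    void cancel() {
        running = false;
    }

    int emittedCount() {
        return emitted.get();
    }

    /** Starts the loop, cancels it after waitMillis, and reports whether it stopped in time. */
    static boolean runAndCancel(long waitMillis) {
        CancellableLoop loop = new CancellableLoop();
        Thread worker = new Thread(loop, "source-thread");
        worker.start();
        try {
            Thread.sleep(waitMillis);
            loop.cancel();          // called from the main thread, like Flink's cancel()
            worker.join(1000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive() && loop.emittedCount() > 0;
    }

    public static void main(String[] args) {
        System.out.println("stopped cleanly: " + runAndCancel(50));
    }
}
```

Without volatile, the looping thread is not guaranteed to observe the updated flag, which is why a plain Boolean field is a risky choice for this purpose.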

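7.5. Custom Source: MySQL

In real projects, incoming data often has to be matched against rules stored in MySQL. In that case a custom Flink source can read the data from MySQL.

Start with a simple requirement:

1. Load data from MySQL continuously.

2. When the data in MySQL changes, the changes must be loaded as well (the source below does this by re-running the query every 5 seconds).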

package demo4;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

/**
 * @author tuzuoquan
 * @date 2022/4/14 9:49
 */
@Data
@NoArgsConstructor
@AllArgsConstructor
public class Student {
    private Integer id;
    private String name;
    private Integer age;
}

package demo4;

import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;

/**
 * Custom source that polls the t_student table in MySQL.
 *
 * @author tuzuoquan
 * @date 2022/4/14 9:51
 */
public class MySQLSource extends RichParallelSourceFunction<Student> {

    // volatile: cancel() is called from a different thread than run()
    private volatile boolean flag = true;
    private Connection conn = null;
    private PreparedStatement ps = null;
    private ResultSet rs = null;

    /**
     * open() runs once per source instance, which makes it the right place to acquire resources.
     */
    @Override
    public void open(Configuration parameters) throws Exception {
        conn = DriverManager.getConnection("jdbc:mysql://localhost:3306/bigdata",
                "root", "root");
        String sql = "select id,name,age from t_student";
        ps = conn.prepareStatement(sql);
    }

    @Override
    public void run(SourceContext<Student> ctx) throws Exception {
        while (flag) {
            // re-run the query on every pass so that changed rows are picked up
            rs = ps.executeQuery();
            while (rs.next()) {
                int id = rs.getInt("id");
                String name = rs.getString("name");
                int age = rs.getInt("age");
                ctx.collect(new Student(id, name, age));
            }
            Thread.sleep(5000);
        }
    }

    /**
     * Stops data generation when a cancel command is received.
     */
    @Override
    public void cancel() {
        flag = false;
    }

    /**
     * Releases resources, in reverse order of acquisition.
     */
    @Override
    public void close() throws Exception {
        if (rs != null) rs.close();
        if (ps != null) ps.close();
        if (conn != null) conn.close();
    }
}

/*
CREATE TABLE `t_student` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) DEFAULT NULL,
  `age` int(11) DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=7 DEFAULT CHARSET=utf8;

INSERT INTO `t_student` VALUES ('1', 'jack', '18');
INSERT INTO `t_student` VALUES ('2', 'tom', '19');
INSERT INTO `t_student` VALUES ('3', 'rose', '20');
INSERT INTO `t_student` VALUES ('4', 'tom', '19');
INSERT INTO `t_student` VALUES ('5', 'jack', '18');
INSERT INTO `t_student` VALUES ('6', 'rose', '20');
*/
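JDBC resources are normally released in the reverse of the order in which they were acquired: result set, then statement, then connection. Java's try-with-resources applies that order automatically. The sketch below demonstrates it with stand-in resources instead of a real database. The Tracked class and the names "conn", "ps" and "rs" are illustrative assumptions only.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of close ordering with try-with-resources; no database involved. */
class CloseOrderDemo {

    /** Stand-in for a JDBC resource: records its name when closed. */
    static final class Tracked implements AutoCloseable {
        private final String name;
        private final List<String> log;

        Tracked(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add(name);
        }
    }

    /** Opens three resources in JDBC order and returns the order in which they were closed. */
    static List<String> closeOrder() {
        List<String> log = new ArrayList<>();
        try (Tracked conn = new Tracked("conn", log);
             Tracked ps = new Tracked("ps", log);
             Tracked rs = new Tracked("rs", log)) {
            // the query results would be consumed here
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(closeOrder()); // prints [rs, ps, conn]
    }
}
```

In a source like the one above, the connection and statement have to outlive a single method call, so they are closed by hand in close(). A per-query ResultSet is a natural candidate for try-with-resources inside run().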
