HBase RegionServer挂掉导致集群不可用问题

Posted 悟初境

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了HBase RegionServer挂掉导致集群不可用问题相关的知识,希望对你有一定的参考价值。

生产中HBase RegionServer挂掉一个,整个集群却不可用了,排查下问题原因。

环境准备

限于资源,我们使用一台Linux机器,采用HBase的假分布式模式部署5个RegionServer, 文档.

- HBase版本:1.2.0

- Hadoop版本:2.7.7

hostname 127.0.0.1

hbase-site.xml

<configuration>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/home/service/hbase/hbase-1.2.0/tmp</value>

</property>

</configuration>

hbase起多个region server

bin/start-hbase.sh

bin/local-regionservers.sh start 2 3 4 5

# jps -l | grep hbase

25204 org.apache.hadoop.hbase.master.HMaster

26711 org.apache.hadoop.hbase.regionserver.HRegionServer

26409 org.apache.hadoop.hbase.regionserver.HRegionServer

25051 org.apache.hadoop.hbase.zookeeper.HQuorumPeer

25387 org.apache.hadoop.hbase.regionserver.HRegionServer

26556 org.apache.hadoop.hbase.regionserver.HRegionServer

26941 org.apache.hadoop.hbase.regionserver.HRegionServer

通过 http://127.0.0.1:16010/master-status 查看Hbase界面。

测试步骤

建一张带有预定义分区的表,写入数据,将数据分布在不同的region,同时分布在不同的 RegionServer。

测试读取、写入不同region的数据,应该正常。

杀掉对应的 RegionServer,在region迁移完成之前,再次读取、写入,应该超时报错。

RegionServer迁移完成,再次读取写入应该正常。

恢复 RegionServer ,region应该重新分布。

具体执行

1.建表

RowKey设置为 8个字节,为递增的数字。

预分区:因为我们起了5个 RegionServer,那就预分区10个吧: 00000000~09000000

create 'test_rs', NAME => 'C', TTL => 5184000, VERSIONS => 1, DATA_BLOCK_ENCODING => 'PREFIX' ,

SPLITS=>["00000000", "10000000", "20000000", "30000000", "40000000", "50000000", "60000000", "70000000", "80000000", "90000000"]

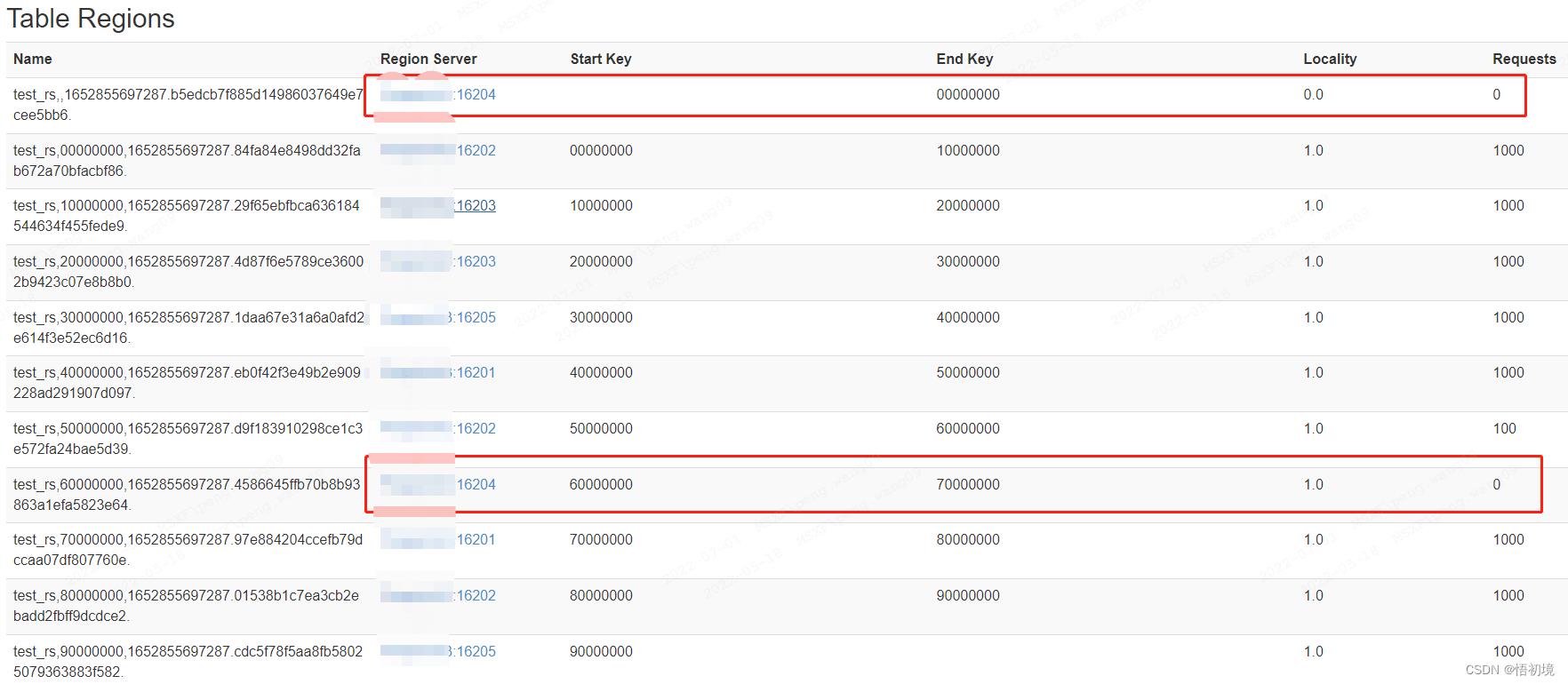

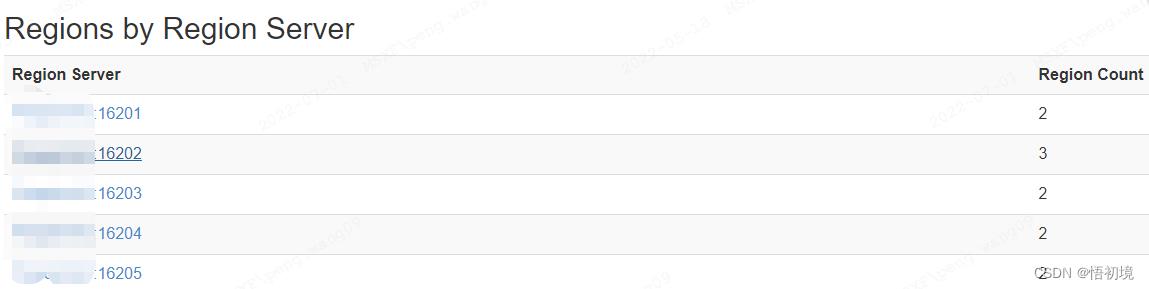

建完后总共11个region,分布均匀:http://127.0.0.1:16010/table.jsp?name=test_rs

2.写入数据

每个分区可以写入1000万条数据,我们这里先各写入 10000 条, 每条数据8K,总共800MB数据。

@Test

public void insert()

int step = 10000000;

HBaseHelper.execute(table ->

for (int i = 0; i < 10; i++)

int start = i * step;

int n = 10000;

int end = start + n;

List<Put> puts = new ArrayList<>(n);

for (int j = start; j < end; j++)

Put p = getPut(j);

puts.add(p);

System.out.println("批次:" + i);

table.put(puts);

table.close();

);

private static Put getPut(int j)

byte[] rowKey = String.format("%08d", j).getBytes(StandardCharsets.UTF_8);

Put p = new Put(rowKey);

// 不用WAL

p.setDurability(Durability.SKIP_WAL);

p.addColumn(CF, QUALIFIER, randomValue());

return p;

private static byte[] randomValue()

StringBuilder sb = new StringBuilder();

for (int i = 0; i < 500; i++)

sb.append(UUID.randomUUID());

return sb.toString().getBytes(StandardCharsets.UTF_8);

为了让数据都落盘,我们flush一下:

flush 'test_rs'

写入完成,我们验证数据是否分布正确,查看Master页面。

3.正常读取

目前正常读取

@Test

public void scan()

HBaseHelper.execute(table ->

byte[] start = "00000000".getBytes(StandardCharsets.UTF_8);

byte[] end = "99999999".getBytes(StandardCharsets.UTF_8);

ResultScanner scanner = table.getScanner(new Scan(start, end));

int cnt = 0;

for (Result r : scanner)

cnt++;

assertEquals(10000, cnt);

);

4.杀掉一个RegionServer,在region迁移完成之前,再次读取、写入,应该超时报错

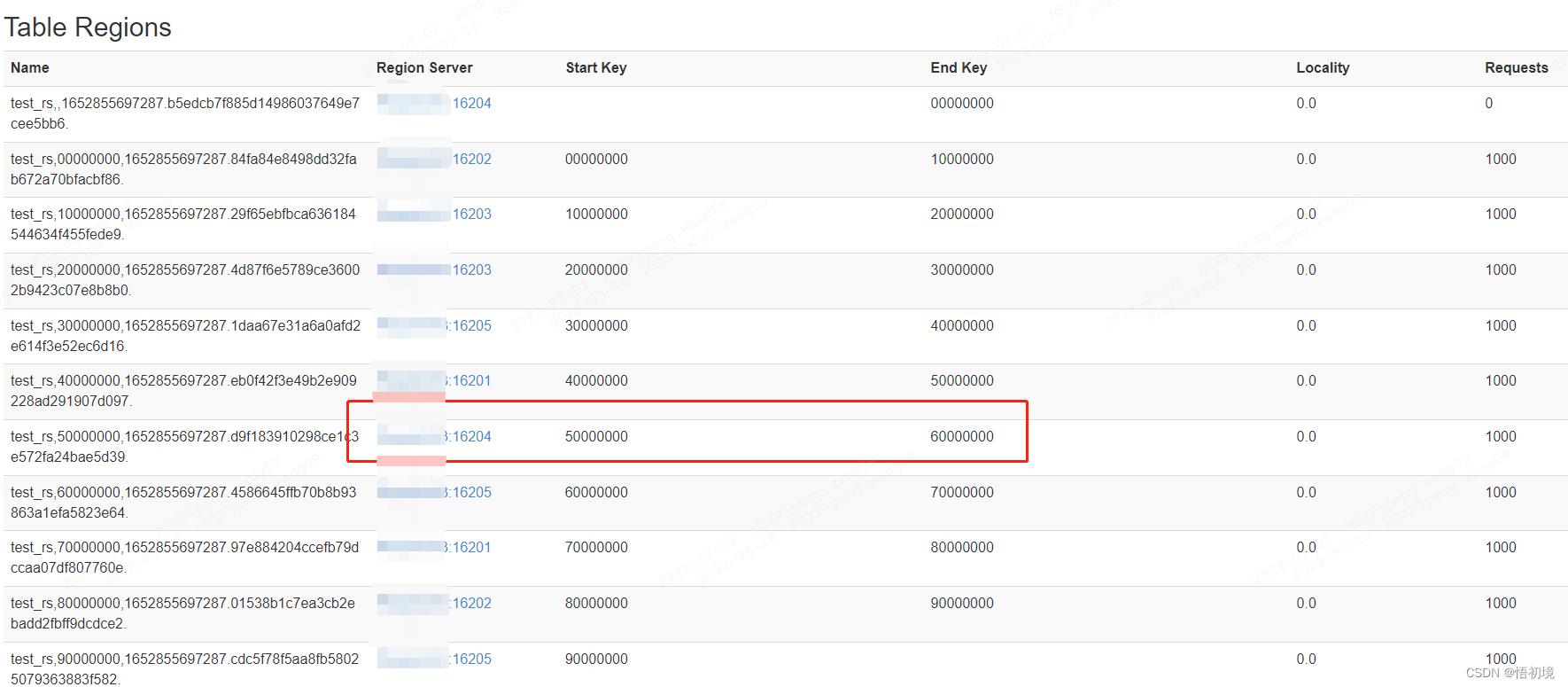

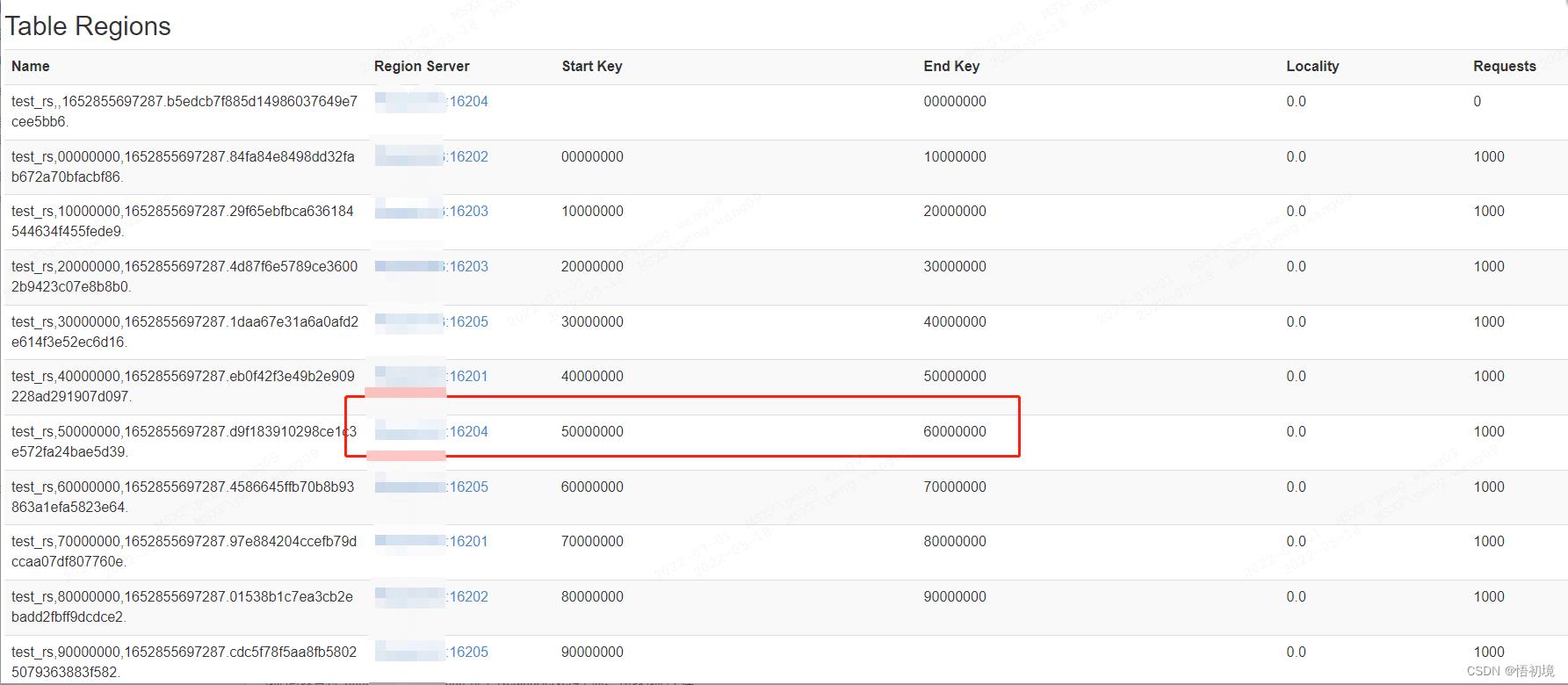

先看下之前的Region分布:

我们可以看到 50000000 - 60000000位于 RegionServer4上面。 所以我们干掉

RegionServer4,直接杀进程。

# jps -lm | grep hbase

25204 org.apache.hadoop.hbase.master.HMaster start

26711 org.apache.hadoop.hbase.regionserver.HRegionServer -D hbase.regionserver.port=16204 -D hbase.regionserver.info.port=16304 start

26409 org.apache.hadoop.hbase.regionserver.HRegionServer -D hbase.regionserver.port=16202 -D hbase.regionserver.info.port=16302 start

32041 org.jruby.Main -X+O /home/service/hbase/hbase-1.2.0/bin/../bin/hirb.rb

25051 org.apache.hadoop.hbase.zookeeper.HQuorumPeer start

25387 org.apache.hadoop.hbase.regionserver.HRegionServer -D hbase.regionserver.port=16201 -D hbase.regionserver.info.port=16301 start

26556 org.apache.hadoop.hbase.regionserver.HRegionServer -D hbase.regionserver.port=16203 -D hbase.regionserver.info.port=16303 start

26941 org.apache.hadoop.hbase.regionserver.HRegionServer -D hbase.regionserver.port=16205 -D hbase.regionserver.info.port=16305 start

# 不给进程反映时间

# kill -9 26711

事实证明,杀掉进程后并没有session超时的90秒钟,HBase立马做了Region迁移。而在HDFS上的数据没有移动,因为我们只有一个副本,一个HDFS节点,也不需要移动,所以很快。

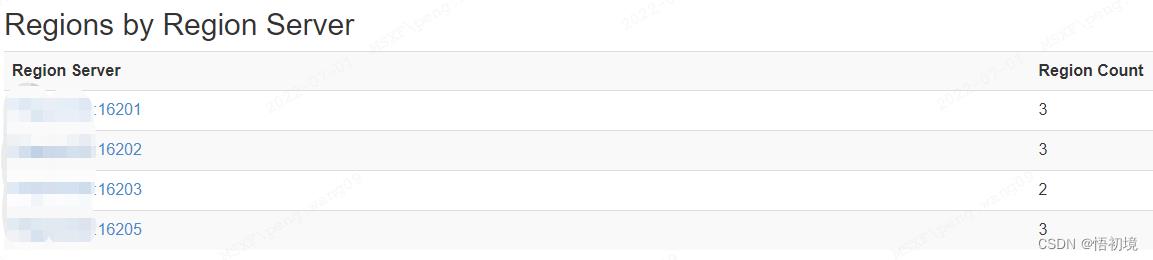

我们再将RegionServer4起起来:

bin/local-regionservers.sh start 4

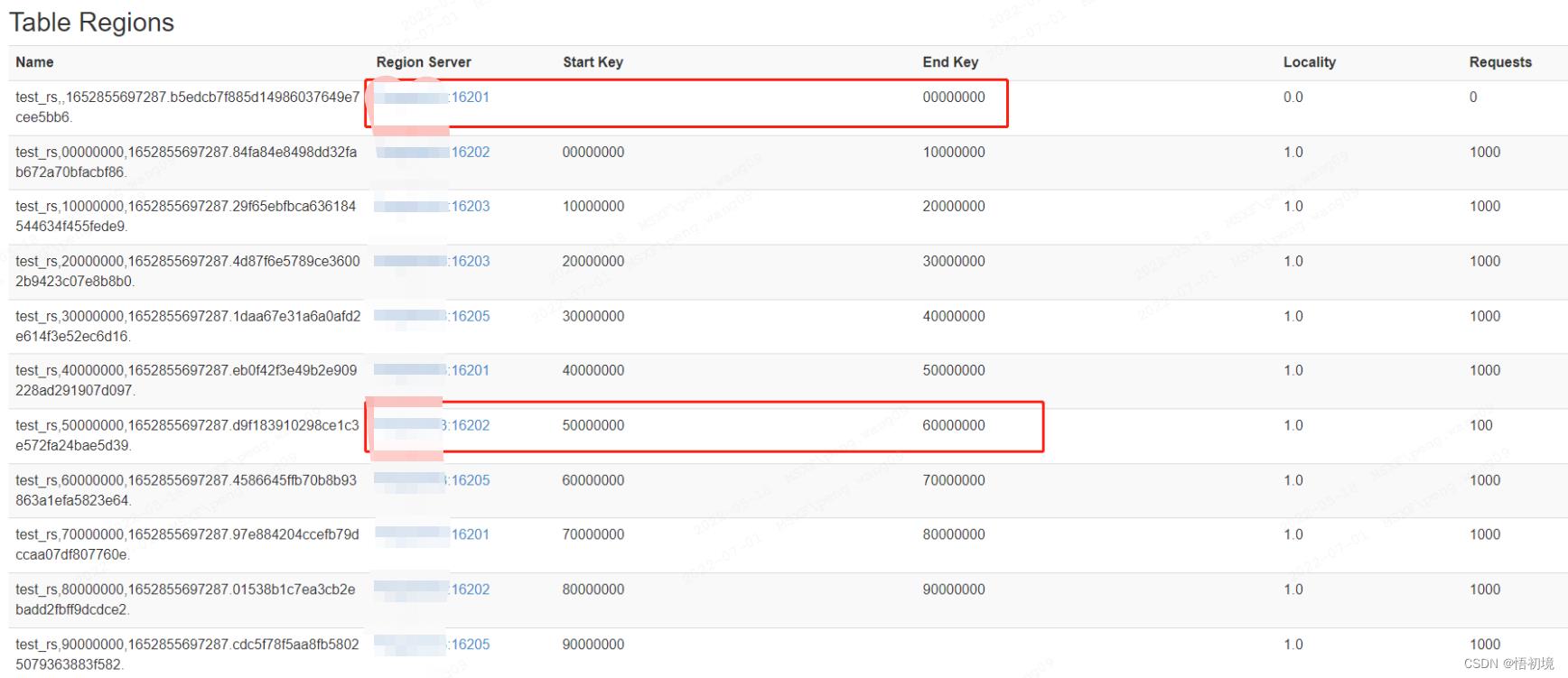

过了一会,region重新分布

当手动删除ZK上region server的节点

[zk: host01:2181(CONNECTED) 8] delete /hbase/rs/host01,16201,1652781432440

[zk: host01:2181(CONNECTED) 9] ls /hbase/rs

[host01,16202,1652781543385, host01,16203,1652781544653, host01,16204,1652856885868, host01,16205,1652781547086]

RegionServer也会自动把自己杀掉

2022-05-18 15:17:43,853 INFO [regionserver/host01/127.0.0.1:16201.logRoller] regionserver.LogRoller: LogRoller exiting.

2022-05-18 15:17:43,856 INFO [regionserver/host01/127.0.0.1:16201] ipc.RpcServer: Stopping server on 16201

2022-05-18 15:17:43,856 INFO [RpcServer.listener,port=16201] ipc.RpcServer: RpcServer.listener,port=16201: stopping

2022-05-18 15:17:43,857 INFO [RpcServer.responder] ipc.RpcServer: RpcServer.responder: stopped

2022-05-18 15:17:43,857 INFO [RpcServer.responder] ipc.RpcServer: RpcServer.responder: stopping

2022-05-18 15:17:43,859 INFO [regionserver/host01/127.0.0.1:16201] zookeeper.ZooKeeper: Session: 0x180d17334390002 closed

2022-05-18 15:17:43,859 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down

2022-05-18 15:17:43,859 INFO [regionserver/host01/127.0.0.1:16201] regionserver.HRegionServer: stopping server host01,16201,1652781432440; zookeeper connection closed.

2022-05-18 15:17:43,859 INFO [regionserver/host01/127.0.0.1:16201] regionserver.HRegionServer: regionserver/host01/127.0.0.1:16201 exiting

2022-05-18 15:17:43,859 ERROR [main] regionserver.HRegionServerCommandLine: Region server exiting

java.lang.RuntimeException: HRegionServer Aborted

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:68)

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)

at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:2667)

2022-05-18 15:17:43,861 INFO [Thread-6] regionserver.ShutdownHook: Shutdown hook starting; hbase.shutdown.hook=true; fsShutdownHook=org.apache.hadoop.fs.FileSystem$Cache$ClientFinalizer@42e3ede4

2022-05-18 15:17:43,861 INFO [Thread-6] regionserver.ShutdownHook: Starting fs shutdown hook thread.

2022-05-18 15:17:43,864 INFO [Thread-6] regionserver.ShutdownHook: Shutdown hook finished.

过一会同样会重新分布。

杀掉RegionServer进程

ZK立马就感应到连接断开,并删除了 /hbase/rs/下对应RS的节点。

2022-05-18 16:19:46,809 WARN [NioserverCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: caught end of stream exception

EndOfStreamException: Unable to read additional data from client sessionid 0x180d17334390098, likely client has closed socket

at org.apache.zookeeper.server.NIOServerCnxn.doIO(NIOServerCnxn.java:228)

at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:208)

at java.lang.Thread.run(Thread.java:748)

2022-05-18 16:19:46,810 INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: Closed socket connection for client /127.0.0.1:49070 which had sessionid 0x180d17334390098

2022-05-18 16:19:46,810 WARN [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: caught end of stream exception

EndOfStreamException: Unable to read additional data from client sessionid 0x180d17334390099, likely client has closed socket

at org.apache.zookeeper.server.NIOServerCnxn.doIO(NIOServerCnxn.java:228)

at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:208)

at java.lang.Thread.run(Thread.java:748)

2022-05-18 16:19:46,810 INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: Closed socket connection for client /127.0.0.1:49072 which had sessionid 0x180d17334390099

2022-05-18 16:19:47,855 INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxnFactory: Accepted socket connection from /127.0.0.1:49442

2022-05-18 16:19:47,857 INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.ZooKeeperServer: Client attempting to establish new session at /127.0.0.1:49442

2022-05-18 16:19:47,858 INFO [SyncThread:0] server.ZooKeeperServer: Established session 0x180d173343900a0 with negotiated timeout 30000 for client /127.0.0.1:49442

2022-05-18 16:19:48,192 WARN [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: caught end of stream exception

EndOfStreamException: Unable to read additional data from client sessionid 0x180d173343900a0, likely client has closed socket

at org.apache.zookeeper.server.NIOServerCnxn.doIO(NIOServerCnxn.java:228)

at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:208)

at java.lang.Thread.run(Thread.java:748)

2022-05-18 16:19:48,193 INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181] server.NIOServerCnxn: Closed socket connection for client /127.0.0.1:49442 which had sessionid 0x180d173343900a0

master上仅仅过了1秒,就开始重分配region,下面是完整日志:

2022-05-18 16:19:47,862 INFO [main-EventThread] zookeeper.RegionServerTracker: RegionServer ephemeral node deleted, processing expiration [host01,16203,1652861323251]

2022-05-18 16:19:47,966 INFO [ProcedureExecutor-0] procedure.ServerCrashProcedure: Start processing crashed host01,16203,1652861323251

2022-05-18 16:19:48,180 INFO [ProcedureExecutor-0] master.SplitLogManager: dead splitlog workers [host01,16203,1652861323251]

2022-05-18 16:19:48,180 INFO [ProcedureExecutor-0] master.SplitLogManager: Started splitting 1 logs in [hdfs://localhost:9000/hbase/WALs/host01,16203,1652861323251-splitting] for [host01,16203,1652861323251]

2022-05-18 16:19:48,183 INFO [main-EventThread] coordination.SplitLogManagerCoordination: task /hbase/splitWAL/WALs%2Fhost01%2C16203%2C1652861323251-splitting%2Fhost01%252C16203%252C1652861323251.default.1652861541261 acquired by host01,16205,1652781547086

2022-05-18 16:19:48,743 INFO [host01,16000,1652781431501_splitLogManager__ChoreService_1] master.SplitLogManager: total tasks = 1 unassigned = 0 tasks=/hbase/splitWAL/WALs%2Fhost01%2C16203%2C1652861323251-splitting%2Fhost01%252C16203%252C1652861323251.default.1652861541261=last_update = 1652861988208 last_version = 2 cur_worker_name = host01,16205,1652781547086 status = in_progress incarnation = 0 resubmits = 0 batch = installed = 1 done = 0 error = 0

2022-05-18 16:19:52,218 INFO [main-EventThread] coordination.SplitLogManagerCoordination: task /hbase/splitWAL/WALs%2Fhost01%2C16203%2C1652861323251-splitting%2Fhost01%252C16203%252C1652861323251.default.1652861541261 entered state: DONE host01,16205,1652781547086

2022-05-18 16:19:52,222 INFO [main-EventThread] wal.WALSplitter: Archived processed log hdfs://localhost:9000/hbase/WALs/host01,16203,1652861323251-splitting/host01%2C16203%2C1652861323251.default.1652861541261 to hdfs://localhost:9000/hbase/oldWALs/host01%2C16203%2C1652861323251.default.1652861541261

2022-05-18 16:19:52,222 INFO [main-EventThread] coordination.SplitLogManagerCoordination: Done splitting /hbase/splitWAL/WALs%2Fhost01%2C16203%2C1652861323251-splitting%2Fhost01%252C16203%252C1652861323251.default.1652861541261

2022-05-18 16:19:52,223 INFO [ProcedureExecutor-0] master.SplitLogManager: finished splitting (more than or equal to) 83 bytes in 1 log files in [hdfs://localhost:9000/hbase/WALs/host01,16203,1652861323251-splitting] in 4043ms

2022-05-18 16:19:52,325 INFO [ProcedureExecutor-0] master.RegionStates: Transition 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OPEN, ts=1652861541361, server=host01,16203,1652861323251 to 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OFFLINE, ts=1652861992325, server=host01,16203,1652861323251

2022-05-18 16:19:52,325 INFO [ProcedureExecutor-0] master.RegionStates: Transition 29f65ebfbca636184544634f455fede9 state=OPEN, ts=1652861541362, server=host01,16203,1652861323251 to 29f65ebfbca636184544634f455fede9 state=OFFLINE, ts=1652861992325, server=host01,16203,1652861323251

2022-05-18 16:19:52,325 INFO [ProcedureExecutor-0] master.RegionStates: Transition 97e884204ccefb79dccaa07df807760e state=OPEN, ts=1652861541362, server=host01,16203,1652861323251 to 97e884204ccefb79dccaa07df807760e state=OFFLINE, ts=1652861992325, server=host01,16203,1652861323251

2022-05-18 16:19:52,325 INFO [ProcedureExecutor-0] master.AssignmentManager: Bulk assigning 3 region(s) across 3 server(s), round-robin=true

2022-05-18 16:19:52,326 INFO [host01,16000,1652781431501-GeneralBulkAssigner-0] master.AssignmentManager: Assigning 1 region(s) to host01,16202,1652781543385

2022-05-18 16:19:52,326 INFO [host01,16000,1652781431501-GeneralBulkAssigner-1] master.AssignmentManager: Assigning 1 region(s) to host01,16204,1652856885868

2022-05-18 16:19:52,326 INFO [host01,16000,1652781431501-GeneralBulkAssigner-2] master.AssignmentManager: Assigning 1 region(s) to host01,16205,1652781547086

2022-05-18 16:19:52,331 INFO [host01,16000,1652781431501-GeneralBulkAssigner-1] master.RegionStates: Transition 97e884204ccefb79dccaa07df807760e state=OFFLINE, ts=1652861992326, server=host01,16203,1652861323251 to 97e884204ccefb79dccaa07df807760e state=PENDING_OPEN, ts=1652861992331, server=host01,16204,1652856885868

2022-05-18 16:19:52,331 INFO [host01,16000,1652781431501-GeneralBulkAssigner-0] master.RegionStates: Transition 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OFFLINE, ts=1652861992326, server=host01,16203,1652861323251 to 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=PENDING_OPEN, ts=1652861992331, server=host01,16202,1652781543385

2022-05-18 16:19:52,331 INFO [host01,16000,1652781431501-GeneralBulkAssigner-2] master.RegionStates: Transition 29f65ebfbca636184544634f455fede9 state=OFFLINE, ts=1652861992326, server=host01,16203,1652861323251 to 29f65ebfbca636184544634f455fede9 state=PENDING_OPEN, ts=1652861992331, server=host01,16205,1652781547086

2022-05-18 16:19:52,342 INFO [AM.ZK.Worker-pool2-t241] master.RegionStates: Transition 29f65ebfbca636184544634f455fede9 state=PENDING_OPEN, ts=1652861992331, server=host01,16205,1652781547086 to 29f65ebfbca636184544634f455fede9 state=OPENING, ts=1652861992342, server=host01,16205,1652781547086

2022-05-18 16:19:52,342 INFO [AM.ZK.Worker-pool2-t242] master.RegionStates: Transition 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=PENDING_OPEN, ts=1652861992331, server=host01,16202,1652781543385 to 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OPENING, ts=1652861992342, server=host01,16202,1652781543385

2022-05-18 16:19:52,345 INFO [AM.ZK.Worker-pool2-t243] master.RegionStates: Transition 97e884204ccefb79dccaa07df807760e state=PENDING_OPEN, ts=1652861992331, server=host01,16204,1652856885868 to 97e884204ccefb79dccaa07df807760e state=OPENING, ts=1652861992345, server=host01,16204,1652856885868

2022-05-18 16:19:52,398 INFO [AM.ZK.Worker-pool2-t244] master.RegionStates: Transition 29f65ebfbca636184544634f455fede9 state=OPENING, ts=1652861992342, server=host01,16205,1652781547086 to 29f65ebfbca636184544634f455fede9 state=OPEN, ts=1652861992398, server=host01,16205,1652781547086

2022-05-18 16:19:52,402 INFO [AM.ZK.Worker-pool2-t245] master.RegionStates: Transition 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OPENING, ts=1652861992342, server=host01,16202,1652781543385 to 01538b1c7ea3cb2ebadd2fbff9dcdce2 state=OPEN, ts=1652861992402, server=host01,16202,1652781543385

2022-05-18 16:19:52,402 INFO [AM.ZK.Worker-pool2-t246] master.RegionStates: Offlined 29f65ebfbca636184544634f455fede9 from host01,16203,1652861323251

2022-05-18 16:19:52,404 INFO [AM.ZK.Worker-pool2-t249] master.RegionStates: Offlined 01538b1c7ea3cb2ebadd2fbff9dcdce2 from host01,16203,1652861323251

2022-05-18 16:19:52,500 INFO [AM.ZK.Worker-pool2-t250] master.RegionStates: Transition 97e884204ccefb79dccaa07df807760e state=OPENING, ts=1652861992345, server=host01,16204,1652856885868 to 97e884204ccefb79dccaa07df807760e state=OPEN, ts=1652861992500, server=host01,16204,1652856885868

2022-05-18 16:19:52,501 INFO [AM.ZK.Worker-pool2-t252] master.RegionStates: Offlined 97e884204ccefb79dccaa07df807760e from host01,16203,1652861323251

2022-05-18 16:19:52,502 INFO [ProcedureExecutor-0] master.AssignmentManager: Bulk assigning done

2022-05-18 16:19:52,604 INFO [ProcedureExecutor-0] procedure.ServerCrashProcedure: Finished processing of crashed host01,16203,1652861323251

高压下的反应

将HBase内存调到200M

export HBASE_HEAPSIZE=200M

export HBASE_OFFHEAPSIZE=200M

然后,朝一个RS写入大量数据

/**

* 16byte * 50000 = 800K

*/

private static Put getBigPut(int j)

return getPut(j, 50000);

@Test

public void writeBulkData() throws InterruptedException

ExecutorService pool = Executors.newFixedThreadPool(225);

int bound = 10000000;

int start = 20000000;

int end = start + bound;

TableName tableName = TableName.valueOf(TABLE);

// 写线程

for (int i = 0; i < 200; i++)

pool.execute(() ->

// 异步写入

HBaseHelper.executeAsync(multiplexer ->

// 写入800M

int n = 1000;

Random random = new Random();

for (int j = 0; j < n; j++)

HBaseHelper.withTime("Put", () ->

int rowKey = start + random.nextInt(bound);

multiplexer.put(tableName, getBigPut(rowKey));

);

)以上是关于HBase RegionServer挂掉导致集群不可用问题的主要内容,如果未能解决你的问题,请参考以下文章