Pytorch 入门

Posted wang豪

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Pytorch 入门相关的知识,希望对你有一定的参考价值。

Pytorch 入门

文章目录

参考

一、常见的Tensor形式

- scaler

通常为一个数值

x = tensor(42.)

- vector

常见的向量

v = tensor([1,2,3])

- matrix

一般是矩阵,通常是多维的

M = tensor([[1,2],[4,5]])

- n-dimensional tensor

通常指更高纬度的表示

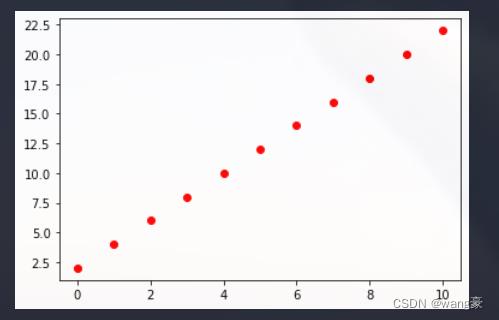

实现简单的线性回归Demo

主要通过该案例去理解Pytorch中的autograd机制

训练模型并保存模型

import torch

import torch.nn as nn

import numpy as np

# 数据准备

x_values = [i for i in range(11)]

x_train = np.array(x_values, dtype=np.float32)

x_train = x_train.reshape(-1, 1)

y_values = [i*2 + 2 for i in range(11)]

y_train = np.array(y_values, dtype=np.float32)

y_train = y_train.reshape(-1,1)

class LinearRegressionModel(nn.Module):

def __init__(self, input_dim, output_dim):

super(LinearRegressionModel, self).__init__()

self.linear = nn.Linear(input_dim, output_dim)

def forward(self, x):

out = self.linear(x)

return out

input_dim = 1

output_dim = 1

model = LinearRegressionModel(input_dim, output_dim)

epochs = 1000

learning_rate = 0.01

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

criterion = nn.MSELoss()

# 模型训练

for epoch in range(epochs):

epoch += 1

# 注意转行成tensor

inputs = torch.from_numpy(x_train)

labels = torch.from_numpy(y_train)

# 梯度要清零每一次迭代

optimizer.zero_grad()

# 前向传播

outputs = model(inputs)

# 计算损失

loss = criterion(outputs, labels)

# 返向传播

loss.backward()

# 更新权重参数

optimizer.step()

if epoch % 50 == 0:

print('epoch , loss '.format(epoch, loss.item()))

torch.save(model.state_dict(), 'model.pkl')

加载模型并预测

input_dim = 1

output_dim = 1

model = LinearRegressionModel(input_dim, output_dim)

model.load_state_dict(torch.load('model.pkl'))

import matplotlib.pyplot as plt

pred = model(torch.from_numpy(x_train).requires_grad_()).data.numpy()

plt.plot(x_values, pred, 'ro')

预测结果

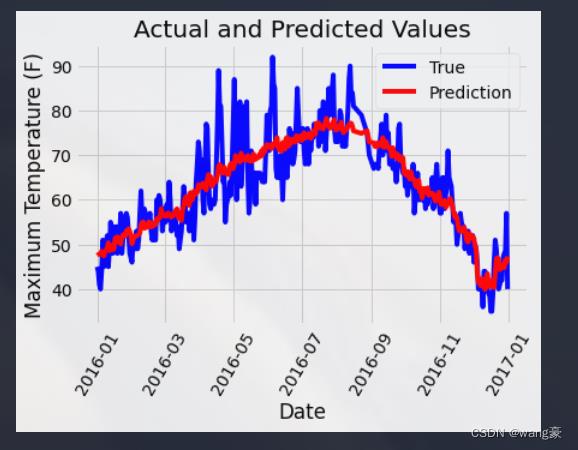

用神经网络进行气温预测

数据处理

导入数据

import numpy as np

import torch

import pandas as pd

import torch.optim as optim

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')

features = pd.read_csv("./temps.csv")

print(features.head())

对时间数据进行转化并可视化

import datetime

years = features["year"]

months = features["month"]

days = features["day"]

# datetime格式

dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]

dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in dates]

plt.style.use('fivethirtyeight')

# 设置布局

fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(nrows=2, ncols=2, figsize = (10,10))

fig.autofmt_xdate(rotation = 45)

# 标签值

ax1.plot(dates, features['actual'])

ax1.set_xlabel(''); ax1.set_ylabel('Temperature'); ax1.set_title('Max Temp')

# 昨天

ax2.plot(dates, features['temp_1'])

ax2.set_xlabel(''); ax2.set_ylabel('Temperature'); ax2.set_title('Previous Max Temp')

# 前天

ax3.plot(dates, features['temp_2'])

ax3.set_xlabel('Date'); ax3.set_ylabel('Temperature'); ax3.set_title('Two Days Prior Max Temp')

ax4.plot(dates, features['friend'])

ax4.set_xlabel('Date'); ax4.set_ylabel('Temperature'); ax4.set_title('Friend Estimate')

plt.tight_layout(pad=2)

获得训练集

# 对部分数据进行独热编码

features = pd.get_dummies(features)

#标签

labels = np.array(features['actual'])

# 在特征中去掉标签

features = features.drop('actual', axis = 1)

# 名字单独保存

features_list = list(features.columns)

# 转换成合适的格式

features = np.array(features)

# 标准化处理

from sklearn.preprocessing import StandardScaler

input_features = StandardScaler().fit_transform(features)

# 获得训练集和测试集

x = torch.tensor(input_features, dtype = float)

y = torch.tensor(labels, dtype = float)

在处理好数据后我们可以通过下面两种方式搭建模型

1. 较为复杂的构建形式(有助于理解)

下面我们手动去进行梯度下降,而不是使用torch 中内置的方法。

## 权重参数初始化

weights = torch.randn((14, 128), dtype = float, requires_grad = True)

biases = torch.randn(128, dtype = float, requires_grad = True)

weights2 = torch.randn((128, 1), dtype = float, requires_grad = True)

biases2 = torch.randn(1, dtype = float, requires_grad = True)

learning_rate = 0.001

losses = []

for i in range(10000):

# 计算隐层

hidden = x.mm(weights) + biases

# 加入激活函数

hidden = torch.relu(hidden)

# 计算输出层

output = hidden.mm(weights2) + biases2

# 计算损失

loss = torch.mean((output - y) ** 2)

losses.append(loss.data.numpy())

# 计算梯度

loss.backward()

#更新参数

weights.data.add_(- learning_rate * weights.grad.data)

biases.data.add_(- learning_rate * biases.grad.data)

weights2.data.add_(- learning_rate * weights2.grad.data)

biases2.data.add_(- learning_rate * biases2.grad.data)

# 清空梯度

weights.grad.zero_()

biases.grad.zero_()

weights2.grad.zero_()

biases2.grad.zero_()

# 打印损失值

if i % 100 == 0:

print('loss:', loss)

2.较为简单的构建方式

可以将这种写法跟上面的方法一进行对比

input_size = input_features.shape[1]

hidden_size = 128

output_size = 1

batch_size = 16

my_nn = torch.nn.Sequential(

torch.nn.Linear(input_size, hidden_size),

torch.nn.Sigmoid(),

torch.nn.Linear(hidden_size, output_size)

)

# 定义损失函数

cost = torch.nn.MSELoss(reduction = 'mean')

# 可以通过这个进行链式的梯度下降

optimizer = torch.optim.Adam(my_nn.parameters(), lr = 0.001)

# 训练网络

losses = []

for i in range(1000):

batch_loss = []

# MINI-BATCH 方法来进行训练

# 每次仅用一部分数据投入训练

for start in range(0, len(input_features), batch_size):

end = start + batch_size if start + batch_size < len(input_features) else len(input_features)

x_batch = torch.tensor(input_features[start:end], dtype = torch.float, requires_grad = True)

y_batch = torch.tensor(labels[start:end], dtype = torch.float, requires_grad = True)

# 前向传播

y_pred = my_nn(x_batch)

# 计算损失

loss = cost(y_pred, y_batch)

batch_loss.append(loss.item())

# 反向传播

loss.backward()

# 更新参数

optimizer.step()

# 清空梯度

optimizer.zero_grad()

batch_loss.append(loss.data.numpy())

# 打印损失

if i % 100 == 0:

losses.append(np.mean(batch_loss))

print('loss:', np.mean(batch_loss))

使用模型预测

x = torch.tensor(input_features, dtype = torch.float)

predict = my_nn(x).data.numpy()

# 转换日期格式

dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]

dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in dates]

# 创建一个表格来存日期和其对应的标签数值

true_data = pd.DataFrame(data = 'date': dates, 'actual': labels)

pre_data = pd.DataFrame(data = 'date': dates, 'actual': predict.reshape(-1))

# 真实值

plt.plot(true_data['date'], true_data['actual'], 'b-', label = 'True')

# 预测值

plt.plot(pre_data['date'], pre_data['actual'], 'r-', label = 'Prediction')

plt.xticks(rotation = "60")

plt.legend()

plt.xlabel('Date')

plt.ylabel('Maximum Temperature (F)')

plt.title('Actual and Predicted Values')

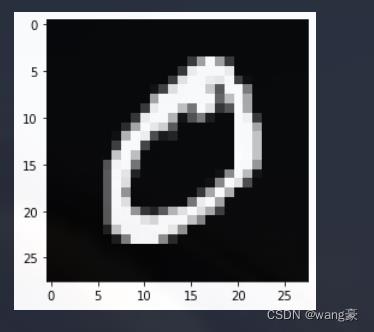

手写体识别(线性模型)

加载数据并查看

from pathlib import Path

import requests

import matplotlib.pyplot as plt

DATA_PATH = Path("data")

PATH = DATA_PATH / "mnist"

PATH.mkdir(parents=True, exist_ok=True)

URL = "http://deeplearning.net/data/mnist/"

FILENAME = "mnist.pkl.gz"

if not (PATH / FILENAME).exists():

content = requests.get(URL + FILENAME).content

(PATH / FILENAME).open("wb").write(content)

import pickle

import gzip

with gzip.open((PATH / FILENAME).as_posix(), "rb") as f:

((x_train, y_train), (x_valid, y_valid), _) = pickle.load(f, encoding="latin-1")

plt.imshow(x_train[1].reshape(28, 28), cmap="gray")

数据格式化处理

# 将数据格式进行转化

x_train, y_train, x_valid, y_valid = map(

torch.tensor, (x_train, y_train, x_valid, y_valid)

)

n,c = x_train.shape

x_train, x_train.shape, y_train.min(), y_train.max()

bs = 64

xb = x_train[0:bs]

yb = y_train[0:bs]

weights = torch.randn([784, 10], dtype=torch.float ,requires_grad=True)

bs = 64

bias = torch.zeros(10, requires_grad=True)

from torch.utils.data import DataLoader, TensorDataset

train_ds = TensorDataset(x_train, y_train)

train_dl = DataLoader(train_ds, batch_size=bs, shuffle=True)

valid_ds = TensorDataset(x_valid, y_valid)

valid_dl = DataLoader(valid_ds, batch_size=bs *2)

网络构建

创建一个model 来简化代码

- 必须继承nn.Module 且在其构造函数中需调用nn.Module 中的构造函数

- 无需写反向传播函数, nn.Module 能够利用autograd自动实现反向传播

- Module 中可学习参数可以通过named_parameters()或者parameters()返回迭代器

from torch import nn

import torch.nn.functional as F

class Mnist_NN(nn.Module):

"""

## 网络结构搭建

"""

def __init__(self):

super().__init__()

self.hidden1 = nn.Linear(784, 128)

self.hidden2 = nn.Linear(128, 256)

self.out = nn.Linear(256, 10)

def forward(self, xb):

"""

## 尽心前向传播

"""

xb = F.relu(self.hidden1(xb))

xb = F.relu(self.hidden2(xb))

xb = self.out(xb)

return xb

def loss_batch(mode, loss_func, xb, yb, opt = None):

"""

## 计算损失值的时候,同时进行反向传播

"""

loss = loss_func(mode(xb), yb)

if opt is not None:

loss.backward()

opt.step()

opt.zero_grad()

return loss.item(), len(xb)

def getModel():

"""

## 获取模, 并获得优化函数

"""

model = Mnist_NN()

return model<以上是关于Pytorch 入门的主要内容,如果未能解决你的问题,请参考以下文章