Deep Learning Series 35: Transformers Usage Examples

Posted IE06

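1. Quick start

First, install the library: pip install transformers

A list of downloadable models for different languages is available at: https://huggingface.co/languages

The simplest way to get started is to use a ready-made pipeline.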

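2. Pipeline examples

2.1 Overview

Pretrained pipelines are available for the following tasks:

"audio-classification": audio classification

"automatic-speech-recognition": speech recognition

"conversational": dialogue

"feature-extraction": feature extraction

"fill-mask": masked-token filling

"image-classification": image classification

"question-answering": question answering

"table-question-answering": table question answering

"text2text-generation": text-to-text generation

"text-classification" (alias "sentiment-analysis"): text classification

"text-generation": text generation

"token-classification" (alias "ner"): token classification

"translation": translation

"translation_xx_to_yy": translation from language xx to language yy

"summarization": summarization

"zero-shot-classification": zero-shot classification

A pipeline loads the following components: a preprocessor (tokenizer or feature extractor), the pretrained model, and a post-processing step.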
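As a rough illustration of how a pipeline chains preprocessing, a model call, and postprocessing, the sketch below builds a toy equivalent in plain Python. Everything in it (ToyPipeline, the word list, the "hits" field) is hypothetical and only mimics the shape of the flow; it is not how the transformers library is implemented.

```python
from typing import Callable, Dict, List


class ToyPipeline:
    """Hypothetical stand-in for the preprocess -> model -> postprocess flow."""

    def __init__(self, preprocess: Callable, model: Callable, postprocess: Callable):
        self.preprocess = preprocess
        self.model = model
        self.postprocess = postprocess

    def __call__(self, text: str) -> Dict[str, object]:
        features = self.preprocess(text)   # raw input -> model features
        score = self.model(features)       # features -> raw score
        return self.postprocess(score)     # raw score -> readable result


POSITIVE_WORDS = {"happy", "good", "great"}  # made-up word list for the toy model


def toy_preprocess(text: str) -> List[str]:
    # Lowercase, split on whitespace, and strip surrounding punctuation.
    return [w.strip(".,!?").lower() for w in text.split()]


def toy_model(tokens: List[str]) -> int:
    # The "score" is simply the number of positive words found.
    return sum(1 for t in tokens if t in POSITIVE_WORDS)


def toy_postprocess(score: int) -> Dict[str, object]:
    return {"label": "POSITIVE" if score > 0 else "NEGATIVE", "hits": score}


toy_pipeline = ToyPipeline(toy_preprocess, toy_model, toy_postprocess)
result = toy_pipeline("We are very happy to introduce pipeline to the transformers repository.")
print(result)
```

The point of the design is that the caller only ever sees the single call at the end; swapping the model or the preprocessor does not change how the object is used.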

2.2 Sentiment analysis

from transformers import pipeline

# With no model specified, the pipeline downloads a default English sentiment model
classifier = pipeline('sentiment-analysis')
classifier('We are very happy to introduce pipeline to the transformers repository.')

2.3 Question answering

from transformers import pipeline

question_answerer = pipeline('question-answering')
# The answer is extracted from the given context
question_answerer(question='What is the name of the repository ?', context='Pipeline has been included in the huggingface/transformers repository')
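The question-answering pipeline returns a dictionary whose 'start' and 'end' keys are character offsets into the context, alongside 'answer' and 'score'. The sketch below shows how those offsets relate to the answer text. The result dictionary is built by hand here, assuming the model picks "huggingface/transformers" as the answer; 'score' is left out because its value depends on the model. The highlight helper is hypothetical.

```python
context = "Pipeline has been included in the huggingface/transformers repository"

# Hand-built result in the shape the pipeline returns (assumed answer, no score).
answer = "huggingface/transformers"
start = context.find(answer)
result = {"answer": answer, "start": start, "end": start + len(answer)}


def highlight(context: str, result: dict) -> str:
    """Wrap the answer span in brackets using the character offsets."""
    s, e = result["start"], result["end"]
    return context[:s] + "[" + context[s:e] + "]" + context[e:]


print(highlight(context, result))
```

Because the offsets index the original string, they can be used to display the answer in place rather than as a detached snippet.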

2.4 Speech recognition

from transformers import pipeline
from datasets import load_dataset, Audio

# Create the recognizer first so that its expected sampling rate is available below
speech_recognizer = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-base-960h")

dataset = load_dataset("PolyAI/minds14", name="en-US", split="train")

# Resample the audio to the sampling rate the model expects
dataset = dataset.cast_column("audio", Audio(sampling_rate=speech_recognizer.feature_extractor.sampling_rate))

# Transcribe the first four clips
result = speech_recognizer([a['array'] for a in dataset[:4]["audio"]])
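The resampling step matters because the model only makes sense of audio at the rate it was trained on. To show what changing the sampling rate means, here is a deliberately naive linear-interpolation resampler in plain Python. It is an illustration only: resample_linear is a hypothetical helper, the rates used are arbitrary, and the datasets library relies on a proper audio backend rather than anything like this.

```python
from typing import List


def resample_linear(samples: List[float], src_rate: int, dst_rate: int) -> List[float]:
    """Naively resample a mono signal by linear interpolation."""
    if not samples:
        return []
    n_out = max(1, round(len(samples) * dst_rate / src_rate))
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate      # fractional position in the source signal
        left = min(int(pos), last)
        right = min(left + 1, last)        # clamp at the final sample
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


# Doubling the rate doubles the number of samples for the same duration.
upsampled = resample_linear([0.0, 1.0, 2.0, 3.0], src_rate=4, dst_rate=8)
print(len(upsampled), upsampled)
```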

2.5 Text generation

from transformers import pipeline

generator = pipeline(task="text-generation")
# The model continues the prompt
generator("Eight people were kill at party in California.")

2.6 Image classification

from transformers import pipeline

vision_classifier = pipeline(task="image-classification")
# The image can be passed as a URL
vision_classifier(images="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg")

3. Custom models

3.1 General workflow

First, load the model.

For text you also need a tokenizer, which converts the text into a dictionary, for example:

# `tokenizer` is assumed to be already loaded, e.g. via AutoTokenizer.from_pretrained(...)
encoding = tokenizer("We are very happy to show you the 🤗 Transformers library.")
print(encoding)

{'input_ids': [101, 11312, 10320, 12495, 19308, 10114, 11391, 10855, 10103, 100, 58263, 13299, 119, 102],
 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}
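To make the three fields of that dictionary less opaque, the following toy encoder builds them by hand. It is only a sketch: toy_encode and its three-word vocabulary are hypothetical, it splits on whitespace instead of using subwords, and the special ids 101, 102 and 100 are simply borrowed from the output above, where they appear at the start, at the end, and in place of the emoji.

```python
from typing import Dict, List


def toy_encode(text: str, vocab: Dict[str, int],
               cls_id: int = 101, sep_id: int = 102, unk_id: int = 100) -> Dict[str, List[int]]:
    """Map whitespace-separated words to ids and build the three fields."""
    word_ids = [vocab.get(w, unk_id) for w in text.lower().split()]
    ids = [cls_id] + word_ids + [sep_id]
    return {
        "input_ids": ids,
        "token_type_ids": [0] * len(ids),  # single segment, so all zeros
        "attention_mask": [1] * len(ids),  # no padding, so all ones
    }


vocab = {"we": 1, "are": 2, "happy": 3}    # hypothetical three-word vocabulary
toy_encoding = toy_encode("We are very happy", vocab)
print(toy_encoding)
```

The word "very" is not in the toy vocabulary, so it falls back to the unknown id, much as the emoji does in the real output.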

Audio and images work the same way, with a feature extractor in place of the tokenizer:

from transformers import AutoFeatureExtractor

feature_extractor = AutoFeatureExtractor.from_pretrained(
    "ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition"
)

Alternatively, use AutoProcessor, which covers both cases:

from transformers import AutoProcessor

processor = AutoProcessor.from_pretrained("microsoft/layoutlmv2-base-uncased")

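The encoding can be unpacked with ** and passed directly to the model:

# Assumes pt_model is an already-loaded sequence-classification model and that
# encoding holds PyTorch tensors (e.g. tokenizer(..., return_tensors="pt"))
from torch import nn

pt_outputs = pt_model(**encoding)
# Convert the raw logits into class probabilities
pt_predictions = nn.functional.softmax(pt_outputs.logits, dim=-1)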
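The softmax call turns the raw logits into probabilities that sum to 1. A minimal pure-Python version of the same computation, with made-up logits, looks roughly like this:

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Exponentiate and normalize; subtracting the max keeps exp() from overflowing."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, -1.0, 0.5]                  # illustrative values, not real model output
probs = softmax(logits)
predicted_class = probs.index(max(probs))  # index of the most likely class
print(probs, predicted_class)
```

Softmax preserves the ordering of the logits, so the predicted class is the same whether you take the argmax before or after it.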

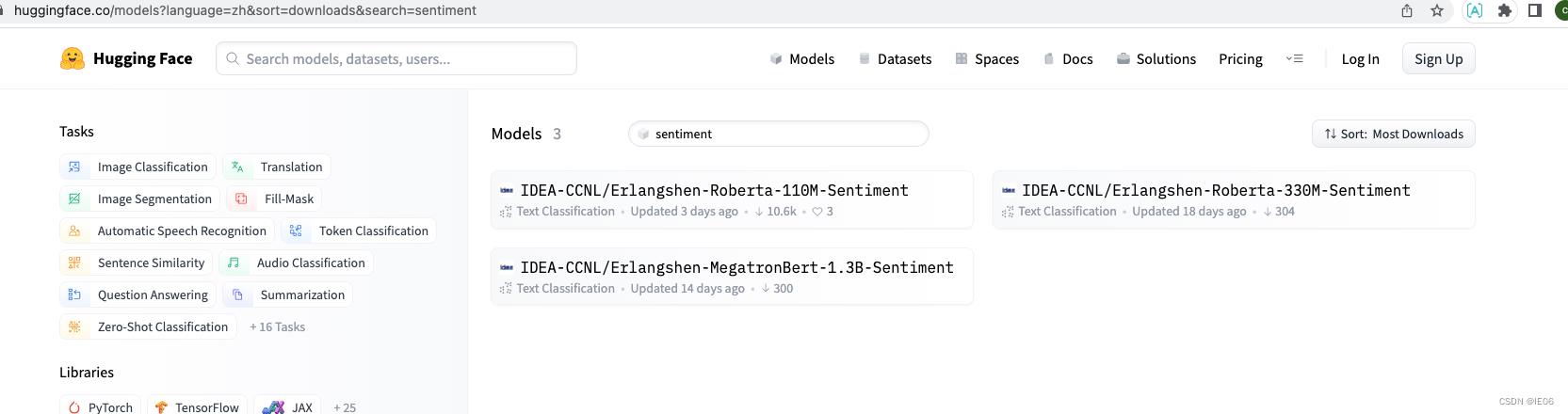

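Take sentiment analysis as an example: the default pipeline handles English, so what if we need to classify Chinese text?

First, look for a suitable model in the model hub (the Tasks and Languages filters on the left narrow the search):

from transformers import BertForSequenceClassification
from transformers import BertTokenizer
import torch

tokenizer = BertTokenizer.from_pretrained('IDEA-CCNL/Erlangshen-Roberta-110M-Sentiment')
model = BertForSequenceClassification.from_pretrained('IDEA-CCNL/Erlangshen-Roberta-110M-Sentiment')

text = '今天心情不好'  # "I am in a bad mood today"

# Encode the text into token ids and add a batch dimension
output = model(torch.tensor([tokenizer.encode(text)]))
# Print the probability of each class
print(torch.nn.functional.softmax(output.logits, dim=-1))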

The model can be saved as follows:

pt_save_directory = "./pt_save_pretrained"
# Save the tokenizer and the model weights into the same directory
tokenizer.save_pretrained(pt_save_directory)
pt_model.save_pretrained(pt_save_directory)

3.2 Fine-tuning a model

As an example, use the following dataset:

from datasets import load_dataset
from transformers import AutoTokenizer

dataset = load_dataset("yelp_review_full")
tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

def tokenize_function(examples):
    # Pad or truncate every review to the model's maximum length
    return tokenizer(examples["text"], padding="max_length", truncation=True)

tokenized_datasets = dataset.map(tokenize_function, batched=True)

# Keep 1,000 shuffled examples from each split to speed up the run
small_train_dataset = tokenized_datasets["train"].shuffle(seed=42).select(range(1000))
small_eval_dataset = tokenized_datasets["test"].shuffle(seed=42).select(range(1000))
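The padding="max_length" and truncation=True options make every example the same length, which is what allows them to be batched. The sketch below mimics that effect on a plain list of ids. pad_or_truncate is a hypothetical helper, the pad id of 0 is an assumption, and a real tokenizer is more careful: when truncating it keeps the closing special token, which this toy version does not.

```python
from typing import Dict, List


def pad_or_truncate(input_ids: List[int], max_length: int, pad_id: int = 0) -> Dict[str, List[int]]:
    """Cut the sequence to max_length, then pad it and mark the padding in the mask."""
    ids = input_ids[:max_length]
    n_pad = max_length - len(ids)
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "attention_mask": [1] * len(ids) + [0] * n_pad,  # 0 marks padding positions
    }


short = pad_or_truncate([101, 7, 8, 102], max_length=6)
long = pad_or_truncate([101, 7, 8, 9, 10, 11, 102], max_length=6)
print(short)
print(long)
```

The attention mask is what lets the model ignore the padded positions, so padding changes the shape of the input without changing its meaning.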

Next, load the TrainingArguments class, which holds the training hyperparameters, and define a metric for evaluation:

from transformers import TrainingArguments, Trainer
from transformers import AutoModelForSequenceClassification
import numpy as np
# Note: recent releases of datasets have removed load_metric;
# evaluate.load("accuracy") from the evaluate package is its replacement.
from datasets import load_metric

# yelp_review_full has five star ratings, hence num_labels=5
model = AutoModelForSequenceClassification.from_pretrained("bert-base-cased", num_labels=5)
training_args = TrainingArguments(output_dir="test_trainer")
metric = load_metric("accuracy")

def compute_metrics(eval_pred):
    # eval_pred unpacks into the model logits and the reference labels
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)
    return metric.compute(predictions=predictions, references=labels)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=small_train_dataset,
    eval_dataset=small_eval_dataset,
    compute_metrics=compute_metrics,
)

trainer.train()
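The compute_metrics function above boils down to two steps: take the argmax of each row of logits, then compare against the labels. A dependency-free sketch of the same logic, using made-up logits and labels, could look like this (argmax and accuracy are hypothetical helper names):

```python
from typing import Dict, List


def argmax(row: List[float]) -> int:
    """Index of the largest value in the row."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy(logits: List[List[float]], labels: List[int]) -> Dict[str, float]:
    """Fraction of rows whose highest-scoring class matches the label."""
    predictions = [argmax(row) for row in logits]
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return {"accuracy": correct / len(labels)}


# Three illustrative examples with three classes each.
toy_logits = [[0.1, 2.0, -1.0], [3.0, 0.2, 0.1], [0.0, 0.1, 0.5]]
toy_labels = [1, 0, 1]
print(accuracy(toy_logits, toy_labels))
```

Returning a dictionary rather than a bare number mirrors what the Trainer expects from compute_metrics, since each key becomes a named entry in the evaluation log.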
