YARN Fair Scheduler: Principles and Getting-Started Configuration

Posted by 小基基o_O


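Overview

- Fair Scheduler (Chinese name: 公平调度器)

- Features:

1. Supports multiple queues, including leaf queues

2. All jobs in the same leaf queue can run concurrently

3. Resources are allocated according to time scale, priority, resource deficit, ...

- Over time, every application receives a fair share of resources

- Resources are divided using the max-min fair allocation algorithm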
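To make the max-min fair allocation idea concrete, here is a small worked example in its textbook weighted form. The capacity, demands, and weights are invented for illustration, and the real Fair Scheduler additionally honors each queue's minimum and maximum resources, so treat this as a sketch of the principle rather than of the scheduler's exact computation. With total capacity $C$, and queue $i$ having demand $d_i$ and weight $w_i$ (assuming total demand exceeds $C$):

```latex
\begin{aligned}
a_i &= \min(d_i,\ \lambda\, w_i), \qquad \text{where } \lambda \text{ is chosen so that } \sum_i a_i = C \\[4pt]
\text{Example: } & C = 10,\quad d = (2,\ 4,\ 10),\quad w = (1,\ 1,\ 1) \\
\lambda = 4 \;\Rightarrow\; a &= (\min(2,4),\ \min(4,4),\ \min(10,4)) = (2,\ 4,\ 4), \qquad 2 + 4 + 4 = 10
\end{aligned}
```

The queue with the small demand gets everything it asks for, and the capacity it leaves unused is shared among the queues that still want more.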

Initial configuration: yarn-site.xml

This article uses Hadoop 3.1.3.

vim $HADOOP_HOME/etc/hadoop/yarn-site.xml

<!-- Use the Fair Scheduler -->
<property>
    <name>yarn.resourcemanager.scheduler.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
</property>
<!--
    Whether to use the user name as the queue name when no queue is specified.
    When true, a job submitted by user `yellow` automatically creates and uses the queue `root.yellow`.
    When false, all users default to the queue `root.default`.
    This setting is ignored when a queue placement policy is given in the allocation file
    (the file named by `yarn.scheduler.fair.allocation.file`).
-->
<property>
    <name>yarn.scheduler.fair.user-as-default-queue</name>
    <value>false</value>
</property>
<!-- Whether to enable preemption -->
<property>
    <name>yarn.scheduler.fair.preemption</name>
    <value>true</value>
</property>
<!-- Utilization threshold above which preemption kicks in: ratio of used resources to total capacity -->
<property>
    <name>yarn.scheduler.fair.preemption.cluster-utilization-threshold</name>
    <value>0.7f</value>
</property>
<!-- Maximum application priority -->
<property>
    <name>yarn.cluster.max-application-priority</name>
    <value>100</value>
</property>

Distribute the configuration file

rsync.py $HADOOP_HOME/etc/hadoop/yarn-site.xml

Restart YARN

stop-yarn.sh

start-yarn.sh
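A note on yarn.scheduler.fair.preemption.cluster-utilization-threshold: according to the Hadoop documentation, the utilization compared against this threshold is the maximum ratio of usage to capacity among all resources. The cluster size and usage figures below are made up purely to illustrate the arithmetic with the 0.7 threshold configured in yarn-site.xml:

```latex
\begin{aligned}
u &= \max\!\left(\frac{\text{memory used}}{\text{memory capacity}},\ \frac{\text{vcores used}}{\text{vcores capacity}}\right) \\[4pt]
\text{Example: } u &= \max\!\left(\frac{60\ \text{GB}}{100\ \text{GB}},\ \frac{32}{40}\right) = \max(0.6,\ 0.8) = 0.8 > 0.7
\end{aligned}
```

In this hypothetical case memory alone would not cross the threshold, but vcore usage does, so preemption becomes possible.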

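Default queue name

yarn.scheduler.fair.user-as-default-queue

- Meaning: whether to use the user name as the queue name when no queue is specified

- Set to true: when user yellow submits a job without a queue name, a queue named root.yellow is created automatically and used; when user hive submits a job without a queue name, a queue named root.hive is created automatically and used

- Set to false: when a user submits a job without a queue name, the root.default queue is used

- This setting is ignored when a queue placement policy is given in the allocation file (the file named by yarn.scheduler.fair.allocation.file)

With yarn.scheduler.fair.user-as-default-queue set to true, submit an application as user yellow without specifying a queue name: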

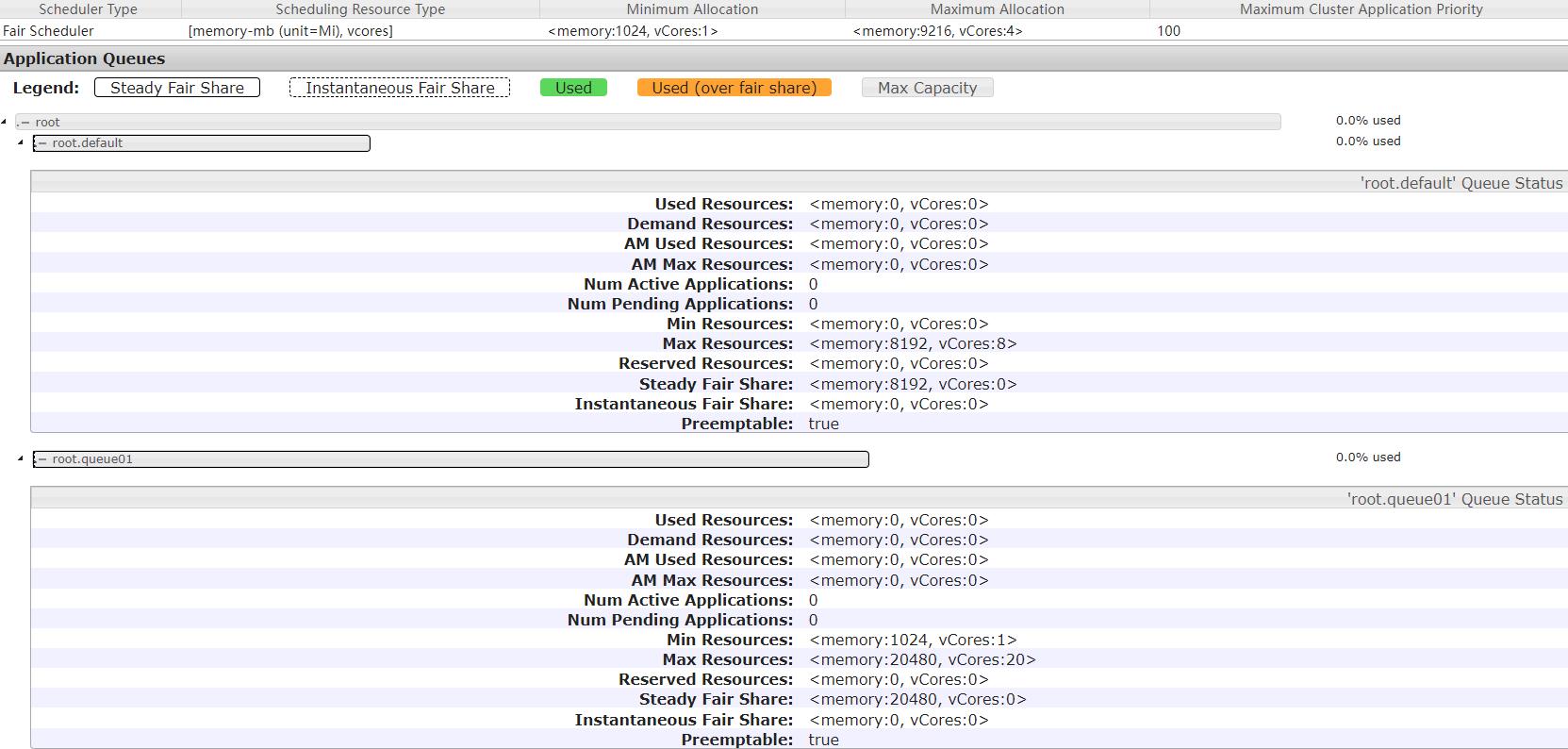

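Further configuration: fair-scheduler.xml

yarn-site.xml has a property, yarn.scheduler.fair.allocation.file, that specifies the path of the allocation file.

The allocation file describes the queues and their properties, along with certain policy defaults. It must be in XML format.

If a relative path is given, the file is searched for on the classpath (which typically includes the Hadoop configuration directory).

Default: fair-scheduler.xml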
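If the allocation file lives somewhere other than the default location, the property can be set explicitly in yarn-site.xml. The snippet below is only a sketch: the absolute path is a hypothetical example and should be replaced with the real location on the ResourceManager host.

```xml
<!-- Explicit location of the Fair Scheduler allocation file.
     The path below is a made-up example, not a required location. -->
<property>
    <name>yarn.scheduler.fair.allocation.file</name>
    <value>/opt/hadoop/etc/hadoop/fair-scheduler.xml</value>
</property>
```

When the file sits in the Hadoop configuration directory under its default name, as in this article, the property can simply be left unset.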

vim $HADOOP_HOME/etc/hadoop/fair-scheduler.xml

<?xml version="1.0"?>
<allocations>
    <!-- Default maximum fraction of a queue's fair share that ApplicationMasters may use, between 0 and 1 -->
    <queueMaxAMShareDefault>0.5</queueMaxAMShareDefault>
    <!-- Default maximum resources for a single queue -->
    <queueMaxResourcesDefault>8192mb,8vcores</queueMaxResourcesDefault>
    <!-- Add a queue named queue01 -->
    <queue name="queue01">
        <!-- Minimum resources of the queue -->
        <minResources>1024mb,1vcores</minResources>
        <!-- Maximum resources of the queue -->
        <maxResources>20480mb,20vcores</maxResources>
        <!-- Maximum fraction of the queue's fair share that ApplicationMasters may use -->
        <maxAMShare>0.5</maxAMShare>
        <!-- Queue weight; the default is 1.0 -->
        <weight>5.0</weight>
    </queue>
</allocations>

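There is no need to distribute this file: it is read by the scheduler inside the ResourceManager, which reloads it roughly 10 to 15 seconds after it is modified.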

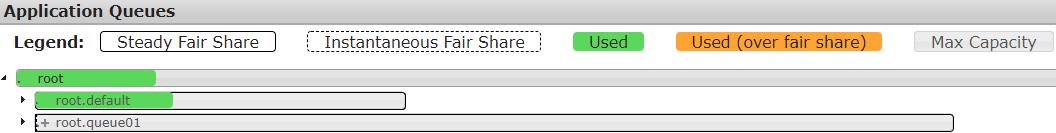

Test (no queue specified)

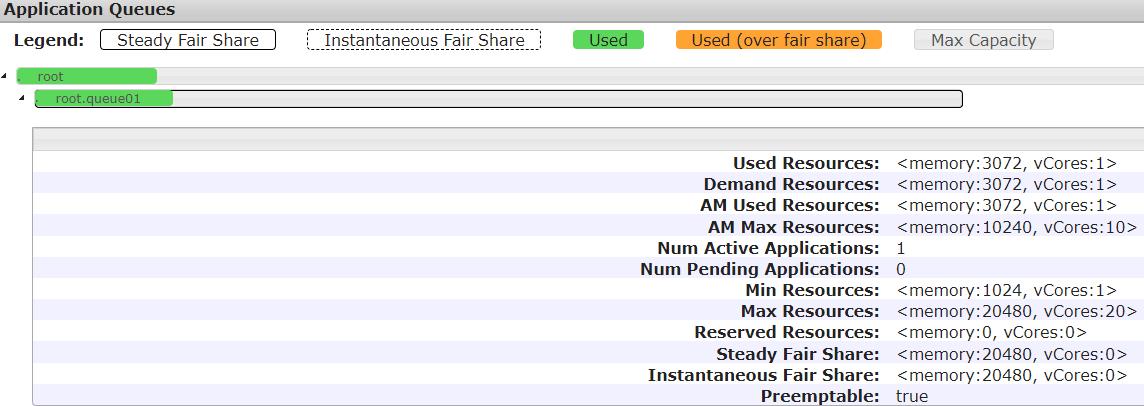

Test (queue specified)

SET mapred.job.queue.name=queue01;
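The statement above selects the queue for a single session. mapred.job.queue.name is the older, deprecated name of mapreduce.job.queuename. As a sketch, and assuming MapReduce jobs on this cluster should go to queue01 by default, the same choice could be made permanent in mapred-site.xml:

```xml
<!-- mapred-site.xml: default queue for MapReduce jobs.
     queue01 is the queue defined in fair-scheduler.xml in this article;
     making it the cluster-wide default is an assumption for illustration. -->
<property>
    <name>mapreduce.job.queuename</name>
    <value>queue01</value>
</property>
```

A job that names a queue explicitly at submission time still overrides this default.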

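Appendix

| Term | Pronunciation | Meaning |
|---|---|---|
| utilization | ˌjuːtələˈzeɪʃn | n. use, making use of (= utilisation) |
| preemption | ˌpriːˈempʃn | n. right of first purchase; compulsory acquisition; taking possession first; supersession |
| kick | kɪk | v. to kick; n. a kick; recoil |
| kick in | | to kick open; to pay one's share; to take effect; to swim by kicking; to die |
| property | ˈprɑːpərti | n. possessions; ownership; characteristic |
| placement | ˈpleɪsmənt | n. arrangement; settling; work placement; placement in care; place kick |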

| Property | Description | Default |
|---|---|---|
| yarn.scheduler.fair.allocation.file | Path to the allocation (queue configuration) file, in XML format; a relative path is searched for on the classpath (which typically includes the Hadoop conf directory) | fair-scheduler.xml |
| yarn.scheduler.fair.user-as-default-queue | Whether to use the user name as the queue name when no queue is specified. When true, a job submitted by user yellow automatically creates and uses the queue root.yellow by default; when false, all users default to the root.default queue | true |
| yarn.scheduler.fair.preemption | Whether to enable preemption | false |
| yarn.scheduler.fair.preemption.cluster-utilization-threshold | Utilization threshold above which preemption kicks in: ratio of used resources to total capacity | 0.8f |
| yarn.scheduler.fair.sizebasedweight | Whether to assign shares to individual apps based on their size, rather than providing an equal share to all apps regardless of size. When set to true, apps are weighted by the natural logarithm of one plus the app's total requested memory, divided by the natural logarithm of 2. | false |
| yarn.scheduler.fair.assignmultiple | Whether to allow multiple container assignments in one heartbeat. | false |
| yarn.scheduler.fair.dynamic.max.assign | If assignmultiple is true, whether to dynamically determine the amount of resources that can be assigned in one heartbeat. When turned on, about half of the un-allocated resources on the node are allocated to containers in a single heartbeat. | true |
| yarn.scheduler.fair.max.assign | If assignmultiple is true and dynamic.max.assign is false, the maximum number of containers that can be assigned in one heartbeat. | -1 (no limit) |
| yarn.scheduler.fair.locality.threshold.node | For applications that request containers on particular nodes, the number of scheduling opportunities since the last container assignment to wait before accepting a placement on another node. Expressed as a float between 0 and 1, which, as a fraction of the cluster size, is the number of scheduling opportunities to pass up. | -1.0 (do not pass up any scheduling opportunities) |
| yarn.scheduler.fair.locality.threshold.rack | For applications that request containers on particular racks, the number of scheduling opportunities since the last container assignment to wait before accepting a placement on another rack. Expressed as a float between 0 and 1, which, as a fraction of the cluster size, is the number of scheduling opportunities to pass up. | -1.0 (do not pass up any scheduling opportunities) |
| yarn.scheduler.fair.allow-undeclared-pools | If this is true, new queues can be created at application submission time, whether because they are specified as the application's queue by the submitter or because they are placed there by the user-as-default-queue property. If this is false, any time an app would be placed in a queue that is not specified in the allocations file, it is placed in the "default" queue instead. If a queue placement policy is given in the allocations file, this property is ignored. | true |
| yarn.scheduler.fair.update-interval-ms | The interval at which to lock the scheduler and recalculate fair shares, recalculate demand, and check whether anything is due for preemption. | 500 ms |
| yarn.resource-types.memory-mb.increment-allocation | The Fair Scheduler grants memory in increments of this value. If you submit a task with a resource request that is not a multiple of memory-mb.increment-allocation, the request will be rounded up to the nearest increment. | 1024 MB |
| yarn.resource-types.vcores.increment-allocation | The Fair Scheduler grants vcores in increments of this value. If you submit a task with a resource request that is not a multiple of vcores.increment-allocation, the request will be rounded up to the nearest increment. | 1 |
| yarn.resource-types.&lt;resource&gt;.increment-allocation | The Fair Scheduler grants &lt;resource&gt; in increments of this value. If you submit a task with a resource request that is not a multiple of &lt;resource&gt;.increment-allocation, the request will be rounded up to the nearest increment. If this property is not specified for a resource, the increment round-up will not be applied. If no unit is specified, the default unit for the resource is assumed. | |
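The round-up behaviour of the increment-allocation properties can be written out as a short calculation. The request sizes here are hypothetical; the increments are the defaults listed in the table (1024 MB and 1 vcore):

```latex
\begin{aligned}
\text{granted} &= \left\lceil \frac{\text{requested}}{\text{increment}} \right\rceil \times \text{increment} \\[4pt]
\text{memory: } & \left\lceil \frac{1500\ \text{MB}}{1024\ \text{MB}} \right\rceil \times 1024\ \text{MB} = 2 \times 1024\ \text{MB} = 2048\ \text{MB} \\
\text{vcores: } & \left\lceil \frac{3}{1} \right\rceil \times 1 = 3
\end{aligned}
```

A request that is already a multiple of the increment is granted unchanged.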

Allocation file format

- Queue elements: which represent queues. Queue elements can take an optional attribute 'type', which when set to 'parent' makes it a parent queue. This is useful when we want to create a parent queue without configuring any leaf queues. Each queue element may contain the following properties:

  - minResources: minimum resources the queue is entitled to, in the form "X mb, Y vcores". For the single-resource fairness policy, the vcores value is ignored. If a queue's minimum share is not satisfied, it will be offered available resources before any other queue under the same parent. Under the single-resource fairness policy, a queue is considered unsatisfied if its memory usage is below its minimum memory share. Under dominant resource fairness, a queue is considered unsatisfied if its usage for its dominant resource with respect to the cluster capacity is below its minimum share for that resource. If multiple queues are unsatisfied in this situation, resources go to the queue with the smallest ratio between relevant resource usage and minimum. Note that a queue that is below its minimum may not immediately get up to its minimum when it submits an application, because already-running jobs may be using those resources.

  - maxResources: maximum resources a queue is allocated, expressed either in absolute values (X mb, Y vcores) or as a percentage of the cluster resources (X% memory, Y% cpu). A queue will not be assigned a container that would put its aggregate usage over this limit.

  - maxChildResources: maximum resources an ad hoc child queue is allocated, expressed either in absolute values (X mb, Y vcores) or as a percentage of the cluster resources (X% memory, Y% cpu). An ad hoc child queue will not be assigned a container that would put its aggregate usage over this limit.

  - maxRunningApps: limit the number of apps from the queue to run at once.

  - maxAMShare: limit the fraction of the queue's fair share that can be used to run application masters. This property can only be used for leaf queues. For example, if set to 1.0f, then AMs in the leaf queue can take up to 100% of both the memory and CPU fair share. The value of -1.0f will disable this feature and the amShare will not be checked. The default value is 0.5f.

  - weight: to share the cluster non-proportionally with other queues. Weights default to 1, and a queue with weight 2 should receive approximately twice as many resources as a queue with the default weight.

  - schedulingPolicy: to set the scheduling policy of any queue. The allowed values are "fifo"/"fair"/"drf" or any class that extends org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.SchedulingPolicy. Defaults to "fair". If "fifo", apps with earlier submit times are given preference for containers, but apps submitted later may run concurrently if there is leftover space on the cluster after satisfying the earlier app's requests.

  - aclSubmitApps: a list of users and/or groups that can submit apps to the queue. Refer to the ACLs section below for more info on the format of this list and how queue ACLs work.

  - aclAdministerApps: a list of users and/or groups that can administer a queue. Currently the only administrative action is killing an application. Refer to the ACLs section below for more info on the format of this list and how queue ACLs work.

  - minSharePreemptionTimeout: number of seconds the queue is under its minimum share before it will try to preempt containers to take resources from other queues. If not set, the queue will inherit the value from its parent queue. Default value is Long.MAX_VALUE, which means that it will not preempt containers until you set a meaningful value.

  - fairSharePreemptionTimeout: number of seconds the queue is under its fair share threshold before it will try to preempt containers to take resources from other queues. If not set, the queue will inherit the value from its parent queue. Default value is Long.MAX_VALUE, which means that it will not preempt containers until you set a meaningful value.

  - fairSharePreemptionThreshold: the fair share preemption threshold for the queue. If the queue waits fairSharePreemptionTimeout without receiving fairSharePreemptionThreshold*fairShare resources, it is allowed to preempt containers to take resources from other queues. If not set, the queue will inherit the value from its parent queue. Default value is 0.5f.

  - allowPreemptionFrom: determines whether the scheduler is allowed to preempt resources from the queue. The default is true. If a queue has this property set to false, this property will apply recursively to all child queues.

  - reservation: indicates to the ReservationSystem that the queue's resources are available for users to reserve. This only applies for leaf queues. A leaf queue is not reservable if this property isn't configured.
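Several of the queue properties above can be combined in a nested layout. The following allocation file is a sketch only: the queue names (dept01, etl, adhoc) and every value are hypothetical, chosen to show where each element goes rather than to recommend settings.

```xml
<?xml version="1.0"?>
<allocations>
    <!-- Hypothetical parent queue; it is a parent because it contains child queues -->
    <queue name="dept01">
        <weight>2.0</weight>
        <!-- Children inherit these unless they override them:
             preempt after 60 seconds below half of the fair share -->
        <fairSharePreemptionTimeout>60</fairSharePreemptionTimeout>
        <fairSharePreemptionThreshold>0.5</fairSharePreemptionThreshold>

        <!-- Leaf queue: maxAMShare is only valid on leaf queues -->
        <queue name="etl">
            <minResources>2048mb,2vcores</minResources>
            <maxRunningApps>10</maxRunningApps>
            <maxAMShare>0.3</maxAMShare>
        </queue>

        <!-- Leaf queue whose containers may not be preempted -->
        <queue name="adhoc">
            <maxResources>8192mb,8vcores</maxResources>
            <allowPreemptionFrom>false</allowPreemptionFrom>
        </queue>
    </queue>
</allocations>
```

With this layout the leaf queues are addressed as root.dept01.etl and root.dept01.adhoc.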

- User elements: which represent settings governing the behavior of individual users. They can contain a single property: maxRunningApps, a limit on the number of running apps for a particular user.

- A userMaxAppsDefault element: which sets the default running app limit for any users whose limit is not otherwise specified.

- A defaultFairSharePreemptionTimeout element: which sets the fair share preemption timeout for the root queue; overridden by fairSharePreemptionTimeout element in root queue. Default is set to Long.MAX_VALUE.

- A defaultMinSharePreemptionTimeout element: which sets the min share preemption timeout for the root queue; overridden by minSharePreemptionTimeout element in root queue. Default is set to Long.MAX_VALUE.

- A defaultFairSharePreemptionThreshold element: which sets the fair share preemption threshold for the root queue; overridden by fairSharePreemptionThreshold element in root queue. Default is set to 0.5f.

- A queueMaxAppsDefault element: which sets the default running app limit for queues; overridden by maxRunningApps element in each queue.

- A queueMaxResourcesDefault element: which sets the default max resource limit for queue; overridden by maxResources element in each queue.

- A queueMaxAMShareDefault element: which sets the default AM resource limit for queue; overridden by maxAMShare element in each queue.

- A defaultQueueSchedulingPolicy element: which sets the default scheduling policy for queues; overridden by the schedulingPolicy element in each queue if specified. Defaults to "fair".

- A reservation-agent element: which sets the class name of the implementation of the ReservationAgent, which attempts to place the user's reservation request in the Plan. The default value is org.apache.hadoop.yarn.server.resourcemanager.reservation.planning.AlignedPlannerWithGreedy.

- A reservation-policy element: which sets the class name of the implementation of the SharingPolicy, which validates if the new reservation doesn't violate any invariants. The default value is org.apache.hadoop.yarn.server.resourcemanager.reservation.CapacityOverTimePolicy.

- A reservation-planner element: which sets the class name of the implementation of the Planner, which is invoked if the Plan capacity falls below (due to scheduled maintenance or node failures) the user reserved resources. The default value is org.apache.hadoop.yarn.server.resourcemanager.reservation.planning.SimpleCapacityReplanner, which scans the Plan and greedily removes reservations in reversed order of acceptance (LIFO) till the reserved resources are within the Plan capacity.

- A queuePlacementPolicy element: which contains a list of rule elements that tell the scheduler how to place incoming apps into queues. Rules are applied in the order that they are listed. Rules may take arguments. All rules accept the "create" argument, which indicates whether the rule can create a new queue. "Create" defaults to true; if set to false and the rule would place the app in a queue that is not configured in the allocations file, we continue on to the next rule. The last rule must be one that can never issue a continue. Valid rules are:

  - specified: the app is placed into the queue it requested. If the app requested no queue, i.e. it specified "default", we continue. If the app requested a queue name starting or ending with a period, i.e. names like ".q1" or "q1.", it will be rejected.

  - user: the app is placed into a queue with the name of the user who submitted it. Periods in the username will be replaced with "_dot_", i.e. the queue name for user "first.last" is "first_dot_last".

  - primaryGroup: the app is placed into a queue with the name of the primary group of the user who submitted it. Periods in the group name will be replaced with "_dot_", i.e. the queue name for group "one.two" is "one_dot_two".

  - secondaryGroupExistingQueue: the app is placed into a queue with a name that matches a secondary group of the user who submitted it. The first secondary group that matches a configured queue will be selected. Periods in group names will be replaced with "_dot_", i.e. a user with "one.two" as one of their secondary groups would be placed into the "one_dot_two" queue, if such a queue exists.

  - nestedUserQueue: the app is placed into a queue with the name of the user under the queue suggested by the nested rule. This is similar to the 'user' rule, the difference being that with the 'nestedUserQueue' rule, user queues can be created under any parent queue, while the 'user' rule creates user queues only under the root queue. Note that the nestedUserQueue rule is applied only if the nested rule returns a parent queue. One can configure a parent queue either by setting the 'type' attribute of the queue to 'parent' or by configuring at least one leaf under that queue, which makes it a parent. See example allocation for a sample use case.

  - default: the app is placed into the queue specified in the 'queue' attribute of the default rule. If the 'queue' attribute is not specified, the app is placed into the 'root.default' queue.

  - reject: the app is rejected.
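The placement rules described above are written as rule elements inside queuePlacementPolicy. The file below is a minimal sketch, assuming the queue01 queue from earlier in this article; the particular choice and order of rules is only an illustration.

```xml
<?xml version="1.0"?>
<allocations>
    <queue name="queue01"/>

    <queuePlacementPolicy>
        <!-- Use the queue the app asked for, but only if it is configured in this file -->
        <rule name="specified" create="false"/>
        <!-- Otherwise use a queue named after the submitting user, without creating one -->
        <rule name="user" create="false"/>
        <!-- Last rule never continues: everything else lands in queue01 -->
        <rule name="default" queue="queue01"/>
    </queuePlacementPolicy>
</allocations>
```

Once a placement policy like this is present, yarn.scheduler.fair.user-as-default-queue and yarn.scheduler.fair.allow-undeclared-pools in yarn-site.xml are ignored, as noted in the property descriptions.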
