Scraping Ctrip and Qunar travel notes with Python

Posted by 卖山楂啦prss


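Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers scraping Ctrip and Qunar travel notes with Python; we hope you find it useful as a reference.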

应别人的需求

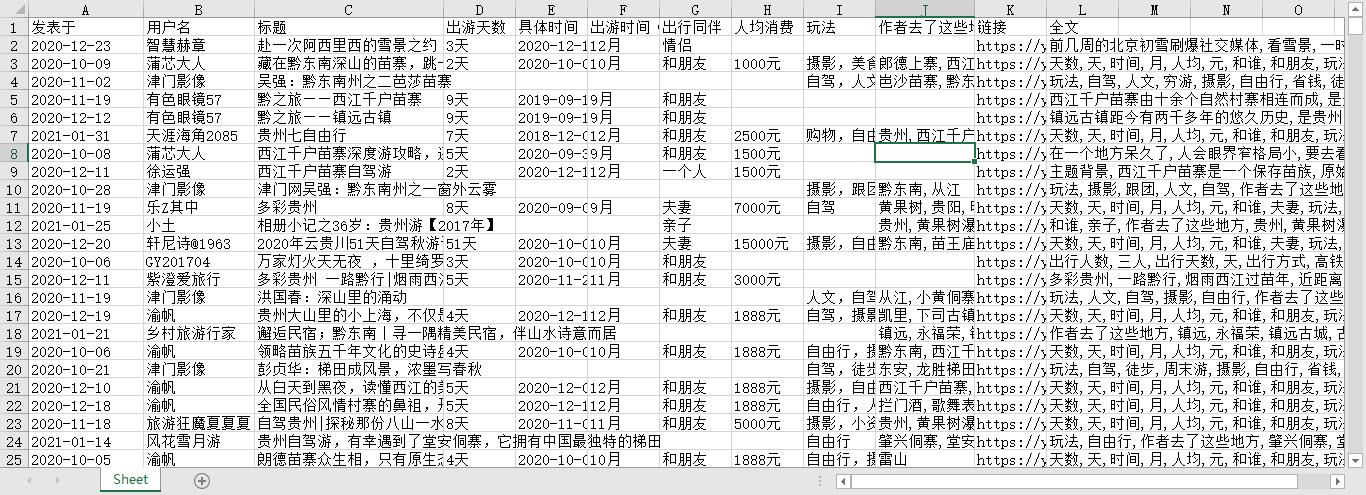

我把以前的代码拿过来,改了改,获取了一些数据

爬取的内容就特别简单的那种,如下

Ctrip

Install feapder (this command uses the Douban PyPI mirror):

pip install -i https://pypi.doubanio.com/simple/ --trusted-host pypi.doubanio.com feapder

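Then use feapder's create -j command to turn the request headers copied from the browser into JSON:

feapder create -j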

{
    "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
    "accept-encoding": "gzip, deflate, br",
    "accept-language": "zh-CN,zh;q=0.9",
    "cache-control": "max-age=0",
    "cookie": "",
    "sec-ch-ua": "\"Google Chrome\";v=\"95\", \"Chromium\";v=\"95\", \";Not A Brand\";v=\"99\"",
    "sec-ch-ua-mobile": "?0",
    "sec-ch-ua-platform": "\"Windows\"",
    "sec-fetch-dest": "document",
    "sec-fetch-mode": "navigate",
    "sec-fetch-site": "none",
    "sec-fetch-user": "?1",
    "upgrade-insecure-requests": "1",
    "user-agent": ""
}
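As used here, feapder create -j just turns copied header text into a dict. Without feapder, a few lines of plain Python can do the same job. The sketch below assumes the headers were copied in the raw one-"Name: value"-per-line form that browser developer tools show. parse_raw_headers is a hypothetical helper, not a feapder API:

```python
def parse_raw_headers(raw: str) -> dict:
    """Turn raw 'Name: value' header lines into a dict (hypothetical helper)."""
    headers = {}
    for line in raw.strip().splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ':authority'
        if not line or line.startswith(':'):
            continue
        # Split on the first colon only, so values such as URLs keep their colons
        name, _, value = line.partition(':')
        headers[name.strip()] = value.strip()
    return headers


raw = """
accept-language: zh-CN,zh;q=0.9
cache-control: max-age=0
upgrade-insecure-requests: 1
"""
print(parse_raw_headers(raw))
# {'accept-language': 'zh-CN,zh;q=0.9', 'cache-control': 'max-age=0', 'upgrade-insecure-requests': '1'}
```

The resulting dict can be passed straight to requests.get(url, headers=...).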

import re
import threading
import time
from multiprocessing.dummy import Pool  # thread pool with the multiprocessing API

import openpyxl
import requests
from fake_useragent import UserAgent
from lxml import etree
from requests.exceptions import RequestException

# Headers for the detail pages: a cookie copied from a logged-in browser session
# (it expires, so replace it with your own) plus a fixed desktop Chrome User-Agent
DETAIL_HEADERS = {
    'cookie': '_ga=GA1.2.50538359.1626942417; MKT_CKID=1626942416972.xsrkp.h14a; _RSG=vt4axMVXju2TUp4mgpTnUB; _RDG=28416d30204f5527dc27cd978da9f4f9ba; _RGUID=2e2d85f5-bb90-4df9-b7b1-773ab013379d; GUID=09031042315856507136; nfes_isSupportWebP=1; nfes_isSupportWebP=1; ibulanguage=CN; ibulocale=zh_cn; cookiePricesDisplayed=CNY; _gid=GA1.2.607075386.1635573932; MKT_Pagesource=PC; Session=smartlinkcode=U130026&smartlinklanguage=zh&SmartLinkKeyWord=&SmartLinkQuary=&SmartLinkHost=; Union=AllianceID=4897&SID=130026&OUID=&createtime=1635573932&Expires=1636178732460; MKT_CKID_LMT=1635573932634; _RF1=113.204.171.221; ASP.NET_SessionSvc=MTAuNjAuNDkuOTJ8OTA5MHxqaW5xaWFvfGRlZmF1bHR8MTYyMzE0MzgyNjI2MA; _bfa=1.1626942411832.2cm51p.1.1635573925821.1635580203950.4.26; _bfs=1.2; _jzqco=%7C%7C%7C%7C%7C1.429931237.1626942416968.1635580207564.1635580446965.1635580207564.1635580446965.0.0.0.19.19; __zpspc=9.4.1635580207.1635580446.2%232%7Cwww.baidu.com%7C%7C%7C%7C%23; appFloatCnt=7; _bfi=p1%3D290602%26p2%3D0%26v1%3D26%26v2%3D25',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/62.0.3202.94 Safari/537.36',
}

# openpyxl worksheets are not thread-safe, so appends from the pool are serialized
sheet_lock = threading.Lock()


def get_one_page(url, headers):
    """Return the page HTML, or None on a non-200 status or a request error."""
    try:
        res = requests.get(url, headers=headers, timeout=10)
        if res.status_code == 200:
            return res.text
        return None
    except RequestException:
        return None


def extract_field(text, label, pattern, suffix=''):
    """Apply pattern to the text after the last occurrence of label; '' if absent."""
    if label not in text:
        return ''
    found = re.findall(pattern, text[text.rfind(label):])
    return found[0].strip() + suffix if found else ''


def parse_one_page(html):
    # Each travel note on the list page is an <a class="journal-item cf"> element
    for ii in html.xpath('//a[@class="journal-item cf"]'):
        # Note title
        title = ii.xpath('.//dt[@class="ellipsis"]/text()')[0].strip()
        # Summary line on the list page: days, travel month, spend per person, companions
        di_li = ii.xpath('.//span[@class="tips_a"]/text()')[0].strip().split('\n ')
        try:
            day = di_li[0]
            trip_time = di_li[1]
            money = di_li[2].replace(',', '')
            people = di_li[3].replace(',', '')
        except IndexError:
            day = trip_time = money = people = ''

        # "<user> 发表于 <date>": split into user name and posting date
        user1 = ii.xpath('.//dd[@class="item-user"]/text()')[0].strip()
        user = re.findall(r"(.+?)发表于", user1)[0].strip()
        fa = user1[user1.rfind('发表于'):].replace('发表于 ', '')

        # Fetch the full note with the cookie headers; skip it if the request fails
        url = 'https://you.ctrip.com' + ii.xpath('./@href')[0].strip()
        detail = get_one_page(url, DETAIL_HEADERS)
        if detail is None:
            continue
        hji2 = etree.HTML(detail)

        # Body text, reduced to comma-separated runs of Chinese characters
        body = hji2.xpath('//div[@class="ctd_content"]')
        quanwen = body[0].xpath('string(.)').strip() if body else ''
        results = ','.join(re.findall(r'[\u4e00-\u9fa5]+', quanwen))

        # Trip summary box on the detail page
        cai = hji2.xpath('//div[@class="ctd_content_controls cf"]')
        result = cai[0].xpath('string(.)').strip().replace('\r\n', '') if cai else ''
        n = extract_field(result, '天数', r"天数:(.+?)天", '天')  # number of days
        m = extract_field(result, '时间', r"时间:(.+?)月", '月')  # travel month
        ren1111 = extract_field(result, '人均', r"人均:(.+?)元", '元')  # spend per person
        a = extract_field(result, '玩法', r"玩法:(.+?)作者去了这些地方")  # travel style
        # Companions: the three characters that follow "和谁:"
        c = result[result.rfind('和谁'):][3:6] if '和谁' in result else ''
        # Places the author visited, with separators and the label removed
        if '作者去了这些地方' in result:
            b = result[result.rfind('作者去了这些地方'):].replace(' ', '、')
            b = b.replace('、、 ', ',').replace('、', '').replace('作者去了这些地方:', '')
        else:
            b = ''

        with sheet_lock:
            sheet.append([fa, user, title, day, n, trip_time, m, people, c, money,
                          ren1111, a, b, url, results])
        print(url)


def main(offset):
    # Build the URL of list page number `offset`, then parse it
    base_url = 'https://you.ctrip.com/travels/qiandongnan2375/t2-p{}.html'
    print(offset)
    html = get_one_page(base_url.format(offset), headers)
    if html is None:
        return
    parse_one_page(etree.HTML(html))


if __name__ == '__main__':
    wb = openpyxl.Workbook()  # new workbook
    sheet = wb.active  # its active worksheet
    # Column names
    sheet.append(['Posted on', 'User', 'Title', 'Trip days', 'Days', 'Exact date',
                  'Travel month', 'Companions', 'With whom', 'Spend per person',
                  'Per person', 'Travel style', 'Places visited', 'Link', 'Full text'])
    # Request headers for the list pages: a random User-Agent
    # (older fake-useragent releases accept verify_ssl; drop it if yours does not)
    headers = {'User-Agent': UserAgent(verify_ssl=False).random}

    # Crawl list pages 70-74 with a pool of 8 threads
    print('Multithreaded crawl started')
    start_time = time.time()
    p = Pool(8)
    p.map(main, range(70, 75))
    # Close the pool and wait for its worker threads to finish
    p.close()
    p.join()
    # Output file
    wb.save(r'info8.xlsx')
    end_time = time.time()
    print('Multithreaded crawl finished')
    print('Elapsed:', end_time - start_time)
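The Ctrip script pulls the trip summary fields (天数, 时间, 人均) out of one flattened text block by finding the last occurrence of each label and matching the value up to its unit. The sketch below shows that step in isolation. It assumes the label may be followed by either a half-width or a full-width colon, because the live page may not use the half-width one the script's regexes expect. field_after_label and the sample string are hypothetical:

```python
import re


def field_after_label(text: str, label: str, unit: str) -> str:
    """Return '<value><unit>' for the last 'label: value unit' in text, or ''."""
    start = text.rfind(label)
    if start == -1:
        return ''
    # Accept a half-width or full-width colon after the label (an assumption)
    pattern = re.escape(label) + r'\s*[:：]\s*(.+?)\s*' + re.escape(unit)
    match = re.search(pattern, text[start:])
    return match.group(1) + unit if match else ''


summary = '天数:5 天 时间:10 月 人均:2000 元 和谁:朋友'  # hypothetical sample
print(field_after_label(summary, '天数', '天'))  # 5天
print(field_after_label(summary, '时间', '月'))  # 10月
print(field_after_label(summary, '人均', '元'))  # 2000元
print(repr(field_after_label(summary, '玩法', '元')))  # '' (label missing)
```

Returning an empty string on a missing label or a failed match keeps one odd page from crashing the whole thread-pool run.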

Qunar

The code is pretty much the same.
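One step both scripts share is the full-text column. It keeps only runs of CJK Unified Ideographs (U+4E00 to U+9FA5) and joins them with commas, which drops punctuation, digits, Latin text and whitespace. Here is that step on its own as a small sketch. chinese_runs is a hypothetical name:

```python
import re

# Runs of CJK Unified Ideographs in U+4E00..U+9FA5, the same class both scripts use
CHINESE_RUN = re.compile(r'[\u4e00-\u9fa5]+')


def chinese_runs(text: str) -> str:
    """Keep only runs of Chinese characters, joined with commas."""
    return ','.join(CHINESE_RUN.findall(text))


print(chinese_runs('第1天：到达凯里, 住西江千户苗寨 (Xijiang)!'))
# 第,天,到达凯里,住西江千户苗寨
```

Note the side effect: numbers such as the 1 in 第1天 are lost. If they matter, the class can be widened, for example to [\u4e00-\u9fa5\d]+.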

import re
import time

import openpyxl
import requests
from fake_useragent import UserAgent
from lxml import etree
from requests.exceptions import RequestException


def get_one_page(url, headers):
    """Return the page HTML, or None on a non-200 status or a request error."""
    try:
        res = requests.get(url, headers=headers, timeout=10)
        if res.status_code == 200:
            return res.text
        return None
    except RequestException:
        return None


def pick(node, path, index=0):
    """Return the stripped XPath result at `index`, or '' if there is none."""
    found = node.xpath(path)
    return found[index].strip() if len(found) > index else ''


def parse_one_page(html):
    # Travel-note entries on the list page; the last one has the class "list_item last_item"
    ii_list = html.xpath('//li[@class="list_item "]')
    ii_list += html.xpath('//li[@class="list_item last_item"]')[:1]
    for ii in ii_list:
        title = ''.join(ii.xpath('.//h2[@class="tit"]//text()'))  # note title
        user = pick(ii, './/span[@class="user_name"]/a/text()')  # user name
        day = pick(ii, './/span[@class="days"]/text()')  # trip length
        trip_time = pick(ii, './/span[@class="date"]/text()')  # travel date
        people = pick(ii, './/span[@class="people"]/text()')  # companions
        money = pick(ii, './/span[@class="fee"]/text()')  # spend per person

        # Route: the text from the last "行程" onwards is the itinerary, the rest the stops
        places = ''.join(ii.xpath('.//p[@class="places"]//text()'))
        cut = places.rfind('行程')
        xingcheng = places[cut:] if cut != -1 else ''
        tujing = places[:cut] if cut != -1 else places

        # Link to the full note; skip the entry if it has none or the request fails
        href = ii.xpath('.//h2[@class="tit"]/a/@href')
        if not href:
            continue
        url = 'https://travel.qunar.com/travelbook/note/' + href[0].strip().replace('/youji/', '')
        detail = get_one_page(url, {'User-Agent': ua.random})
        if detail is None:
            continue
        hji2 = etree.HTML(detail)

        # Itinerary text, reduced to comma-separated runs of Chinese characters
        schedule = hji2.xpath('//div[@class="b_panel_schedule"]')
        quanwen = schedule[0].xpath('string(.)').strip() if schedule else ''
        results = ','.join(re.findall(r'[\u4e00-\u9fa5]+', quanwen))

        # Summary fields at the top of the note; the value is the third text node
        chufa_date = pick(hji2, '//li[@class="f_item when"]//text()', 2)  # departure date
        tian = pick(hji2, '//li[@class="f_item howlong"]//text()', 2)  # days
        tian = tian + '天' if tian else ''
        fee = pick(hji2, '//li[@class="f_item howmuch"]//text()', 2)  # cost per person
        fee = fee + '元' if fee else ''
        ren = pick(hji2, '//li[@class="f_item who"]//text()', 2)  # with whom
        wan = ''.join(hji2.xpath('//li[@class="f_item how"]//text()'))
        wan = wan.replace('玩法/', '').replace('\xa0', ' ').strip()  # travel style

        sheet.append([user, title, day, tian, trip_time, chufa_date, people, ren, fee, money,
                      xingcheng, tujing, wan, url, results])
        print(url)


def main(offset):
    # Build the URL of list page number `offset`, then parse it
    base_url = 'https://travel.qunar.com/search/gonglue/22-qiandongnan-300125/hot_ctime/{}.htm'
    print(offset)
    html = get_one_page(base_url.format(offset), headers)
    if html is None:
        return
    parse_one_page(etree.HTML(html))


if __name__ == '__main__':
    wb = openpyxl.Workbook()  # new workbook
    sheet = wb.active  # its active worksheet
    # Column names
    sheet.append(['User', 'Title', 'Trip days', 'Days', 'Exact date', 'Travel date',
                  'Companions', 'With whom', 'Spend per person', 'Per person',
                  'Itinerary', 'Route', 'Travel style', 'Link', 'Full text'])
    # One UserAgent object, reused for a random User-Agent on every request
    # (older fake-useragent releases accept verify_ssl; drop it if yours does not)
    ua = UserAgent(verify_ssl=False)
    headers = {'User-Agent': ua.random}

    # Crawl list pages 30-34 one at a time, pausing 6 s before each page
    # (thread-pool variant, disabled: p = Pool(8); p.map(main, range(1, 4)))
    print('Crawl started')
    start_time = time.time()
    for i in range(30, 35):
        time.sleep(6)
        main(i)
    # Output file
    wb.save(r'去哪儿6.xlsx')
    end_time = time.time()
    print('Crawl finished')
    print('Elapsed:', end_time - start_time)
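The Qunar run fetches list pages one at a time with a fixed 6-second pause, presumably to avoid being rate-limited. The sketch below keeps the same idea and adds a simple retry with exponential backoff. It assumes a fetch function that returns None on failure, the way get_one_page does. crawl_pages, fake_fetch and the delay and retry values are hypothetical. The fetcher is passed in, so the loop can be tried without network access:

```python
import time
from typing import Callable, Dict, Iterable, Optional


def crawl_pages(
    fetch: Callable[[str], Optional[str]],
    base_url: str,
    offsets: Iterable[int],
    delay: float = 6.0,
    retries: int = 3,
) -> Dict[int, Optional[str]]:
    """Fetch base_url.format(offset) for each offset, pausing and retrying."""
    pages = {}
    for offset in offsets:
        url = base_url.format(offset)
        html = None
        for attempt in range(retries):
            # Wait before every request; the wait doubles after each failed attempt
            time.sleep(delay * (2 ** attempt))
            html = fetch(url)
            if html is not None:
                break
        pages[offset] = html  # None if every attempt failed
    return pages


# Demo with a stand-in fetcher that fails once, then succeeds (no network needed)
attempts = []


def fake_fetch(url: str) -> Optional[str]:
    attempts.append(url)
    return None if len(attempts) == 1 else '<html></html>'


result = crawl_pages(fake_fetch, 'https://example.com/list/{}.htm', range(30, 33), delay=0)
print(len(attempts), sorted(result))  # 4 [30, 31, 32]
```

In the Qunar script, the fetcher would be a thin wrapper around get_one_page, and the returned HTML would then go through parse_one_page as before.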
