Spring Cloud Alibaba: Installing a Seata Server Cluster with Docker

Posted by 小毕超


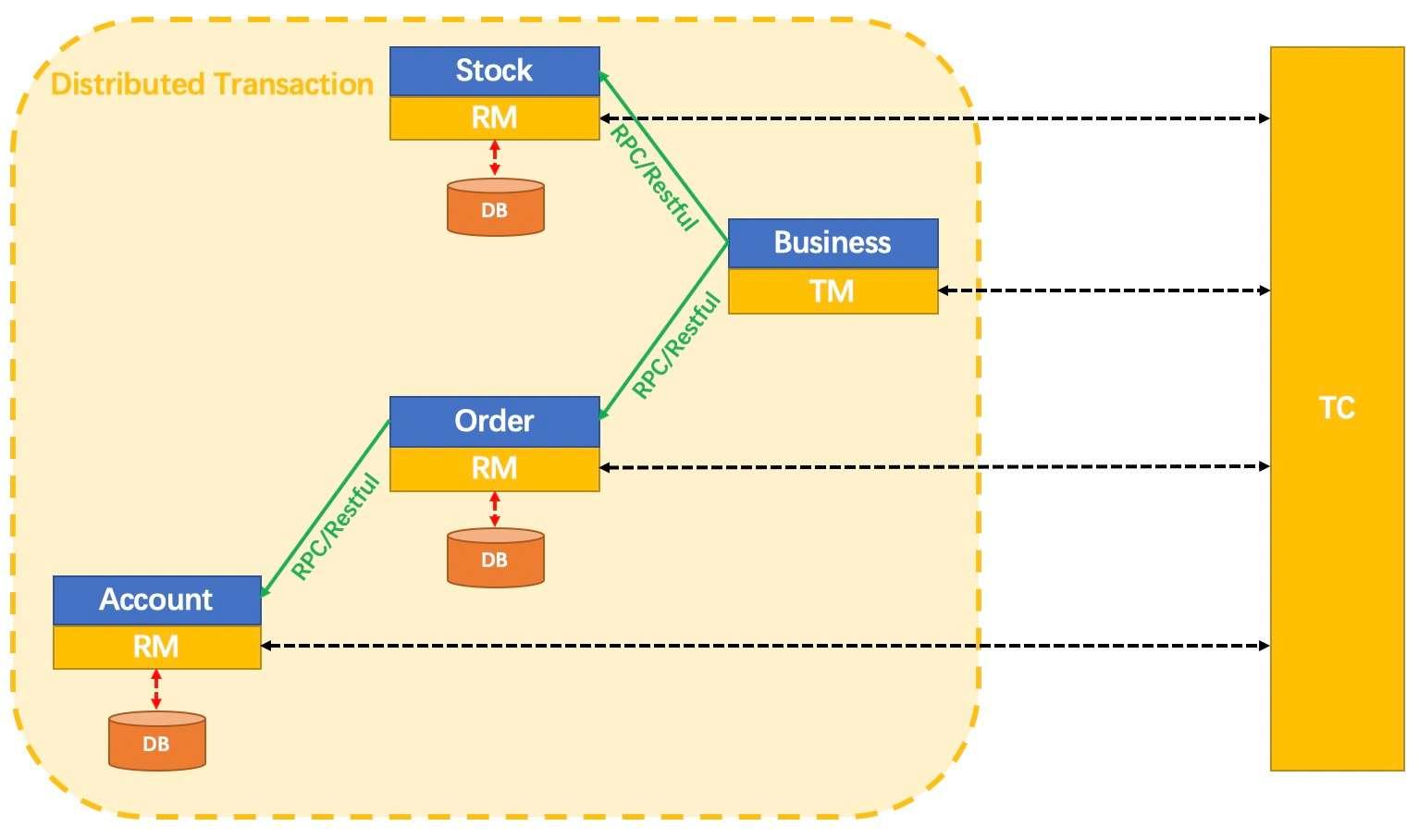

1. Seata

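Seata is an open-source distributed transaction solution dedicated to providing high-performance, easy-to-use distributed transaction services. It offers the AT, TCC, SAGA, and XA transaction modes, giving users a one-stop solution for distributed transactions.

The steps below install a Seata Server cluster with Docker, relying mainly on docker-compose. Before starting, make sure Docker and docker-compose are installed on every server. Because the Seata servers register themselves with the Nacos registry, make sure Nacos is installed as well.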
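The prerequisite above (Docker and docker-compose present on each host) can be checked before going further. The following is a minimal sketch, assuming the tools are installed under their usual binary names `docker` and `docker-compose`; the helper name `missing_tools` is made up for illustration.

```python
import shutil


def missing_tools(tools):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


# Assumed binary names; adjust if your hosts use the `docker compose` plugin instead.
REQUIRED = ["docker", "docker-compose"]

missing = missing_tools(REQUIRED)
if missing:
    print("Missing on this host:", ", ".join(missing))
else:
    print("All required tools found.")
```

Run it once on each of the three hosts; an empty result only shows that the binaries exist, not that the Docker daemon is running.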

For setting up a Nacos cluster, see:

Building a Nacos Cluster with Docker: https://blog.csdn.net/qq_43692950/article/details/122031557

Seata website: http://seata.io

2. Setting Up the Seata Server Cluster

Cluster layout:

| Host | Role |
|---|---|
| 192.168.40.130 | mysql, seata-server1 |
| 192.168.40.167 | seata-server2 |
| 192.168.40.168 | seata-server3 |

First, create a new configuration entry in Nacos:

Data ID: seataServer.properties

Group: SEATA_GROUP

Content:

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableTmClientBatchSendRequest=false

transport.enableRmClientBatchSendRequest=true

transport.rpcRmRequestTimeout=5000

transport.rpcTmRequestTimeout=10000

transport.rpcTcRequestTimeout=10000

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

service.vgroupMapping.default_tx_group=default

service.default.grouplist=127.0.0.1:8091

service.enableDegrade=false

service.disableGlobalTransaction=false

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=false

client.rm.tableMetaCheckerInterval=60000

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.rm.sagaJsonParser=fastjson

client.rm.tccActionInterceptorOrder=-2147482648

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

client.tm.interceptorOrder=-2147482648

store.mode=db

store.lock.mode=db

store.session.mode=db

store.publicKey=

store.file.dir=file_store/data

store.file.maxBranchSessionSize=16384

store.file.maxGlobalSessionSize=512

store.file.fileWriteBufferCacheSize=16384

store.file.flushDiskMode=async

store.file.sessionReloadReadSize=100

store.db.datasource=druid

store.db.dbType=mysql

store.db.driverClassName=com.mysql.jdbc.Driver

store.db.url=jdbc:mysql://192.168.40.130:3306/seata?useUnicode=true&rewriteBatchedStatements=true

store.db.user=root

store.db.password=root123

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.distributedLockTable=distributed_lock

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

store.redis.mode=single

store.redis.single.host=127.0.0.1

store.redis.single.port=6379

store.redis.sentinel.masterName=

store.redis.sentinel.sentinelHosts=

store.redis.maxConn=10

store.redis.minConn=1

store.redis.maxTotal=100

store.redis.database=0

store.redis.password=

store.redis.queryLimit=100

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

server.distributedLockExpireTime=10000

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.undo.compress.enable=true

client.undo.compress.type=zip

client.undo.compress.threshold=64k

log.exceptionRate=100

transport.serialization=seata

transport.compressor=none

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

metrics.exporterPrometheusPort=9898

tcc.fence.logTableName=tcc_fence_log

tcc.fence.cleanPeriod=1h
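Instead of pasting the properties into the Nacos console, the same entry can be published through the Nacos v1 Open API (`POST /nacos/v1/cs/configs`). The sketch below only builds the request and leaves sending it to a separate function. It assumes Nacos is reachable at 192.168.40.1:8848 (the address used in registry.conf later in this article) and that authentication is disabled; with authentication enabled an access token would also be required. The function names are illustrative.

```python
from urllib import parse, request


def build_publish_request(server_addr, data_id, group, content, namespace=""):
    """Build (without sending) a POST to the Nacos v1 publish-config endpoint."""
    form = {"dataId": data_id, "group": group, "content": content, "type": "properties"}
    if namespace:
        form["tenant"] = namespace  # this endpoint calls the namespace ID "tenant"
    body = parse.urlencode(form).encode("utf-8")
    url = f"http://{server_addr}/nacos/v1/cs/configs"
    return request.Request(url, data=body, method="POST")


def publish(req, timeout=5):
    """Send the request; Nacos answers with the text 'true' on success."""
    with request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8").strip() == "true"


# Two lines stand in for the full property list; load the real content from a file.
sample = "store.mode=db\nstore.db.dbType=mysql\n"
req = build_publish_request(
    "192.168.40.1:8848", "seataServer.properties", "SEATA_GROUP", sample
)
print(req.get_method(), req.full_url)
```

Calling `publish(req)` performs the actual upload. Keeping request construction separate makes it easy to inspect the Data ID and Group before anything is sent.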

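In the MySQL instance referenced by store.db.url, create a database named seata and run the following SQL script to create three tables: global_table, branch_table, and lock_table.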

    -- the table to store GlobalSession data
    drop table if exists `global_table`;
    create table `global_table` (
      `xid` varchar(128) not null,
      `transaction_id` bigint,
      `status` tinyint not null,
      `application_id` varchar(32),
      `transaction_service_group` varchar(32),
      `transaction_name` varchar(128),
      `timeout` int,
      `begin_time` bigint,
      `application_data` varchar(2000),
      `gmt_create` datetime,
      `gmt_modified` datetime,
      primary key (`xid`),
      key `idx_gmt_modified_status` (`gmt_modified`, `status`),
      key `idx_transaction_id` (`transaction_id`)
    );
    -- the table to store BranchSession data
    drop table if exists `branch_table`;
    create table `branch_table` (
      `branch_id` bigint not null,
      `xid` varchar(128) not null,
      `transaction_id` bigint,
      `resource_group_id` varchar(32),
      `resource_id` varchar(256),
      `lock_key` varchar(128),
      `branch_type` varchar(8),
      `status` tinyint,
      `client_id` varchar(64),
      `application_data` varchar(2000),
      `gmt_create` datetime,
      `gmt_modified` datetime,
      primary key (`branch_id`),
      key `idx_xid` (`xid`)
    );
    -- the table to store lock data
    drop table if exists `lock_table`;
    create table `lock_table` (
      `row_key` varchar(128) not null,
      `xid` varchar(96),
      -- bigint instead of long: in MySQL, LONG is an alias for MEDIUMTEXT
      `transaction_id` bigint,
      `branch_id` bigint,
      `resource_id` varchar(256),
      `table_name` varchar(32),
      `pk` varchar(36),
      `gmt_create` datetime,
      `gmt_modified` datetime,
      primary key (`row_key`)
    );
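Since the table names appear both in the Nacos properties (the `store.db.*Table` keys) and in the SQL script, it is easy for the two to drift apart. The sketch below compares them, using shortened copies of both texts as input. The helper names are illustrative, and the regular expression only understands simple `create table` statements.

```python
import re


def configured_tables(properties_text):
    """Collect the values of store.db.*Table keys from properties text."""
    tables = {}
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.startswith("store.db.") and key.endswith("Table"):
            tables[key] = value.strip()
    return tables


def created_tables(sql_text):
    """Find table names in `create table` statements (backticks optional)."""
    pattern = re.compile(
        r"create\s+table\s+(?:if\s+not\s+exists\s+)?`?(\w+)`?", re.IGNORECASE
    )
    return set(pattern.findall(sql_text))


# Shortened copies of the configuration and the script from this article.
props = """
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.distributedLockTable=distributed_lock
store.db.lockTable=lock_table
"""
sql = """
create table `global_table` (`xid` varchar(128) not null, primary key (`xid`));
create table `branch_table` (`branch_id` bigint not null, primary key (`branch_id`));
create table `lock_table` (`row_key` varchar(128) not null, primary key (`row_key`));
"""

missing = sorted(set(configured_tables(props).values()) - created_tables(sql))
print("configured but not created:", missing)
```

With the inputs from this article the check reports `distributed_lock`. Whether the server actually needs that table depends on the Seata release, so compare against the SQL script shipped with the version you run.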

Now set up the seata-server environment.

First, create the configuration directory on all three hosts, or mount a shared directory over NFS:

mkdir -p /seata-server/config

In that directory, create the registry.conf registration configuration file:

vi /seata-server/config/registry.conf

Write the following content:

    registry {
      type = "nacos"
      nacos {
        # Name under which the Seata server registers in Nacos; clients look the service up by this name
        application = "seata-server"
        # Set the Nacos IP and port to match your actual environment
        serverAddr = "192.168.40.1:8848"
        # Namespace to use in Nacos
        namespace = ""
        cluster = "default"
        username = "nacos"
        password = "nacos"
      }
    }
    config {
      type = "nacos"
      nacos {
        # Set the Nacos IP and port to match your actual environment
        serverAddr = "192.168.40.1:8848"
        # Namespace to use in Nacos
        namespace = ""
        group = "SEATA_GROUP"
        username = "nacos"
        password = "nacos"
        # Since v1.4.2, all settings can be loaded from a single Nacos dataId; you only need to add this dataId entry
        dataId: "seataServer.properties"
      }
    }

Write the docker-compose file:

    version: "3.1"
    services:
      seata-server:
        image: seataio/seata-server:1.4.2
        hostname: seata-server
        ports:
          - "8091:8091"
        environment:
          # Port the Seata server listens on
          - SEATA_PORT=8091
          # IP registered in Nacos; clients reach the Seata server through this IP.
          # Mind the difference between public and private IPs.
          - SEATA_IP=192.168.40.130
          - SEATA_CONFIG_NAME=file:/root/seata-config/registry
        volumes:
          # registry.conf points to the Nacos config center, so only registry.conf needs to be placed in /seata-server/config
          - "/seata-server/config:/root/seata-config"

Remember to change SEATA_IP to the IP of each host.
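Because only SEATA_IP differs between the three hosts, the per-host files can be generated from one template rather than edited by hand. The following is a minimal sketch using the host IPs from the cluster layout table; `render_compose` is an illustrative helper, and the template repeats the compose settings from this article without the comments.

```python
# Template with a single placeholder for the host-specific value.
COMPOSE_TEMPLATE = """\
version: "3.1"
services:
  seata-server:
    image: seataio/seata-server:1.4.2
    hostname: seata-server
    ports:
      - "8091:8091"
    environment:
      - SEATA_PORT=8091
      - SEATA_IP={seata_ip}
      - SEATA_CONFIG_NAME=file:/root/seata-config/registry
    volumes:
      - "/seata-server/config:/root/seata-config"
"""

# Hosts taken from the cluster layout table.
HOSTS = ["192.168.40.130", "192.168.40.167", "192.168.40.168"]


def render_compose(seata_ip):
    """Return the docker-compose content for the host with the given IP."""
    return COMPOSE_TEMPLATE.format(seata_ip=seata_ip)


for ip in HOSTS:
    print(f"--- docker-compose.yml for {ip} ---")
    print(render_compose(ip))
```

Each rendered text can then be saved as docker-compose.yml on the matching host.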

Start the container:

docker-compose up -d

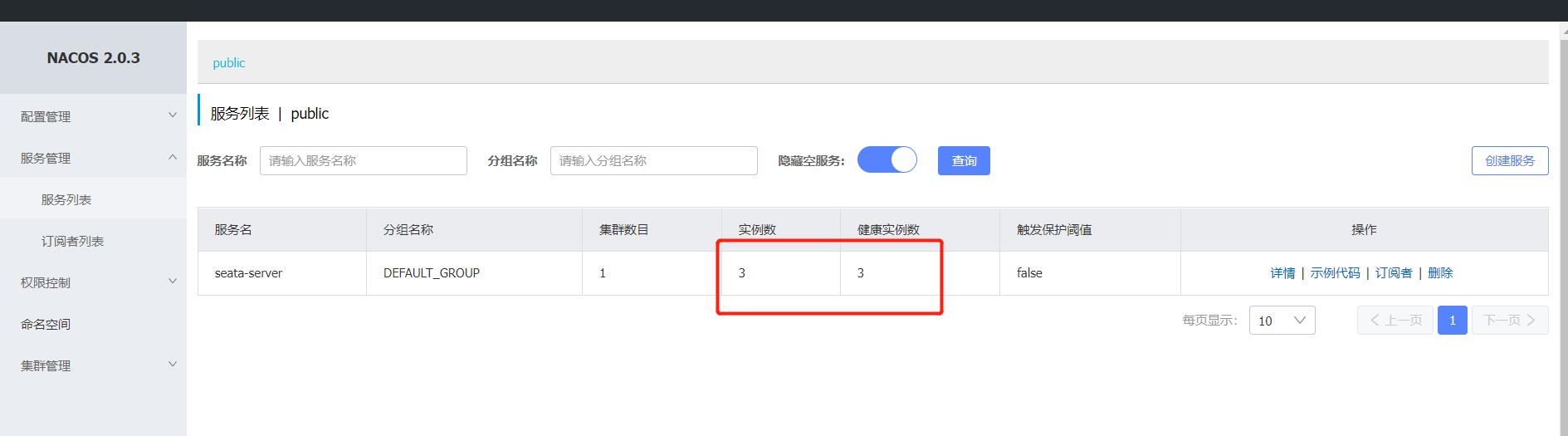

Then check the service list in Nacos:

Three instances are already up.
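The same check can be scripted against the Nacos v1 Open API (`GET /nacos/v1/ns/instance/list`). The sketch below assumes the service name `seata-server` from registry.conf, the default Nacos group (registry.conf above does not set one), and that authentication is disabled. The sample payload is illustrative and only mimics the shape of the response; it was not captured from a real cluster.

```python
import json
from urllib import parse, request


def instance_list_url(server_addr, service_name, namespace=""):
    """Build the Nacos v1 URL that lists the instances of one service."""
    query = {"serviceName": service_name}
    if namespace:
        query["namespaceId"] = namespace
    return f"http://{server_addr}/nacos/v1/ns/instance/list?{parse.urlencode(query)}"


def healthy_endpoints(payload):
    """Return sorted 'ip:port' strings for every healthy instance in the payload."""
    return sorted(
        f"{host['ip']}:{host['port']}"
        for host in payload.get("hosts", [])
        if host.get("healthy")
    )


def fetch_instances(url, timeout=5):
    """Call Nacos and decode the JSON response (needs a reachable server)."""
    with request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Illustrative payload: two healthy nodes and one unhealthy node.
sample = {
    "hosts": [
        {"ip": "192.168.40.130", "port": 8091, "healthy": True},
        {"ip": "192.168.40.167", "port": 8091, "healthy": True},
        {"ip": "192.168.40.168", "port": 8091, "healthy": False},
    ]
}

print(instance_list_url("192.168.40.1:8848", "seata-server"))
print(healthy_endpoints(sample))
```

Against a live cluster, `healthy_endpoints(fetch_instances(url))` should list all three hosts on port 8091 once every container has registered.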

