ELK on CentOS 7 with Filebeat and elasticsearch-head: detailed installation steps

Posted Jt00


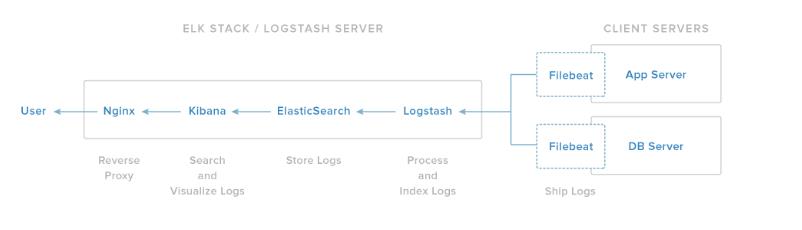

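Here is a diagram first; it should give you a rough idea of the overall architecture.

Install the JDK (version 1.8 or later is required):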

yum -y localinstall jdk-8u73-linux-x64.rpm

Install Elasticsearch 5.2.1. Install and run it as a regular (non-root) user:

tar -zxvf elasticsearch-5.2.1.tar.gz

[es@node elasticsearch-5.2.1]$ ./bin/elasticsearch

Fixing the startup errors:

ERROR: bootstrap checks failed

max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Add the following to /etc/security/limits.conf (log in again afterwards so the new limits take effect):

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

Add the following to /etc/sysctl.conf (then run sysctl -p as root to apply it):

vm.max_map_count=262144

fs.file-max=65536
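Before restarting Elasticsearch it can help to confirm that the new limits are actually in effect. The following is a minimal sketch, not part of the original steps: `check_min` is a hypothetical helper name, and the thresholds are the ones quoted in the bootstrap check errors above.

```shell
# Hypothetical helper: succeed (exit status 0) if the actual value meets the minimum.
# Assumes both values are plain integers (an "unlimited" ulimit is not handled).
check_min() {
  name=$1; actual=$2; required=$3
  if [ "$actual" -ge "$required" ]; then
    echo "OK   $name = $actual (>= $required)"
  else
    echo "LOW  $name = $actual (need >= $required)"
    return 1
  fi
}

# Usage, run as the user that starts Elasticsearch:
#   check_min "max file descriptors" "$(ulimit -n)" 65536
#   check_min "vm.max_map_count" "$(sysctl -n vm.max_map_count)" 262144
```

If either line reports LOW, the limits.conf change has probably not been picked up by the current login session, or sysctl -p has not been run yet.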

After that, Elasticsearch starts successfully.

You can also start it with an explicit cluster name and node name:

./elasticsearch -Ecluster.name=my_cluster_name -Enode.name=my_node_name

Basic health checks:

[es@node bin]$ curl -XGET '192.168.36.141:9200/_cat/master?v&pretty'

id host ip node

lbWUxIvITHy_0SufW6fBtw 192.168.36.141 192.168.36.141 my_node_name

[es@node bin]$ curl -XGET '192.168.36.141:9200/_cat/health?v&pretty'

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1499173093 08:58:13 my_cluster_name green 1 1 0 0 0 0 0 0 - 100.0%

[es@node bin]$
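For scripting, the status column can be pulled out of the `_cat/health?v` table instead of being read by eye. This is a small sketch under the assumption that the output has the header row shown above; `health_status` is a hypothetical helper name, not something shipped with Elasticsearch.

```shell
# Hypothetical helper: read `_cat/health?v` output on stdin and print the
# value in the column whose header is "status".
health_status() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "status") col = i; next }
       col     { print $col; exit }'
}

# Usage against the node from this article:
#   curl -s '192.168.36.141:9200/_cat/health?v' | health_status
```

Locating the column by its header name rather than by position keeps the helper working if the column order differs between versions.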

Practicing with indices and documents:

Create an index, then list the indices:

[es@node bin]$ curl -XPUT 'localhost:9200/customer?pretty'

[2017-07-04T09:02:16,302][INFO ][o.e.c.m.MetaDataCreateIndexService] [my_node_name] [customer] creating index, cause [api], templates [], shards [5]/[1], mappings []

{

"acknowledged" : true,

"shards_acknowledged" : true

}

[es@node bin]$ curl -XGET 'localhost:9200/_cat/indices?v&pretty'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open customer gtxHBupjSQ-4DNgMAMnuqQ 5 1 0 0 650b 650b

[es@node bin]$

The full sequence of commands from my shell history:

# Create the index and list the indices
curl -XPUT 'localhost:9200/customer?pretty'

curl -XGET 'localhost:9200/_cat/indices?v&pretty'

# Index a document with ID 1, then fetch it
curl -XPUT 'localhost:9200/customer/external/1?pretty' -H 'Content-Type: application/json' -d '{ "name": "John Doe" }'

curl -XGET 'localhost:9200/customer/external/1?pretty'

# Delete the whole index and confirm that it is gone
curl -XDELETE 'localhost:9200/customer?pretty'

curl -XGET 'localhost:9200/_cat/indices?v&pretty'

# Index document 1 again (the index is created automatically)
curl -XPUT 'localhost:9200/customer/external/1?pretty' -H 'Content-Type: application/json' -d '{ "name": "John Doe" }'

# Note: the bare "PUT /customer/external/1?pretty" form used in the official docs is Kibana Console syntax and does not run in a shell; use curl as below.

# Replace document 1
curl -XPUT 'localhost:9200/customer/external/1?pretty' -H 'Content-Type: application/json' -d '{ "name": "Jane Doe" }'

# Index document 2, then delete it
curl -XPUT 'localhost:9200/customer/external/2?pretty' -H 'Content-Type: application/json' -d '{ "name": "Jane Doe" }'

curl -XDELETE 'localhost:9200/customer/external/2?pretty'
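The requests above all follow the same index/type/id URL layout, so the repetition can be wrapped in two small functions. This is only a sketch: `ES_HOST`, `doc_url`, and `put_doc` are names made up for this example, and the host defaults to the localhost:9200 address used above.

```shell
# Assumed default; override ES_HOST to point at another node.
ES_HOST=${ES_HOST:-localhost:9200}

# Hypothetical helper: build the URL of one document (index, type, id).
doc_url() {
  printf '%s/%s/%s/%s?pretty' "$ES_HOST" "$1" "$2" "$3"
}

# Hypothetical helper: create or replace a document from a JSON string.
put_doc() {
  curl -XPUT "$(doc_url "$1" "$2" "$3")" -H 'Content-Type: application/json' -d "$4"
}

# Usage:
#   put_doc customer external 1 '{ "name": "John Doe" }'
#   curl -XGET "$(doc_url customer external 1)"
```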

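The official documentation notes that these requests are easier to write and read in Kibana.

In any case, Elasticsearch is installed. On to the next component.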

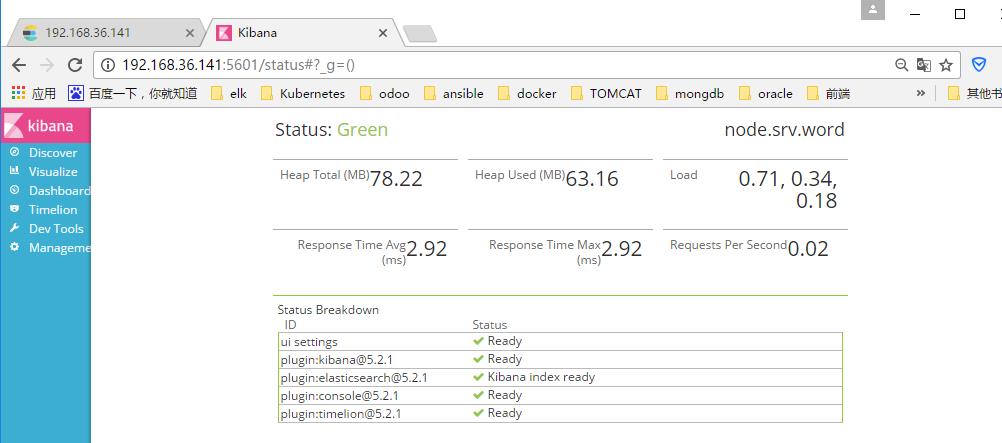

Install Kibana:

tar zxvf kibana-5.2.1-linux-x86_64.tar.gz

./kibana &

http://192.168.36.141:5601/status#?_g=()

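Next, install Filebeat:

Filebeat runs inside a Docker container here. The image is one I built earlier and am reusing as is; it is hosted on another site of mine, and I will publish it when I have time:

docker run -itd --name java -p 5000:22 bc74bce8afa1

For testing, start my Java package in the background and send its output to nohup.out:

nohup java -jar /data/exchange/exchange-1.4.jar > nohup.out 2>&1 &

This produces nohup.out, the file Filebeat will ship. Unpack Filebeat:

tar zxvf filebeat-5.2.1-linux-x86_64.tar.gz

Configuration: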

[root@4e07f322e28f ~]# cat filebeat-5.2.1-linux-x86_64/filebeat.yml

#filebeat.prospectors:
#- input_type: log
#  paths:
#    - /root/logstash-tutorial.log
#output.logstash:
#  hosts: ["192.168.36.150:5043"]
filebeat.prospectors:
- input_type: log
  paths:
    - /root/nohup.out
#    - /var/log/secure
#    - /var/log/messages
#    - /var/log/*.log
  # Note: custom fields are nested under "fields" unless fields_under_root: true
  # is set, so Logstash sees this value as [fields][type], not [type].
  fields:
    type: syslog
output.logstash:
  hosts: ["192.168.36.141:5043"]

[root@4e07f322e28f ~]#

Start Filebeat:

./filebeat-5.2.1-linux-x86_64/filebeat -e -c filebeat-5.2.1-linux-x86_64/filebeat.yml -d "publish"

Install Logstash:

tar zxvf logstash-5.2.1.tar.gz

Configure the pipeline:

[root@node logstash-5.2.1]# cat first-pipline.conf

input {
  beats {
    port => 5043
    # ssl => true
    # ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
    # ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
  }
}
filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
      add_field => [ "received_at", "%{@timestamp}" ]
      add_field => [ "received_from", "%{host}" ]
    }
    syslog_pri { }
    date {
      match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}
output {
  elasticsearch {
    hosts => ["192.168.36.141:9200"]
    sniffing => true
    manage_template => false
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
    document_type => "%{[@metadata][type]}"
  }
}
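To get a feel for what the grok pattern above pulls out of a line, here is a rough shell approximation. It is only a sketch: the sed regular expression is a simplified stand-in written for this example, not the real SYSLOGTIMESTAMP, SYSLOGHOST, or DATA definitions that grok uses, and `parse_syslog_line` is a hypothetical helper name.

```shell
# Hypothetical helper: split a classic syslog line into the fields that the
# grok pattern names. Prints nothing when the line does not match.
parse_syslog_line() {
  printf '%s\n' "$1" | sed -nE 's/^([A-Z][a-z]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([^:[ ]+)(\[([0-9]+)\])?: (.*)$/syslog_hostname=\2 syslog_program=\3 syslog_pid=\5 syslog_message=\6/p'
}

# Usage:
#   parse_syslog_line 'Jul  4 09:02:16 node sshd[1234]: Accepted password for es'
#   parse_syslog_line "$(tail -n 1 /var/log/messages)"
```

A line in a different format, such as typical Java application output, produces no output here; in Logstash, a line that fails the grok match is tagged _grokparsefailure instead.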

Start it:

./bin/logstash -f first-pipline.conf --config.reload.automatic

Finally, create an index pattern in Kibana:

And the data shows up:
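If nothing appears in Kibana, it is worth checking that the daily index exists in Elasticsearch. With the output setting index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}" and events coming from Filebeat, the index name is filebeat- followed by the event date in UTC, so the matching Kibana index pattern is filebeat-*. The sketch below prints the expected name for today; `beat_index_today` is a hypothetical helper name.

```shell
# Hypothetical helper: print the index name used for events timestamped
# today (UTC) for the given beat name.
beat_index_today() {
  date -u "+$1-%Y.%m.%d"
}

# Usage against the node from this article:
#   curl -XGET "192.168.36.141:9200/_cat/indices/$(beat_index_today filebeat)?v"
```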

Install elasticsearch-head:

Download it here: https://github.com/mobz/elasticsearch-head

unzip elasticsearch-head-master.zip

wget https://nodejs.org/dist/v5.10.1/node-v5.10.1-linux-x64.tar.gz

tar zxvf node-v5.10.1-linux-x64.tar.gz

ln -s /root/node-v5.10.1-linux-x64/bin/node /usr/local/bin/node

ln -s /root/node-v5.10.1-linux-x64/bin/npm /usr/local/bin/npm

cd elasticsearch-head-master

npm install

npm install -g cnpm --registry=https://registry.npm.taobao.org

su es

vi Gruntfile.js

npm install -g grunt --registry=https://registry.npm.taobao.org

npm install

In _site/app.js, point the default base URI at the Elasticsearch node:

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://192.168.36.141:9200";

In Gruntfile.js, set the hostname and port that the server listens on:

server: {
    options: {
        hostname: '192.168.36.141',
        port: 9100,
        base: '.',
        keepalive: true
    }
}

cd node_modules/grunt/bin/

./grunt server

OK, all done!

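----------

Author: jt

Source: http://www.cnblogs.com/Jt00/

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be kept and a clearly visible link to the original must be given on the article page; otherwise the author reserves the right to pursue legal liability.

If you find any errors in this article, please point them out so that others are not misled.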
