Learning Transferable Adversarial Perturbations

Posted by Daft shiner

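On Zhihu I follow an expert, ZehaoDou (窦泽皓), who posts a write-up of a paper he has read almost every day or every other day. I really admire his perseverance, so I want to follow his example and keep notes on some of the papers I read, so I can find them again later. His homepage: ZehaoDou 窦泽皓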

2021.12.4, paper #1 (NeurIPS 2021), close reading

Paper link: Learning Transferable Adversarial Perturbations

Code link: Learning Transferable Adversarial Perturbations (as of this writing, the authors have only uploaded an empty skeleton)

Transferability of adversarial examples: the same adversarial example is misclassified by several different classifiers.

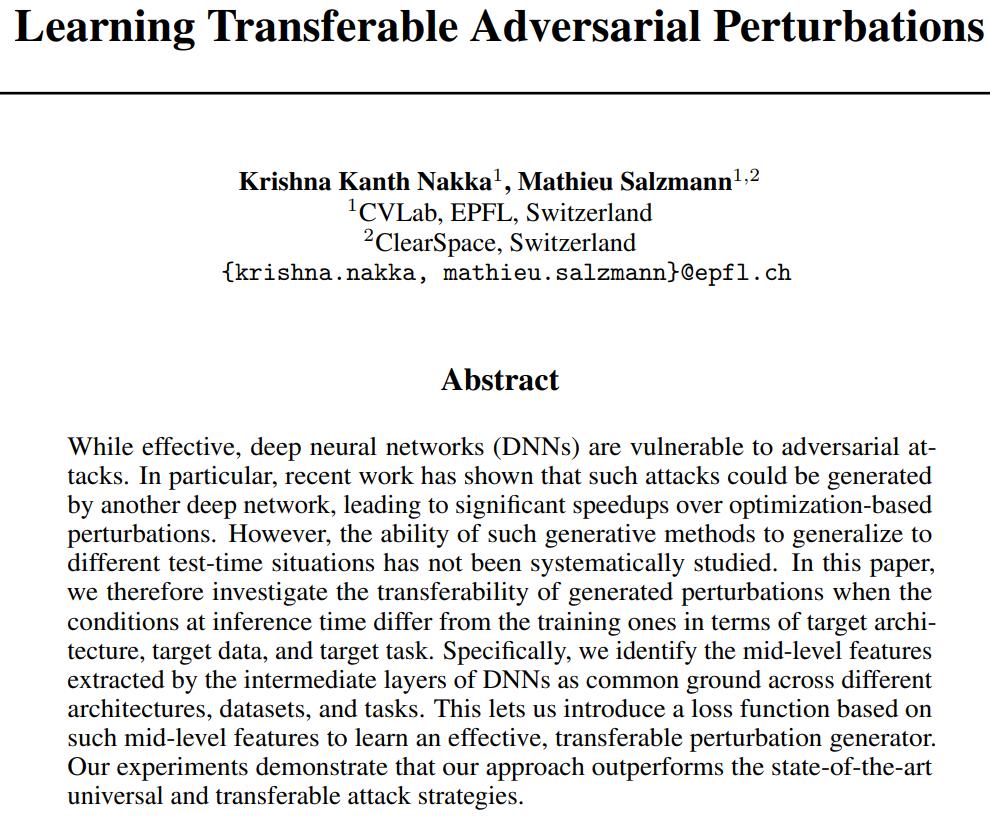
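Transferability is usually quantified with something like a fooling rate: the fraction of images whose predicted label changes once the perturbation is added, measured on a *target* model that was not used to craft the perturbation. The sketch below is a minimal version under that assumption; the function name and the toy predictions are hypothetical, and the paper's exact evaluation metric may differ.

```python
from typing import Sequence


def fooling_rate(clean_preds: Sequence[int], adv_preds: Sequence[int]) -> float:
    """Fraction of inputs whose predicted label changes after perturbation.

    clean_preds[i] and adv_preds[i] are the labels that one *target* classifier
    predicts for clean image i and for its perturbed version.
    """
    if len(clean_preds) != len(adv_preds):
        raise ValueError("prediction lists must have the same length")
    if len(clean_preds) == 0:
        raise ValueError("need at least one prediction")
    flipped = sum(1 for c, a in zip(clean_preds, adv_preds) if c != a)
    return flipped / len(clean_preds)


# Toy, made-up predictions of one target model on four images.
clean = [3, 7, 1, 0]
print(fooling_rate(clean, [5, 7, 2, 9]))  # 3 of 4 labels flipped -> 0.75
print(fooling_rate(clean, [5, 7, 1, 4]))  # a second hypothetical attack: 2 of 4 -> 0.5
```

A perturbation "transfers" well when this rate stays high on target models (or data, or tasks) that differ from the one the attacker used.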

The paper studies the transferability of perturbations along three axes:

- target architecture, e.g., the generator was trained to attack a VGG-16 but the target network is a ResNet152;

- target data, e.g., the generator was trained using the Paintings dataset but the test data comes from ImageNet;

- target task, e.g., the generator was trained to attack an image recognition model but faces an object detector at test time.

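Because the authors found that intermediate-layer features are highly similar across model architectures, data and tasks, the paper uses the intermediate features of DNNs to improve the transferability of the perturbation generator across models, data and tasks.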
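The paper argues for this similarity mainly through filter visualizations. One common way to put a number on "how similar are two layers' features" is linear CKA (centered kernel alignment); this is my substitution for illustration, not a measure the paper is confirmed to use. A minimal pure-Python sketch, assuming a layer's activations from two networks have been collected on the same n inputs and flattened into n × p matrices (the helper names and toy numbers are hypothetical):

```python
import math
from typing import List

Matrix = List[List[float]]  # rows: the same n input images; columns: feature dimensions


def center_columns(m: Matrix) -> Matrix:
    """Subtract each column's mean so every feature has zero mean over the inputs."""
    n = len(m)
    means = [sum(row[j] for row in m) / n for j in range(len(m[0]))]
    return [[v - means[j] for j, v in enumerate(row)] for row in m]


def cross_frobenius_sq(a: Matrix, b: Matrix) -> float:
    """Return ||A^T B||_F^2 for A of shape n x p and B of shape n x q."""
    total = 0.0
    for i in range(len(a[0])):
        for j in range(len(b[0])):
            dot = sum(a[k][i] * b[k][j] for k in range(len(a)))
            total += dot * dot
    return total


def linear_cka(x: Matrix, y: Matrix) -> float:
    """Linear CKA between two feature matrices computed on the same inputs.

    Returns a value in [0, 1]; 1 means the representations agree up to an
    orthogonal transform (e.g. a channel permutation) and isotropic scaling.
    Assumes neither matrix is constant, otherwise the denominator is zero.
    """
    x, y = center_columns(x), center_columns(y)
    num = cross_frobenius_sq(x, y)
    den = math.sqrt(cross_frobenius_sq(x, x)) * math.sqrt(cross_frobenius_sq(y, y))
    return num / den


# Made-up activations of a hypothetical "network A" on four images (2 channels).
feats_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
# Hypothetical "network B": same information, channels swapped and scaled by 2.
feats_b = [[2 * c1, 2 * c0] for c0, c1 in feats_a]
print(round(linear_cka(feats_a, feats_b), 6))  # 1.0
```

The invariance to channel permutation and scaling is the point here: two architectures can order and scale their mid-level filters differently and still encode the same texture-like information.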

Contributions

- identify the intermediate features of CNNs as common ground across different architectures, different data distributions and different tasks.

- introduce an approach that exploits such features to learn an effective, transferable perturbation generator.

- systematically investigate the effect of target architecture and target data distribution on the transferability of adversarial attacks.

Methodology

方法很简单,设计一个扰动生成器

G

\\mathcalG

G,使

L

f

e

a

t

(

x

i

,

x

i

^

)

=

∣

∣

f

l

(

x

i

)

−

f

l

(

x

i

^

)

∣

∣

F

2

\\mathcalL_feat(x_i,\\hatx_i)=\\mid\\mid f_\\mathcall(x_i)-f_\\mathcall(\\hatx_i)\\mid\\mid_F^2

Lfeat(xi,xi^)=∣∣fl(xi)−fl(xi^)∣∣F2

这里

x

i

x_i

xi是原图,

x

i

^

\\hatx_i

xi^是经过扰动生成器

G

\\mathcalG

G后的图,扰动生成器

G

\\mathcalG

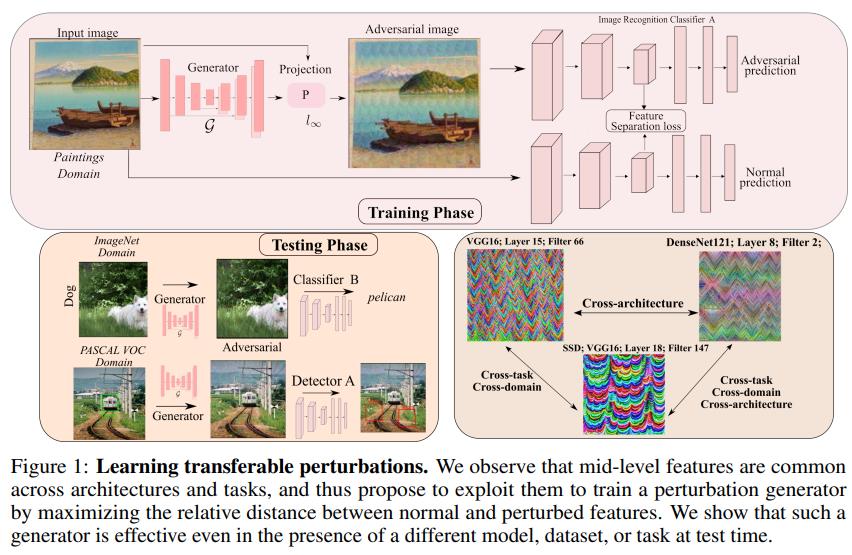

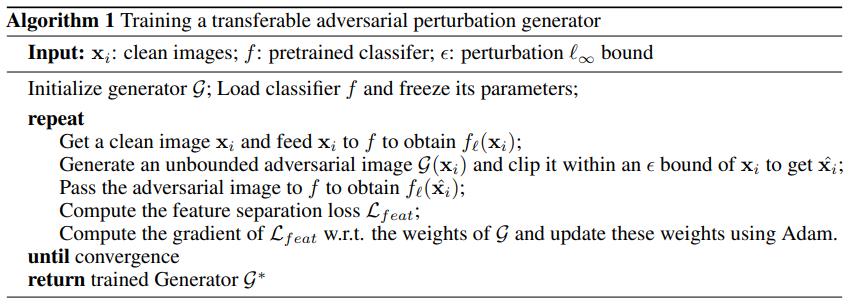

G的目标就是为了让正常样本和扰动样本在模型相应层的距离最大,算法如下:

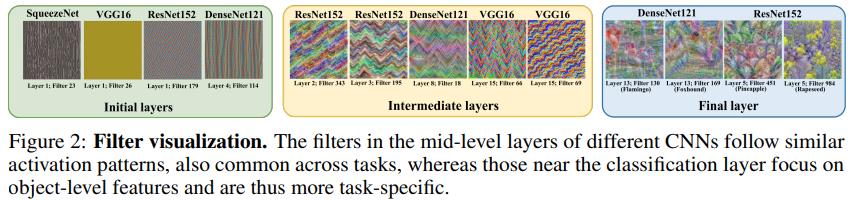
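The loss can be sketched without any framework. This is a minimal sketch under stated assumptions: feature maps are nested lists (channels × height × width) standing in for $f_l$, and the generator output is assumed to be projected into an $\ell_\infty$ ball of radius $\epsilon$ around the clean image and clipped to [0, 1] (a common budget for generative attacks; the paper's exact constraint may differ). The function names are hypothetical. In a real implementation these would be tensor operations, and the generator's weights would be updated to *maximize* `feat_loss`, for example by minimizing its negative.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width, standing in for f_l(.)


def feat_loss(f_clean: FeatureMap, f_adv: FeatureMap) -> float:
    """L_feat = ||f_l(x) - f_l(x_hat)||_F^2, summed over every channel and position."""
    total = 0.0
    for ch_c, ch_a in zip(f_clean, f_adv):
        for row_c, row_a in zip(ch_c, ch_a):
            for a, b in zip(row_c, row_a):
                total += (a - b) ** 2
    return total


def project_linf(x: List[float], x_gen: List[float], eps: float) -> List[float]:
    """Keep each generated pixel within eps of the clean pixel and inside [0, 1].

    Assumed perturbation budget (l_inf ball of radius eps), not confirmed by the post.
    """
    out = []
    for xi, gi in zip(x, x_gen):
        gi = min(max(gi, xi - eps), xi + eps)  # l_inf projection around the clean pixel
        out.append(min(max(gi, 0.0), 1.0))     # keep a valid image
    return out


# Toy feature maps with one 2x2 channel: squared differences 0 + 4 + 1 + 0.
print(feat_loss([[[1.0, 2.0], [0.0, 1.0]]], [[[1.0, 0.0], [1.0, 1.0]]]))  # 5.0
print(project_linf([0.5, 0.02, 0.98], [0.9, -0.3, 0.99], eps=0.1))
```

Because the loss only looks at layer $l$ and never at the classifier's output, nothing in it is tied to the source model's label space, which is what lets the same generator be reused across data and tasks.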

Figure 2 shows that the bottom filters, close to the input image, extract color and edge information. The top-level filters, close to the output layer (shown in the right block), focus more on the object representation and are thus more task-specific. By contrast, the mid-level filters learn more nuanced features, such as textures, and therefore tend to display similar patterns across architectures. This explains why the paper uses mid-level features rather than the features of the first or last few layers.

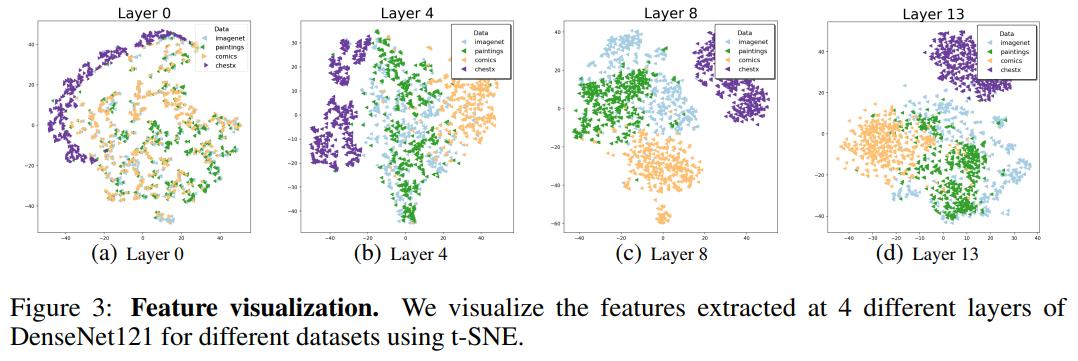

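Figure 3 shows that the ChestX dataset has a large gap to the other datasets, whereas the gap between the Comics or Paintings datasets and the ImageNet dataset is smaller. The Comics and Paintings datasets are therefore better suited as source data for attacks that must transfer to ImageNet.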

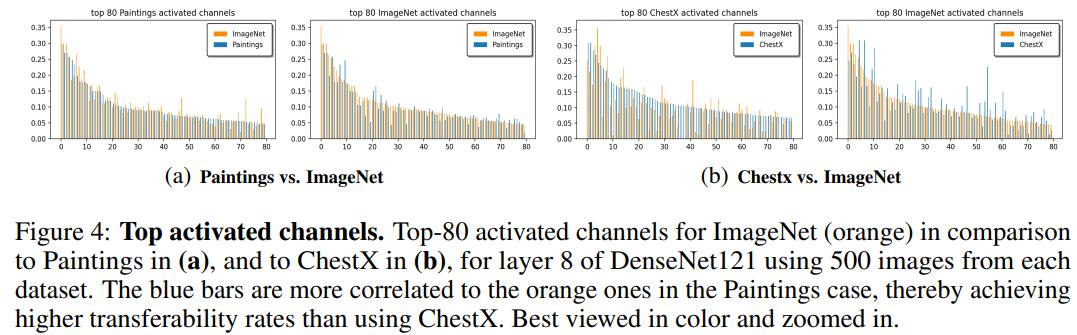

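Figure 4 further shows that the gap between the Paintings dataset and the ImageNet dataset is smaller than the gap between the ChestX dataset and ImageNet (their activations are more similar).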
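I don't know exactly which statistic Figures 3 and 4 are computed from, so the following is only an assumed, simplified way to quantify such a "dataset gap": feed each dataset through the same network, average a mid-level layer's activations per channel, and compare the resulting mean vectors. The function names and numbers are hypothetical, not values from the paper.

```python
import math
from typing import List, Sequence


def channel_means(acts: Sequence[Sequence[float]]) -> List[float]:
    """acts[i][c]: spatially averaged activation of channel c for image i."""
    n = len(acts)
    return [sum(a[c] for a in acts) / n for c in range(len(acts[0]))]


def activation_gap(acts_a: Sequence[Sequence[float]], acts_b: Sequence[Sequence[float]]) -> float:
    """Euclidean distance between the per-channel mean activations of two datasets."""
    ma, mb = channel_means(acts_a), channel_means(acts_b)
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(ma, mb)))


# Made-up activations (2 images x 2 channels per dataset).
target = [[1.0, 0.5], [0.8, 0.7]]
near_source = [[0.9, 0.6], [0.7, 0.5]]
far_source = [[0.1, 2.0], [0.3, 1.8]]
print(activation_gap(near_source, target) < activation_gap(far_source, target))  # True
```

Under this reading, a source dataset with a smaller gap to the target (like Paintings relative to ChestX) should be the better choice for training the generator.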

Comparing with previous works

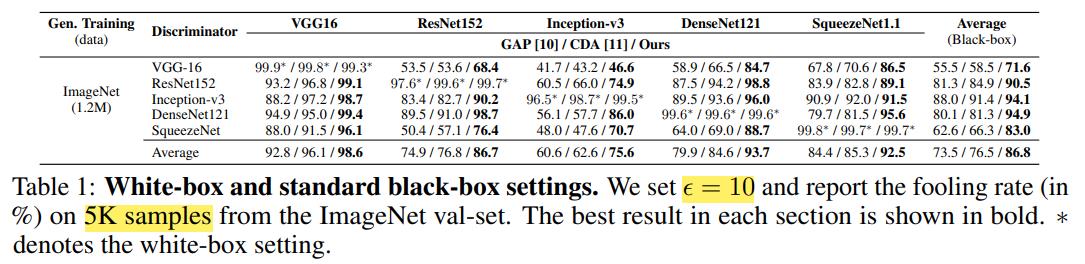

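Table 1 shows that the adversarial examples produced by the proposed method transfer much better than those of previous methods (though the methods feel somewhat dated to me).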

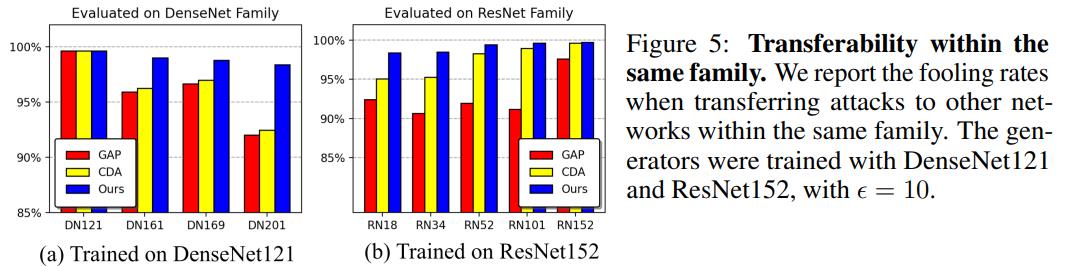

Figure 5 compares the transferability of adversarial examples across models within the same family.

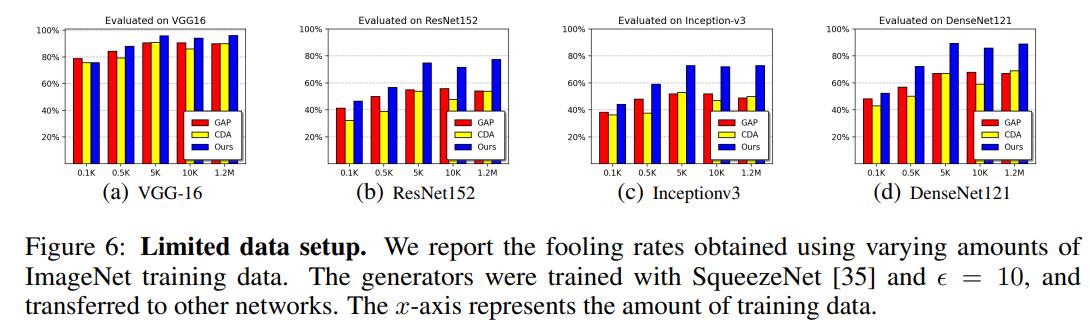

Figure 6 compares the transferability of adversarial examples for models trained with different amounts of training data.

Transferability to Unknown Target Data

Standard black-box attacks assume that the attacker has access both to the target data itself and to a substitute model, from a different family of architectures, trained on that data. In strict black-box attacks, the attacker also uses a substitute model trained on the target data, but has no access to the target data itself.
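The difference between the two settings comes down to what the attacker is allowed to use. A small sketch encoding this as data; the class, field and function names are hypothetical, and the dataset names only echo the Paintings-to-ImageNet example from the list of transfer settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatModel:
    name: str
    substitute_trained_on_target_data: bool  # attacker owns such a surrogate model
    has_target_data: bool                    # attacker can read the target images


STANDARD_BLACK_BOX = ThreatModel("standard black-box", True, True)
STRICT_BLACK_BOX = ThreatModel("strict black-box", True, False)


def generator_training_set(tm: ThreatModel, target_data: str, other_data: str) -> str:
    """Pick the dataset the perturbation generator can be trained on."""
    return target_data if tm.has_target_data else other_data


print(generator_training_set(STANDARD_BLACK_BOX, "ImageNet", "Paintings"))  # ImageNet
print(generator_training_set(STRICT_BLACK_BOX, "ImageNet", "Paintings"))    # Paintings
```

In the strict setting the substitute model is still available, so the generator can attack that model's mid-level features while being fed images from a different dataset.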

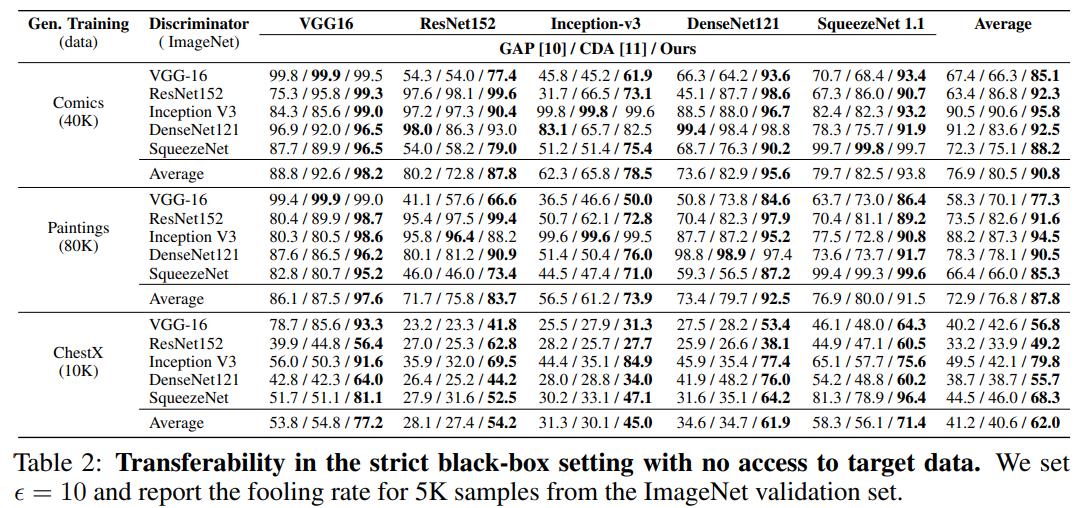

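Table 2 shows the transferability of the adversarial examples when the real target dataset is unavailable and other datasets are used instead.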

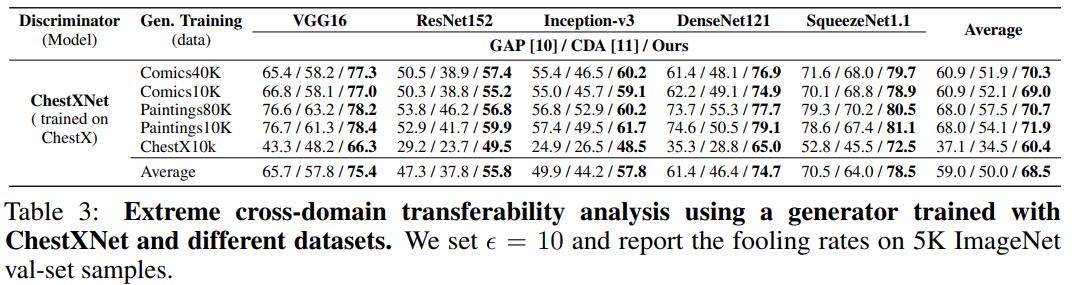

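Table 3 shows, for the case where the real dataset is unavailable, the performance on different models and other datasets when transferring from the ChestXNet dataset.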

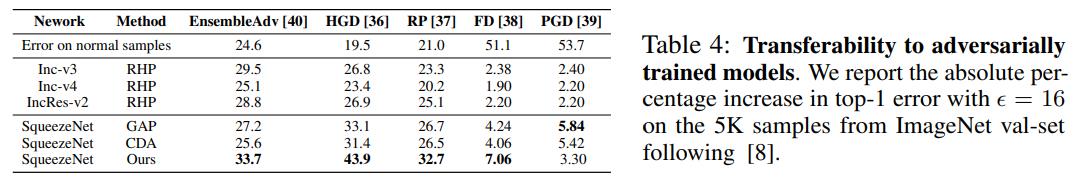

Table 4 shows the transfer success rates of the adversarial examples against adversarially defended models.

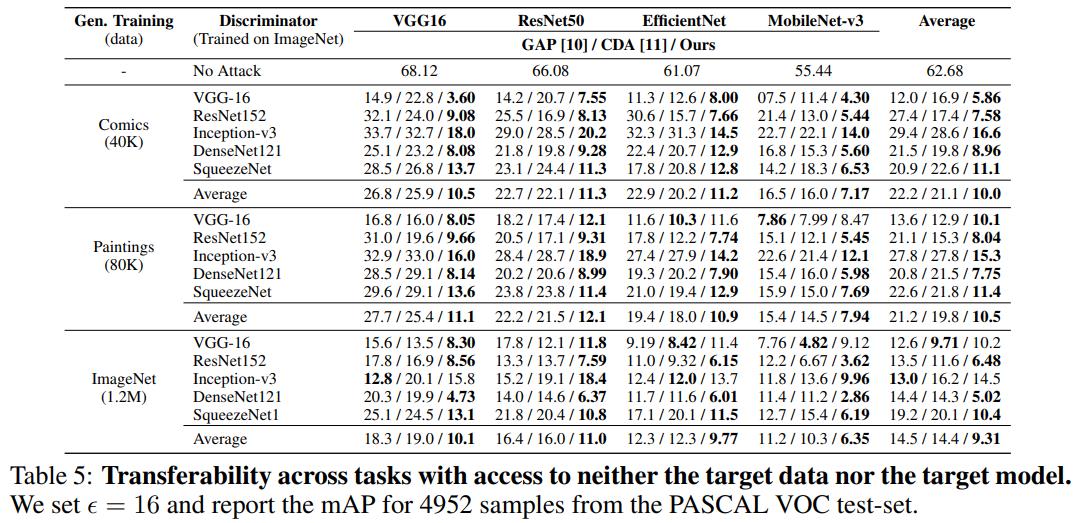

Table 5 shows the cross-task transfer success rates on different datasets and models.

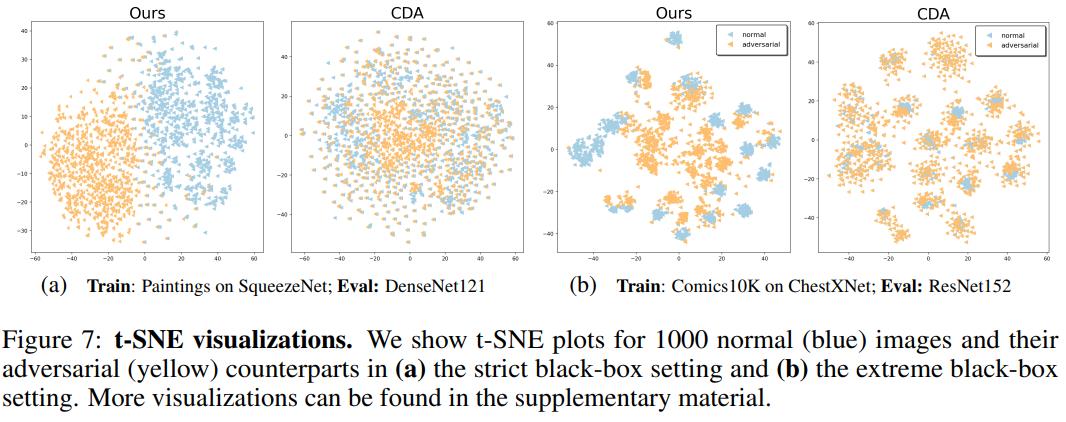

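Figure 7 shows that the proposed method separates adversarial examples from clean samples fairly clearly (which is expected, since its loss explicitly maximizes the distance between clean and adversarial samples).

Figure 8 shows that the perturbed images generated by the proposed method are much more effective than those of the baseline.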

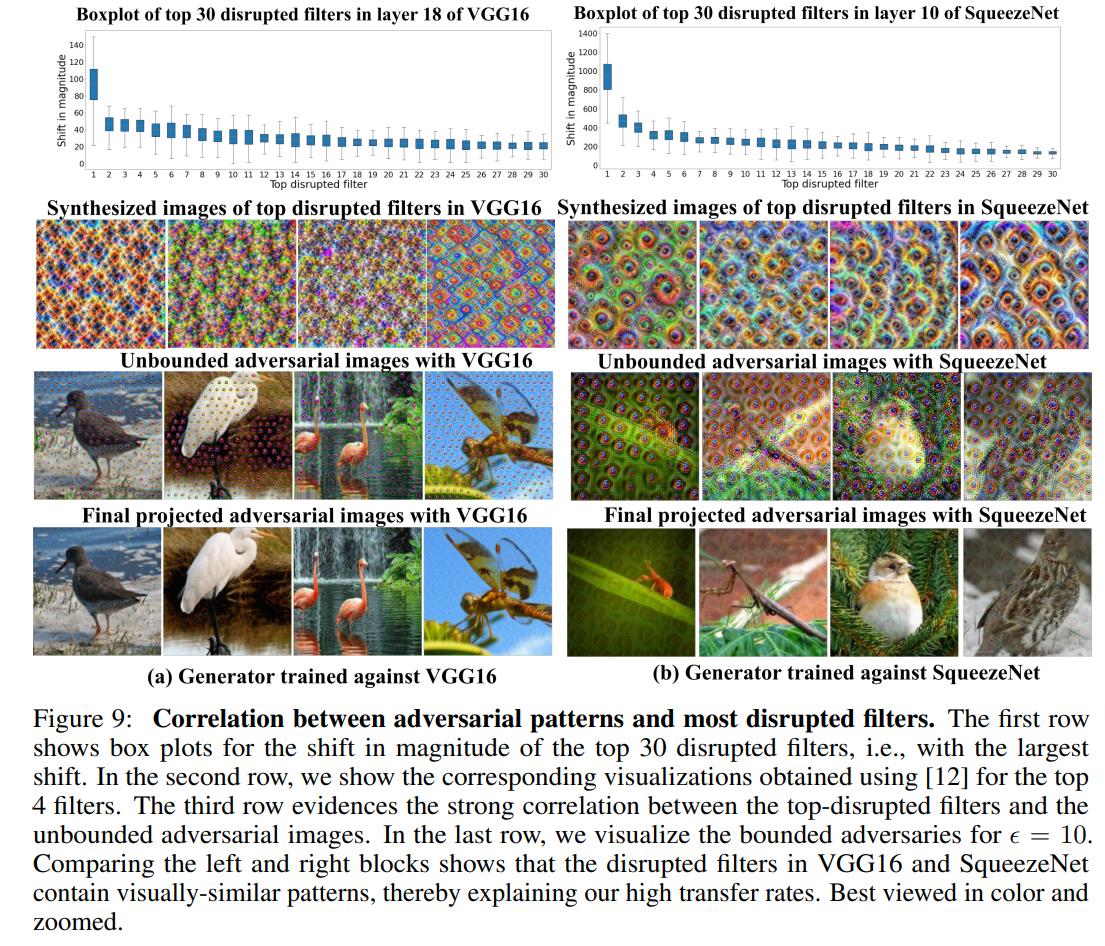

As can also be seen in Figure 9, the perturbations follow repeating patterns corresponding to the patterns of the most disrupted mid-level filters. Our filter visualizations for multiple networks reveal that the bottom layers learn such horizontal and vertical stripes. While we tried training the generator to perturb such layers, we observed that a larger $\epsilon$ strength is required in this case. Overall, our results evidence that attacking mid-level filters that have strong correlations across architectures consistently improves the transfer rates.

Personal Summary

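Although I have barely read any papers on adversarial transferability and don't know the current state of the field, from an outsider's point of view this paper is very clearly argued and contains a very large number of experiments, so it is well worth studying carefully. I hope the authors release all of their code!!! Also, since I am a newcomer to adversarial transferability, this post may contain misunderstandings; I hope readers will bear with me.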