Big Data: Flume Data Flow Monitoring

Posted by 赵广陆


1 Installing and Deploying Ganglia

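Ganglia consists of three components: gmond, gmetad, and gweb. gmond (Ganglia Monitoring Daemon) is a lightweight service installed on every node from which metrics are collected. With gmond you can easily collect many system metrics, such as CPU, memory, disk, network, and active-process data. gmetad (Ganglia Meta Daemon) is the service that aggregates all of this information and stores it on disk in RRD format. gweb (Ganglia Web) is Ganglia's visualization tool: a PHP front end that displays the data stored by gmetad in a browser, presenting the metrics collected from the running cluster as charts.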

1.1 Installing Ganglia

(1) Deployment plan

hadoop102: web gmetad gmond

hadoop103: gmond

hadoop104: gmond

(2) Install epel-release on hadoop102, hadoop103, and hadoop104

[atguigu@hadoop102 flume]$ sudo yum -y install epel-release

(3) On hadoop102, install gmetad, the web front end, and gmond

[atguigu@hadoop102 flume]$ sudo yum -y install ganglia-gmetad

[atguigu@hadoop102 flume]$ sudo yum -y install ganglia-web

[atguigu@hadoop102 flume]$ sudo yum -y install ganglia-gmond

(4) On hadoop103 and hadoop104, install gmond (run the same command on each host)

[atguigu@hadoop102 flume]$ sudo yum -y install ganglia-gmond

2) On hadoop102, edit the configuration file /etc/httpd/conf.d/ganglia.conf

[atguigu@hadoop102 flume]$ sudo vim /etc/httpd/conf.d/ganglia.conf

Modify it as follows (the change is the Require ip line, which replaces Require local):

# Ganglia monitoring system php web frontend

#

Alias /ganglia /usr/share/ganglia

<Location /ganglia>

# Require local

# To access Ganglia from a Windows machine, set the IP address of that Windows host as seen from Linux

Require ip 192.168.9.1

# Require ip 10.1.2.3

# Require host example.org

</Location>

5) On hadoop102, edit the configuration file /etc/ganglia/gmetad.conf

[atguigu@hadoop102 flume]$ sudo vim /etc/ganglia/gmetad.conf

Change the data_source line to:

data_source "my cluster" hadoop102

6) On hadoop102, hadoop103, and hadoop104, edit the configuration file /etc/ganglia/gmond.conf

[atguigu@hadoop102 flume]$ sudo vim /etc/ganglia/gmond.conf

Change the following sections to:

cluster {

name = "my cluster"

owner = "unspecified"

latlong = "unspecified"

url = "unspecified"

}

udp_send_channel {

#bind_hostname = yes # Highly recommended, soon to be default.

# This option tells gmond to use a source address

# that resolves to the machine's hostname. Without

# this, the metrics may appear to come from any

# interface and the DNS names associated with

# those IPs will be used to create the RRDs.

# mcast_join = 239.2.11.71

# Send data to hadoop102

host = hadoop102

port = 8649

ttl = 1

}

udp_recv_channel {

# mcast_join = 239.2.11.71

port = 8649

# Accept data from any address

bind = 0.0.0.0

retry_bind = true

# Size of the UDP buffer. If you are handling lots of metrics you really

# should bump it up to e.g. 10MB or even higher.

# buffer = 10485760

}
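Because the same gmond.conf change has to be made on three hosts, a quick check that each file really points at the collector can save debugging time later. The following is a minimal sketch only; the function name gmond_sends_to is hypothetical, and it simply looks for an uncommented host line in the file it is given.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ganglia): succeed if the given gmond.conf
# contains an uncommented "host = <name>" line for the expected collector.
# Usage: gmond_sends_to <conf-file> <host>
gmond_sends_to() {
  local conf="$1" host="$2"
  # Lines starting with '#' are skipped because "host" must be the first
  # non-blank token on the line.
  grep -Eq "^[[:space:]]*host[[:space:]]*=[[:space:]]*${host}[[:space:]]*\$" "$conf"
}

# Example, run on each node after editing the file:
# gmond_sends_to /etc/ganglia/gmond.conf hadoop102 && echo "ok" || echo "host not set"
```

The check is deliberately loose: it does not verify that the line sits inside udp_send_channel, so treat a success as a hint rather than proof.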

7) On hadoop102, edit the configuration file /etc/selinux/config

[atguigu@hadoop102 flume]$ sudo vim /etc/selinux/config

Change it to:

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

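Note: the change to the SELinux configuration file takes effect only after a reboot. If you do not want to reboot now, you can switch SELinux to permissive mode for the current session: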

[atguigu@hadoop102 flume]$ sudo setenforce 0

8) Start Ganglia

(1) On hadoop102, hadoop103, and hadoop104, start gmond

[atguigu@hadoop102 flume]$ sudo systemctl start gmond

(2) On hadoop102, start httpd and gmetad

[atguigu@hadoop102 flume]$ sudo systemctl start httpd

[atguigu@hadoop102 flume]$ sudo systemctl start gmetad

9) Open the Ganglia page in a browser

http://hadoop102/ganglia

Note: if a permission-denied error still appears after completing the steps above, change the permissions of the /var/lib/ganglia directory:

[atguigu@hadoop102 flume]$ sudo chmod -R 777 /var/lib/ganglia
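If the web page loads but some nodes are missing, one way to narrow the problem down is to look at what gmond on hadoop102 has actually received. The sketch below assumes that gmond's TCP accept channel is still enabled on port 8649 (it is in the stock gmond.conf, but this article does not show that section), in which case connecting to the port returns an XML dump of the cluster. The function name list_ganglia_hosts is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read gmond's XML dump on stdin and print the name of
# every reporting host, one per line.
list_ganglia_hosts() {
  # Keep only the opening <HOST NAME="..."> fragments, then take the quoted value.
  grep -o '<HOST NAME="[^"]*"' | cut -d '"' -f 2
}

# Example (assumption: the TCP accept channel listens on hadoop102:8649):
# nc hadoop102 8649 | list_ganglia_hosts
```

With the plan used here, the output would be expected to list hadoop102, hadoop103, and hadoop104 once all three gmond daemons have reported in.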

2 Testing Monitoring with Flume

2.1 Start the Flume Job

[atguigu@hadoop102 flume]$ bin/flume-ng agent \
-c conf/ \
-n a1 \
-f job/flume-netcat-logger.conf \
-Dflume.root.logger=INFO,console \
-Dflume.monitoring.type=ganglia \
-Dflume.monitoring.hosts=hadoop102:8649

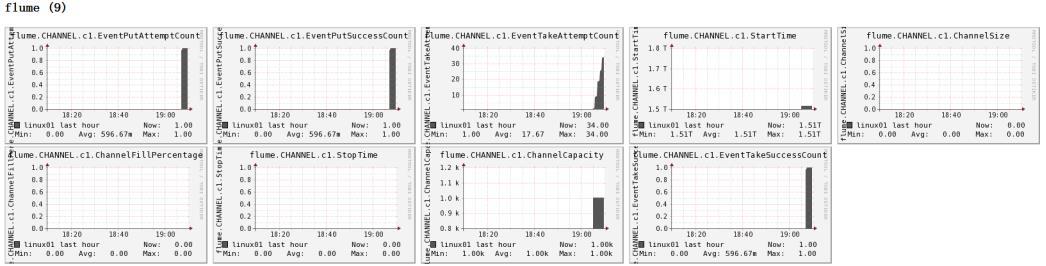

2.2 Send Data and Observe the Ganglia Monitoring Graphs

[atguigu@hadoop102 flume]$ nc localhost 44444
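Typing lines by hand produces only a few events, which can make the graphs hard to read. As a rough sketch, a small generator can feed a batch of numbered lines into the same nc command. The function name gen_events and the test-event-N line format are hypothetical; the only assumption about Flume is that the agent from 2.1 is listening on localhost:44444.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print N numbered lines on stdout. When piped into a
# netcat source, each line becomes one event.
# Usage: gen_events <count>
gen_events() {
  local count="$1"
  local i
  for ((i = 1; i <= count; i++)); do
    printf 'test-event-%d\n' "$i"
  done
}

# Example: send 100 events to the agent started in 2.1.
# gen_events 100 | nc localhost 44444
```

Depending on the nc variant installed, the connection may stay open after the last line is sent; pressing Ctrl+C closes it.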

