基于 Labelme 制作手部关键点数据集 并转 COCO 格式

Posted 炼丹狮

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了基于 Labelme 制作手部关键点数据集 并转 COCO 格式相关的知识,希望对你有一定的参考价值。

Labelme 制作手部21个关键点数据

因为导师的项目是手部姿态估计,经过一些技术预研,最后选定了 Open-MMLab 的 MMPose 作为基础框架来做底层架构。先说下Open-MMLab我认为的优点:

1. 代码优质:模块化做的非常好,易于扩展

2. 多研究方向支持:现在有十几个研究方向,包括MMCV(用于计算机视觉研究的基础)、MMDetection(目标检测工具箱)、MMDetection3D(3D目标检测)、MMSegmentation(语义分割工具箱)、MMClassification(分类工具箱)、MMPose(姿势估计工具箱)、MMAction2(动作理解工具箱)、MMFashion(视觉时尚分析工具箱)、MMEditing(图像和视频编辑工具箱)、MMFlow(光流工具箱)等等

3. 社区活跃:社区沟通一般是通QQ群或者微信群进行沟通,有任何问题都在社区得到相对及时的回答,而且针对一些业务思路,社区志愿者或者官方也会给出一些思路或者建议,这个非常不错。

4. 高性能:提供的现成SOTA算法和预训练模型都比官方的实现有显著的提升

5. 文档丰富:官方文档在不同研究方向上都有非常详细的中英文教程,新手可以按照教程迅速上手,赞一个

技术预研的时候看了包括百度的飞浆(PP)、旷视的天元(MegEngine),也都做得非常好,但是支持的研究方向就少了一些。

实验过程和一些注意事项如下文,希望帮助到有缘人,o( ̄︶ ̄)o

1:环境准备

1.1 基础环境

操作系统:Windows 10

Python:3.8

Anaconda:4.10.1 (这个版本不重要)

Anaconda环境安装,如果没有安装的请自行搜索安装下,或者参考我之前的安装教程(Windows10 安装 Anaconda + CUDA + CUDNN )

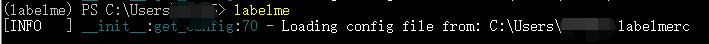

1.2 安装 Labelme

- 可以参考官方的安装,地址:https://github.com/wkentaro/labelme

1.2.1:创建anaconda虚拟环境

conda create -n labelme python=3.8

1.2.2:激活虚拟环境

conda activate labelme

1.2.3:安装labelme的依赖

conda install pillow

conda install pyqt

1.2.3:安装labelme

pip install labelme

1.2.4:运行

lableme

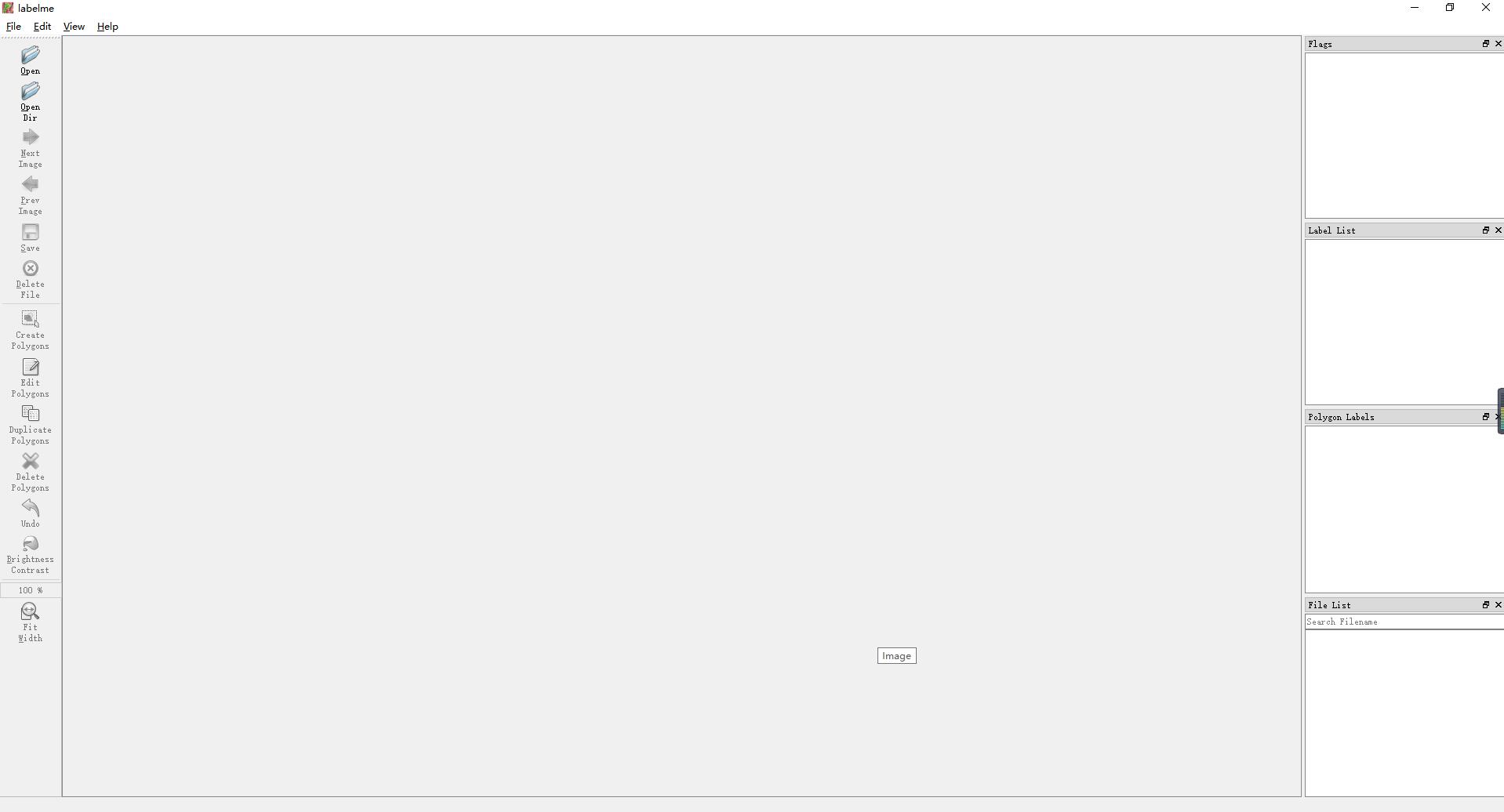

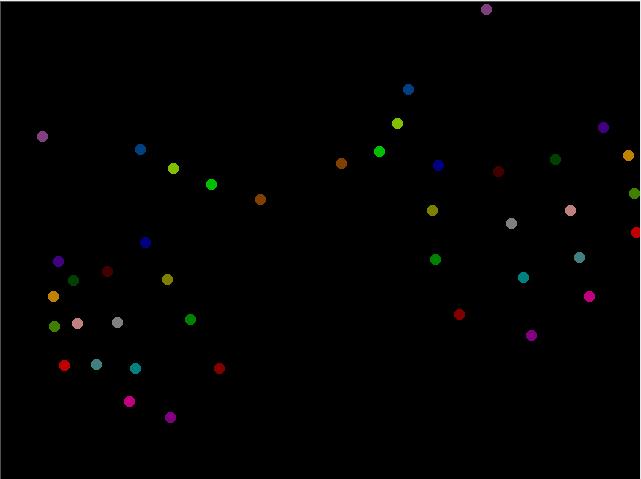

2. 标注图片

标注过程很简单,就是简单的对手部进行表框和对手部的21个关键点进行打点

打完点,大概是这个样子:

3. 标注转化

现在比较流行的标注是 COCO 和 VOC,因为 Open-MMLab 对 COCO 支持的比较好,就转成 COCO 格式的数据集了。

代码如下,有详细的注释。

import os

import sys

import glob

import json

import shutil

import argparse

import numpy as np

import PIL.Image

import os.path as osp

from tqdm import tqdm

from labelme import utils

from sklearn.model_selection import train_test_split

class Labelme2coco_keypoints():

def __init__(self, args):

"""

Lableme 关键点数据集转 COCO 数据集的构造函数:

Args

args:命令行输入的参数

- class_name 根类名字

"""

self.classname_to_id = args.class_name: 1

self.images = []

self.annotations = []

self.categories = []

self.ann_id = 0

self.img_id = 0

def save_coco_json(self, instance, save_path):

json.dump(instance, open(save_path, 'w', encoding='utf-8'), ensure_ascii=False, indent=1)

def read_jsonfile(self, path):

with open(path, "r", encoding='utf-8') as f:

return json.load(f)

def _get_box(self, points):

min_x = min_y = np.inf

max_x = max_y = 0

for x, y in points:

min_x = min(min_x, x)

min_y = min(min_y, y)

max_x = max(max_x, x)

max_y = max(max_y, y)

return [min_x, min_y, max_x - min_x, max_y - min_y]

def _get_keypoints(self, points, keypoints, num_keypoints):

"""

解析 labelme 的原始数据, 生成 coco 标注的 关键点对象

例如:

"keypoints": [

67.06149888292556, # x 的值

122.5043507571318, # y 的值

1, # 相当于 Z 值,如果是2D关键点 0:不可见 1:表示可见。

82.42582269256718,

109.95672933232304,

1,

...,

],

"""

if points[0] == 0 and points[1] == 0:

visable = 0

else:

visable = 1

num_keypoints += 1

keypoints.extend([points[0], points[1], visable])

return keypoints, num_keypoints

def _image(self, obj, path):

"""

解析 labelme 的 obj 对象,生成 coco 的 image 对象

生成包括:id,file_name,height,width 4个属性

示例:

"file_name": "training/rgb/00031426.jpg",

"height": 224,

"width": 224,

"id": 31426

"""

image =

img_x = utils.img_b64_to_arr(obj['imageData']) # 获得原始 labelme 标签的 imageData 属性,并通过 labelme 的工具方法转成 array

image['height'], image['width'] = img_x.shape[:-1] # 获得图片的宽高

# self.img_id = int(os.path.basename(path).split(".json")[0])

self.img_id = self.img_id + 1

image['id'] = self.img_id

image['file_name'] = os.path.basename(path).replace(".json", ".jpg")

return image

def _annotation(self, bboxes_list, keypoints_list, json_path):

"""

生成coco标注

Args:

bboxes_list: 矩形标注框

keypoints_list: 关键点

json_path:json文件路径

"""

if len(keypoints_list) != args.join_num * len(bboxes_list):

print('you loss keypoint(s) with file '.format(args.join_num * len(bboxes_list) - len(keypoints_list), json_path))

print('Please check !!!')

sys.exit()

i = 0

for object in bboxes_list:

annotation =

keypoints = []

num_keypoints = 0

label = object['label']

bbox = object['points']

annotation['id'] = self.ann_id

annotation['image_id'] = self.img_id

annotation['category_id'] = int(self.classname_to_id[label])

annotation['iscrowd'] = 0

annotation['area'] = 1.0

annotation['segmentation'] = [np.asarray(bbox).flatten().tolist()]

annotation['bbox'] = self._get_box(bbox)

for keypoint in keypoints_list[i * args.join_num: (i + 1) * args.join_num]:

point = keypoint['points']

annotation['keypoints'], num_keypoints = self._get_keypoints(point[0], keypoints, num_keypoints)

annotation['num_keypoints'] = num_keypoints

i += 1

self.ann_id += 1

self.annotations.append(annotation)

def _init_categories(self):

"""

初始化 COCO 的 标注类别

例如:

"categories": [

"supercategory": "hand",

"id": 1,

"name": "hand",

"keypoints": [

"wrist",

"thumb1",

"thumb2",

...,

],

"skeleton": [

]

]

"""

for name, id in self.classname_to_id.items():

category =

category['supercategory'] = name

category['id'] = id

category['name'] = name

# 21 个关键点数据

category['keypoint'] = [ "wrist",

"thumb1",

"thumb2",

"thumb3",

"thumb4",

"forefinger1",

"forefinger2",

"forefinger3",

"forefinger4",

"middle_finger1",

"middle_finger2",

"middle_finger3",

"middle_finger4",

"ring_finger1",

"ring_finger2",

"ring_finger3",

"ring_finger4",

"pinky_finger1",

"pinky_finger2",

"pinky_finger3",

"pinky_finger4"]

# category['keypoint'] = [str(i + 1) for i in range(args.join_num)]

self.categories.append(category)

def to_coco(self, json_path_list):

"""

Labelme 原始标签转换成 coco 数据集格式,生成的包括标签和图像

Args:

json_path_list:原始数据集的目录

"""

self._init_categories()

for json_path in tqdm(json_path_list):

obj = self.read_jsonfile(json_path) # 解析一个标注文件

self.images.append(self._image(obj, json_path)) # 解析图片

shapes = obj['shapes'] # 读取 labelme shape 标注

bboxes_list, keypoints_list = [], []

for shape in shapes:

if shape['shape_type'] == 'rectangle': # bboxs

bboxes_list.append(shape) # keypoints

elif shape['shape_type'] == 'point':

keypoints_list.append(shape)

self._annotation(bboxes_list, keypoints_list, json_path)

keypoints =

keypoints['info'] = 'description': 'Lableme Dataset', 'version': 1.0, 'year': 2021

keypoints['license'] = ['BUAA']

keypoints['images'] = self.images

keypoints['annotations'] = self.annotations

keypoints['categories'] = self.categories

return keypoints

def init_dir(base_path):

"""

初始化COCO数据集的文件夹结构;

coco - annotations #标注文件路径

- train #训练数据集

- val #验证数据集

Args:

base_path:数据集放置的根路径

"""

if not os.path.exists(os.path.join(base_path, "coco", "annotations")):

os.makedirs(os.path.join(base_path, "coco", "annotations"))

if not os.path.exists(os.path.join(base_path, "coco", "train")):

os.makedirs(os.path.join(base_path, "coco", "train"))

if not os.path.exists(os.path.join(base_path, "coco", "val")):

os.makedirs(os.path.join(base_path, "coco", "val"))

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument("--class_name", "--n", help="class name", type=str, required=True)

parser.add_argument("--input", "--i", help="json file path (labelme)", type=str, required=True)

parser.add_argument("--output", "--o", help="output file path (coco format)", type=str, required=True)

parser.add_argument("--join_num", "--j", help="number of join", type=int, required=True)

parser.add_argument("--ratio", "--r", help="train and test split ratio", type=float, default=0.12)

args = parser.parse_args()

labelme_path = args.input

saved_coco_path = args.output

init_dir(saved_coco_path) # 初始化COCO数据集的文件夹结构

json_list_path = glob.glob(labelme_path + "/*.json")

train_path, val_path = train_test_split(json_list_path, test_size=args.ratio)

print(' for training'.format(len(train_path)),

'\\n for testing'.format(len(val_path)))

print('Start transform please wait ...')

l2c_train = Labelme2coco_keypoints(args) # 构造数据集生成类

# 生成训练集

train_keypoints = l2c_train.to_coco(train_path)

l2c_train.save_coco_json(train_keypoints, os.path.join(saved_coco_path, "coco", "annotations", "keypoints_train.json"))

# 生成验证集

l2c_val = Labelme2coco_keypoints(args)

val_instance = l2c_val.to_coco(val_path)

l2c_val.save_coco_json(val_instance, os.path.join(saved_coco_path, "coco", "annotations", "keypoints_val.json"))

# 拷贝 labelme 的原始图片到训练集和验证集里面

for file in train_path:

shutil.copy(file.replace("json", "bmp"), os.path.join(saved_coco_path, "coco", "train"))

for file in val_path:

shutil.copy(file.replace("json", "bmp"), os.path.join(saved_coco_path, "coco", "val"))

代码写的有些凌乱,周末再优化下,O(∩_∩)O哈哈~

欢迎有问题的同学留言讨论,我也是刚入坑姿态估计,炼丹之路刚刚起步,加油 ~~~~

参考文献

[1]. labelme批量json_to_dataset转换

[2]. OpenMMLab的新篇章

[3]. Labelme使用教程

[4]. m5823779/labelme2coco-keypoints

以上是关于基于 Labelme 制作手部关键点数据集 并转 COCO 格式的主要内容,如果未能解决你的问题,请参考以下文章