High-Availability Web Load Balancing with keepalived + LVS



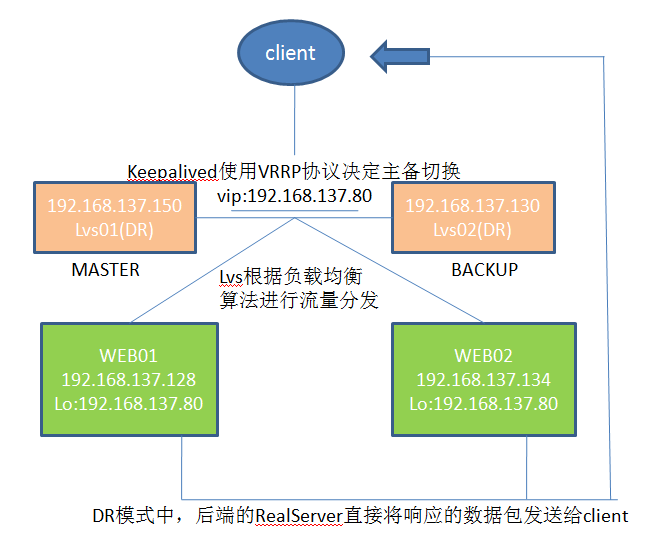

Data-flow architecture diagram:

I. Test environment

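| Hostname | IP | VIP | Role |
|---|---|---|---|
| lvs01 | 192.168.137.150 | 192.168.137.80 | LVS director, keepalived MASTER |
| lvs02 | 192.168.137.130 | | LVS director, keepalived BACKUP |
| web01 | 192.168.137.128 | -- | real server (web) |
| web02 | 192.168.137.134 | -- | real server (web) |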

II. Installing and configuring LVS and keepalived

1. Install ipvsadm and keepalived on both lvs01 and lvs02:

yum install ipvsadm keepalived -y

Installed:

ipvsadm.x86_64 0:1.27-7.el7 keepalived.x86_64 0:1.2.13-9.el7_3

2. On lvs01, edit the keepalived configuration file as follows. This makes lvs01 the MASTER node and sets the LVS forwarding mode to DR:

lvs01 ~]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

}

smtp_server 192.168.137.150

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER #MASTER

interface ens33

virtual_router_id 52

priority 100 #must be higher than the BACKUP's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.137.80 #VIP

}

}

virtual_server 192.168.137.80 80 {

delay_loop 6

lb_algo rr #round-robin scheduling

lb_kind DR #DR (direct routing) mode

#persistence_timeout 50

protocol TCP

real_server 192.168.137.128 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.137.134 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
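To make the virtual_server block easier to read, here is a sketch of roughly equivalent manual ipvsadm rules for the same virtual service:

- -A adds a virtual service and -t names a TCP service.
- -s rr selects round robin.
- -a adds a real server and -r names it.
- -g selects gatewaying (direct routing, i.e. DR) and -w sets the weight.

The helper name print_ipvs_rules is hypothetical, and the helper only prints the commands. keepalived creates these entries itself and adds health checking on top, so the commands should not be run by hand on a director where keepalived manages IPVS.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ipvsadm commands roughly equivalent to the
# virtual_server block above (DR mode, rr scheduler, weight 1).
# It only prints; keepalived installs these rules itself.
print_ipvs_rules() {
  local vip=$1 port=$2 rs
  shift 2
  # -A: add virtual service, -t: TCP service address, -s: scheduler
  echo "ipvsadm -A -t ${vip}:${port} -s rr"
  for rs in "$@"; do
    # -a: add real server, -r: real server address,
    # -g: gatewaying (direct routing), -w: weight
    echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g -w 1"
  done
}

print_ipvs_rules 192.168.137.80 80 192.168.137.128 192.168.137.134
```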

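3. On lvs02, edit the keepalived configuration file as follows. Only two VRRP settings differ from the master's file: state must be BACKUP, and priority must be lower than the MASTER's. The other changed lines are host-specific: interface is eth0 on lvs02, and smtp_server uses lvs02's own address.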

lvs02 ~]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

}

smtp_server 192.168.137.130

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP #BACKUP

interface eth0

virtual_router_id 52

priority 90 #must be lower than the MASTER's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.137.80 #VIP

}

}

virtual_server 192.168.137.80 80 {

delay_loop 6

lb_algo rr #round-robin scheduling

lb_kind DR #DR (direct routing) mode

#persistence_timeout 50

protocol TCP

real_server 192.168.137.128 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.137.134 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
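The two files differ in only a few lines (state, priority, interface and smtp_server in this setup). The backup file can therefore be generated from the master's instead of being edited by hand. This is a minimal sketch that assumes the exact values used in this article; make_backup_conf is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive lvs02's keepalived.conf from lvs01's by
# rewriting only the lines that differ in this article's two files.
# The patterns assume the exact values used above; adjust for other setups.
make_backup_conf() {
  local master_conf=$1
  sed -e 's/state MASTER/state BACKUP/' \
      -e 's/priority 100/priority 90/' \
      -e 's/interface ens33/interface eth0/' \
      -e 's/smtp_server 192\.168\.137\.150/smtp_server 192.168.137.130/' \
      "$master_conf"
}
```

For example, run make_backup_conf /etc/keepalived/keepalived.conf > keepalived.conf.lvs02 on lvs01. Then copy the result to /etc/keepalived/keepalived.conf on lvs02. The inline comments on the state and priority lines are copied unchanged, so they still need a manual touch-up.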

4. On both lvs01 and lvs02, enable keepalived at boot and start the service:

lvs01 ~]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

lvs01 ~]# systemctl start keepalived

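Note: check /var/log/messages for the corresponding output. lvs01 should report the transition to MASTER and the addition of the VIP. The last line below comes from the backup node, which enters the BACKUP state: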

systemd: Started LVS and VRRP High Availability Monitor.

Keepalived_vrrp[2416]: VRRP_Instance(VI_1) Transition to MASTER STATE

Keepalived_healthcheckers[2415]: Netlink reflector reports IP 192.168.137.80 added.

Jun 12 17:07:26 server2 Keepalived_vrrp[15654]: VRRP_Instance(VI_1) Entering BACKUP STATE
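Instead of reading the whole log, a small filter can pull out the most recent VRRP state. This sketch assumes the keepalived 1.2.x message wording shown above ("Transition to ... STATE" / "Entering ... STATE") and a single VRRP instance. Newer keepalived releases may format these lines differently. last_vrrp_state is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the most recent VRRP state (MASTER, BACKUP,
# FAULT, ...) found in keepalived log text read from stdin.
# Assumes the 1.2.x wording "Transition to X STATE" / "Entering X STATE".
last_vrrp_state() {
  grep -oE '(Entering|Transition to) [A-Z]+ STATE' \
    | tail -n 1 \
    | awk '{ print $(NF-1) }'   # the word just before "STATE"
}
```

For example, grep Keepalived_vrrp /var/log/messages | last_vrrp_state should print MASTER on lvs01 and BACKUP on lvs02 while both are healthy.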

5. Check whether the VIP has been bound to the network interface:

lvs01 ~]# ip a

inet 192.168.137.150/24 brd 192.168.137.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.137.80/32 scope global ens33

valid_lft forever preferred_lft forever

lvs02 ~]# ip a ##the VIP is not on lvs02

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a5:b4:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.130/24 brd 192.168.137.255 scope global eth0

inet6 fe80::20c:29ff:fea5:b485/64 scope link

valid_lft forever preferred_lft forever
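The same check can be scripted, which is handy during the failover test later. This is a minimal sketch; holds_vip is a hypothetical helper that reads ip a output on stdin. On the real servers the VIP is also bound to lo, so the check would succeed there too. On the directors it is most precise when limited to the public interface.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given VIP appears as an IPv4 address
# in `ip a` output read from stdin.
holds_vip() {
  local vip=$1
  # Match "inet <vip>/" so that e.g. 192.168.137.8 does not match 192.168.137.80
  grep -qF "inet ${vip}/"
}
```

For example, on lvs01: ip -4 addr show dev ens33 | holds_vip 192.168.137.80 && echo "VIP is on lvs01". On lvs02, use dev eth0 instead.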

6. Check the LVS status; it lists the VIP and the two real servers:

lvs01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.137.80:80 rr

-> 192.168.137.128:80 Route 1 0 0

-> 192.168.137.134:80 Route 1 0 0
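When this status is checked repeatedly, for example during the load-balancing and health-check tests, it can help to extract just the real servers of one virtual service. This sketch uses a hypothetical helper, list_real_servers. It assumes the column layout of ipvsadm -L -n shown above and prints each real server's address, ActiveConn and InActConn.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "address active inactive" for each real server
# of one virtual service, parsed from `ipvsadm -L -n` output on stdin.
list_real_servers() {
  local vs=$1   # virtual service as shown by ipvsadm, e.g. 192.168.137.80:80
  awk -v vs="$vs" '
    # A TCP or UDP line starts a virtual service section
    $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
    # Arrow lines inside the selected section are real servers;
    # the column-header line comes before any service, so in_vs is 0 there
    in_vs && $1 == "->" { print $2, $5, $6 }
  '
}
```

For example: ipvsadm -L -n | list_real_servers 192.168.137.80:80.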

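7. In DR mode the real servers send their responses directly to the client instead of back through LVS. This takes load off the director and improves efficiency. However, the packets LVS forwards to a real server still carry the VIP as their destination address. The VIP must therefore be bound to the real server's loopback interface lo; otherwise the real server decides the packet is not addressed to it and drops it.

A host normally answers ARP requests for the addresses it owns. A real server holding the VIP could therefore answer ARP queries for it and conflict with the director that owns the VIP, so ARP must be restricted on the real servers:

- arp_ignore=1 makes a host reply only to ARP requests whose target address is configured on the interface the request arrived on, so the VIP on lo is not advertised through the physical NIC.
- arp_announce=2 makes the host always use the best local address of the outgoing interface as the ARP source address.

The following script binds the VIP to lo and applies these ARP settings.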

web01 ~]# vim /etc/init.d/lvsrs.sh

#!/bin/bash

#chkconfig: 2345 80 90

vip=192.168.137.80

ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up

route add -host $vip lo:0

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p
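The script writes the ARP parameters to /proc directly, so they take effect at once but are lost on reboot unless the script runs again. The final sysctl -p only re-reads /etc/sysctl.conf, so it leaves these keys alone unless they are listed there. If the settings should also be persisted in /etc/sysctl.conf, something like the following sketch could be used. persist_arp_sysctl is a hypothetical helper, and the VIP on lo:0 still has to be configured by the script.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the DR-mode ARP settings used in lvsrs.sh to a
# sysctl config file (default /etc/sysctl.conf) if they are not already
# there, so that `sysctl -p` applies them. Existing lines for these keys are
# left untouched, even if they hold different values.
persist_arp_sysctl() {
  local conf=${1:-/etc/sysctl.conf} setting key
  for setting in \
      'net.ipv4.conf.lo.arp_ignore = 1' \
      'net.ipv4.conf.lo.arp_announce = 2' \
      'net.ipv4.conf.all.arp_ignore = 1' \
      'net.ipv4.conf.all.arp_announce = 2'; do
    key=${setting%% *}   # text before the first space, i.e. the sysctl key
    # Dots in the key act as regex wildcards here, which is harmless for these names
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf" 2>/dev/null; then
      echo "$setting" >> "$conf"
    fi
  done
}
```

For example, run persist_arp_sysctl && sysctl -p as root on each real server.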

Run the script on both web01 and web02 and configure it to run at boot. The IP addresses then show the VIP bound to the lo interface:

web01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.137.80/32 brd 192.168.137.80 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

web02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.137.80/32 brd 192.168.137.80 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever
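The ip a output confirms that the VIP is on lo, but it does not show the ARP settings. Below is a minimal sketch of a check for those four values. check_arp_params is a hypothetical helper. Its optional argument (the conf directory, defaulting to /proc/sys/net/ipv4/conf) exists only so it can be tried against a test directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify the ARP settings applied by lvsrs.sh.
# Prints each mismatch and returns non-zero if any value differs.
# $1 defaults to the real /proc path; another directory makes it testable.
check_arp_params() {
  local base=${1:-/proc/sys/net/ipv4/conf} rc=0 iface
  for iface in lo all; do
    if [ "$(cat "$base/$iface/arp_ignore" 2>/dev/null)" != 1 ]; then
      echo "$iface/arp_ignore is not 1"; rc=1
    fi
    if [ "$(cat "$base/$iface/arp_announce" 2>/dev/null)" != 2 ]; then
      echo "$iface/arp_announce is not 2"; rc=1
    fi
  done
  return "$rc"
}
```

Run check_arp_params on web01 and web02. No output and exit status 0 mean all four values match the script.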

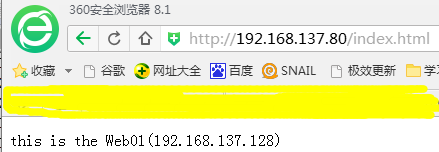

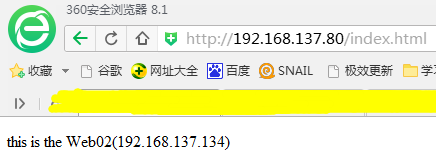

III. LVS load-balancing test

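3. Check the LVS status. Each real server has 2 inactive connections, which shows that the 1:1 weighted round robin is in effect. The connections are inactive because we only requested a static page. Once the response has been sent, the connection quickly leaves the ESTABLISHED state, and ipvsadm counts such connections under InActConn.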

lvs01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.137.80:80 rr

-> 192.168.137.128:80 Route 1 0 2

-> 192.168.137.134:80 Route 1 0 2
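The 2/2 split of inactive connections is what plain round robin with equal weights produces: each new connection goes to the next real server in turn. The following sketch only models that scheduling rule. rr_assign is a hypothetical helper; it ignores weights and LVS's internal state, so the real starting server may differ. To observe the actual behavior from a client, request http://192.168.137.80/ several times (for example with curl in a loop) and then check ipvsadm -L -n.

```bash
#!/usr/bin/env bash
# Hypothetical illustration of plain round robin (lb_algo rr with equal
# weights): the i-th new connection goes to server number i mod N.
rr_assign() {
  local n=$1 i
  shift
  local servers=("$@")
  for ((i = 0; i < n; i++)); do
    echo "${servers[i % ${#servers[@]}]}"
  done
}

# Count how many of 4 connections each real server would receive
rr_assign 4 192.168.137.128 192.168.137.134 | sort | uniq -c
```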

IV. Keepalived high-availability test

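1. Stop the keepalived service on lvs01 (systemctl stop keepalived) and check the node again. The VIP it held has been removed, and the two real servers are gone from its IPVS table: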

lvs01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:77:71:4e brd ff:ff:ff:ff:ff:ff

inet 192.168.137.150/24 brd 192.168.137.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::1565:761b:d9a2:42e4/64 scope link

valid_lft forever preferred_lft forever

lvs01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

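The VIP and the real-server entries now appear on lvs02, and the site is still reachable through the VIP, so the failover worked. When lvs01 recovers, the VIP moves back to it. The reason is not the state MASTER line in its config file, which only sets the initial state. It is lvs01's higher priority (100 vs. 90): keepalived preempts by default unless nopreempt is configured. On lvs02: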

lvs02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a5:b4:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.130/24 brd 192.168.137.255 scope global eth0

inet 192.168.137.80/32 scope global eth0

inet6 fe80::20c:29ff:fea5:b485/64 scope link

valid_lft forever preferred_lft forever

lvs02 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.137.80:80 rr

-> 192.168.137.128:80 Route 1 0 0

-> 192.168.137.134:80 Route 1 0 0
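The failback behavior described above follows from VRRP priorities rather than from the state keyword. The sketch below is a deliberately simplified model of election with preemption, written as a hypothetical vrrp_winner function: among the directors that are still alive, the one with the highest priority becomes MASTER. It ignores tie-breaking by IP address and the nopreempt option.

```bash
#!/usr/bin/env bash
# Simplified model of VRRP master election with preemption enabled (the
# keepalived default): among live nodes, the highest priority wins.
# Tie-breaking by IP address and nopreempt are deliberately ignored.
# Arguments: name:priority pairs of the nodes that are currently alive.
vrrp_winner() {
  local best_name="" best_prio=-1 node name prio
  for node in "$@"; do
    name=${node%%:*}
    prio=${node##*:}
    if (( prio > best_prio )); then
      best_name=$name
      best_prio=$prio
    fi
  done
  echo "$best_name"
}

vrrp_winner lvs01:100 lvs02:90   # both up: lvs01
vrrp_winner lvs02:90             # lvs01 stopped: lvs02
```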

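2. Stop the httpd service on web01 to simulate a web01 failure. Then check whether keepalived detects the failure promptly and removes web01 from LVS, so that no further requests are forwarded to it and users are not sent to the failed server: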

web01 ~]# /usr/local/apache24/bin/httpd -k stop

lvs02 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.137.80:80 rr

-> 192.168.137.134:80 Route 1 0 0


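When httpd on web01 is started again, keepalived's health checker detects that the real server has recovered and automatically adds it back to the pool.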
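TCP_CHECK simply tries to open a TCP connection to each real server. To reproduce that check by hand (for example from lvs02 while web01's httpd is stopped), a rough approximation in bash could look like the following. tcp_check is a hypothetical helper that relies on bash's /dev/tcp redirection and coreutils timeout. Its defaults mirror connect_timeout 3, nb_get_retry 3 and delay_before_retry 3 from the config above, but keepalived's exact retry and timing semantics may differ by version.

```bash
#!/usr/bin/env bash
# Rough manual approximation of the TCP_CHECK health check configured above:
# try to open a TCP connection with a per-attempt timeout and a few retries.
# Arguments: host port [connect_timeout=3] [retries=3] [delay_before_retry=3]
tcp_check() {
  local host=$1 port=$2 conn_timeout=${3:-3} retries=${4:-3} delay=${5:-3} attempt
  for ((attempt = 0; attempt <= retries; attempt++)); do
    # The child bash opens the connection and exits, which closes it again
    if timeout "$conn_timeout" bash -c ": < /dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    if (( attempt < retries )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, run tcp_check 192.168.137.128 80 && echo up || echo down. With httpd stopped on web01 it should print down once the retries are exhausted. With the defaults this takes roughly 9 seconds when the port refuses connections.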

This article is from the blog "Gavin"; please retain this source: http://guopeng7216.blog.51cto.com/9377374/1934678
