A Hands-On Guide to Building a k8s Cluster from Scratch (Super Detailed)

Posted by Baret-H


These notes follow the first lesson, K8s+Docker+KubeSphere+DevOps, of the Bilibili course "Cloud-Native Java Architect" (云原生Java架构师).

Super-detailed k8s cluster setup tutorial

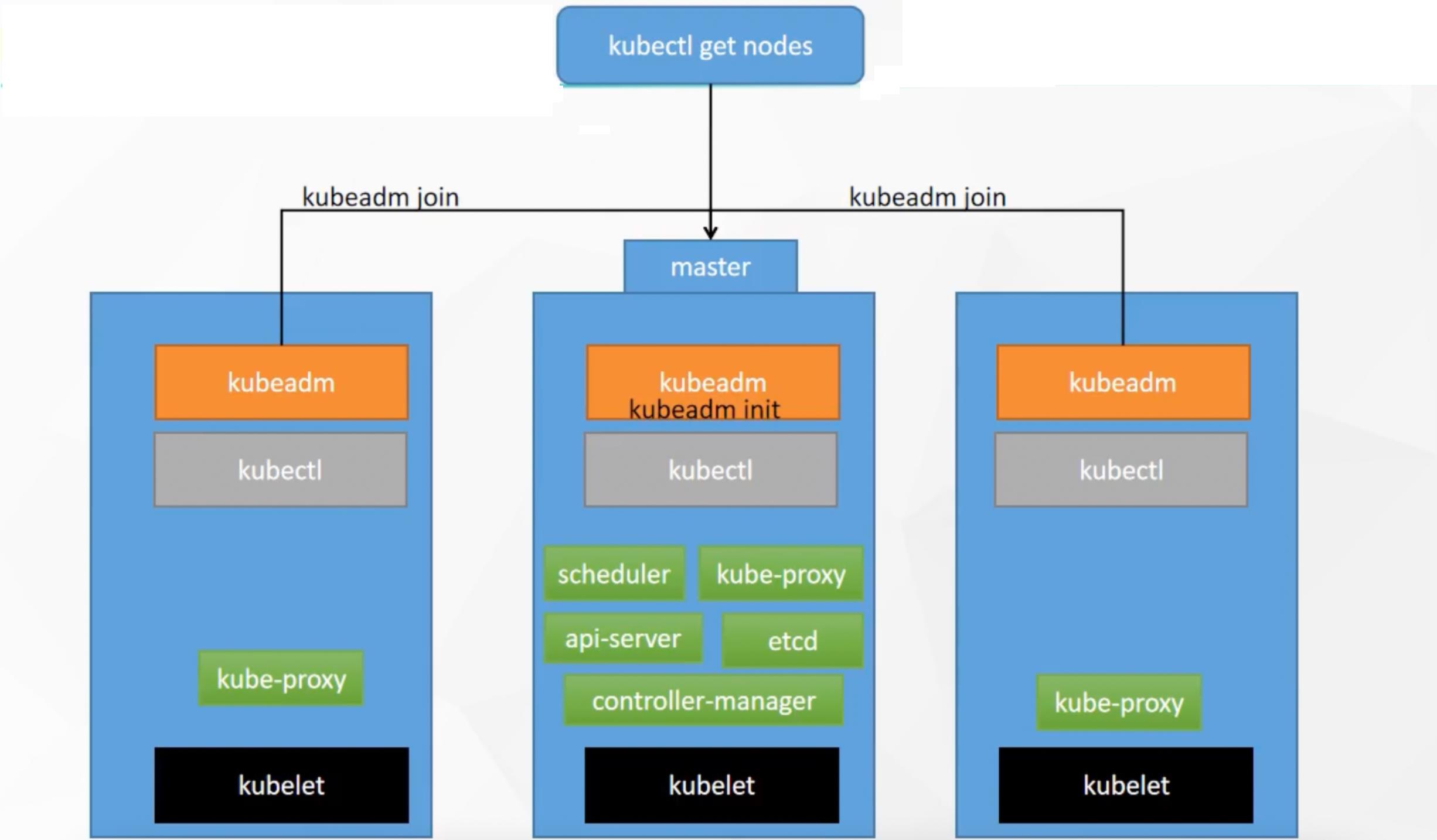

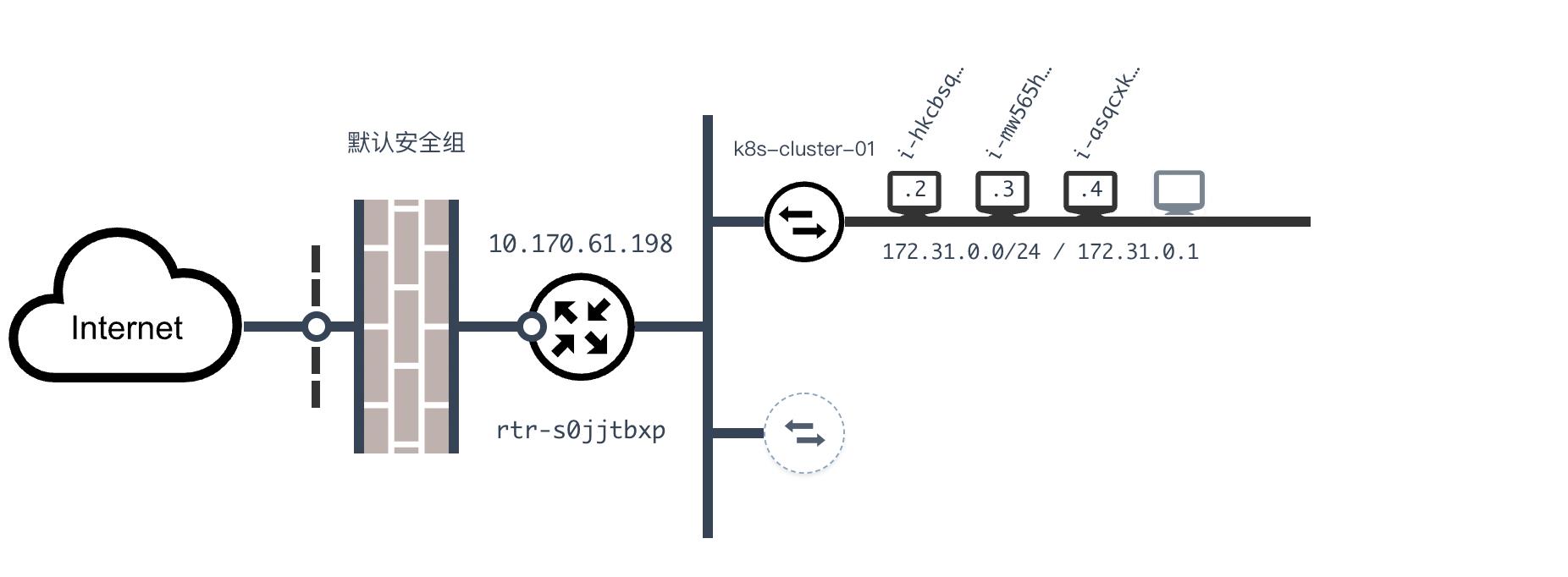

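The cluster architecture is shown in the figure: three machines in total, one master node and two worker nodes. Every machine must be able to reach the others over its private (internal) IP.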

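On every machine, first install Docker to provide the container runtime, then install the three core components: kubelet (the node agent), kubectl (the command-line tool), and kubeadm (the cluster bootstrapping tool).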

1. Basic environment setup

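The following experiment was done on QingCloud (青云QingCloud), billed as the first publicly listed hybrid-cloud company. Why QingCloud? First, QingCloud developed KubeSphere, a distributed, multi-tenant, multi-cluster, enterprise-grade open-source container platform built on Kubernetes, which we will learn to use later. Second, in practice QingCloud makes working with resources convenient: it offers easy-to-understand visual interfaces, and the console as a whole is simple and efficient.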

1. Create a private network (VPC)

VPC即

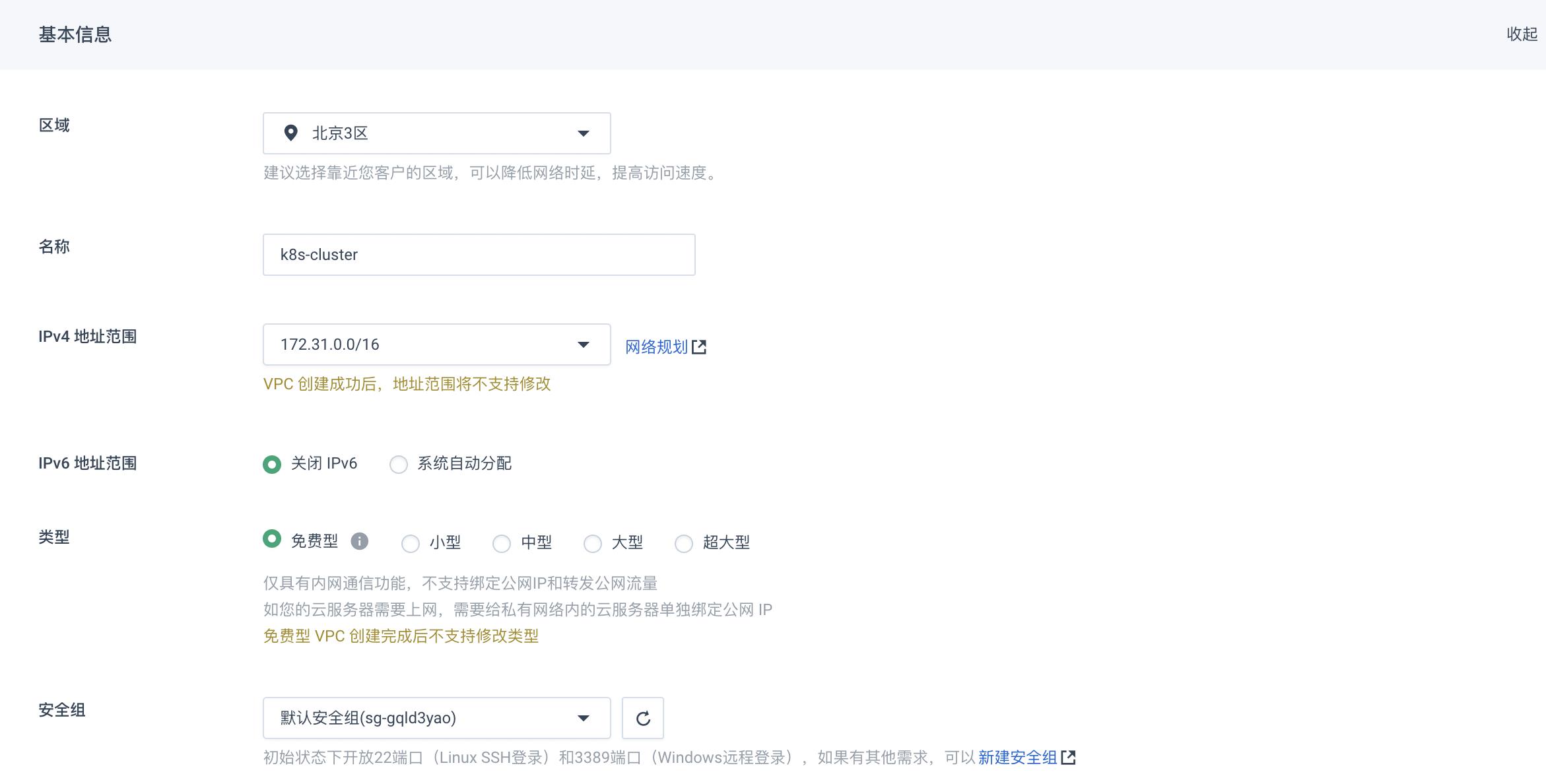

Virtual Private Cloud,私有网络,可以理解为一个网段,在这个网段内还可以选择创建子网段。不同的私有网络内实现完全的隔离,保证资源的封闭性,在公有云上构建出一个专属隔离的网络环境。在 VPC 网络内,您可以自定义 IP 地址范围,创建子网,并在子网内创建云服务器、数据库、大数据等各种云资源。

Next, create a VPC named k8s-cluster dedicated to the k8s cluster, and inside it create a private network named k8s-cluster-01.

The result after creation is shown in the figure.

2. Create server resources

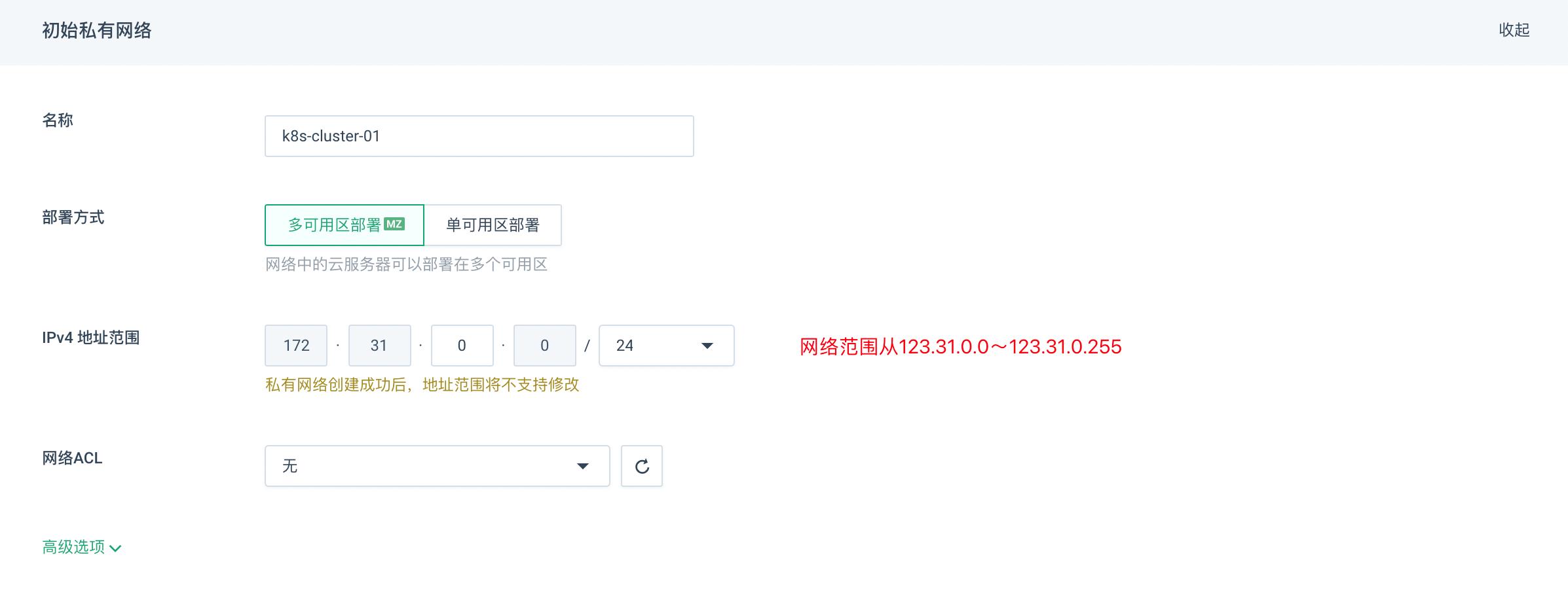

Prepare three CentOS servers. This tutorial uses QingCloud cloud servers as the example and creates three CentOS instances.

Note: installing a Kubernetes cluster requires every machine to have at least 2 GB of memory and at least 2 CPU cores.
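To check a server against these minimums before going further, a small helper can read the CPU count and total memory. This is a minimal sketch: the function name meets_k8s_minimums is hypothetical, and because MemTotal in /proc/meminfo excludes memory reserved by the kernel (so a "2 GB" instance usually reports somewhat less), the sketch assumes a slightly lower memory floor of 1700 MiB; adjust it to taste.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a server against the minimums stated above.
# With no arguments it inspects the current host; arguments allow testing.
#   $1 = number of CPUs, $2 = total memory in kB (as in /proc/meminfo)
meets_k8s_minimums() {
  local cpus="$1" mem_kb="$2"
  [ -n "$cpus" ] || cpus=$(nproc)
  # MemTotal in /proc/meminfo is reported in kB
  [ -n "$mem_kb" ] || mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  local min_cpus=2
  # Assumed floor of 1700 MiB: a "2 GB" instance reports slightly less than 2 GiB
  local min_mem_kb=$((1700 * 1024))

  if (( cpus < min_cpus )); then
    echo "FAIL: ${cpus} CPU(s), need at least ${min_cpus}"
    return 1
  fi
  if (( mem_kb < min_mem_kb )); then
    echo "FAIL: ${mem_kb} kB memory, need at least ${min_mem_kb} kB"
    return 1
  fi
  echo "OK: ${cpus} CPU(s), ${mem_kb} kB memory"
}
```

Run it with no arguments on each server, for example meets_k8s_minimums || echo "resize this instance".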

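Choose pay-as-you-go billing. Attach each server to the private network k8s-cluster-01 in the VPC we created, and allocate a new public IP for each server, billed by traffic.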

After creation, the three new servers appear in the VPC's private network.

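Make sure to enable intra-group trust in the security group, so that all machines on the same LAN can reach each other without being blocked by the firewall.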

3. Connect to the servers remotely

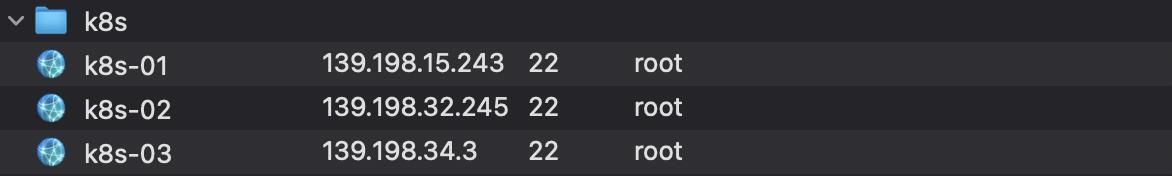

Use a remote-connection (SSH) tool to connect to all three servers; k8s-01 will serve as the cluster's master node.

Run the ip a command on each server to see its private IP, and make sure the three servers can ping one another over their private IPs.
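On hosts with several interfaces, ip a is verbose. Below is a minimal sketch of a filter that prints each non-loopback interface with its IPv4 address, assuming the one-line-per-address format of ip -o -4 addr show; private_ipv4s is a hypothetical name, and <peer-private-ip> in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "interface address" for every non-loopback IPv4
# address, reading the one-line-per-address output of `ip -o -4 addr show`.
private_ipv4s() {
  # Field 2 is the interface name, field 4 is address/prefix (e.g. 172.31.0.2/24)
  awk '$2 != "lo" { split($4, a, "/"); print $2, a[1] }'
}

# Usage on a real host:
#   ip -o -4 addr show | private_ipv4s
# Then, from each node, check every other node (placeholder address):
#   ping -c 2 <peer-private-ip>
```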

4. Install the Docker container environment

First, install Docker on every server:

# 1. Remove any older Docker packages
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine

# 2. Configure the yum repository (Aliyun mirror of docker-ce)
sudo yum install -y yum-utils
sudo yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 3. Install Docker
sudo yum install -y docker-ce docker-ce-cli containerd.io

# 4. Start Docker now and enable it at boot
sudo systemctl enable docker --now

# 5. Configure the Aliyun registry mirror and the systemd cgroup driver
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
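The "exec-opts" entry above switches Docker to the systemd cgroup driver, which kubeadm recommends (its preflight checks warn when Docker uses cgroupfs). Here is a minimal sketch for confirming that the restart picked it up; check_cgroup_driver is a hypothetical name, while docker info --format with the .CgroupDriver field is standard Docker CLI.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm Docker applied "native.cgroupdriver=systemd"
# from daemon.json. Pass a driver name to test; with no argument it asks Docker.
check_cgroup_driver() {
  local actual="$1"
  if [ -z "$actual" ]; then
    actual=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)
  fi
  if [ "$actual" = "systemd" ]; then
    echo "ok: Docker uses the systemd cgroup driver"
  else
    echo "mismatch: Docker cgroup driver is '${actual:-unknown}', expected 'systemd'"
    return 1
  fi
}

# Usage on each server after restarting Docker:
#   check_cgroup_driver
```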

5. Install kubeadm, kubectl, and kubelet

Environment requirements for installing a Kubernetes cluster:

1️⃣ Meet the basic requirements

Run the following commands on each of the three cloud hosts to satisfy the basic requirements for installing a Kubernetes cluster:

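# Set each machine's hostname (here k8s-master, k8s-node1, k8s-node2,
# for the servers named k8s-01, k8s-02, k8s-03 in the console)
sudo hostnamectl set-hostname <hostname>

# Put SELinux in permissive mode (effectively disabling it)
sudo setenforce 0                                                            # now, until reboot
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config  # persists across reboots

# Disable swap
sudo swapoff -a                              # now
sudo sed -ri 's/.*swap.*/#&/' /etc/fstab     # permanently, by commenting out swap entries

# Let iptables see bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
sudo modprobe br_netfilter                   # load the module now; the file above loads it at boot

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Apply the sysctl settings
sudo sysctl --system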
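Before moving on, it can be worth confirming that swap is really off and the two bridge sysctls are set. Below is a minimal sketch; the function names are hypothetical, and the file paths are parameters so the checks can be tried on sample files. On a real node, /proc/swaps and /proc/sys/net/bridge/bridge-nf-call-iptables are the kernel's live views of these settings.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to confirm the settings above took effect.

# Swap is off when /proc/swaps lists nothing beyond its header line.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}

# A boolean sysctl is enabled when its /proc/sys file contains 1.
sysctl_is_on() {
  local key_file="$1"
  [ -r "$key_file" ] && [ "$(cat "$key_file")" = "1" ]
}

# Usage on a node (the bridge keys only exist once br_netfilter is loaded):
#   swap_is_off && echo "swap off"
#   sysctl_is_on /proc/sys/net/bridge/bridge-nf-call-iptables && echo "bridged IPv4 traffic visible to iptables"
#   sysctl_is_on /proc/sys/net/bridge/bridge-nf-call-ip6tables && echo "bridged IPv6 traffic visible to ip6tables"
```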

2️⃣ Install kubelet, kubeadm, and kubectl

Run the following commands on each of the three cloud hosts to install kubelet, kubeadm, and kubectl:

# Configure the Kubernetes yum repository (Aliyun mirror)
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Install kubelet, kubeadm, and kubectl, pinned to 1.20.9
sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9

# Start kubelet now and enable it at boot
sudo systemctl enable --now kubelet

# On every machine, map the master hostname (replace 172.31.0.4 with your master node's private IP)
echo "172.31.0.4 k8s-master" >> /etc/hosts
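At this point kubelet will keep restarting every few seconds. That is expected: it waits for kubeadm init or kubeadm join to give it a configuration. What can be checked now is that all three tools landed on the pinned version. Below is a minimal sketch; same_version is a hypothetical helper that extracts the first vX.Y.Z from a tool's version output and compares it with the expected version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the first vX.Y.Z found in a tool's version
# output with the version pinned in the yum install above.
same_version() {
  local expected="$1" output="$2" found
  found=$(printf '%s\n' "$output" | grep -oE 'v?[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)
  found="${found#v}"
  if [ "$found" = "${expected#v}" ]; then
    echo "ok ${found}"
  else
    echo "expected ${expected#v}, found ${found:-nothing}"
    return 1
  fi
}

# Usage on each node:
#   same_version 1.20.9 "$(kubeadm version -o short)"
#   same_version 1.20.9 "$(kubelet --version)"
#   same_version 1.20.9 "$(kubectl version --client --short)"
```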

2. Bootstrap the cluster with kubeadm

1. Download the images k8s needs

Run the following on all three cloud servers. It writes a shell script and then runs it to pull the images the k8s cluster needs.

# Write the shell script
sudo tee ./images.sh <<-'EOF'
#!/bin/bash
images=(
  kube-apiserver:v1.20.9
  kube-proxy:v1.20.9
  kube-controller-manager:v1.20.9
  kube-scheduler:v1.20.9
  coredns:1.7.0
  etcd:3.4.13-0
  pause:3.2
)
for imageName in "${images[@]}" ; do
  docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
done
EOF

# Make the script executable and run it
chmod +x ./images.sh && ./images.sh
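To see exactly what images.sh will pull without pulling anything, the list can be printed as fully qualified image names. Below is a minimal sketch that copies the registry path and tags from images.sh; list_k8s_images is a hypothetical name. kubeadm config images list, shown in the comments, is a standard kubeadm subcommand that reports the images kubeadm itself expects, so the two lists can be compared.

```shell
#!/usr/bin/env bash
# Hypothetical dry run: print the fully qualified names images.sh pulls.
# Registry path and tags are copied from images.sh above.
list_k8s_images() {
  local registry="registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images"
  local images=(
    kube-apiserver:v1.20.9
    kube-proxy:v1.20.9
    kube-controller-manager:v1.20.9
    kube-scheduler:v1.20.9
    coredns:1.7.0
    etcd:3.4.13-0
    pause:3.2
  )
  local name
  for name in "${images[@]}"; do
    echo "${registry}/${name}"
  done
}

# Compare with what kubeadm expects for this version and repository:
#   kubeadm config images list --kubernetes-version v1.20.9 \
#     --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images
# After running images.sh, confirm the images are present locally:
#   docker images | grep lfy_k8s_images
```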

2. Add a hostname mapping for the master node

Run the following on all three machines to add the master hostname mapping. Replace the IP with the private IP of your own master node.

# All machines: map cluster-endpoint to the master's private IP (change it to yours)
echo "172.31.0.2 cluster-endpoint" >> /etc/hosts

Afterwards, ping cluster-endpoint from any machine to test it; if the ping succeeds, the mapping works.

# Test the mapping by pinging the hostname (3 packets, then stop)
ping -c 3 cluster-endpoint
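Appending with >> adds a duplicate line every time this step is repeated. Below is a minimal sketch of an idempotent alternative, assuming a standard hosts-file layout ("IP name [aliases...]"); add_host_entry is a hypothetical name and must run as root when it targets /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" to a hosts file only if NAME is not
# already mapped, so re-running the setup does not append duplicates.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  # Look for NAME as a whole hostname field on any non-comment line
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$hosts_file"; then
    echo "${name} already mapped in ${hosts_file}"
  else
    echo "${ip} ${name}" >> "$hosts_file"
    echo "added ${ip} ${name}"
  fi
}

# Usage (as root, with your master's private IP):
#   add_host_entry 172.31.0.2 cluster-endpoint
```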

3. Initialize the k8s master node

On the host that will become the master node (here k8s-01), run the following command to initialize the master with kubeadm.

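Note: change --apiserver-advertise-address to your own host's private IP. The node network, --service-cidr, and --pod-network-cidr ranges must not overlap.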

# Initialize the master node (run on the master host only)
kubeadm init \
  --apiserver-advertise-address=172.31.0.2 \
  --control-plane-endpoint=cluster-endpoint \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.168.0.0/16
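The three ranges involved here are the VPC subnet the nodes live in, the Service range (10.96.0.0/16), and the Pod range (192.168.0.0/16). Below is a minimal sketch of an overlap check in plain bash; the function names are hypothetical, and 172.31.0.0/24 in the usage comment is an assumed VPC subnet inferred from the 172.31.0.x addresses in this tutorial. Two CIDR blocks are either nested or disjoint, so they overlap exactly when they agree on the bits of the shorter prefix.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: decide whether two IPv4 CIDR ranges overlap.

# Convert dotted-quad IPv4 to a 32-bit integer
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds (returns 0) if the two CIDRs share at least one address
cidrs_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  # Compare only the bits covered by the shorter (wider) prefix
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$net1") & mask ) == ( $(ip_to_int "$net2") & mask ) ))
}

# Usage with this tutorial's ranges (172.31.0.0/24 is an assumed VPC subnet):
#   cidrs_overlap 10.96.0.0/16 192.168.0.0/16 && echo "service/pod conflict"
#   cidrs_overlap 192.168.0.0/16 172.31.0.0/24 && echo "pod/node conflict"
```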

When the master initializes successfully, kubeadm prints output like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join cluster-endpoint:6443 --token ut0k7e.j286ljqnnaz8v2dp \
    --discovery-token-ca-cert-hash sha256:71dd29dbcc8438caf523df03c6623bac89df35e958cb0adca0f9d400abe8ca7b \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token ut0k7e.j286ljqnnaz8v2dp \
    --discovery-token-ca-cert-hash sha256:71dd29dbcc8438caf523df03c6623bac89df35e958cb0adca0f9d400abe8ca7b

The output includes further instructions:

1️⃣ Set up .kube/config

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

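2️⃣ Install the network plugin (Calico)

# Download the Calico manifest
# (this unversioned URL serves the latest Calico release, which may no longer support
#  Kubernetes 1.20; if applying it fails, use a Calico release whose docs list 1.20 as supported)
curl https://docs.projectcalico.org/manifests/calico.yaml -O

# Apply the Calico manifest
kubectl apply -f calico.yaml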

4. Add worker nodes to the cluster

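On the other two cloud hosts, k8s-02 and k8s-03, run the worker join command that kubeadm printed at the end of the master initialization, so that they join the k8s-master cluster. Use the token and hash from your own output; the values below come from a different run than the log above.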

kubeadm join cluster-endpoint:6443 --token x5g4uy.wpjjdbgra92s25pp \
  --discovery-token-ca-cert-hash sha256:6255797916eaee52bf9dda9429db616fcd828436708345a308f4b917d3457a22

# Note: the token expires after 24 hours; after that, print a fresh join command with:
kubeadm token create --print-join-command
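kubeadm init prints the join commands only once, so it can help to save its output (for example by piping kubeadm init through tee kubeadm-init.log) and extract the worker command later. Below is a minimal sketch, assuming the log layout shown above, where the worker command follows the line "Then you can join any number of worker nodes"; worker_join_cmd and the log file name are hypothetical. Once the token has expired, kubeadm token create --print-join-command is still the way to get a valid one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the worker "kubeadm join" command, including
# its backslash-continued lines, from saved `kubeadm init` output.
worker_join_cmd() {
  local log_file="$1"
  awk '
    /join any number of worker nodes/ { in_worker = 1; next }
    in_worker && /kubeadm join/       { printing = 1 }
    printing {
      sub(/^[ \t]+/, "")          # drop leading indentation
      print
      if ($0 !~ /\\$/) exit       # stop at the first line without a trailing backslash
    }
  ' "$log_file"
}

# Usage on the master:
#   worker_join_cmd kubeadm-init.log
```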

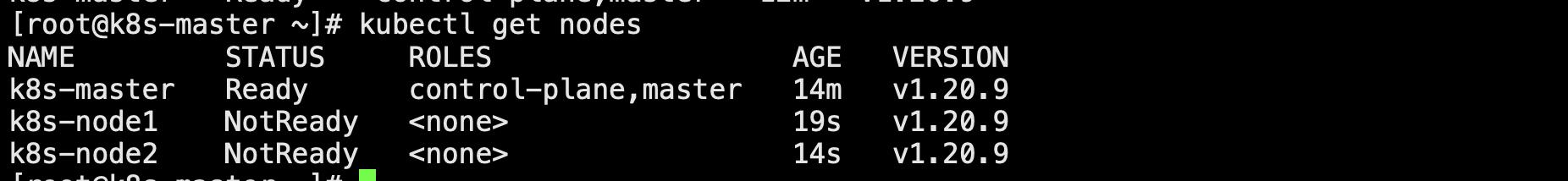

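Back on the master, list the cluster's nodes (kubectl get nodes): both workers have joined.

After a while, once the nodes finish initializing, they switch to the Ready state.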
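Instead of re-reading the table by eye, the node list can be filtered for anything not yet Ready. Below is a minimal sketch; not_ready_nodes is a hypothetical name, and it assumes the default column order of kubectl get nodes --no-headers (NAME STATUS ROLES AGE VERSION). kubectl wait, shown in the comments, is a standard kubectl subcommand that blocks until the condition holds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and list nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 " (" $2 ")" }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
# Or block until every node reports Ready (gives up after 5 minutes):
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```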

3. Verify that the cluster recovers automatically

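A Kubernetes cluster recovers by itself: if you shut down and restart all three cloud hosts from the QingCloud console, the k8s applications come back up automatically. You can verify this with the following commands:

# List all nodes in the cluster
kubectl get nodes

# List what is deployed in the cluster, similar to docker ps
# (a running application is called a container in Docker and a Pod in k8s)
kubectl get pods -A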
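After a restart, recovery is complete once no Pod is left in a bad state. Below is a minimal sketch of a filter for that; unhealthy_pods is a hypothetical name, and it assumes the default column order of kubectl get pods -A --no-headers (NAMESPACE NAME READY STATUS RESTARTS AGE). Rerun it until it prints nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -A --no-headers` on stdin and
# print pods that are not Running or not fully ready (Completed pods are skipped).
unhealthy_pods() {
  awk '{
    split($3, r, "/")                # READY is "ready/total", e.g. 1/1
    if ($4 == "Completed") next      # finished Job pods are expected to show 0/1
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage on the master:
#   kubectl get pods -A --no-headers | unhealthy_pods
```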

4. Deploy the k8s web UI: Dashboard

Dashboard is the official web UI for Kubernetes clusters.

1. Download and deploy Dashboard

In k8s you can create applications from YAML manifest files; the following command creates the resources a file describes:

# Create resources in the cluster from a manifest file
kubectl apply -f xxxx.yaml

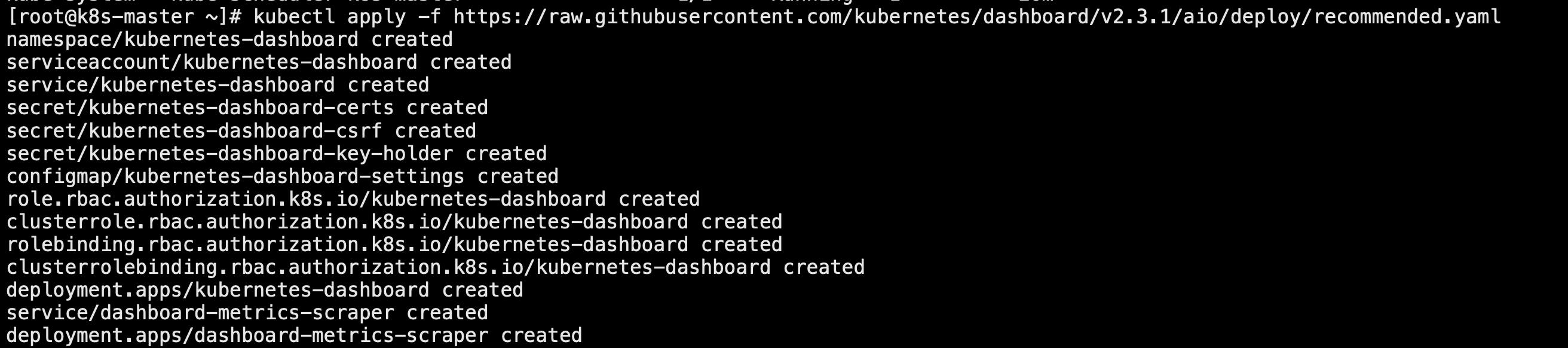

Next, install Dashboard from its manifest:

# Run on the master node to install Dashboard
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

If the download fails, create a file with the following content and apply it with kubectl apply -f <file name>:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.3.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

A successful deployment is shown below:

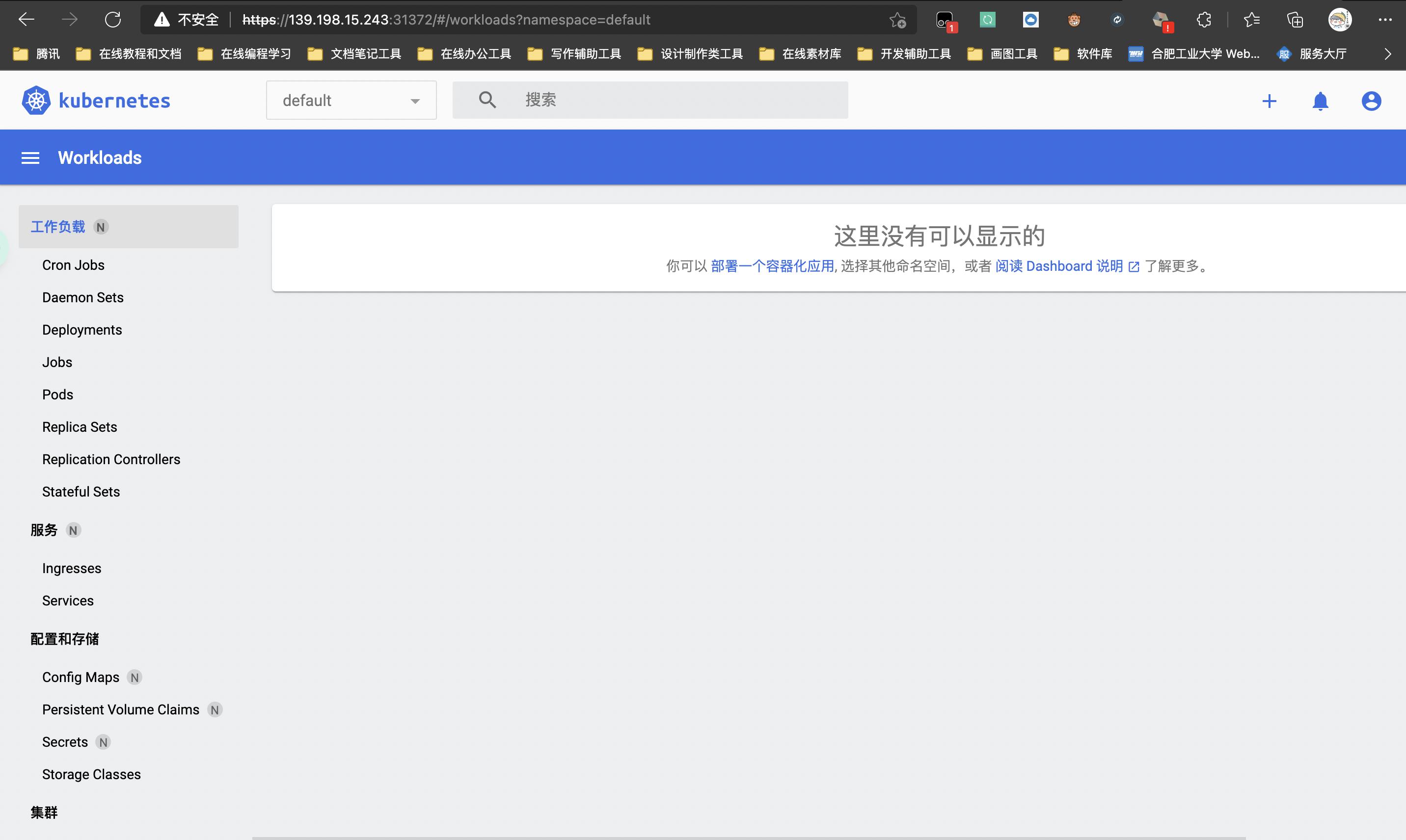

2. Expose the Dashboard port

# 1. Expose the Dashboard web UI port on the machines
# In the editor, change type: ClusterIP to type: NodePort
kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

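Remember to change type: ClusterIP to type: NodePort in the file. For now it is enough to know that a NodePort Service opens the same port on every node, so it can be reached from outside the cluster, including through a node's public IP; the details come later.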

# 2. Find the assigned port, then allow it in the security group
kubectl get svc -A | grep kubernetes-dashboard

Here the port is 31372; open it in the QingCloud security group settings.

Dashboard is then reachable at the public IP of any machine in the cluster plus this port. Be sure to use the https:// prefix.

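Note: if a Chrome-based browser warns that the connection is not private and will not let you continue, type thisisunsafe while the warning page is focused. Type it on the page itself, not in the address bar; the browser then proceeds to the site automatically.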
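The interactive kubectl edit and the grep lookup above can also be done non-interactively. Below is a minimal sketch, assuming kubectl is configured on the master; kubectl patch and -o jsonpath are standard kubectl features, while the three function names are hypothetical. 30000-32767 is the default NodePort range (configurable with the API server's --service-node-port-range flag).

```shell
#!/usr/bin/env bash
# Hypothetical helpers around standard kubectl features.

# Non-interactive alternative to `kubectl edit`: switch the Service to NodePort
expose_dashboard() {
  kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}

# Print the node port Kubernetes assigned (31372 in this tutorial's run)
dashboard_node_port() {
  kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the https URL, rejecting ports outside the default NodePort range
dashboard_url() {
  local ip="$1" port="$2"
  if [[ "$port" =~ ^[0-9]+$ ]] && (( port >= 30000 && port <= 32767 )); then
    echo "https://${ip}:${port}"
  else
    echo "port '${port}' is outside the default NodePort range 30000-32767" >&2
    return 1
  fi
}

# Usage on the master (PUBLIC_IP holds any node's public IP):
#   expose_dashboard
#   dashboard_url "$PUBLIC_IP" "$(dashboard_node_port)"
```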

3. Create an access account

# 1. Create the access account: prepare a YAML file
vim dash-user.yaml

# File contents:
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

# 2. Then apply the file
kubectl apply -f dash-user.yaml

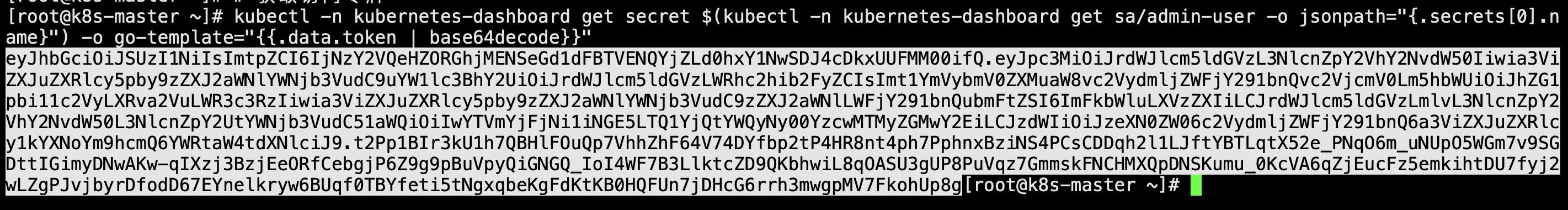

4. Get the access token

# Get the access token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IjNzY2VQeHZORGhjMENSeGd1dFBTVENQYjZLd0hxY1NwSDJ4cDkxUUFMM00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWR3c3RzIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwYTVmYjFjNi1iNGE5LTQ1YjQtYWQyNy00YzcwMTMyZGMwY2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.t2Pp1BIr3kU1h7QBHlFOuQp7VhhZhF64V74DYfbp2tP4HR8nt4ph7PphnxBziNS4PCsCDDqh2l1LJftYBTLqtX52e_PNqO6m_uNUpO5WGm7v9SGDttIGimyDNwAKw-qIXzj3BzjEeORfCebgjP6Z9g9pBuVpyQiGNGQ_IoI4WF7B3LlktcZD9QKbhwiL8qOASU3gUP8PuVqz7GmmskFNCHMXQpDNSKumu_0KcVA6qZjEucFz5emkihtDU7fyj2wLZgPJvjbyrDfodD67EYnelkryw6BUqf0TBYfeti5tNgxqbeKgFdKtKB0HQFUn7jDHcG6rrh3mwgpMV7FkohUp8g

Copy the token and use it to log in; you will land in the Dashboard management UI.
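The token is a JWT whose middle segment is base64url-encoded JSON naming the service account it belongs to. Decoding it is a quick way to confirm you copied the admin-user token and not some other secret. Below is a minimal sketch; jwt_payload is a hypothetical name, and base64 -d assumes GNU coreutils. Also note that the command above relies on the token Secret that Kubernetes 1.20 creates automatically for each ServiceAccount. From Kubernetes 1.24 on, such Secrets are no longer auto-created, and kubectl -n kubernetes-dashboard create token admin-user issues a token instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (middle segment) of a JWT.
# JWTs use unpadded base64url, so map the alphabet back to standard base64
# and restore the padding before decoding.
jwt_payload() {
  local payload
  payload=$(printf '%s' "$1" | cut -d. -f2)
  payload="${payload//_//}"   # base64url "_" -> base64 "/"
  payload="${payload//-/+}"   # base64url "-" -> base64 "+"
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage: the "sub" field should read
# system:serviceaccount:kubernetes-dashboard:admin-user
#   jwt_payload "$TOKEN"
```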
