语义分割专题语义分割相关工作--Fully Convolutional DenseNet

Posted mind_programmonkey

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了语义分割专题语义分割相关工作--Fully Convolutional DenseNet相关的知识,希望对你有一定的参考价值。

The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation

在本文中扩充了DenseNets,以解决语义分割的问题。在城市场景基准数据集(CamVid和Gatech)上获得了最优异的结果,没有使用进一步的后处理模块(如CRF)和预训练模型。此外,由于模型的优异结构,这个方根比当前发布在这些数据集上取得了最佳的网络参数要少得多。

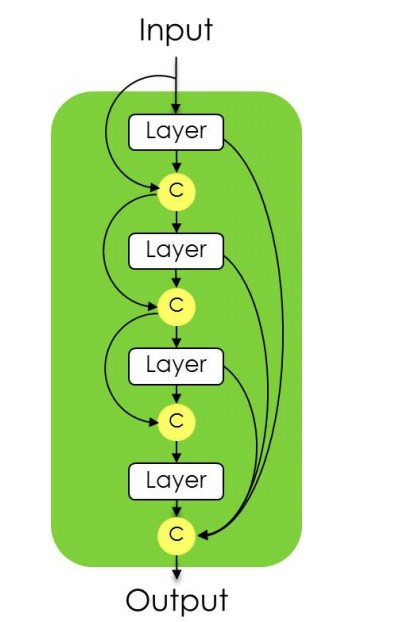

DenseNets背后的思想是让每一层以一种前馈的方式与所有层相连接,能够让网络更容易训练,更加准确。

主要贡献在:

- 针对语义分割任务,将DenseNets的结构扩展到了全卷积网络中;

- 提出密集网络中进行上采样路径的方式,这要比其他的上采样路径性能更好。

- 证明了网络能够在标准的基准测试数据集上产生较好的效果。

https://arxiv.org/abs/1611.09326

-

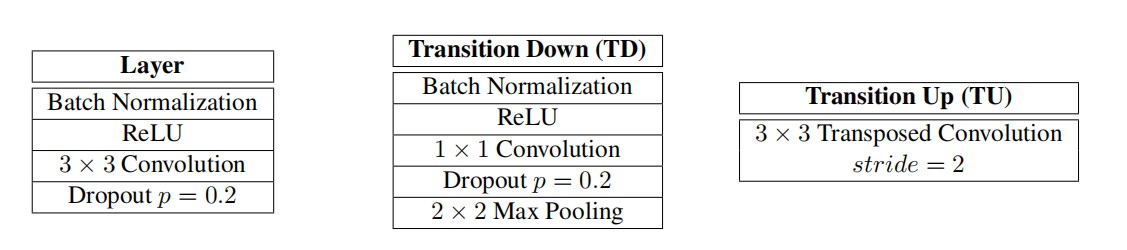

DenseBlock: BatchNormalization + Activation [ Relu ] + Convolution2D + Dropout

-

TransitionDown: BatchNormalization + Activation [ Relu ] + Convolution2D + Dropout + MaxPooling2D

-

TransitionUp: Deconvolution2D (Convolutions Transposed)

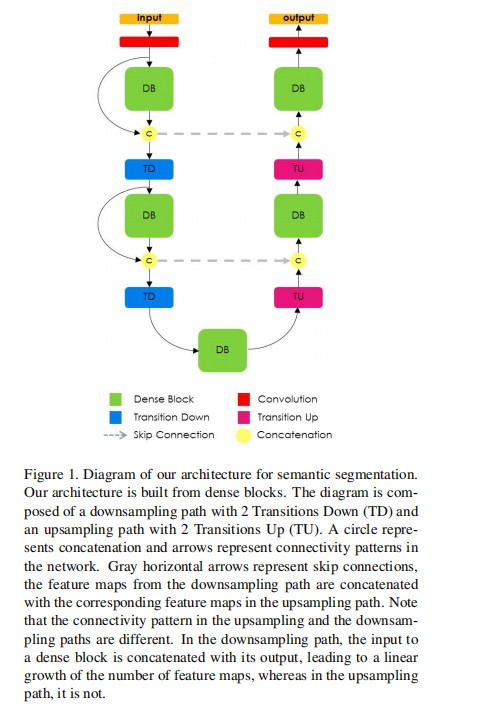

论文架构:

-

Abstract

一个典型的图像分割模型的结构是:一个下采样的模块用来提取粗糙的语义信息;之后一个经过训练的上采样模型来恢复图像的尺寸;最后一个后处理单元来精修模型预测。发现了一个新的网络架构DenseNet

将DenseNets扩展到语义分割上

-

Introduction

FCN为了补偿池化层造成的损失,FCN引进了skip connections跳跃连接结构,在下采样和上采样之间的路径中。这种结构有利于增加准确率以及帮助快速训练优化。

DenseNet:parameter efficiency;implicit deep supervision;feature reuse;以便skip connections 和 multi-scale supervision

论文主要贡献:将DenseNet网络架构引入到了FCN当中,并缓解了特征图探索,也就是只使用前几层;提出基于dense blocks的上采样路径;在几个数据集达到最先进。

-

Related Work

主要改进的措施:改进上采样的路径(从简单的双线性插值 - > unpooling or transposed connvolutions -> skip connections);

引进广泛的语义理解;扩张卷积;RNNS;无监督的全局图像描述符;提出一个基于扩张卷积的语义模块来扩大网络呃感受野

赋予FCN结构化的能力:增加一个CRF模块(Conditional Random Fields)

-

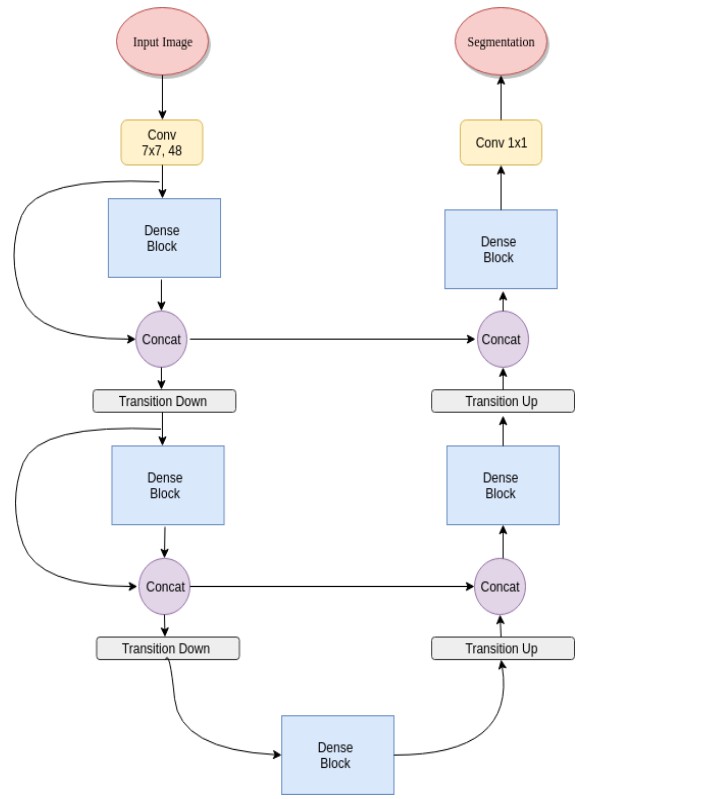

Fully Convolutional DenseNets

a down sampling path;an upsampling path;skip connections;

跳过连接通过重用特征映射帮助上采样路径从下采样路径恢复空间详细信息。

我们模型的目标是通过扩展更复杂的DenseNet体系结构来进一步利用特征重用,同时避免网络上采样路径上的特征爆炸。DenseNetss回顾;DenseNets到FCNS中;语义分割;

-

Experiments

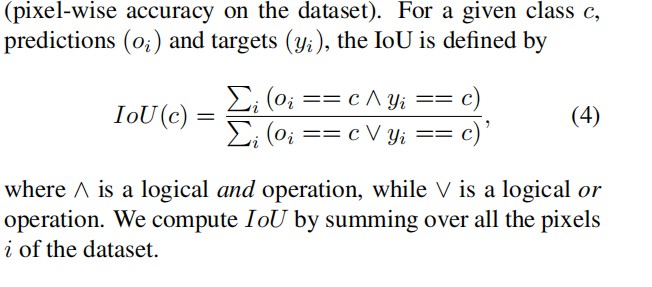

两个基准数据集;IOU;训练细节:HeUniform和RMSprop;

两个数据集;

-

Conclusion

公式

代码

模块化

from keras.layers import Activation,Conv2D,MaxPooling2D,UpSampling2D,Dense,BatchNormalization,Input,Reshape,multiply,add,Dropout,AveragePooling2D,GlobalAveragePooling2D,concatenate

from keras.layers.convolutional import Conv2DTranspose

from keras.models import Model

import keras.backend as K

from keras.regularizers import l2

from keras.engine import Layer,InputSpec

from keras.utils import conv_utils

def BN_ReLU_Conv(inputs, n_filters, filter_size=3, dropout_p=0.2):

'''Apply successivly BatchNormalization, ReLu nonlinearity, Convolution and Dropout (if dropout_p > 0)'''

l = BatchNormalization()(inputs)

l = Activation('relu')(l)

l = Conv2D(n_filters, filter_size, padding='same', kernel_initializer='he_uniform')(l)

if dropout_p != 0.0:

l = Dropout(dropout_p)(l)

return l

def TransitionDown(inputs, n_filters, dropout_p=0.2):

""" Apply first a BN_ReLu_conv layer with filter size = 1, and a max pooling with a factor 2 """

l = BN_ReLU_Conv(inputs, n_filters, filter_size=1, dropout_p=dropout_p)

l = MaxPooling2D((2,2))(l)

return l

def TransitionUp(skip_connection, block_to_upsample, n_filters_keep):

'''Performs upsampling on block_to_upsample by a factor 2 and concatenates it with the skip_connection'''

#Upsample and concatenate with skip connection

l = Conv2DTranspose(n_filters_keep, kernel_size=3, strides=2, padding='same', kernel_initializer='he_uniform')(block_to_upsample)

l = concatenate([l, skip_connection], axis=-1)

return l

def SoftmaxLayer(inputs, n_classes):

"""

Performs 1x1 convolution followed by softmax nonlinearity

The output will have the shape (batch_size * n_rows * n_cols, n_classes)

"""

l = Conv2D(n_classes, kernel_size=1, padding='same', kernel_initializer='he_uniform')(inputs)

l = Reshape((-1, n_classes))(l)

l = Activation('softmax')(l)#or softmax for multi-class

return l

Using TensorFlow backend.

FC_DenseNet

def FC_DenseNet(

input_shape=(None, None, 3),

n_classes=1,

n_filters_first_conv=48,

n_pool=5,

growth_rate=16,

n_layers_per_block=[4, 5, 7, 10, 12, 15, 12, 10, 7, 5, 4],

dropout_p=0.2

):

if type(n_layers_per_block) == list:

print(len(n_layers_per_block))

elif type(n_layers_per_block) == int:

n_layers_per_block = [n_layers_per_block] * (2 * n_pool + 1)

else:

raise ValueError

#####################

# First Convolution #

#####################

inputs = Input(shape=input_shape)

stack = Conv2D(filters=n_filters_first_conv, kernel_size=3, padding='same', kernel_initializer='he_uniform')(inputs)

n_filters = n_filters_first_conv

#####################

# Downsampling path #

#####################

skip_connection_list = []

for i in range(n_pool):

for j in range(n_layers_per_block[i]):

l = BN_ReLU_Conv(stack, growth_rate, dropout_p=dropout_p)

stack = concatenate([stack, l])

n_filters += growth_rate

skip_connection_list.append(stack)

stack = TransitionDown(stack, n_filters, dropout_p)

skip_connection_list = skip_connection_list[::-1]

#####################

# Bottleneck #

#####################

block_to_upsample = []

for j in range(n_layers_per_block[n_pool]):

l = BN_ReLU_Conv(stack, growth_rate, dropout_p=dropout_p)

block_to_upsample.append(l)

stack = concatenate([stack, l])

block_to_upsample = concatenate(block_to_upsample)

#####################

# Upsampling path #

#####################

for i in range(n_pool):

n_filters_keep = growth_rate * n_layers_per_block[n_pool + i]

stack = TransitionUp(skip_connection_list[i], block_to_upsample, n_filters_keep)

block_to_upsample = []

for j in range(n_layers_per_block[n_pool + i + 1]):

l = BN_ReLU_Conv(stack, growth_rate, dropout_p=dropout_p)

block_to_upsample.append(l)

stack = concatenate([stack, l])

block_to_upsample = concatenate(block_to_upsample)

#####################

# Softmax #

#####################

output = SoftmaxLayer(stack, n_classes)

model = Model(inputs=inputs, outputs=output)

return model

以上是关于语义分割专题语义分割相关工作--Fully Convolutional DenseNet的主要内容,如果未能解决你的问题,请参考以下文章