OpenCV Image Processing: Common Image Stitching Methods

Posted by C君莫笑


Preface: This article was compiled by the editors of 小常识网 (cha138.com) and covers common image stitching methods in OpenCV.

Common OpenCV Image Stitching Methods (1): Direct Concatenation

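`vconcat()` concatenates images vertically and requires all inputs to have the same width; `hconcat()` concatenates them horizontally and requires the same height. In both cases the images must also have the same type (depth and number of channels).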

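```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>
using namespace cv;
using namespace std;

int main()
{
    Mat img = imread("girl.jpg");
    if (img.empty()) { cout << "Failed to load girl.jpg" << endl; return -1; }

    vector<Mat> vImgs{img, img};   // same width for vconcat, same height for hconcat, same type
    Mat result;
    vconcat(vImgs, result);        // vertical concatenation
    //hconcat(vImgs, result);      // horizontal concatenation

    imshow("result", result);
    waitKey(0);
    return 0;
}
```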
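The size rule is easiest to see in a dependency-free sketch of vertical concatenation on a single-channel, row-major buffer. The `Image` struct and `vconcatSketch` function below are hypothetical illustrations, not OpenCV API; the real `cv::vconcat` also checks the type and handles multiple channels.

```cpp
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical single-channel, row-major image used only for illustration.
struct Image {
    int rows = 0, cols = 0;
    std::vector<std::uint8_t> data;   // rows * cols pixels, stored row by row
};

// Stack images top to bottom, like cv::vconcat: every input must have the same width.
Image vconcatSketch(const std::vector<Image>& imgs) {
    Image out;
    if (imgs.empty()) return out;
    out.cols = imgs[0].cols;
    for (const Image& im : imgs) {
        if (im.cols != out.cols)
            throw std::invalid_argument("vconcat: all images must have the same width");
        out.rows += im.rows;
        // With row-major storage, vertical stacking is a plain append of the pixel buffers
        out.data.insert(out.data.end(), im.data.begin(), im.data.end());
    }
    return out;
}
```

Horizontal concatenation is not a plain append for row-major data: each output row is built from one row of every input, which is why `hconcat()` needs equal heights instead.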

Other stitching functions: the Stitcher class

vector<Mat> images;

images.push_back(imread("stitch1.jpg"));

images.push_back(imread("stitch2.jpg"));

// 设置拼接模式与参数

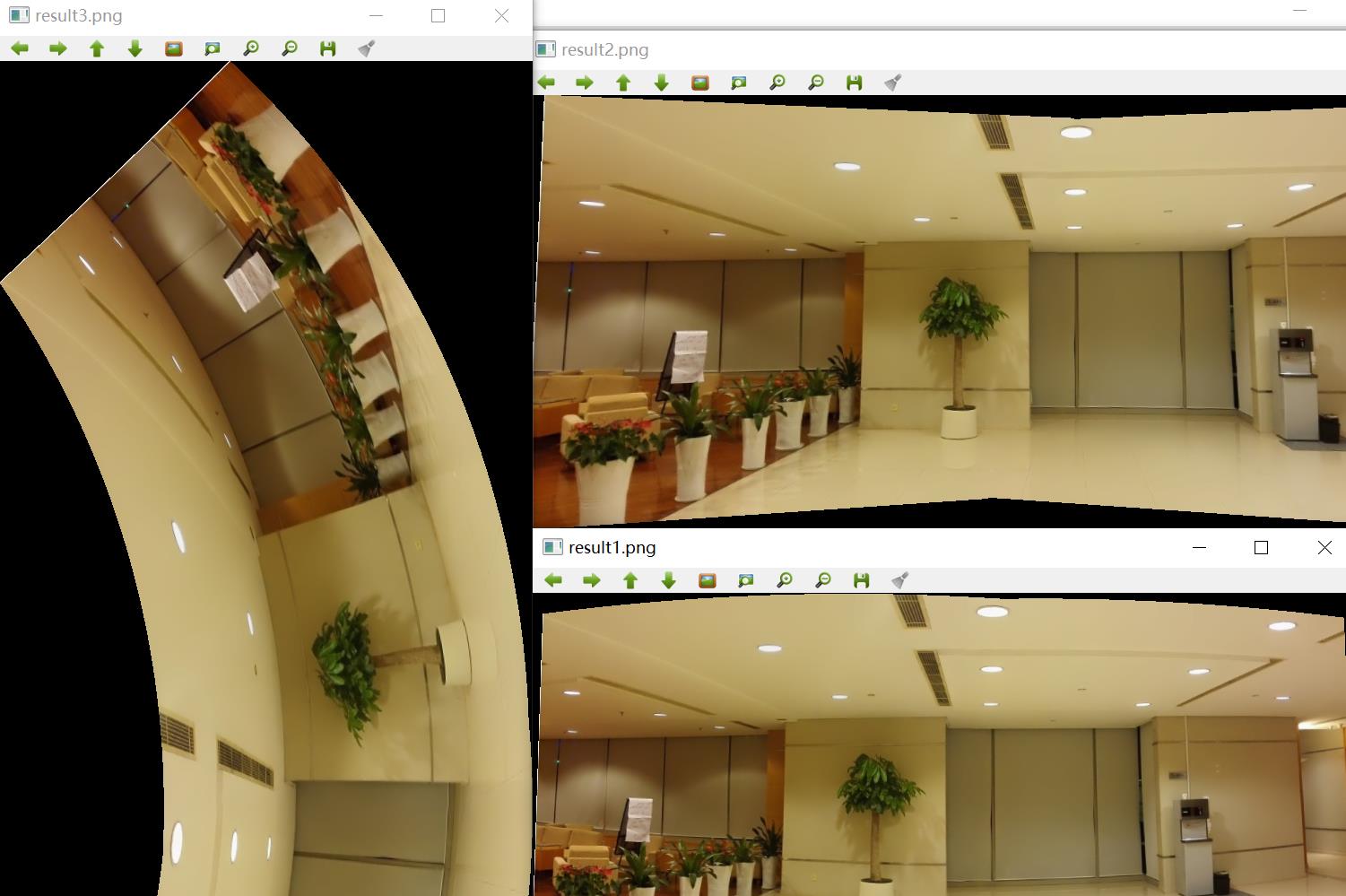

Mat result1, result2, result3;

Stitcher::Mode mode = Stitcher::PANORAMA;

Ptr<Stitcher> stitcher = Stitcher::create(mode);

// 拼接方式-多通道融合

auto blender = detail::Blender::createDefault(detail::Blender::MULTI_BAND);

stitcher->setBlender(blender);

// 拼接

Stitcher::Status status = stitcher->stitch(images, result1);

// 平面曲翘拼接

auto plane_warper = makePtr<cv::PlaneWarper>();

stitcher->setWarper(plane_warper);

status = stitcher->stitch(images, result2);

// 鱼眼拼接

auto fisheye_warper = makePtr<cv::FisheyeWarper>();

stitcher->setWarper(fisheye_warper);

status = stitcher->stitch(images, result3);

// 检查返回

if (status != Stitcher::OK)

{

cout << "Can't stitch images, error code = " << int(status) << endl;

return EXIT_FAILURE;

}

imshow("result1.png", result1);

imshow("result2.png", result2);

imshow("result3.png", result3);

waitKey(0);

图示:
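`PlaneWarper` and the spherical warper that `Stitcher::create(Stitcher::PANORAMA)` uses by default differ mainly in how a viewing ray is mapped onto the output image. The sketch below uses the textbook forms (plane: u = f·x/z; sphere: u = f·atan2(x, z), v = f·atan2(y, √(x² + z²))). These are assumptions for illustration, not a copy of OpenCV's internal projectors, and `UV`, `projectPlane` and `projectSphere` are hypothetical names. The sketch shows why a plane warp stretches wide panoramas: the horizontal coordinate grows with tan θ rather than with θ.

```cpp
#include <cmath>

struct UV { double u, v; };

// Planar projection of the ray (x, y, z) with focal length f (requires z > 0).
UV projectPlane(double x, double y, double z, double f) {
    return {f * x / z, f * y / z};
}

// Spherical projection: longitude and latitude of the ray, scaled by f.
UV projectSphere(double x, double y, double z, double f) {
    return {f * std::atan2(x, z), f * std::atan2(y, std::sqrt(x * x + z * z))};
}
```

For a ray 80° off the optical axis, the plane projection lands at about 5.67 f (tan 80°), while the spherical one lands at about 1.40 f (80° in radians).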

Common OpenCV Image Stitching Methods (2): Template-Matching-Based Stitching

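- Applicability: the images overlap, and there is no significant scale change or distortion between them.

- Advantages: simple and fast (compared with SIFT feature-matching stitching).

- Typical use: stitching images captured horizontally by two adjacent cameras.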

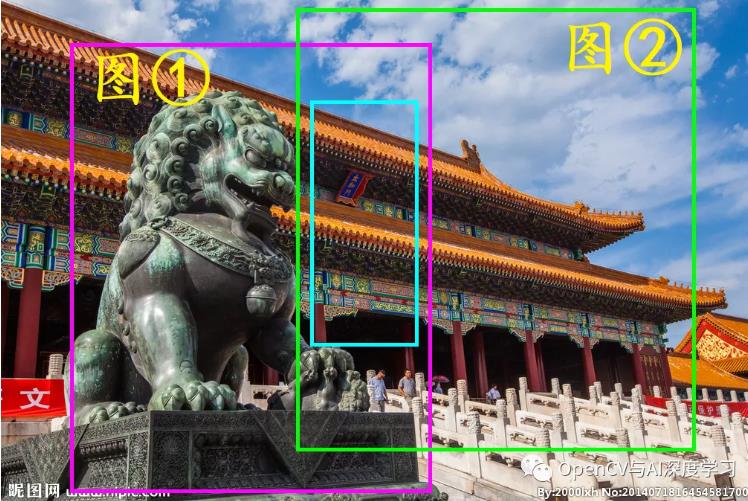

The following two ROIs are cropped out as the images to be stitched.

Image ① to be stitched:

Image ② to be stitched:

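Approach: crop part of the common (overlapping) region of image ① as a template and search for it in image ②. From the best match position, compute how many pixels image ② must be shifted in X and Y, then paste the corresponding part of image ② into the large output image. The template is the cyan region in the figure.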
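`matchTemplate` with `TM_CCORR_NORMED` scores every template position by normalized cross-correlation, R(x, y) = Σ T·I / √(Σ T² · Σ I²), and the best match is the maximum. Below is a brute-force sketch of that scoring without OpenCV, for intuition only. The `Gray` and `Match` types and `matchTemplateNCC` are hypothetical names, and OpenCV's own implementation is far faster.

```cpp
#include <cmath>
#include <vector>

// Row-major grayscale image (hypothetical helper type for this sketch).
struct Gray {
    int rows, cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[r * cols + c]; }
};

struct Match { int x, y; double score; };

// Brute-force normalized cross-correlation (the TM_CCORR_NORMED formula):
// R(x,y) = sum(T*I) / sqrt(sum(T^2) * sum(I^2)) over the window at (x,y).
Match matchTemplateNCC(const Gray& img, const Gray& tpl) {
    Match best{0, 0, -1.0};
    for (int y = 0; y + tpl.rows <= img.rows; ++y) {
        for (int x = 0; x + tpl.cols <= img.cols; ++x) {
            double cross = 0, tt = 0, ii = 0;
            for (int r = 0; r < tpl.rows; ++r) {
                for (int c = 0; c < tpl.cols; ++c) {
                    double t = tpl.at(r, c), i = img.at(y + r, x + c);
                    cross += t * i;
                    tt += t * t;
                    ii += i * i;
                }
            }
            double denom = std::sqrt(tt * ii);
            double score = denom > 0 ? cross / denom : 0.0;
            if (score > best.score) best = {x, y, score};   // keep the maximum, like maxLoc
        }
    }
    return best;
}
```

Because this score does not subtract the window mean, a uniformly brighter patch can still score fairly high. `TM_CCOEFF_NORMED` subtracts the means first and may be more tolerant of exposure differences between two cameras.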

Part of the code and the results:

```cpp
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <iostream>
using namespace cv;
using namespace std;

int main()
{
    Mat imgL = imread("A.jpg");
    Mat imgR = imread("B.jpg");
    if (imgL.empty() || imgR.empty()) { cout << "Failed to load A.jpg / B.jpg" << endl; return -1; }

    double start = getTickCount();
    Mat grayL, grayR;
    cvtColor(imgL, grayL, COLOR_BGR2GRAY);
    cvtColor(imgR, grayR, COLOR_BGR2GRAY);

    // Template: part of the overlap in the left image (coordinates chosen for this image pair)
    Rect rectCut = Rect(372, 122, 128, 360);
    // Search region: the left half of the right image
    Rect rectMatched = Rect(0, 0, imgR.cols / 2, imgR.rows);
    Mat imgTemp = grayL(rectCut);
    Mat imgMatched = grayR(rectMatched);

    // Normalized cross-correlation: the best match is the maximum of matchResult
    Mat matchResult;
    matchTemplate(imgMatched, imgTemp, matchResult, TM_CCORR_NORMED);
    double minValue, maxValue;
    Point minLoc, maxLoc;
    minMaxLoc(matchResult, &minValue, &maxValue, &minLoc, &maxLoc);

    // Canvas: left image columns [0, rectCut.x) followed by right image columns [maxLoc.x, imgR.cols)
    Mat dstImg(imgL.rows, rectCut.x + imgR.cols - maxLoc.x, CV_8UC3, Scalar::all(0));
    int wL = std::min(imgL.cols, dstImg.cols);
    imgL(Rect(0, 0, wL, imgL.rows)).copyTo(dstImg(Rect(0, 0, wL, imgL.rows)));

    // Debug image: mark the matched position in the right image
    Mat debugImg = imgR.clone();
    rectangle(debugImg, Rect(maxLoc.x, maxLoc.y, imgTemp.cols, imgTemp.rows), Scalar(0, 255, 0), 2, 8);
    imwrite("match.jpg", debugImg);

    // Vertical shift of the right image; handles both upward and downward shifts
    int dy = maxLoc.y - rectCut.y;
    int srcY = std::max(dy, 0), dstY = std::max(-dy, 0);
    int h = std::min(imgR.rows - srcY, dstImg.rows - dstY);
    Mat roiMatched = imgR(Rect(maxLoc.x, srcY, imgR.cols - maxLoc.x, h));
    roiMatched.copyTo(dstImg(Rect(rectCut.x, dstY, roiMatched.cols, h)));

    double useTime = (getTickCount() - start) / getTickFrequency();
    cout << "use-time : " << useTime << "s" << endl;
    imwrite("dst.jpg", dstImg);
    cout << "Done!" << endl;
    return 0;
}
```
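The least obvious part of this method is the geometry. The template cut at `rectCut` in the left image is found at `maxLoc` in the right image, so the right image is shifted by `rectCut.x - maxLoc.x` horizontally and `rectCut.y - maxLoc.y` vertically. The sketch below isolates that arithmetic in plain C++. `Layout` and `computeLayout` are hypothetical names, and the sketch assumes the right image may sit either higher or lower than the left one.

```cpp
#include <algorithm>

// Hypothetical helper mirroring the canvas arithmetic of the template-matching stitch.
struct Layout {
    int dstWidth;   // width of the stitched canvas
    int srcY;       // first row copied from the right image
    int dstY;       // canvas row where that row is placed
    int height;     // number of rows copied from the right image
};

// cutX, cutY: template position in the left image (rectCut.x, rectCut.y)
// matchX, matchY: best match position in the right image (maxLoc.x, maxLoc.y)
Layout computeLayout(int leftRows, int rightRows, int rightCols,
                     int cutX, int cutY, int matchX, int matchY) {
    Layout out{};
    // Left image columns [0, cutX) followed by right image columns [matchX, rightCols)
    out.dstWidth = cutX + (rightCols - matchX);
    int dy = matchY - cutY;   // vertical shift of the right image relative to the left
    out.srcY = std::max(dy, 0);
    out.dstY = std::max(-dy, 0);
    // Copy only the rows that exist in both the right image and the canvas
    out.height = std::min(rightRows - out.srcY, leftRows - out.dstY);
    return out;
}
```

For example, with hypothetical 640×480 inputs, `rectCut` at (372, 122) and a match at (20, 130), the canvas is 372 + 620 = 992 pixels wide and the right image is copied from its row 8 onward.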

Matching result:

Stitching result:

Common OpenCV Image Stitching Methods (3): SIFT Feature-Matching Stitching

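Characteristics and applicability: the images share enough overlapping regions with common features, and there is no significant scale change or distortion between them.

Advantages: tolerates some tilt. Disadvantages: needs enough common feature regions to match, is relatively slow, and is prone to crashing when stitching large images.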
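Unlike template matching, which only estimates a translation, this method estimates a 3×3 homography H with `findHomography`, and that is what lets it absorb some tilt. `perspectiveTransform` maps each point (x, y) to ((h00·x + h01·y + h02)/w, (h10·x + h11·y + h12)/w) with w = h20·x + h21·y + h22, and `warpPerspective` resamples a whole image under the same mapping. Below is a minimal sketch of the per-point mapping. `Mat3`, `Pt` and `applyHomography` are hypothetical names, not OpenCV types.

```cpp
#include <array>

using Mat3 = std::array<std::array<double, 3>, 3>;
struct Pt { double x, y; };

// Map a point through a 3x3 homography in homogeneous coordinates,
// the per-point operation that cv::perspectiveTransform performs.
Pt applyHomography(const Mat3& H, Pt p) {
    double xs = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double ys = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w  = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {xs / w, ys / w};   // divide by w to return to image coordinates
}
```

A pure translation has a bottom row of (0, 0, 1), so w = 1. A non-zero h20 or h21 makes w depend on the position, which produces the perspective "tilt".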

The two images to be stitched:

Feature matches:

Stitching result:

After seam processing (smoother transition across the seam):

```cpp
#include <opencv2/opencv.hpp>   // cv::SIFT is part of the main features2d module since OpenCV 4.4
#include <algorithm>
#include <iostream>
#include <vector>
using namespace cv;
using namespace std;

/******************** SIFT feature-matching stitching function *************************/
bool ImageOverlap0(const Mat &img1, const Mat &img2)
{
    Mat g1, g2;   // grayscale copies; the color inputs stay untouched
    cvtColor(img1, g1, COLOR_BGR2GRAY);
    cvtColor(img2, g2, COLOR_BGR2GRAY);

    vector<KeyPoint> keypoints_roi, keypoints_img;   // SIFT keypoints of img1 / img2
    Mat descriptor_roi, descriptor_img;              // SIFT descriptors of img1 / img2
    FlannBasedMatcher matcher;                       // FLANN-based descriptor matcher
    vector<DMatch> matches, good_matches;

    Ptr<SIFT> sift = SIFT::create();
    sift->detectAndCompute(g1, noArray(), keypoints_roi, descriptor_roi);
    sift->detectAndCompute(g2, noArray(), keypoints_img, descriptor_img);
    if (descriptor_roi.empty() || descriptor_img.empty()) return false;

    matcher.match(descriptor_roi, descriptor_img, matches);   // query = img1, train = img2

    // Quick calculation of the max and min distances between matched descriptors
    double max_dist = 0, min_dist = 5000;
    for (const DMatch &m : matches)
    {
        min_dist = std::min(min_dist, (double)m.distance);
        max_dist = std::max(max_dist, (double)m.distance);
    }

    // Keypoint filtering: keep matches closer than 3 * min_dist
    for (const DMatch &m : matches)
        if (m.distance < 3 * min_dist) good_matches.push_back(m);
    cout << good_matches.size() << " matched keypoints kept" << endl;
    if (good_matches.size() < 4) return false;   // findHomography needs at least 4 point pairs

    // Draw the matches
    Mat img_matches;
    drawMatches(img1, keypoints_roi, img2, keypoints_img, good_matches, img_matches,
                Scalar::all(-1), Scalar::all(-1), vector<char>(),
                DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("matches", img_matches);

    // keypoints1: matched points in img2; keypoints2: the corresponding points in img1
    vector<Point2f> keypoints1, keypoints2;
    for (const DMatch &m : good_matches)
    {
        keypoints1.push_back(keypoints_img[m.trainIdx].pt);
        keypoints2.push_back(keypoints_roi[m.queryIdx].pt);
    }

    // Homographies (perspective transforms): H maps img2 -> img1, H2 maps img1 -> img2
    Mat H = findHomography(keypoints1, keypoints2, RANSAC);
    Mat H2 = findHomography(keypoints2, keypoints1, RANSAC);
    if (H.empty() || H2.empty()) return false;

    Mat stitchedImage;   // warped image, which also becomes the stitching result
    int mRows = std::max(img1.rows, img2.rows);
    Size canvas(img1.cols + img2.cols, mRows);

    // Decide left/right from where the matches lie in img1: if most are in img1's
    // right half, the overlap is on img1's right side, so img2 goes on the right
    int count = 0;
    for (const Point2f &p : keypoints2)
        if (p.x >= img1.cols / 2) count++;

    if (count / float(keypoints2.size()) >= 0.5)   // img2 is on the right
    {
        cout << "img1 should be left" << endl;
        warpPerspective(img2, stitchedImage, H, canvas);   // img2 in img1's coordinates
        vector<Point2f> corners = {Point2f(0, 0), Point2f(0, img2.rows),
                                   Point2f(img2.cols, img2.rows), Point2f(img2.cols, 0)};
        vector<Point2f> corners2;
        perspectiveTransform(corners, corners2, H);   // img2's corners after warping
        cout << corners2[0].x << ", " << corners2[0].y << endl;
        cout << corners2[1].x << ", " << corners2[1].y << endl;
        img1.copyTo(stitchedImage(Rect(0, 0, img1.cols, img1.rows)));   // paste img1 on top
    }
    else   // img2 is on the left
    {
        cout << "img2 should be left" << endl;
        warpPerspective(img1, stitchedImage, H2, canvas);   // img1 in img2's coordinates
        vector<Point2f> corners = {Point2f(0, 0), Point2f(0, img1.rows),
                                   Point2f(img1.cols, img1.rows), Point2f(img1.cols, 0)};
        vector<Point2f> corners2;
        perspectiveTransform(corners, corners2, H2);   // img1's corners after warping
        cout << corners2[0].x << ", " << corners2[0].y << endl;
        cout << corners2[1].x << ", " << corners2[1].y << endl;
        img2.copyTo(stitchedImage(Rect(0, 0, img2.cols, img2.rows)));   // paste img2 on top
    }

    imshow("result", stitchedImage);
    imwrite("result.bmp", stitchedImage);
    return true;
}
```
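The seam-processed result shown earlier is not produced by the code above, which simply pastes one image over the other. One common way to soften the seam, assumed here rather than taken from the article, is linear feathering: across the overlap, the left image's weight falls from 1 to 0 while the right image's weight rises. Below is a single-row sketch. `featherRow` is a hypothetical name, and it assumes both rows are already aligned and of equal length, with `end > start`.

```cpp
#include <cstddef>
#include <vector>

// Blend one row of two aligned images across the overlap [start, end).
// Before start: left pixels; from end on: right pixels; in between: a linear ramp.
std::vector<double> featherRow(const std::vector<double>& left,
                               const std::vector<double>& right,
                               std::size_t start, std::size_t end) {
    std::vector<double> out(left.size());
    for (std::size_t x = 0; x < left.size(); ++x) {
        if (x < start) {
            out[x] = left[x];
        } else if (x >= end) {
            out[x] = right[x];
        } else {
            // alpha rises from 0 at start toward 1 at end
            double alpha = double(x - start) / double(end - start);
            out[x] = (1.0 - alpha) * left[x] + alpha * right[x];
        }
    }
    return out;
}
```

In the full 2D case, `start` and `end` would come from the overlap between the pasted image and the warped image's corners (for example `corners2` in the code above), and the blend would be applied to every row and channel.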

Common OpenCV Image Stitching Methods (4): SURF Feature-Matching Stitching

References:

https://www.cnblogs.com/skyfsm/p/7411961.html

https://www.cnblogs.com/skyfsm/p/7401523.html
