Sqoop: migrating MySQL 8 data to Hadoop 3 fails with ClassNotFoundException: Class base_house not found

Posted by 在奋斗的大道

Today I tried to use Sqoop to migrate data from MySQL 8 to Hadoop 3 and got the following error:

2023-03-01 15:00:04,985 WARN mapred.LocalJobRunner: job_local103536620_0001

java.lang.Exception: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class base_house not found

at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:492)

at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:552)

Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class base_house not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2636)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getInputClass(DBConfiguration.java:403)

at org.apache.sqoop.mapreduce.db.DataDrivenDBInputFormat.createDBRecordReader(DataDrivenDBInputFormat.java:270)

at org.apache.sqoop.mapreduce.db.DBInputFormat.createRecordReader(DBInputFormat.java:266)

at org.apache.hadoop.mapred.MapTask$NewTrackingRecordReader.<init>(MapTask.java:528)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:771)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:348)

at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:271)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.lang.ClassNotFoundException: Class base_house not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2540)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2634)

... 12 more

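Cause: by default, when importing a table Sqoop generates a Java source file for that table and compiles it into .class and .jar files, which are stored in a per-run subdirectory of /tmp/sqoop-<current user>/compile/. The stack trace above shows the map task running under LocalJobRunner and failing to load the generated base_house class, which suggests that this jar was not on the task's classpath.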

In practice: my current user is root, so the generated files are under /tmp/sqoop-root/compile/:

[root@Hadoop3-master sqoop-root]# ll

total 0

drwxr-xr-x 8 root root 246 Mar 1 15:23 compile

[root@Hadoop3-master sqoop-root]# cd compile/

[root@Hadoop3-master compile]# ll

total 0

drwxr-xr-x 2 root root 222 Mar 1 15:23 134ee3c5a21fd73cc90cac58b11ed36e

drwxr-xr-x 2 root root 199 Mar 1 14:52 8d74f429c3b7b93b3e0387a285d6022e

drwxr-xr-x 2 root root 199 Mar 1 15:22 95f29f8d2f7d2aa440b7b5d722331db5

drwxr-xr-x 2 root root 222 Mar 1 14:35 c86318a7dd407cc0eba026d9ff722706

drwxr-xr-x 2 root root 222 Mar 1 15:00 d4fd2c0f31c7b28fb77453314b7ee799

drwxr-xr-x 2 root root 199 Mar 1 14:33 e323f1801202ec8e470f4501a249622f
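With several hash-named directories under compile/, it is not obvious which one holds the jar from the latest run. Below is a minimal sketch of a helper that prints the most recently modified jar for a given table. The function name `newest_sqoop_jar` and its two parameters are illustrative assumptions, not part of Sqoop.

```shell
# Print the most recently modified <table>.jar found one level below a
# Sqoop compile directory. Both arguments are illustrative parameters:
#   $1 = compile directory (e.g. /tmp/sqoop-root/compile)
#   $2 = table name        (e.g. base_house)
newest_sqoop_jar() {
  # ls -t sorts newest first; the error is silenced when nothing matches,
  # in which case the function prints nothing.
  ls -t "$1"/*/"$2".jar 2>/dev/null | head -n 1
}

# Example call (not executed here):
#   newest_sqoop_jar "/tmp/sqoop-$(whoami)/compile" base_house
```

If the function prints nothing, no jar for that table was found under the given directory.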

Copy base_house.jar from the *ed36e directory (134ee3c5a21fd73cc90cac58b11ed36e) to /usr/local/sqoop/lib:

cp /tmp/sqoop-root/compile/134ee3c5a21fd73cc90cac58b11ed36e/base_house.jar /usr/local/sqoop/lib/

Then re-run the import:

[root@Hadoop3-master bin]# sqoop import --connect "jdbc:mysql://192.168.43.10:3306/house?useSSL=false&serverTimezone=UTC&zeroDateTimeBehavior=CONVERT_TO_NULL" --username root --password 123456 --table base_house --target-dir '/sqoop/base-house' --fields-terminated-by ',' -m 1;

Warning: /usr/local/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2023-03-01 15:23:07,047 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2023-03-01 15:23:07,202 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2023-03-01 15:23:07,405 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

2023-03-01 15:23:07,405 INFO tool.CodeGenTool: Beginning code generation

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

2023-03-01 15:23:07,949 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `base_house` AS t LIMIT 1

2023-03-01 15:23:08,037 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `base_house` AS t LIMIT 1

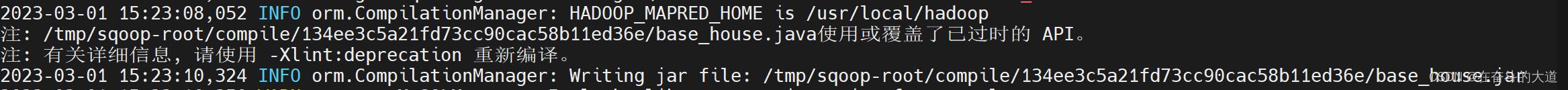

2023-03-01 15:23:08,052 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop

Note: /tmp/sqoop-root/compile/134ee3c5a21fd73cc90cac58b11ed36e/base_house.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2023-03-01 15:23:10,324 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/134ee3c5a21fd73cc90cac58b11ed36e/base_house.jar

2023-03-01 15:23:10,350 WARN manager.MySQLManager: It looks like you are importing from mysql.

2023-03-01 15:23:10,350 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

2023-03-01 15:23:10,350 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

2023-03-01 15:23:10,368 INFO mapreduce.ImportJobBase: Beginning import of base_house

2023-03-01 15:23:10,370 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2023-03-01 15:23:10,627 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2023-03-01 15:23:11,818 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

2023-03-01 15:23:11,969 INFO impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2023-03-01 15:23:12,100 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2023-03-01 15:23:12,101 INFO impl.MetricsSystemImpl: JobTracker metrics system started

2023-03-01 15:23:12,694 INFO db.DBInputFormat: Using read commited transaction isolation

2023-03-01 15:23:12,729 INFO mapreduce.JobSubmitter: number of splits:1

2023-03-01 15:23:12,936 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local755394790_0001

2023-03-01 15:23:12,936 INFO mapreduce.JobSubmitter: Executing with tokens: []

2023-03-01 15:23:13,290 INFO mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/job_local755394790_0001_58e4f0dd-e37b-41eb-9676-cf81b9c11936/libjars <- /usr/local/sqoop/bin/libjars/*

2023-03-01 15:23:13,302 WARN fs.FileUtil: Command 'ln -s /tmp/hadoop-root/mapred/local/job_local755394790_0001_58e4f0dd-e37b-41eb-9676-cf81b9c11936/libjars /usr/local/sqoop/bin/libjars/*' failed 1 with: ln: failed to create symbolic link "/usr/local/sqoop/bin/libjars/*": No such file or directory

2023-03-01 15:23:13,303 WARN mapred.LocalDistributedCacheManager: Failed to create symlink: /tmp/hadoop-root/mapred/local/job_local755394790_0001_58e4f0dd-e37b-41eb-9676-cf81b9c11936/libjars <- /usr/local/sqoop/bin/libjars/*

2023-03-01 15:23:13,303 INFO mapred.LocalDistributedCacheManager: Localized file:/tmp/hadoop/mapred/staging/root755394790/.staging/job_local755394790_0001/libjars as file:/tmp/hadoop-root/mapred/local/job_local755394790_0001_58e4f0dd-e37b-41eb-9676-cf81b9c11936/libjars

2023-03-01 15:23:13,394 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

2023-03-01 15:23:13,395 INFO mapreduce.Job: Running job: job_local755394790_0001

2023-03-01 15:23:13,401 INFO mapred.LocalJobRunner: OutputCommitter set in config null

2023-03-01 15:23:13,423 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2023-03-01 15:23:13,423 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2023-03-01 15:23:13,426 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2023-03-01 15:23:13,538 INFO mapred.LocalJobRunner: Waiting for map tasks

2023-03-01 15:23:13,539 INFO mapred.LocalJobRunner: Starting task: attempt_local755394790_0001_m_000000_0

2023-03-01 15:23:13,595 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2023-03-01 15:23:13,597 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2023-03-01 15:23:13,645 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2023-03-01 15:23:13,671 INFO db.DBInputFormat: Using read commited transaction isolation

2023-03-01 15:23:13,679 INFO mapred.MapTask: Processing split: 1=1 AND 1=1

2023-03-01 15:23:13,825 INFO db.DBRecordReader: Working on split: 1=1 AND 1=1

2023-03-01 15:23:13,826 INFO db.DBRecordReader: Executing query: SELECT `id`, `project_no`, `project_name`, `project_address` FROM `base_house` AS `base_house` WHERE ( 1=1 ) AND ( 1=1 )

2023-03-01 15:23:13,840 INFO mapreduce.AutoProgressMapper: Auto-progress thread is finished. keepGoing=false

2023-03-01 15:23:13,847 INFO mapred.LocalJobRunner:

2023-03-01 15:23:14,322 INFO mapred.Task: Task:attempt_local755394790_0001_m_000000_0 is done. And is in the process of committing

2023-03-01 15:23:14,328 INFO mapred.LocalJobRunner:

2023-03-01 15:23:14,328 INFO mapred.Task: Task attempt_local755394790_0001_m_000000_0 is allowed to commit now

2023-03-01 15:23:14,363 INFO output.FileOutputCommitter: Saved output of task 'attempt_local755394790_0001_m_000000_0' to hdfs://Hadoop3-master:9000/sqoop/base-house

2023-03-01 15:23:14,367 INFO mapred.LocalJobRunner: map

2023-03-01 15:23:14,367 INFO mapred.Task: Task 'attempt_local755394790_0001_m_000000_0' done.

2023-03-01 15:23:14,380 INFO mapred.Task: Final Counters for attempt_local755394790_0001_m_000000_0: Counters: 21

File System Counters

FILE: Number of bytes read=6729

FILE: Number of bytes written=567339

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=0

HDFS: Number of bytes written=139

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

HDFS: Number of bytes read erasure-coded=0

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=228

Total committed heap usage (bytes)=215482368

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=139

2023-03-01 15:23:14,381 INFO mapred.LocalJobRunner: Finishing task: attempt_local755394790_0001_m_000000_0

2023-03-01 15:23:14,381 INFO mapred.LocalJobRunner: map task executor complete.

2023-03-01 15:23:14,412 INFO mapreduce.Job: Job job_local755394790_0001 running in uber mode : false

2023-03-01 15:23:14,415 INFO mapreduce.Job: map 100% reduce 0%

2023-03-01 15:23:14,419 INFO mapreduce.Job: Job job_local755394790_0001 completed successfully

2023-03-01 15:23:14,444 INFO mapreduce.Job: Counters: 21

File System Counters

FILE: Number of bytes read=6729

FILE: Number of bytes written=567339

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=0

HDFS: Number of bytes written=139

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

HDFS: Number of bytes read erasure-coded=0

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=228

Total committed heap usage (bytes)=215482368

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=139

2023-03-01 15:23:14,449 INFO mapreduce.ImportJobBase: Transferred 139 bytes in 2.6131 seconds (53.1939 bytes/sec)

2023-03-01 15:23:14,452 INFO mapreduce.ImportJobBase: Retrieved 2 records.

The data was imported successfully.
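To confirm the result without reading the whole log, the record count can be pulled from the final `Retrieved N records.` line. Below is a small sketch, assuming the Sqoop console output was captured to a file. The file name `sqoop.log` and the function name `retrieved_records` are hypothetical.

```shell
# Read a Sqoop console log on stdin and print the number from the last
# "ImportJobBase: Retrieved N records." line; prints nothing if no such
# line is present.
retrieved_records() {
  sed -n 's/.*ImportJobBase: Retrieved \([0-9][0-9]*\) records\..*/\1/p' | tail -n 1
}

# Example call (not executed here); sqoop.log is a hypothetical capture
# of the import's console output:
#   retrieved_records < sqoop.log
```

The imported rows themselves should be readable from the target directory given in the command, for example with `hdfs dfs -cat /sqoop/base-house/part-m-*`. The `part-m-*` pattern assumes the usual naming of map-only output files.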
