DPDK - Installing DPDK from Source and Running the Examples (by quqi99)

Posted quqi99


Author: Zhang Hua  Published: 2021-08-11

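Copyright notice: This article may be freely reproduced, provided the reproduction includes a hyperlink to the original source, the author information, and this copyright notice.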

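Preface

I installed DPDK from source back in 2016, but those notes are now outdated; see: https://blog.csdn.net/quqi99/article/details/51087955/

To install DPDK with juju, see this page and search for "dpdk": https://blog.csdn.net/quqi99/article/details/116893909

This article installs DPDK from source on Ubuntu 20.04 and runs the examples. The biggest difference from the earlier notes is that meson has replaced the Makefile, as the stub Makefile in the source tree shows. Note: the meson packaged in apt is too old for DPDK, so install it with pip3 instead.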

ubuntu@saha:/bak/linux/dpdk$ cat Makefile

.PHONY: all

all:

@echo "To build DPDK please use meson and ninja as described at"

@echo " https://core.dpdk.org/doc/quick-start/"

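Lab machine - building a DPDK test platform on KVM

Use QEMU/KVM to build the DPDK test platform: with virt-manager, create a VM with a 30 GB disk, 4096 MB of memory, 4 CPU cores, and 3 e1000 NICs.

1, Use a CPU model that supports hugepages. Run: sudo virsh edit dpdk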

<cpu mode='custom' match='exact' check='partial'>

<model fallback='allow'>Haswell</model>

<feature policy='force' name='x2apic'/>

<feature policy='force' name='pdpe1gb'/>

<feature policy='disable' name='hle'/>

<feature policy='disable' name='smep'/>

<feature policy='disable' name='rtm'/>

</cpu>

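2, Restart the VM: sudo virsh destroy dpdk && sudo virsh start dpdk

Note: for a VMware Player VM, the following keeps the environment consistent with the KVM setup:

a, Shut down the existing o7k VM (https://blog.csdn.net/quqi99/article/details/97622336) and add two more NICs in the VMware GUI, giving three e1000 NICs in total (check with lspci |grep -i eth). The 82545 is an e1000 NIC and does not support multiple queues, and the free VMware Player does not seem to offer the multi-queue vmxnet3 NIC.

b, Set the VM's CPU count to 4.

c, A VMware VM exposes the same CPU as the physical host by default; use "cat /proc/cpuinfo |grep pdpe1gb" to confirm that the CPU exposes the pdpe1gb (1G hugepage) flag that DPDK can use.

d, Finally, adjust the netplan file and run 'netplan apply' to apply it.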

ubuntu@o7k:~$ cat /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: true
    ens38:
      dhcp4: true
    ens39:
      dhcp4: true
  version: 2

3, Configure hugepages inside the VM. On the host, run 'arp -a' to find the VM's IP address and ssh into the VM, add the following to /etc/default/grub, then run 'sudo update-grub'.

GRUB_CMDLINE_LINUX="default_hugepagesz=2M hugepagesz=2M hugepages=64 isolcpus=1-3"

#GRUB_CMDLINE_LINUX="default_hugepagesz=1G hugepagesz=1G hugepages=1 isolcpus=1-3"

Note: 1G hugepages cannot be resized after boot, whereas 2M hugepages can also be set after boot through nr_hugepages:

mkdir -p /dev/hugepages

mountpoint -q /dev/hugepages || mount -t hugetlbfs nodev /dev/hugepages

echo 64 > /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

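In addition, if you need HPET (High Precision Event Timer) support, the relevant settings must be enabled in the BIOS, the kernel, and DPDK; see [2].

Kernel support for hugepages is enabled by default: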

$ sudo grep -i HUGETLB /boot/config-$(uname -r)

CONFIG_ARCH_WANT_GENERAL_HUGETLB=y

CONFIG_CGROUP_HUGETLB=y

CONFIG_HUGETLBFS=y

CONFIG_HUGETLB_PAGE=y

If you use vfio-pci, remember to enable the IOMMU as well, for example:

ubuntu@saha:~$ cat /proc/cmdline

BOOT_IMAGE=/boot/vmlinuz-4.15.0-151-generic root=UUID=cd6e46ab-dc09-414b-ab22-dd9709bf1712 ro console=ttyS1 transparent_hugepage=never hugepagesz=1G hugepages=16 default_hugepagesz=1G iommu=pt intel_iommu=on

4, Reboot the machine and verify the hugepages

ubuntu@dpdk:~$ cat /proc/meminfo |grep -i hugepages

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

FileHugePages: 0 kB

HugePages_Total: 64

HugePages_Free: 64

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB
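As a rough cross-check of the output above, the following Python sketch parses /proc/meminfo-style text and works out how much memory is reserved as hugepages. The function name hugepage_summary and the embedded sample text are illustrative assumptions, not part of DPDK or the kernel tooling.

```python
import re

def hugepage_summary(meminfo_text):
    """Return (total_pages, free_pages, page_size_kb, reserved_mb)."""
    fields = {}
    for line in meminfo_text.splitlines():
        # Lines look like "HugePages_Total: 64" or "Hugepagesize: 2048 kB".
        m = re.match(r"^(\w+):\s+(\d+)", line)
        if m:
            fields[m.group(1)] = int(m.group(2))
    total = fields["HugePages_Total"]
    free = fields["HugePages_Free"]
    size_kb = fields["Hugepagesize"]
    return total, free, size_kb, total * size_kb // 1024

# Sample copied from the verification output in this article.
SAMPLE = """HugePages_Total: 64
HugePages_Free: 64
Hugepagesize: 2048 kB
"""

print(hugepage_summary(SAMPLE))  # (64, 64, 2048, 128)
```

With 64 pages of 2048 kB each, 128 MB is reserved. On a real machine the text could be read from /proc/meminfo instead of the sample string.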

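Installing from source with meson

DPDK now builds and installs with meson instead of the Makefile (ninja plays the role of make). For comparison, the first line below is a classic autotools flow and the second is its meson equivalent: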

# ./autogen.sh && ./configure && make && sudo make install

# meson build && ninja -C build && sudo ninja -C build install

The makefile approach below is the obsolete legacy method; it is listed here only for comparison.

sudo apt install linux-headers-$(uname -r) libpcap-dev -y

cd dpdk

make config T=x86_64-native-linuxapp-gcc

For the T parameter of 'make config', the official documentation gives the build target format as ARCH-MACHINE-EXECENV-TOOLCHAIN:

- ARCH can be: i686, x86_64, ppc_64

- MACHINE can be: native, ivshmem, power8

- EXECENV can be: linuxapp, bsdapp

- TOOLCHAIN can be: gcc, icc

The DPDK source tree looks like this:

sudo mkdir -p /bak/linux && sudo chown -R $USER /bak/linux/ && cd /bak/linux

git clone https://github.com/DPDK/dpdk.git

ubuntu@saha:/bak/linux/dpdk$ tree -L 1 .

.

├── ABI_VERSION

├── MAINTAINERS

├── Makefile

├── README

├── VERSION

├── app

├── buildtools

├── config

├── devtools

├── doc

├── drivers

├── examples

├── kernel

├── lib

├── license

├── meson.build

├── meson_options.txt

└── usertools

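The DPDK source consists of several directories:

- lib: DPDK library sources

- drivers: DPDK poll-mode driver sources

- app: DPDK application sources (automated tests)

- examples: DPDK example applications

- config, buildtools, mk: framework makefiles, scripts, and configuration files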

By default the UIO driver is not built from the downloaded source. You can edit meson_options.txt and change enable_kmods to true:

option('enable_kmods', type: 'boolean', value: true, description:

'build kernel modules')

Or build the driver directly:

cd /bak/linux/

git clone http://dpdk.org/git/dpdk-kmods

cd dpdk-kmods/linux/igb_uio

make

sudo modprobe uio_pci_generic

sudo insmod igb_uio.ko

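The meson version installed through apt is too old and fails with "ERROR: Unknown type feature", so install meson with pip3. Run pip3 install with sudo, because 'ninja install' also has to run with sudo; if pip3 install runs without sudo while ninja install runs with it, the install step tends to fail with a missing ninja module error.

So the final installation steps are: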

sudo apt install linux-headers-$(uname -r) libpcap-dev make gcc -y

sudo mkdir -p /bak/linux && sudo chown -R $USER /bak/linux/ && cd /bak/linux

git clone https://github.com/DPDK/dpdk.git

# by default the UIO driver is not compiled; either build it separately as below,

# or set enable_kmods=true in meson_options.txt to build it together with dpdk

git clone http://dpdk.org/git/dpdk-kmods

cd dpdk-kmods/linux/igb_uio

make

sudo modprobe uio_pci_generic

sudo insmod igb_uio.ko

#extend space of hdd

sudo pvcreate /dev/vdb

sudo vgextend vgdpdk /dev/vdb

sudo vgs

sudo lvextend -L +10G /dev/mapper/vgdpdk-root

sudo resize2fs /dev/mapper/vgdpdk-root

df -h

cd /bak/linux/dpdk

sudo apt install python3-pip re2c numactl libnuma-dev -y

# NOTE: must use 'sudo' to avoid 'No module named ninja' when running 'ninja install'

sudo pip3 install meson ninja pyelftools

/usr/local/bin/meson -Dexamples=all -Dbuildtype=debug -Denable_trace_fp=true build

#/usr/local/bin/meson --reconfigure -Dexamples=all -Dbuildtype=debug -Denable_trace_fp=true build

#/usr/local/bin/ninja -t clean

/usr/local/bin/ninja -C build -j8

sudo /usr/local/bin/ninja -C build install

Besides installing the executables into /usr/local/bin, 'ninja install' also installs:

- headers -> /usr/local/include

- libraries -> /usr/local/lib/x86_64-linux-gnu/dpdk/

- drivers -> /usr/local/lib64/dpdk/drivers

- libdpdk.pc -> /usr/local/lib/x86_64-linux-gnu/pkgconfig

To reconfigure, add the '--reconfigure' option:

meson --reconfigure -Dexamples=all -Dbuildtype=debug -Denable_trace_fp=true build

More configuration options can also be added:

CC=clang meson -Dmax_lcores=128 -Dmachine=sandybridge -Ddisable_drivers=net/af_xdp,net/dpaa -Dexamples=l3fwd,l2fwd -Dwerror=false build2021

ninja -C build2021

The main meson options can be inspected and changed with "meson configure", for example:

meson configure -Dbuildtype=debug

meson configure -Denable_trace_fp=true

You can also read the meson_options.txt file.

Run helloworld

ubuntu@dpdk:/bak/linux/dpdk$ sudo ./build/examples/dpdk-helloworld -c3 -n4

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected static linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

hello from core 1

hello from core 0

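The options are:

- -c COREMASK: hexadecimal bitmask of the cores to run on. Core numbering can differ between platforms and should be determined beforehand.

- -n NUM: number of memory channels per processor socket.

- -b domain:bus:devid.func: blocklist of ports; prevents the EAL from using the specified PCI device.

- --use-device: use only the specified Ethernet devices. Takes comma-separated [domain:]bus:devid.func values; cannot be used together with -b.

- --socket-mem: memory to allocate from hugepages on specific sockets.

- -m MB: memory to allocate from hugepages, regardless of processor socket. --socket-mem is recommended instead.

- -r NUM: number of memory ranks.

- -v: display version information on startup.

- --huge-dir: directory where hugetlbfs is mounted.

- --file-prefix: prefix text used for hugepage filenames.

- --proc-type: type of the process instance.

- --xen-dom0: support running on Xen Domain0 without hugetlbfs.

- --vmware-tsc-map: use the VMware TSC map instead of native RDTSC.

- --base-virtaddr: specify the base virtual address.

- --vfio-intr: interrupt type to be used by VFIO (has no effect if VFIO is not supported).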
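The -c core mask is plain bit arithmetic: bit N set means lcore N is used. The Python sketch below converts between a core list and a mask; the helper names cores_to_mask and mask_to_cores are hypothetical and only illustrate the arithmetic, they are not DPDK functions.

```python
def cores_to_mask(cores):
    """Build a core mask: bit N is set when core N is in the list."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return mask

def mask_to_cores(mask):
    """List the core numbers whose bits are set in the mask."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]

print(hex(cores_to_mask([0, 1])))  # 0x3, as in "-c3" above
print(mask_to_cores(0xf))          # [0, 1, 2, 3]
print(mask_to_cores(0x30))         # [4, 5]
```

The same arithmetic applies to the -p port mask used by l2fwd and l3fwd later in this article.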

Writing a helloworld DPDK program against the DPDK libraries

mkdir examples/mytest && cd examples/mytest

cat << EOF | tee meson.build

project('hello', 'c')

dpdk = dependency('libdpdk')

sources = files('main.c')

executable('hello', sources, dependencies: dpdk)

EOF

cp ../helloworld/main.c .

export RTE_SDK=/bak/linux/dpdk

export PKG_CONFIG_PATH=/usr/local/lib/x86_64-linux-gnu/pkgconfig

export LD_LIBRARY_PATH=/usr/local/lib/x86_64-linux-gnu

sudo apt install numactl libnuma-dev re2c make cmake pkg-config -y

pkg-config --version

meson build

ninja -C build

sudo ./build/hello

$ cat main.c

/* SPDX-License-Identifier: BSD-3-Clause
 * Copyright(c) 2010-2014 Intel Corporation
 */

#include <stdio.h>
#include <string.h>
#include <stdint.h>
#include <errno.h>
#include <sys/queue.h>

#include <rte_memory.h>
#include <rte_launch.h>
#include <rte_eal.h>
#include <rte_per_lcore.h>
#include <rte_lcore.h>
#include <rte_debug.h>

/* Launch a function on lcore. 8< */
static int
lcore_hello(__rte_unused void *arg)
{
	unsigned lcore_id;
	lcore_id = rte_lcore_id();
	printf("hello from core %u\n", lcore_id);
	return 0;
}
/* >8 End of launching function on lcore. */

/* Initialization of Environment Abstraction Layer (EAL). 8< */
int
main(int argc, char **argv)
{
	int ret;
	unsigned lcore_id;

	ret = rte_eal_init(argc, argv);
	if (ret < 0)
		rte_panic("Cannot init EAL\n");
	/* >8 End of initialization of Environment Abstraction Layer */

	/* Launches the function on each lcore. 8< */
	RTE_LCORE_FOREACH_WORKER(lcore_id) {
		/* Simpler equivalent. 8< */
		rte_eal_remote_launch(lcore_hello, NULL, lcore_id);
		/* >8 End of simpler equivalent. */
	}

	/* call it on main lcore too */
	lcore_hello(NULL);
	/* >8 End of launching the function on each lcore. */

	rte_eal_mp_wait_lcore();

	/* clean up the EAL */
	rte_eal_cleanup();

	return 0;
}

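When a DPDK program is later built with meson, meson uses pkg-config by default to locate the DPDK libraries (an extra search directory can be passed, e.g. meson --pkg-config-path=/usr/local/lib64/pkgconfig). The DPDK install places libdpdk.pc in a directory such as /usr/local/lib/x86_64-linux-gnu/pkgconfig/, and that directory must be on pkg-config's search path.

# verify that the dpdk library exists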

sudo ldconfig

sudo ldconfig -p |grep librte

$ pkg-config --modversion libdpdk

21.08.0

# export PKG_CONFIG_PATH=/usr/local/lib/x86_64-linux-gnu/pkgconfig

$ pkg-config --variable pc_path pkg-config

/usr/local/lib/x86_64-linux-gnu/pkgconfig:/usr/local/lib/pkgconfig:/usr/local/share/pkgconfig:/usr/lib/x86_64-linux-gnu/pkgconfig:/usr/lib/pkgconfig:/usr/share/pkgconfig
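The lookup that pkg-config performs can be approximated as "walk the colon-separated search path and take the first directory containing libdpdk.pc". The Python sketch below illustrates only that idea; find_pc_file is a hypothetical helper, and real pkg-config also handles dependencies, variables, and sysroots that are ignored here.

```python
import os
import tempfile

def find_pc_file(name, search_path):
    """Return the first <dir>/<name>.pc found in a colon-separated path, else None."""
    for directory in search_path.split(":"):
        if not directory:
            continue
        candidate = os.path.join(directory, name + ".pc")
        if os.path.isfile(candidate):
            return candidate
    return None

# Demonstration with a temporary directory standing in for
# /usr/local/lib/x86_64-linux-gnu/pkgconfig.
with tempfile.TemporaryDirectory() as tmp:
    open(os.path.join(tmp, "libdpdk.pc"), "w").close()
    print(find_pc_file("libdpdk", "/nonexistent:" + tmp) is not None)  # True
    print(find_pc_file("libdpdk", "/nonexistent"))                     # None
```

On the machine from this article, passing the pc_path string printed above as search_path would be expected to locate libdpdk.pc in the first directory.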

This build is roughly equivalent to running:

cc -O3 -include rte_config.h -march=native -I/usr/local/include main.c -o mytest -L/usr/local/lib/x86_64-linux-gnu -Wl,--as-needed -lrte_node -lrte_graph -lrte_bpf -lrte_flow_classify -lrte_pipeline -lrte_table -lrte_port -lrte_fib -lrte_ipsec -lrte_vhost -lrte_stack -lrte_security -lrte_sched -lrte_reorder -lrte_rib -lrte_regexdev -lrte_rawdev -lrte_pdump -lrte_power -lrte_member -lrte_lpm -lrte_latencystats -lrte_kni -lrte_jobstats -lrte_ip_frag -lrte_gso -lrte_gro -lrte_eventdev -lrte_efd -lrte_distributor -lrte_cryptodev -lrte_compressdev -lrte_cfgfile -lrte_bitratestats -lrte_bbdev -lrte_acl -lrte_timer -lrte_hash -lrte_metrics -lrte_cmdline -lrte_pci -lrte_ethdev -lrte_meter -lrte_net -lrte_mbuf -lrte_mempool -lrte_rcu -lrte_ring -lrte_eal -lrte_telemetry -lrte_kvargs

If a dynamically linked DPDK program cannot find the NIC, add something like the following to the link options:

-L/usr/local/lib64 -lrte_net_ixgbe -lrte_mempool_ring

How to debug it

How can the DPDK program above be debugged with gdb?

meson --reconfigure -Dbuildtype=debug -Denable_trace_fp=true build

ninja -C build

sudo cgdb --args ./build/hello -c 1

Running l2fwd

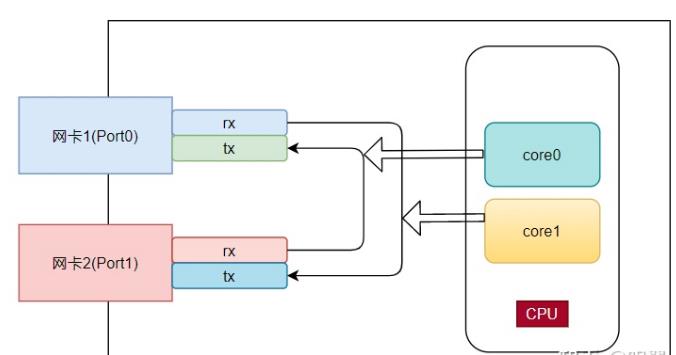

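Run l2fwd with 'sudo ./build/l2fwd -c 0x3 -n4 -- -q 1 -p 0x3':

- -c 0x3 selects two CPU cores, 0 and 1 (binary 11 = 0x3)

- -p 0x3 is the port mask (binary 11), so only 2 ports are used. A port is one DPDK NIC; it has an RX and a TX queue, and each queue is bound to a CPU core

- -q 1 means each CPU core serves one RX/TX queue

- Note that two NICs are needed here; they forward to each other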

Lcore 0: RX port 0 TX port 1

Lcore 1: RX port 1 TX port 0
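The "RX port 0 TX port 1" mapping follows from the port mask: enabled ports are paired up and each port transmits what its partner receives. The Python sketch below reproduces that pairing under the assumption that adjacent enabled ports are paired and an odd leftover port forwards to itself; l2fwd_pairs is a hypothetical name, not a function from the l2fwd source.

```python
def l2fwd_pairs(portmask, nb_ports=32):
    """Map each enabled RX port to its TX port by pairing adjacent enabled ports."""
    enabled = [p for p in range(nb_ports) if (portmask >> p) & 1]
    dst = {}
    for i in range(0, len(enabled) - 1, 2):
        a, b = enabled[i], enabled[i + 1]
        dst[a], dst[b] = b, a
    if len(enabled) % 2:
        # Assumption: an unpaired last port sends packets back out of itself.
        last = enabled[-1]
        dst[last] = last
    return dst

print(l2fwd_pairs(0x3))  # {0: 1, 1: 0}
print(l2fwd_pairs(0x1))  # {0: 0}
```

For -p 0x3 this gives port 0 to port 1 and port 1 to port 0, which matches the two Lcore lines above.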

cd examples/l2fwd

ubuntu@dpdk:/bak/linux/dpdk/examples/l2fwd$ sudo ./build/l2fwd -c 0x3 -n4 -- -q 1 -p 0x3

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected shared linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

MAC updating enabled

Lcore 0: RX port 0 TX port 1

Lcore 1: RX port 1 TX port 0

Initializing port 0... done:

Port 0, MAC address: 52:54:00:14:68:87

Initializing port 1... done:

Port 1, MAC address: 52:54:00:41:33:73

...

Port statistics ====================================

Statistics for port 0 ------------------------------

Packets sent: 0

Packets received: 0

Packets dropped: 0

Statistics for port 1 ------------------------------

Packets sent: 0

Packets received: 0

Packets dropped: 0

Aggregate statistics ===============================

Total packets sent: 0

Total packets received: 0

Total packets dropped: 0

====================================================

1 port, 1 queue: sudo ./build/l2fwd -c0x1 -n4 -- -p0x1

1 port, 4 queues: sudo ./build/l2fwd -c0x1 -n4 -- -p0x1 -q4

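Running l3fwd on single-queue e1000e NICs

1 port, 1 queue: sudo ./build/l3fwd -l 0 -n4 -- -p0x1 -P --config="(0,0,0)"

1 port, 2 queues: sudo ./build/l3fwd -l 0,1 -n4 -- -p0x1 -P --config="(0,0,0)(0,1,1)"

Two ports forwarding to each other: sudo ./build/l3fwd -l 0,1,2,3 -n4 -- -p0xf -P --config="(0,0,0)(0,1,1)(1,0,2)(1,1,3)"

There are three e1000 NICs in total. The first is used for management; the other two are rebound to the UIO driver (igb_uio). If DPDK was installed from the deb package, the tool should be /usr/share/dpdk/usertools/dpdk-devbind.py; if DPDK was installed from source, it should be /usr/local/bin/dpdk-devbind.py: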

sudo /usr/local/bin/dpdk-devbind.py -u 0000:02:00.0

sudo /usr/local/bin/dpdk-devbind.py -b igb_uio 0000:02:00.0

sudo /usr/local/bin/dpdk-devbind.py -u 0000:03:00.0

sudo /usr/local/bin/dpdk-devbind.py -b igb_uio 0000:03:00.0

$ sudo /usr/local/bin/dpdk-devbind.py --status |grep 0000

0000:02:00.0 '82574L Gigabit Network Connection 10d3' drv=igb_uio unused=e1000e,vfio-pci,uio_pci_generic

0000:03:00.0 '82574L Gigabit Network Connection 10d3' drv=igb_uio unused=e1000e,vfio-pci,uio_pci_generic

0000:01:00.0 '82574L Gigabit Network Connection 10d3' if=enp1s0 drv=e1000e unused=igb_uio,vfio-pci,uio_pci_generic *Active*
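When scripting this step, it can help to confirm programmatically that the intended devices ended up on igb_uio. The Python sketch below parses status lines in the format shown above; parse_devbind_status is a hypothetical helper and assumes the "drv=" field layout seen in this output rather than any documented, stable format.

```python
import re

def parse_devbind_status(text):
    """Map PCI address -> current driver (None when the line has no drv= field)."""
    drivers = {}
    for line in text.splitlines():
        m = re.match(r"^([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s", line)
        if not m:
            continue
        drv = re.search(r"\bdrv=(\S+)", line)
        drivers[m.group(1)] = drv.group(1) if drv else None
    return drivers

# Sample copied from the status output in this article.
STATUS = """\
0000:02:00.0 '82574L Gigabit Network Connection 10d3' drv=igb_uio unused=e1000e,vfio-pci,uio_pci_generic
0000:03:00.0 '82574L Gigabit Network Connection 10d3' drv=igb_uio unused=e1000e,vfio-pci,uio_pci_generic
0000:01:00.0 '82574L Gigabit Network Connection 10d3' if=enp1s0 drv=e1000e unused=igb_uio,vfio-pci,uio_pci_generic *Active*
"""

bound = parse_devbind_status(STATUS)
print(sorted(addr for addr, drv in bound.items() if drv == "igb_uio"))
# ['0000:02:00.0', '0000:03:00.0']
```

The management NIC 0000:01:00.0 stays on the kernel e1000e driver, so it is not in the printed list.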

cd /bak/linux/dpdk/examples/l3fwd

export RTE_SDK=/bak/linux/dpdk

export RTE_TARGET=x86_64-native-linuxapp-gcc

export EXTRA_CFLAGS="-ggdb -ffunction-sections -O0"

make

ls /bak/linux/dpdk/examples/l3fwd/build/l3fwd

ubuntu@dpdk:/bak/linux/dpdk/examples/l3fwd$ sudo ./build/l3fwd -l 0,1,2,3 -n 4 -- -p 0x3 -L --config="(0,0,0)(0,1,1)(1,0,2)(1,1,3)"

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected shared linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

Initializing port 0 ... Creating queues: nb_rxq=2 nb_txq=4... Port 0 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Ethdev port_id=0 nb_rx_queues=2 > 1

EAL: Error - exiting with code: 1

Cause: Cannot configure device: err=-22, port=0

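- -p 0x3: use only two DPDK NICs (ports 0 and 1)

- -c f: f is binary 1111, a core mask that allows only CPU cores 0, 1, 2 and 3. To allow only cores 4 and 5, for example, the mask would be 0x30. In practice the choice of cores should also take NUMA into account. Instead of a mask with -c, cores can be listed directly with -l, e.g. -l 1-4. Also note that core 0 is usually the main (master) lcore, so it is generally not used for forwarding

- --config: a DPDK NIC is a port; a port has RX and TX queues, and each (port,queue,lcore) tuple binds a queue to a CPU core

The examples can still be built with a makefile, as done above.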
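The --config string is a list of (port,queue,lcore) tuples, and the number of RX queues requested on a port follows from the highest queue index used for it. The Python sketch below parses the string and derives that count; the helper names are hypothetical, and the "highest queue id plus one" rule is an assumption about how l3fwd sizes its queues.

```python
import re

def parse_l3fwd_config(config):
    """Parse '(port,queue,lcore)(...)' into a list of integer tuples."""
    return [tuple(int(x) for x in m.groups())
            for m in re.finditer(r"\((\d+),(\d+),(\d+)\)", config)]

def rx_queues_per_port(config):
    """Return {port: number of RX queues requested}, assuming max queue id + 1."""
    counts = {}
    for port, queue, _lcore in parse_l3fwd_config(config):
        counts[port] = max(counts.get(port, 0), queue + 1)
    return counts

print(rx_queues_per_port("(0,0,0)(0,1,1)(1,0,2)(1,1,3)"))  # {0: 2, 1: 2}
print(rx_queues_per_port("(0,0,0)(1,0,0)"))                # {0: 1, 1: 1}
```

The first configuration asks for two RX queues per port, which a single-queue NIC rejects with "nb_rx_queues=2 > 1"; the second stays within one queue per port.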

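Note: when experimenting with a NetXtreme BCM5719 NIC, l3fwd fails to detect the DPDK port with the error below (Cause: check_port_config failed). With the QEMU-emulated e1000e NIC used here, the error is instead "Ethdev port_id=0 nb_rx_queues=2 > 1".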

$ lspci -nnn |grep Eth |grep 02:00.1

02:00.1 Ethernet controller [0200]: Broadcom Inc. and subsidiaries NetXtreme BCM5719 Gigabit Ethernet PCIe [14e4:1657] (rev 01)

port 0 is not present on the board

EAL: Error - exiting with code: 1

Cause: check_port_config failed

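The error "Ethdev port_id=0 nb_rx_queues=2 > 1" occurs because e1000e is a single-queue NIC, so a multi-queue NIC (for example one provided by VMware) avoids it. Besides switching NICs, this page (http://mails.dpdk.org/archives/dev/2014-May/002991.html) offers another solution: let one CPU core serve queue 0 of several NICs. For example, core 0 below handles queue 0 on both port 0 and port 1: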

ubuntu@dpdk:/bak/linux/dpdk/examples/l3fwd$ sudo ./build/l3fwd -l 0 -n4 -- -p0x3 -P --config="(0,0,0)(1,0,0)"

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected shared linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

Neither LPM, EM, or FIB selected, defaulting to LPM

Initializing port 0 ... Creating queues: nb_rxq=1 nb_txq=1... Port 0 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Address:52:54:00:14:68:87, Destination:02:00:00:00:00:00, Allocated mbuf pool on socket 0

LPM: Adding route 198.18.0.0 / 24 (0)

LPM: Adding route 198.18.1.0 / 24 (1)

LPM: LPM memory allocation failed

EAL: Error - exiting with code: 1

Cause: Unable to create the l3fwd LPM table on socket 0

The error 'Cause: Unable to create the l3fwd LPM table on socket 0' is caused by a shortage of hugepages, so add more hugepages and try again:

echo 128 |sudo tee /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

cat /proc/meminfo |grep HugePages_Total

ubuntu@dpdk:/bak/linux/dpdk/examples/l3fwd$ sudo ./build/l3fwd -l 0 -n4 -- -p0x3 -P --config="(0,0,0)(1,0,0)" --parse-ptype

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected shared linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

soft parse-ptype is enabled

Neither LPM, EM, or FIB selected, defaulting to LPM

Initializing port 0 ... Creating queues: nb_rxq=1 nb_txq=1... Port 0 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Address:52:54:00:14:68:87, Destination:02:00:00:00:00:00, Allocated mbuf pool on socket 0

LPM: Adding route 198.18.0.0 / 24 (0)

LPM: Adding route 198.18.1.0 / 24 (1)

LPM: Adding route 2001:200:: / 64 (0)

LPM: Adding route 2001:200:0:1:: / 64 (1)

txq=0,0,0

Initializing port 1 ... Creating queues: nb_rxq=1 nb_txq=1... Port 1 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Address:52:54:00:41:33:73, Destination:02:00:00:00:00:01, txq=0,0,0

Initializing rx queues on lcore 0 ... rxq=0,0,0 rxq=1,0,0

Port 0: softly parse packet type info

Port 1: softly parse packet type info

Checking link status.....done

Port 0 Link up at 1 Gbps FDX Autoneg

Port 1 Link up at 1 Gbps FDX Autoneg

L3FWD: entering main loop on lcore 0

L3FWD: -- lcoreid=0 portid=0 rxqueueid=0

L3FWD: -- lcoreid=0 portid=1 rxqueueid=0

This time it works. Two LPM routes were added, 198.18.0.0/24 and 198.18.1.0/24, and core 0 polls the RX queues of ports 0 and 1 (rxqueueid=0, because e1000e supports only a single queue):

LPM: Adding route 198.18.0.0 / 24 (0)

LPM: Adding route 198.18.1.0 / 24 (1)

L3FWD: -- lcoreid=0 portid=0 rxqueueid=0

L3FWD: -- lcoreid=0 portid=1 rxqueueid=0
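LPM stands for longest prefix match: a destination address is forwarded to the output port of the most specific route that contains it. The Python sketch below illustrates the lookup with the two IPv4 routes from the log; it is a linear scan for illustration only, not the table structure that DPDK's LPM library uses, and lpm_lookup is a hypothetical name.

```python
import ipaddress

# The two IPv4 routes from the l3fwd log: (prefix, output port).
ROUTES = [
    (ipaddress.ip_network("198.18.0.0/24"), 0),
    (ipaddress.ip_network("198.18.1.0/24"), 1),
]

def lpm_lookup(dst, routes=ROUTES):
    """Return the output port of the longest matching prefix, or None."""
    addr = ipaddress.ip_address(dst)
    best = None
    for net, port in routes:
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, port)
    return None if best is None else best[1]

print(lpm_lookup("198.18.0.5"))  # 0
print(lpm_lookup("198.18.1.7"))  # 1
print(lpm_lookup("192.18.1.1"))  # None
```

An address outside both prefixes, such as 192.18.1.1, matches no route in this table.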

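To test more complex scenarios, switch to multi-queue NICs. A realistic test setup may involve three machines: the machine running l3fwd acts as a router with two NICs, and two more machines are needed to test its forwarding.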

https://blog.csdn.net/yk_wing4/article/details/104467133

https://redwingz.blog.csdn.net/article/details/107094690

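How to run l3fwd is clear enough by now; that experiment is too much trouble, so I did not take it further.

The two pages above test l3fwd as a router. Alternatively, two DPDK nodes can be connected back to back, with one node running pktgen to generate packets; see: https://blog.csdn.net/qq_45632433/article/details/103043957

Overall, the use cases are: l2fwd as a switch, l3fwd as a router.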

Running l3fwd on multi-queue virtio NICs

As mentioned in the previous section, the e1000e NIC supports only a single queue, so it can run:

echo 128 |sudo tee /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

sudo ./build/l3fwd -l 0 -n4 -- -p0x3 -P --config="(0,0,0)(1,0,0)" --parse-ptype

but it cannot run:

echo 128 |sudo tee /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

sudo ./build/l3fwd -l 0,1,2,3 -n 4 -- -p 0x3 -L --config="(0,0,0)(0,1,1)(1,0,2)(1,1,3)"

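To use multi-queue NICs while staying on KVM instead of switching to VMware, virtio NICs are an alternative to e1000e. DPDK does ship a virtio driver (ls drivers/net/virtio/). Edit the domain (sudo virsh edit dpdk) to use multi-queue virtio: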

<interface type='network'>

<mac address='52:54:00:14:68:87'/>

<source network='default'/>

<model type='virtio'/>

<driver name='vhost' queues='4'/>

<address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>

</interface>

<interface type='network'>

<mac address='52:54:00:7c:47:73'/>

<source network='cloud'/>

<model type='virtio'/>

<driver name='vhost' queues='4'/>

<address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>

</interface>

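Note: the number of queues above must be set to 4. If another value such as 2 is used, the NUMBER_PORT_USED parameter in the l3fwd source (4 by default) must be changed as well.

After restarting the VM (sudo virsh destroy dpdk && sudo virsh start dpdk), and before binding the NICs to the UIO driver, the following command confirms that the queue count is now 4.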

ubuntu@dpdk:~$ ethtool -l enp3s0 |grep Combined

Combined: 4

Combined: 4

If the queue count is not 4 and NUMBER_PORT_USED in the source was not changed either, l3fwd reports the following error:

Ethdev port_id=0 nb_tx_queues=4 > 2

The next error is:

Ethdev port_id=0 requested Rx offloads 0xe doesn't match Rx offloads capabilities 0x2a1d in rte_eth_dev_configure()

That is because the virtio driver does not support DEV_RX_OFFLOAD_CHECKSUM; disabling DEV_RX_OFFLOAD_CHECKSUM in the l3fwd source fixes it.

sed -i '/DEV_RX_OFFLOAD_CHECKSUM/d' /bak/linux/dpdk/examples/l3fwd/main.c

cd /bak/linux/dpdk/examples/l3fwd

make

Then another error appears:

virtio_dev_configure(): Unsupported Rx multi queue mode 1

This page (http://patchwork.dpdk.org/project/dpdk/patch/1570624330-19119-3-git-send-email-arybchenko@solarflare.com/ ) explains that virtio rejects this multi-queue mode for the following reason.

> Virtio can distribute Rx packets across multi-queue, but there is

> no controls (algorithm, redirection table, hash function) except

> number of Rx queues and ETH_MQ_RX_NONE is the best fit meaning

> no method is enforced on how to route packets to MQs.

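So it looks as if virtio offers no control over how packets are spread across its queues. But after forcing ETH_MQ_RX_NONE as the message suggests, it actually worked, cheers 😃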

ubuntu@dpdk:/bak/linux/dpdk/examples/l3fwd$ git diff

diff --git a/examples/l3fwd/main.c b/examples/l3fwd/main.c

index 00ac267af1..ca6d41b06c 100644

--- a/examples/l3fwd/main.c

+++ b/examples/l3fwd/main.c

@@ -123,7 +123,6 @@ static struct rte_eth_conf port_conf = {

.mq_mode = ETH_MQ_RX_RSS,

.max_rx_pkt_len = RTE_ETHER_MAX_LEN,

.split_hdr_size = 0,

- .offloads = DEV_RX_OFFLOAD_CHECKSUM,

},

.rx_adv_conf = {

.rss_conf = {

@@ -1044,6 +1043,8 @@ l3fwd_poll_resource_setup(void)

if (dev_info.max_rx_queues == 1)

local_port_conf.rxmode.mq_mode = ETH_MQ_RX_NONE;

+ else

+ local_port_conf.rxmode.mq_mode = ETH_MQ_RX_NONE;

if (local_port_conf.rx_adv_conf.rss_conf.rss_hf !=

port_conf.rx_adv_conf.rss_conf.rss_hf) {

Here is the output:

ubuntu@dpdk:/bak/linux/dpdk/examples/l3fwd$ sudo ./build/l3fwd -l 0,1,2,3 -n 4 -- -p 0x3 -L --config="(0,0,0)(0,1,1)(1,0,2)(1,1,3)" --parse-ptype

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected shared linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_virtio (1af4:1041) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_virtio (1af4:1041) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

soft parse-ptype is enabled

Initializing port 0 ... Creating queues: nb_rxq=2 nb_txq=4... Port 0 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Address:52:54:00:14:68:87, Destination:02:00:00:00:00:00, Allocated mbuf pool on socket 0

LPM: Adding route 198.18.0.0 / 24 (0)

LPM: Adding route 198.18.1.0 / 24 (1)

LPM: Adding route 2001:200:: / 64 (0)

LPM: Adding route 2001:200:0:1:: / 64 (1)

txq=0,0,0 txq=1,1,0 txq=2,2,0 txq=3,3,0

Initializing port 1 ... Creating queues: nb_rxq=2 nb_txq=4... Port 1 modified RSS hash function based on hardware support,requested:0xa38c configured:0

Address:52:54:00:7C:47:73, Destination:02:00:00:00:00:01, txq=0,0,0 txq=1,1,0 txq=2,2,0 txq=3,3,0

Initializing rx queues on lcore 0 ... rxq=0,0,0

Initializing rx queues on lcore 1 ... rxq=0,1,0

Initializing rx queues on lcore 2 ... rxq=1,0,0

Initializing rx queues on lcore 3 ... rxq=1,1,0

Port 0: softly parse packet type info

Port 0: softly parse packet type info

Port 1: softly parse packet type info

Port 1: softly parse packet type info

Checking link statusdone

Port 0 Link up at Unknown FDX Autoneg

Port 1 Link up at Unknown FDX Autoneg

L3FWD: entering main loop on lcore 1

L3FWD: -- lcoreid=1 portid=0 rxqueueid=1

L3FWD: entering main loop on lcore 2

L3FWD: -- lcoreid=2 portid=1 rxqueueid=0

L3FWD: entering main loop on lcore 3

L3FWD: -- lcoreid=3 portid=1 rxqueueid=1

L3FWD: entering main loop on lcore 0

L3FWD: -- lcoreid=0 portid=0 rxqueueid=0

Appendix: the commands for a quick test:

cd /bak/linux/dpdk-kmods/linux/igb_uio

sudo modprobe uio_pci_generic

sudo insmod igb_uio.ko

sudo /usr/local/bin/dpdk-devbind.py -u 0000:02:00.0

sudo /usr/local/bin/dpdk-devbind.py -u 0000:03:00.0

sudo /usr/local/bin/dpdk-devbind.py -b igb_uio 0000:02:00.0

sudo /usr/local/bin/dpdk-devbind.py -b igb_uio 0000:03:00.0

sudo /usr/local/bin/dpdk-devbind.py --status

cd /bak/linux/dpdk/examples/l3fwd

echo 128 |sudo tee /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

sudo ./build/l3fwd -l 0,1,2,3 -n 4 -- -p 0x3 -L --config="(0,0,0)(0,1,1)(1,0,2)(1,1,3)" --parse-ptype

sudo ./build/l3fwd -l 0 -n4 -- -p0x3 -P --config="(0,0,0)(1,0,0)" --parse-ptype

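Running l3fwd with three VMs and multi-queue virtio NICs

The dpdk VM acts as the router in the diagram. It has two DPDK NICs (port0 attached to default/virbr1, port1 attached to cloud/virbr0).

Create VM pc_0 with 1 GB of memory and a 4 GB disk, attached to default/virbr1.

Create VM pc_1 with 1 GB of memory and a 4 GB disk, attached to cloud/virbr0.

Note: building the topology below with three VMs is slightly problematic, because 192.168.101.202 already has a gateway on the physical host, and now the dpdk VM is supposed to act as the gateway too, which is awkward to arrange.

Reference: https://blog.csdn.net/yk_wing4/article/details/104467133

However, connectivity did not seem to work.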

+---------+

| pc0 |

+---------+

| port : enp1s0 -> virbr1 (default)

| mac : 52:54:00:b1:51:84

| ip : 192.18.0.1/24

|

|

| dpdk port id: 0

| mac : 52:54:00:14:68:87

+---------+

| router |

+---------+

| dpdk port id: 1

| mac : 52:54:00:7c:47:73

|

|

| port : enp1s0 -> virbr0 (cloud)

| mac : 52:54:00:69:59:58

| ip : 192.18.1.1

+---------+

| pc1 |

+---------+

The goal now is to ping pc1 from pc0.

1, On pc0:

DPDK's l3fwd does not handle ARP, so a static neighbor entry (lladdr) has to be added by hand.

sudo ifconfig enp1s0 0.0.0.0

sudo ifconfig enp1s0 192.18.0.1/24

sudo ip r a default dev enp1s0

sudo ip n a 192.18.1.1 dev enp1s0 lladdr 52:54:00:14:68:87

2, On pc1:

sudo ifconfig enp1s0 0.0.0.0

sudo ifconfig enp1s0 192.18.1.1/24

sudo ip r a default dev enp1s0

sudo ip n a 192.18.0.1 lladdr 52:54:00:7c:47:73 dev enp1s0

3, On the router:

First, the routing table is hard-coded in ipv4_l3fwd_route_array in main.c (used by l3fwd_lpm.c).

const struct ipv4_l3fwd_route ipv4_l3fwd_route_array[] = {


{RTE_IPV4(198, 168, 101, 0), 24, 0},

{RTE_IPV4(198, 168, 100, 0), 24, 1},

# -l 2,3

# use core 2 and 3

# -p 0x3

# port mask 0x3 for port0 and port1

# --config="(0,0,2)(1,0,3)"

# port0.queue0 at cpu2

# port1.queue0 at cpu3

# --eth-dest=0,52:54:00:b1:51:84

# for port0 output, the destination mac address set to 52:54:00:b1:51:84

# --eth-dest=1,52:54:00:69:59:58

# for port1 output, the destination mac address set to 52:54:00:69:59:58

sudo ./build/l3fwd -l 2,3 -n 4 -- -p 0x3 -L --config="(0,0,2)(1,0,3)" --eth-dest=0,52:54:00:b1:51:84 --eth-dest=1,52:54:00:69:59:58 --parse-ptype

...

LPM: Adding route 198.18.0.0 / 24 (0)

LPM: Adding route 198.18.1.0 / 24 (1)

...

# router's port0(52:54:00:14:68:87) -> pc0

Address:52:54:00:14:68:87, Destination:52:54:00:B1:51:84, Allocated mbuf pool on socket 0

# router's port1(52:54:00:7C:47:73) -> pc1

Address:52:54:00:7C:47:73, Destination:52:54:00:69:59:58, txq=2,0,0 txq=3,1,0

...

Initializing rx queues on lcore 2 ... rxq=0,0,0

Initializing rx queues on lcore 3 ... rxq=1,0,0

...

L3FWD: entering main loop on lcore 3

L3FWD: -- lcoreid=3 portid=1 rxqueueid=0

L3FWD: entering main loop on lcore 2

L3FWD: -- lcoreid=2 portid=0 rxqueueid=0

Run ping 192.18.1.1 on pc0 to test.

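Running TestPMD

Reference: https://blog.csdn.net/qq_15437629/article/details/86417895

TestPMD is a reference application distributed with the DPDK package. Its main purpose is to forward packets between the Ethernet ports of a network interface. It also lets users try out features of different drivers, such as RSS, filters, and Intel® Ethernet Flow Director.

# Run testpmd in interactive mode. testpmd automatically detects the two igb_uio NICs; core 1 handles the command line, and cores 2 and 3 are available for forwarding.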

ubuntu@dpdk:/bak/linux/dpdk$ sudo ./build/app/dpdk-testpmd -l 1,2,3 -n 4 -- -i --total-num-mbufs=2048

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Detected static linkage of DPDK

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available 1048576 kB hugepages reported

EAL: VFIO support initialized

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:02:00.0 (socket 0)

EAL: Probe PCI driver: net_e1000_em (8086:10d3) device: 0000:03:00.0 (socket 0)

TELEMETRY: No legacy callbacks, legacy socket not created

Interactive-mode selected

testpmd: create a new mbuf pool <mb_pool_0>: n=2048, size=2176, socket=0

testpmd: preferred mempool ops selected: ring_mp_mc

Configuring Port 0 (socket 0)

Port 0: 52:54:00:14:68:87

Configuring Port 1 (socket 0)

Port 1: 52:54:00:41:33:73

Checking link statuses...

Done

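# 'show config fwd' shows the forwarding configuration. In the default mode here, core 2 polls packets on port P=0 and forwards them to port P=1, and does the same in the other direction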

testpmd> show config fwd

io packet forwarding - ports=2 - cores=1 - streams=2 - NUMA support enabled, MP allocation mode: native

Logical Core 2 (socket 0) forwards packets on 2 streams:

RX P=0/Q=0 (socket 0) -> TX P=1/Q=0 (socket 0) peer=02:00:00:00:00:01

RX P=1/Q=0 (socket 0) -> TX P=0/Q=0 (socket 0) peer=02:00:00:00:00:00

# the start command begins forwarding

testpmd> start

# Check whether packets are being forwarded between the ports; this shows the statistics of all ports.

testpmd> show port stats all

######################## NIC statistics for port 0 ########################

RX-packets: 1254 RX-missed: 0 RX-bytes: 99224

RX-errors: 0

RX-nombuf: 0

TX-packets: 6 TX-errors: 0 TX-bytes: 1125

Throughput (since last show)

Rx-pps: 0 Rx-bps: 304

Tx-pps: 0 Tx-bps: 48

############################################################################

######################## NIC statistics for port 1 ########################

RX-packets: 1170 RX-missed: 0 RX-bytes: 93782

RX-errors: 0

RX-nombuf: 0

TX-packets: 90 TX-errors: 0 TX-bytes: 6231

Throughput (since last show)

Rx-pps: 0 Rx-bps: 48

Tx-pps: 0 Tx-bps: 288

############################################################################

############################################################################

# stop forwarding

testpmd> stop

Installing Pktgen

git clone https://github.com/pktgen/Pktgen-DPDK.git

cd /bak/linux/Pktgen-DPDK/

export PKG_CONFIG_PATH=/usr/local/lib/x86_64-linux-gnu/pkgconfig

meson build

ninja -C build

sudo ninja -C build install

Learning to write code with the DPDK libraries

See: Cloud network performance testing workflow - https://blog.csdn.net/u014389734/article/details/118028026

DPDK in practice: skeleton (basicfwd) - https://blog.csdn.net/pangyemeng/article/details/78226434

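Update 2021-09-23 - running the DPDK helloworld in a VMware VM

1, Shut down the existing o7k VM (https://blog.csdn.net/quqi99/article/details/97622336) and add two more NICs in the VMware GUI, giving three e1000 NICs in total (check with lspci |grep -i eth). The 82545 is an e1000 NIC and does not support multiple queues, and the free VMware Player does not seem to offer the multi-queue vmxnet3 NIC.

2, Set the VM's CPU count to 4.

3, A VMware VM exposes the same CPU as the physical host by default; use "cat /proc/cpuinfo |grep pdpe1gb" to confirm that the CPU exposes the pdpe1gb (1G hugepage) flag that DPDK can use.

4, Adjust the netplan file (/etc/netplan/00-installer-config.yaml) to match the actual names of the three NICs, then run 'netplan apply'.

5, Edit /etc/default/grub, then run 'update-grub'.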

GRUB_CMDLINE_LINUX="default_hugepagesz=2M hugepagesz=2M hugepages=64 isolcpus=1-3"

6, Run 'reboot' to restart the VM, then verify that the hugepages took effect

cat /proc/meminfo |grep -i hugepages

7, Build the DPDK source

sudo apt install linux-headers-$(uname -r) libpcap-dev make gcc -y

sudo mkdir -p /bak/linux && sudo chown -R $USER /bak/linux/ && cd /bak/linux

git clone http://dpdk.org/git/dpdk-kmods

cd dpdk-kmods/linux/igb_uio

make

sudo modprobe uio_pci_generic

sudo insmod igb_uio.ko

#git clone https://github.com/DPDK/dpdk.git

wget https://github.com/DPDK/dpdk/archive/refs/tags/v21.08.tar.gz

tar -xf v21.08.tar.gz && mv dpdk-21.08 dpdk

cd /bak/linux/dpdk

sudo apt install python3-pip re2c numactl libnuma-dev -y

# NOTE: must use 'sudo' to avoid 'No module named ninja' when running 'ninja install'

sudo pip3 install meson ninja pyelftools

#/usr/local/bin/meson -Dexamples=all -Dbuildtype=debug -Denable_trace_fp=true build

#/usr/local/bin/meson --reconfigure -Dexamples=all -Dbuildtype=debug -Denable_trace_fp=true build

/usr/local/bin/meson -Dexamples=helloworld build

#/usr/local/bin/ninja -t clean

/usr/local/bin/ninja -C build -j4

sudo /usr/local/bin/ninja -C build install

sudo ./build/examples/dpdk-helloworld -c3 -n4

ubuntu@o7k:/bak/linux/dpdk$ sudo ./build/examples/dpdk-helloworld -c3 -n4
EAL: Detected 4 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Detected static linkage of DPDK
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available 1048576 kB hugepages reported
EAL: VFIO support initialized
TELEMETRY: No legacy callbacks, legacy socket not created
hello from core 1
hello from core 0

Reference

[1] https://www.sdnlab.com/community/article/dpdk/908

[2] https://dpdk-docs.readthedocs.io/en/latest/linux_gsg/enable_func.html#enabling-additional-functionality

[3] https://feishujun.blog.csdn.net/article/details/113665380

[4] https://cxyzjd.com/article/u012570105/82594089

[5] https://blog.csdn.net/linggang_123/article/details/114137361
