ROS实验笔记之——SLAM无人驾驶初入门

Posted gwpscut

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了ROS实验笔记之——SLAM无人驾驶初入门相关的知识,希望对你有一定的参考价值。

最近想学习一下无人驾驶SLAM方面的内容

代码测试

这里先基于kitti数据集,进行测试。之前博客中已经介绍过kitti数据集了。本博文就用这个数据集来进行各种经典方法的复现

The KITTI Vision Benchmark Suite http://www.cvlibs.net/datasets/kitti/eval_odometry.php

http://www.cvlibs.net/datasets/kitti/eval_odometry.php

VINS-FUSION

VINS-Fusion demo

把vins-mono也配置一下好了~

主要cm的时候会报错,把里面cmakelist的c++11改为14即可

这样可以避免出错,但好像运行会报错

试试改为ceres-solver-1.14.0。再编译。发现还是不可以。。。。重装系统看看。

在另外一台电脑上尝试了不行,然后把vins-fusion也编译了一下,然后运行过vins-fusion后就可以了???再运行好就work了。。。奇怪。。。。

最终发现问题了,要按下面顺序运行才可以。。。cao

这个原因非常的迷惑。。。。。

roslaunch vins_estimator vins_rviz.launch

roslaunch vins_estimator euroc.launch

vins系列真的非常丰富,下面还有个co-vins

https://github.com/qintonguav/Co-VINS https://github.com/qintonguav/Co-VINS

https://github.com/qintonguav/Co-VINS

A-LOAM

之前博客《学习笔记之——激光雷达SLAM(LOAM系列的复现与学习)》已经配置过A-LOAM了。本博文来跑一下其kitti数据集

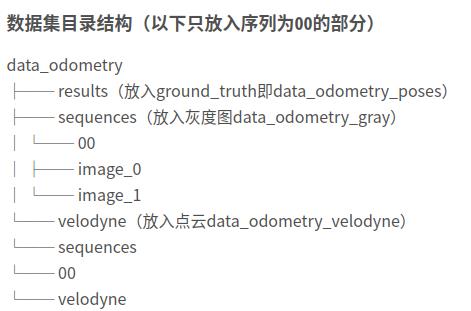

注意,对于下载的数据集需要按以下的形式打包放好

data

|----poses

| |----00.txt

|----sequences

| |----00

| | |----image_0

| | |----image_1

| | |----velodyne

| | |----time.txt

然后将kitti的launch修改为:

<launch>

<node name="kittiHelper" pkg="aloam_velodyne" type="kittiHelper" output="screen">

<!-- <param name="dataset_folder" type="string" value="/data/KITTI/odometry/" /> -->

<param name="dataset_folder" type="string" value="/home/kwanwaipang/dataset/kitti/data/" />

<param name="sequence_number" type="string" value="00" />

<!-- <param name="to_bag" type="bool" value="false" /> -->

<param name="to_bag" type="bool" value="true" />

<!-- <param name="output_bag_file" type="string" value="/tmp/kitti.bag" /> replace with your output folder -->

<param name="output_bag_file" type="string" value="/home/kwanwaipang/dataset/kitti/kitti_00.bag" /> <!-- replace with your output folder -->

<param name="publish_delay" type="int" value="1" />

</node>

</launch>好坑。。上面那样的list是不对的。要改为下面这样才对(建议直接看源代码)

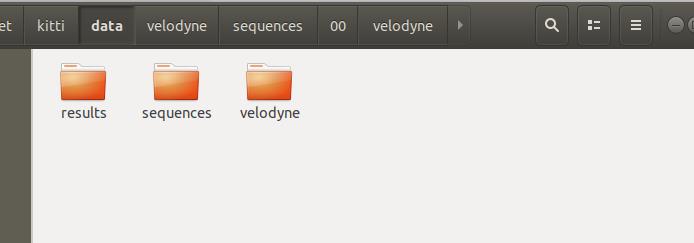

运行效果如下图所示

视频连接:

aloam

LIO-SAM

https://github.com/TixiaoShan/LIO-SAM https://github.com/TixiaoShan/LIO-SAM

https://github.com/TixiaoShan/LIO-SAM

有可能出现缺乏依赖libmetis.so而报错。运行下面命令安装即可

sudo apt-get install -y libmetis-dev

如若需要允许kitti数据集,则需要将config里面的参数文件修改如下:

lio_sam:

# Topics

pointCloudTopic: "points_raw" # Point cloud data

# imuTopic: "imu_raw" # IMU data

imuTopic: "imu_correct" # IMU data

odomTopic: "odometry/imu" # IMU pre-preintegration odometry, same frequency as IMU

gpsTopic: "odometry/gpsz" # GPS odometry topic from navsat, see module_navsat.launch file

# Frames

lidarFrame: "base_link"

baselinkFrame: "base_link"

odometryFrame: "odom"

mapFrame: "map"

# GPS Settings

useImuHeadingInitialization: true # if using GPS data, set to "true"

useGpsElevation: false # if GPS elevation is bad, set to "false"

gpsCovThreshold: 2.0 # m^2, threshold for using GPS data

poseCovThreshold: 25.0 # m^2, threshold for using GPS data

# Export settings

savePCD: false # https://github.com/TixiaoShan/LIO-SAM/issues/3

savePCDDirectory: "/Downloads/LOAM/" # in your home folder, starts and ends with "/". Warning: the code deletes "LOAM" folder then recreates it. See "mapOptimization" for implementation

# Sensor Settings

sensor: velodyne # lidar sensor type, either 'velodyne' or 'ouster'

N_SCAN: 64 #16 # number of lidar channel (i.e., 16, 32, 64, 128)

Horizon_SCAN: 1800 # lidar horizontal resolution (Velodyne:1800, Ouster:512,1024,2048)

downsampleRate: 2 #1 # default: 1. Downsample your data if too many points. i.e., 16 = 64 / 4, 16 = 16 / 1

lidarMinRange: 1.0 # default: 1.0, minimum lidar range to be used

lidarMaxRange: 1000.0 # default: 1000.0, maximum lidar range to be used

# IMU Settings

imuAccNoise: 3.9939570888238808e-03

imuGyrNoise: 1.5636343949698187e-03

imuAccBiasN: 6.4356659353532566e-05

imuGyrBiasN: 3.5640318696367613e-05

imuGravity: 9.80511

imuRPYWeight: 0.01

# Extrinsics (lidar -> IMU)

extrinsicTrans: [-8.086759e-01, 3.195559e-01, -7.997231e-01]

extrinsicRot: [9.999976e-01, 7.553071e-04, -2.035826e-03, -7.854027e-04, 9.998898e-01, -1.482298e-02, 2.024406e-03, 1.482454e-02, 9.998881e-01]

extrinsicRPY: [9.999976e-01, 7.553071e-04, -2.035826e-03, -7.854027e-04, 9.998898e-01, -1.482298e-02, 2.024406e-03, 1.482454e-02, 9.998881e-01]

# extrinsicRot: [1, 0, 0,

# 0, 1, 0,

# 0, 0, 1]

# extrinsicRPY: [1, 0, 0,

# 0, 1, 0,

# 0, 0, 1]

# LOAM feature threshold

edgeThreshold: 1.0

surfThreshold: 0.1

edgeFeatureMinValidNum: 10

surfFeatureMinValidNum: 100

# voxel filter paprams

odometrySurfLeafSize: 0.4 # default: 0.4 - outdoor, 0.2 - indoor

mappingCornerLeafSize: 0.2 # default: 0.2 - outdoor, 0.1 - indoor

mappingSurfLeafSize: 0.4 # default: 0.4 - outdoor, 0.2 - indoor

# robot motion constraint (in case you are using a 2D robot)

z_tollerance: 1000 # meters

rotation_tollerance: 1000 # radians

# CPU Params

numberOfCores: 4 # number of cores for mapping optimization

mappingProcessInterval: 0.15 # seconds, regulate mapping frequency

# Surrounding map

surroundingkeyframeAddingDistThreshold: 1.0 # meters, regulate keyframe adding threshold

surroundingkeyframeAddingAngleThreshold: 0.2 # radians, regulate keyframe adding threshold

surroundingKeyframeDensity: 2.0 # meters, downsample surrounding keyframe poses

surroundingKeyframeSearchRadius: 50.0 # meters, within n meters scan-to-map optimization (when loop closure disabled)

# Loop closure

loopClosureEnableFlag: true

loopClosureFrequency: 1.0 # Hz, regulate loop closure constraint add frequency

surroundingKeyframeSize: 50 # submap size (when loop closure enabled)

historyKeyframeSearchRadius: 15.0 # meters, key frame that is within n meters from current pose will be considerd for loop closure

historyKeyframeSearchTimeDiff: 30.0 # seconds, key frame that is n seconds older will be considered for loop closure

historyKeyframeSearchNum: 25 # number of hostory key frames will be fused into a submap for loop closure

historyKeyframeFitnessScore: 0.3 # icp threshold, the smaller the better alignment

# Visualization

globalMapVisualizationSearchRadius: 1000.0 # meters, global map visualization radius

globalMapVisualizationPoseDensity: 10.0 # meters, global map visualization keyframe density

globalMapVisualizationLeafSize: 1.0 # meters, global map visualization cloud density

# Navsat (convert GPS coordinates to Cartesian)

navsat:

frequency: 50

wait_for_datum: false

delay: 0.0

magnetic_declination_radians: 0

yaw_offset: 0

zero_altitude: true

broadcast_utm_transform: false

broadcast_utm_transform_as_parent_frame: false

publish_filtered_gps: false

# EKF for Navsat

ekf_gps:

publish_tf: false

map_frame: map

odom_frame: odom

base_link_frame: base_link

world_frame: odom

frequency: 50

two_d_mode: false

sensor_timeout: 0.01

# -------------------------------------

# External IMU:

# -------------------------------------

imu0: imu_correct

# make sure the input is aligned with ROS REP105. "imu_correct" is manually transformed by myself. EKF can also transform the data using tf between your imu and base_link

imu0_config: [false, false, false,

true, true, true,

false, false, false,

false, false, true,

true, true, true]

imu0_differential: false

imu0_queue_size: 50

imu0_remove_gravitational_acceleration: true

# -------------------------------------

# Odometry (From Navsat):

# -------------------------------------

odom0: odometry/gps

odom0_config: [true, true, true,

false, false, false,

false, false, false,

false, false, false,

false, false, false]

odom0_differential: false

odom0_queue_size: 10

# x y z r p y x_dot y_dot z_dot r_dot p_dot y_dot x_ddot y_ddot z_ddot

process_noise_covariance: [ 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 10.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.1, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.25, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.25, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.04, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.5, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.015]

同样的类似ALOAM,作者也给出了从kitti生成rosbag的代码

To generate more bags using other KITTI raw data, you can use the python script provided in "config/doc/kitti2bag".

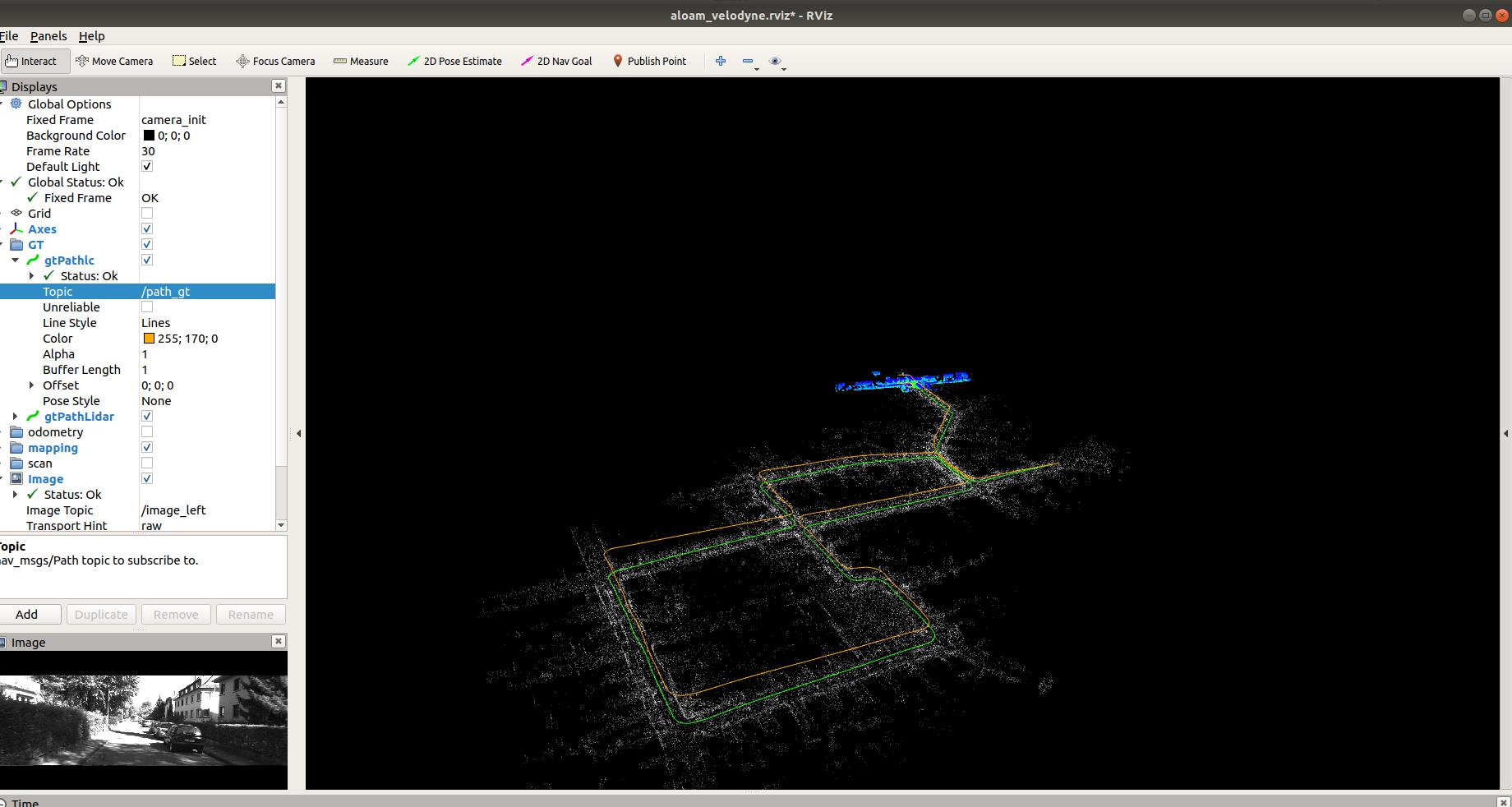

视频效果如下:

LIO-SAM

LIO-Mapping:A Tightly Coupled 3D Lidar and Inertial Odometry and Mapping Approach

Stereo Visual Inertial Pose Estimation Based on Feedforward-Feedback Loops

这是港理工的一个开源项目。论文链接见:https://arxiv.org/pdf/2007.02250.pdf

按照要求配置看看~

如果kitti报错

(.text.startup+0x4e8):对‘Sophus::SE3::SE3()’未定义的引用可以参考(Sophus 编译错误_u010003609的博客-CSDN博客)

将对应的cmkaelist文件改为

cmake_minimum_required(VERSION 2.8.3)

project(flvis)

add_definitions(-std=c++11)

#set(CMAKE_CXX_FLAGS "-std=c++11)

set(CMAKE_CXX_FLAGS "-std=c++11 ${CMAKE_CXX_FLAGS} -O3 -Wall -pthread") # -Wextra -Werror

set(CMAKE_BUILD_TYPE "RELEASE")

list(APPEND CMAKE_MODULE_PATH ${CMAKE_CURRENT_SOURCE_DIR}/3rdPartLib/g2o/cmake_modules)

set(G2O_ROOT /usr/local/include/g2o)

find_package(G2O REQUIRED)

find_package (OpenCV 3 REQUIRED)

find_package (Eigen3 REQUIRED)

find_package (CSparse REQUIRED )

find_package (Sophus REQUIRED )

find_package (yaml-cpp REQUIRED )

find_package (DBoW3 REQUIRED)

# pcl

find_package( PCL REQUIRED)

include_directories( ${PCL_INCLUDE_DIRS} )

add_definitions( ${PCL_DEFINITIONS} )

#FIND_PACKAGE(octomap REQUIRED )

#FIND_PACKAGE(octovis REQUIRED )

#INCLUDE_DIRECTORIES(${OCTOMAP_INCLUDE_DIRS})

find_package(catkin REQUIRED COMPONENTS

nodelet

roscpp

rostime

std_msgs

sensor_msgs

geometry_msgs

nav_msgs

pcl_ros

tf

visualization_msgs

image_transport

cv_bridge

message_generation

message_filters

)

add_message_files(

FILES

KeyFrame.msg

CorrectionInf.msg

)

generate_messages(

DEPENDENCIES

std_msgs

sensor_msgs

geometry_msgs

nav_msgs

visualization_msgs

)

## Declare a catkin package

catkin_package(

CATKIN_DEPENDS message_runtime

)

include_directories(

${catkin_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${CSPARSE_INCLUDE_DIR}

${Sophus_INCLUDE_DIRS}

${YAML_CPP_INCLUDE_DIR}

${DBoW3_INCLUDE_DIR}

"${CMAKE_CURRENT_SOURCE_DIR}/src/"

"${CMAKE_CURRENT_SOURCE_DIR}/src/processing/"

"${CMAKE_CURRENT_SOURCE_DIR}/src/backend/"

"${CMAKE_CURRENT_SOURCE_DIR}/src/frontend/"

"${CMAKE_CURRENT_SOURCE_DIR}/src/utils/"

"${CMAKE_CURRENT_SOURCE_DIR}/src/visualization/"

#"${CMAKE_CURRENT_SOURCE_DIR}/src/octofeeder/"

)

SET(G2O_LIBS cholmod cxsparse -lg2o_cli -lg2o_core

-lg2o_csparse_extension -lg2o_ext_freeglut_minimal -lg2o_incremental

-lg2o_interactive -lg2o_interface -lg2o_opengl_helper -lg2o_parser

-lg2o_simulator -lg2o_solver_cholmod -lg2o_solver_csparse

-lg2o_solver_dense -lg2o_solver_pcg -lg2o_solver_slam2d_linear

-lg2o_solver_structure_only -lg2o_stuff -lg2o_types_data -lg2o_types_icp

-lg2o_types_sba -lg2o_types_sclam2d -lg2o_types_sim3 -lg2o_types_slam2d

-lg2o_types_slam3d)

## Declare a C++ library

add_library(flvis

#processing

src/processing/feature_dem.cpp

src/processing/depth_camera.cpp

src/processing/landmark.cpp

src/processing/camera_frame.cpp

src/processing/triangulation.cpp

src/processing/lkorb_tracking.cpp

src/processing/imu_state.cpp

src/processing/vi_motion.cpp

src/processing/optimize_in_frame.cpp

#vis

src/visualization/rviz_frame.cpp

src/visualization/rviz_path.cpp

src/visualization/rviz_pose.cpp

src/visualization/rviz_odom.cpp

#msg

src/utils/keyframe_msg.cpp

src/utils/correction_inf_msg.cpp

#node tracking

src/frontend/vo_tracking.cpp

src/frontend/f2f_tracking.cpp

#node localmap

src/backend/vo_localmap.cpp

#node loop closing

src/backend/vo_loopclosing.cpp

src/backend/poselmbag.cpp

#src/octofeeder/octomap_feeder.cpp

)

add_dependencies(flvis

flvis_generate_messages_cpp

${catkin_EXPORTED_TARGETS})

target_link_libraries(flvis

${catkin_LIBRARIES}

${OpenCV_LIBRARIES}

${CSPARSE_LIBRARY}

${Sophus_LIBRARIES}

${YAML_CPP_LIBRARIES}

${DBoW3_LIBRARIES}

${G2O_LIBS}

${PCL_LIBRARIES}

${Boost_SYSTEM_LIBRARY}

#${OCTOMAP_LIBRARIES}

)

#independent modules

#1 euroc_publisher publish path

add_executable(vo_repub_rec

src/independ_modules/vo_repub_rec.cpp)

target_link_libraries(vo_repub_rec

${catkin_LIBRARIES}

${Sophus_LIBRARIES})

add_executable(kitti_publisher

src/independ_modules/kitti_publisher.cpp

src/visualization/rviz_path.cpp)

set(Sophus_LIBRARIES libSophus.so)

target_link_libraries(kitti_publisher

${catkin_LIBRARIES}

${Sophus_LIBRARIES})

注意,可能出现g2o安装不好导致有问题,重新装一下即可

修改对应的launch文件,数据集还是上面整理好的

<?xml version="1.0"?>

<launch>

<!--Input######################################################################################################-->

<param name="/dataset_pub_delay" type="double" value="5.0" />

<param name="/dataset_pub_rate" type="int" value="30" />

<param name="/publish_gt" type="bool" value="true" />

<param name="/dataset_folder_path" type="string" value="/home/kwanwaipang/dataset/kitti/data/sequences/00/" />

<param name="/dataset_gt_file" type="string" value="/home/kwanwaipang/dataset/kitti/data/results/00.txt" />

<node pkg="flvis" type="kitti_publisher" name="kitti_publisher" output="screen"/>

<!--FLVIS######################################################################################################-->

<arg name="node_start_delay" default="1.0" />

<param name="/yamlconfigfile" type="string" value="$(find flvis)/launch/KITTI/KITTI.yaml"/>

<param name="/voc" type="string" value="$(find flvis)/voc/voc_orb.dbow3"/>

<!-- Manager -->

<node pkg="nodelet" type="nodelet"

name="flvis_nodelet_manager" args="manager" output="screen"

launch-prefix="bash -c 'sleep $(arg node_start_delay); $0 $@' ">

<param name="num_worker_threads" value="4" />

</node>

<!-- TrackingNode -->

<!-- D435i -->

<node pkg="nodelet" type="nodelet" args="load flvis/TrackingNodeletClass flvis_nodelet_manager"

name="TrackingNodeletClass_loader" output="screen"

launch-prefix="bash -c 'sleep $(arg node_start_delay); $0 $@' ">

<remap from="/imu" to="/camera/imu"/>

</node>

<!-- LocalMapNode -->

<!--window_size: Num of keyframes in sliding window optimizer-->

<!-- <node pkg="nodelet" type="nodelet" args="load flvis/LocalMapNodeletClass flvis_nodelet_manager"

name="LocalMapNodeletClass_loader" output="screen"

launch-prefix="bash -c 'sleep $(arg node_start_delay); $0 $@' ">

<param name="/window_size" type="int" value="8" />

</node>-->

<!-- LoopClosingNode -->

<node pkg="nodelet" type="nodelet" args="load flvis/LoopClosingNodeletClass flvis_nodelet_manager"

name="LoopClosingNodeletClass_loader" output="screen"

launch-prefix="bash -c 'sleep $(arg node_start_delay); $0 $@' ">

</node>

<node pkg="flvis" type="vo_repub_rec" name="lc2file" output="screen">

<!--Sub Support Type:-->

<param name="sub_type" type="string" value="NavPath" />

<param name="sub_topic" type="string" value="/vision_path_lc_all" />

<!--Support Type:-->

<!--"0" disable the republish function -->

<!--"Path"-->

<!--"PoseStamped"-->

<param name="repub_type" type="string" value="0" />

<param name="repub_topic" type="string" value="/republish_path" />

<!--output_file_path = "0" disable the file output function-->

<param name="output_file_path" type="string" value="$(find flvis)/results/kitti_lc.txt" />

</node>

</launch>

roslaunch flvis rviz_kitti.launch

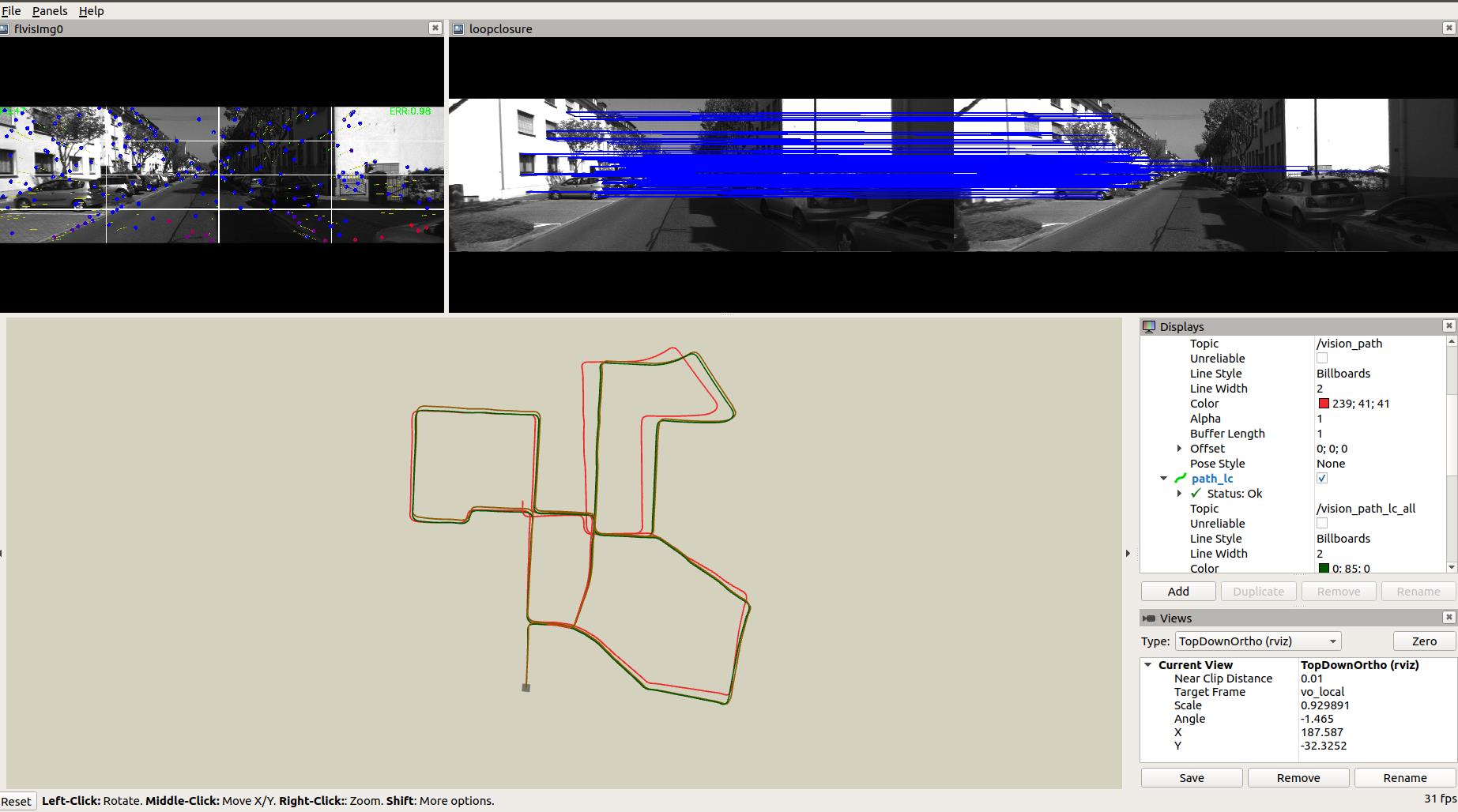

roslaunch flvis flvis_kitti.launch效果如下

flvis

AVP-SLAM: Semantic Visual Mapping and Localization for Autonomous Vehicles in the Parking Lot

ORB-SLAM 跑kitti

GVINS: Tightly Coupled GNSS-Visual-Inertial Fusion for Smooth and Consistent State Estimation

SuMa++: Efficient LiDAR-based Semantic SLAM

https://github.com/PRBonn/semantic_suma https://github.com/PRBonn/semantic_suma

https://github.com/PRBonn/semantic_suma

DSO: Direct Sparse Odometry

OverlapNet - Loop Closing for 3D LiDAR-based SLAM

https://github.com/PRBonn/OverlapNet

High-speed Autonomous Drifting with Deep Reinforcement Learning

GitHub - caipeide/drift_drl: High-speed Autonomous Drifting with Deep Reinforcement Learning

https://sites.google.com/view/autonomous-drifting-with-drl

参考资料

以上是关于ROS实验笔记之——SLAM无人驾驶初入门的主要内容,如果未能解决你的问题,请参考以下文章

https://blog.csdn.net/weixin_43166819/article/details/103133570

https://blog.csdn.net/weixin_43166819/article/details/103133570