Getting the Inputs and Outputs of a TensorRT (TRT) Model

Posted by SpikeKing


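This post shows how to retrieve the inputs and outputs of a TensorRT (TRT) model, which is the information needed to set up a TRT model service. The script check_trt_script.py does this:

- Script input: the path to the TRT model and the input image size

- Script output: the model's input and output node information; running the script also verifies that the TRT model is usable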

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""
Copyright (c) 2021. All rights reserved.
Created by C. L. Wang on 16.9.21
"""

import argparse

import numpy as np


def check_trt(model_path, image_size):
    """
    Check a TRT model: print its bindings and run one inference on random input.

    Note: the binding-based calls used here (get_binding_index, get_binding_shape,
    execute_async_v2, ...) belong to older TensorRT releases; they were removed in TensorRT 10.
    """
    import pycuda.driver as cuda
    import tensorrt as trt
    # pycuda.autoinit must be imported; otherwise cuda.Stream() raises an error
    import pycuda.autoinit  # noqa: F401

    print('[Info] model_path: {}'.format(model_path))
    img_shape = (1, 3, image_size, image_size)
    print('[Info] img_shape: {}'.format(img_shape))

    trt_logger = trt.Logger(trt.Logger.WARNING)
    trt_path = model_path  # path to the TRT model

    # Deserialize the engine from the serialized model file
    with open(trt_path, 'rb') as f, trt.Runtime(trt_logger) as runtime:
        engine = runtime.deserialize_cuda_engine(f.read())

    # Iterating over the engine yields the binding names (inputs and outputs)
    for binding in engine:
        binding_idx = engine.get_binding_index(binding)
        size = engine.get_binding_shape(binding_idx)
        dtype = trt.nptype(engine.get_binding_dtype(binding))
        print("[Info] binding: {}, binding_idx: {}, size: {}, dtype: {}"
              .format(binding, binding_idx, size, dtype))

    # Random input with the requested image size
    input_image = np.random.randn(*img_shape).astype(np.float32)
    input_image = np.ascontiguousarray(input_image)
    print('[Info] input_image: {}'.format(input_image.shape))

    with engine.create_execution_context() as context:
        stream = cuda.Stream()
        bindings = [0] * len(engine)  # one device pointer per binding

        for binding in engine:
            idx = engine.get_binding_index(binding)
            if engine.binding_is_input(idx):
                # Allocate device memory for the input and copy the image to the GPU
                input_memory = cuda.mem_alloc(input_image.nbytes)
                bindings[idx] = int(input_memory)
                cuda.memcpy_htod_async(input_memory, input_image, stream)
            else:
                # Allocate a host buffer and device memory for the output.
                # With several outputs, only the last one is kept and printed below.
                dtype = trt.nptype(engine.get_binding_dtype(binding))
                shape = context.get_binding_shape(idx)
                output_buffer = np.empty(tuple(shape), dtype=dtype)
                output_buffer = np.ascontiguousarray(output_buffer)
                output_memory = cuda.mem_alloc(output_buffer.nbytes)
                bindings[idx] = int(output_memory)

        # Run inference, wait for it to finish, then copy the result back to the host
        context.execute_async_v2(bindings, stream.handle)
        stream.synchronize()
        cuda.memcpy_dtoh(output_buffer, output_memory)
        print("[Info] output_buffer: {}".format(output_buffer))


def parse_args():
    """
    Parse the script arguments.
    """
    parser = argparse.ArgumentParser(description='Check a TRT model')
    parser.add_argument('-m', dest='model_path', required=True, help='path to the TRT model', type=str)
    parser.add_argument('-s', dest='image_size', required=False, help='image size, e.g. 336', type=int, default=336)

    args = parser.parse_args()

    arg_model_path = args.model_path
    print("[Info] model path: {}".format(arg_model_path))

    arg_image_size = args.image_size
    print("[Info] image_size: {}".format(arg_image_size))

    return arg_model_path, arg_image_size


def main():
    arg_model_path, arg_image_size = parse_args()
    check_trt(arg_model_path, arg_image_size)  # check the TRT model


if __name__ == '__main__':
    main()
```
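The script sizes its device allocations from `nbytes`, which is the number of elements multiplied by the size of one element. Here is a minimal sketch of that arithmetic in plain Python, using the shapes from this example. The helper name `binding_nbytes` and the `ITEMSIZE` table are illustrative assumptions, not part of TensorRT or PyCUDA.

```python
import math

# Bytes per element for a few common dtypes (illustrative table, not a TensorRT API).
ITEMSIZE = {"float32": 4, "float16": 2, "int32": 4, "int8": 1}


def binding_nbytes(shape, dtype_name):
    """Return the number of bytes a dense buffer of this shape and dtype occupies."""
    if any(dim < 0 for dim in shape):
        # A negative dimension (such as -1) marks a dynamic axis of unknown size.
        raise ValueError("dynamic dimension in shape {}".format(tuple(shape)))
    return math.prod(shape) * ITEMSIZE[dtype_name]


print(binding_nbytes((1, 3, 336, 336), "float32"))  # 1354752
print(binding_nbytes((1, 2), "float32"))  # 8
```

A check like this can catch a mismatch between the image size passed with -s and the shape the engine expects before any device memory is allocated.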

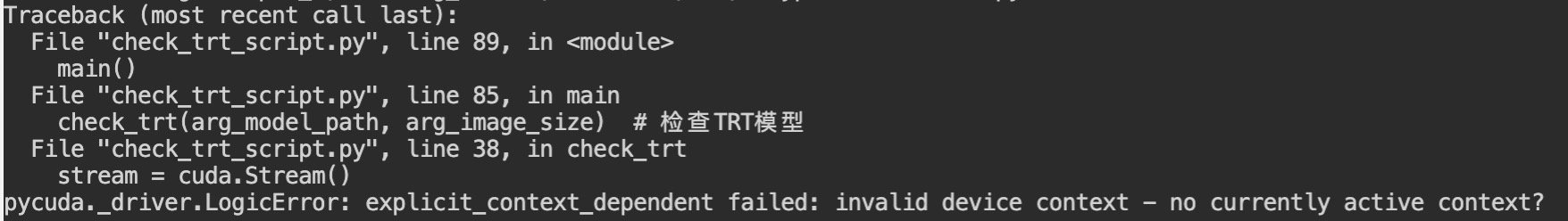

注意:必须导入包,import pycuda.autoinit,否则cuda.Stream()报错,如下:
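For background, pycuda.autoinit initializes the CUDA driver, creates a context on a device, and releases it at exit. A rough sketch of doing the same by hand follows. The wrapper name `run_with_cuda_context` and its `device_index` parameter are hypothetical; `cuda.init()`, `cuda.Device(...).make_context()`, and `Context.pop()` are PyCUDA driver calls. The sketch assumes PyCUDA and a CUDA-capable GPU are available when the function is called.

```python
def run_with_cuda_context(fn, device_index=0):
    """Call fn() with an explicitly created CUDA context active (hypothetical helper)."""
    # Imported lazily so that merely defining this helper does not require a GPU.
    import pycuda.driver as cuda

    cuda.init()  # initialize the CUDA driver API
    context = cuda.Device(device_index).make_context()  # create the context and make it current
    try:
        return fn()
    finally:
        context.pop()  # deactivate the context even if fn() raised
```

For a one-off checking script, importing pycuda.autoinit is simpler. Explicit context handling is mostly of interest when the code has to pick a specific GPU or manage the context lifetime itself.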

The output is as follows:

```text
[Info] model path: ../mydata/trt_models/model_best_c2_20210915_cuda.trt
[Info] image_size: 336
[Info] model_path: ../mydata/trt_models/model_best_c2_20210915_cuda.trt
[Info] img_shape: (1, 3, 336, 336)
[Info] binding: input_0, binding_idx: 0, size: (1, 3, 336, 336), dtype: <class 'numpy.float32'>
[Info] binding: output_0, binding_idx: 1, size: (1, 2), dtype: <class 'numpy.float32'>
[Info] input_image: (1, 3, 336, 336)
[Info] output_buffer: [[ 0.23275298 -0.2184143 ]]
```

The relevant information is:

- Input node: binding: input_0, input size: (1, 3, 336, 336), input dtype: <class 'numpy.float32'>

- Output node: binding: output_0, output size: (1, 2), output dtype: <class 'numpy.float32'>
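If the binding information needs to be collected automatically rather than read off the console, the `[Info] binding: ...` lines can be parsed. The following is a minimal sketch that assumes the exact line format printed by this script; the names `BINDING_RE` and `parse_binding_line` are illustrative.

```python
import re

# Matches the "[Info] binding: ..." lines printed by the checking script.
BINDING_RE = re.compile(
    r"binding: (?P<name>[^,\s]+), binding_idx: (?P<idx>\d+), "
    r"size: \((?P<shape>[^)]*)\), dtype: <class 'numpy\.(?P<dtype>\w+)'>"
)


def parse_binding_line(line):
    """Return a dict describing one binding, or None if the line does not match."""
    match = BINDING_RE.search(line)
    if match is None:
        return None
    # Split "1, 3, 336, 336" into integers; skip empty pieces such as a trailing comma.
    shape = [int(dim) for dim in match.group("shape").split(",") if dim.strip()]
    return {
        "name": match.group("name"),
        "index": int(match.group("idx")),
        "shape": shape,
        "dtype": match.group("dtype"),
    }


line = ("[Info] binding: input_0, binding_idx: 0, "
        "size: (1, 3, 336, 336), dtype: <class 'numpy.float32'>")
print(parse_binding_line(line))
```

Parsing log text is fragile. Returning the values directly from a function that queries the engine would be more robust; this sketch only avoids depending on TensorRT.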

The corresponding JSON file is as follows:

```json
{
  "model_path": "model_best_c2_20210915_cuda.trt",
  "model_format": "trt",
  "quant_type": "FP32",
  "gpu_index": 0,
  "inputs": {
    "input_0": {
      "shapes": [1, 3, 336, 336],
      "type": "FP32"
    }
  },
  "outputs": {
    "output_0": {
      "shapes": [1, 2],
      "type": "FP32"
    }
  }
}
```
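A configuration with this layout can also be generated from the binding information instead of being written by hand. The sketch below makes several assumptions: the function name `build_config` and the `DTYPE_TO_TYPE` table are hypothetical, and only the float32 to "FP32" mapping is confirmed by the example above, so other dtypes are deliberately left out.

```python
import json

# Only the mapping confirmed by the example above; extend it for other dtypes as needed.
DTYPE_TO_TYPE = {"float32": "FP32"}


def build_config(model_path, inputs, outputs, quant_type="FP32", gpu_index=0):
    """Build the service configuration dict from {binding name: (shape, dtype name)} maps."""

    def describe(bindings):
        return {
            name: {"shapes": list(shape), "type": DTYPE_TO_TYPE[dtype]}
            for name, (shape, dtype) in bindings.items()
        }

    return {
        "model_path": model_path,
        "model_format": "trt",
        "quant_type": quant_type,
        "gpu_index": gpu_index,
        "inputs": describe(inputs),
        "outputs": describe(outputs),
    }


config = build_config(
    "model_best_c2_20210915_cuda.trt",
    inputs={"input_0": ((1, 3, 336, 336), "float32")},
    outputs={"output_0": ((1, 2), "float32")},
)
print(json.dumps(config, indent=2))
```

An unknown dtype raises a KeyError here, which is intentional: it is safer to fail than to write a type string the model service may not understand.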
