Big Data Ops: Building a Distributed Nebula Graph Database with Docker

Posted by 脚丫先生


Hi everyone, I'm 脚丫先生 (o^^o)

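I've recently been working on a data fusion and analysis platform that needs a distributed graph database. My first move was to search Baidu and the official site, but most guides only cover single-node setups, and every distributed setup I found was based on k8s. I didn't want to make the project that heavy, so I used docker-compose for the distributed deployment instead. The setup process is described below; I hope it helps.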


1. The Nebula graph database

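Nebula Graph is an open-source, third-generation distributed graph database. It can store trillions of vertices and edges with properties while still meeting millisecond-level latency requirements under high concurrency. Unlike Gremlin and Cypher, Nebula provides nGQL, a SQL-like query language, and performs CRUD operations on graphs by combining statements in three ways: pipes, semicolons, and variables. For the storage layer, Nebula Graph currently supports two options: RocksDB and HBase.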

2. Cluster plan

| Host name | IP | Nebula services |
|---|---|---|
| spark1 | 192.168.239.128 | graphd0, metad0, storaged0 |
| spark2 | 192.168.239.129 | graphd1, metad1, storaged1 |
| spark3 | 192.168.239.130 | graphd2, metad2, storaged2 |

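For ops people, building native environments used to be a huge hassle: setting up a single environment usually took ages, and delivering and maintaining projects was enough to make you want to strangle the client. Argh!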

2.1 docker-compose for node spark1

```yaml
version: '3.4'
services:
  metad0:
    image: vesoft/nebula-metad:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      # every metad instance in the cluster; identical on all three nodes
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      # this host's own IP; changes per node
      - --local_ip=192.168.239.128
      - --ws_ip=0.0.0.0
      - --port=9559
      - --ws_http_port=19559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.128:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    # with network_mode: host Docker discards published ports;
    # the services bind these host ports directly
    ports:
      - 9559
      - 19559
      - 19560
    volumes:
      - ./data/meta0:/data/meta
      - ./logs/meta0:/logs
    restart: on-failure

  storaged0:
    image: vesoft/nebula-storaged:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --local_ip=192.168.239.128
      - --ws_ip=0.0.0.0
      - --port=9779
      - --ws_http_port=19779
      - --data_path=/data/storage
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad0
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.128:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - 9779
      - 19779
      - 19780
    volumes:
      - ./data/storage0:/data/storage
      - ./logs/storage0:/logs
    restart: on-failure

  graphd0:
    image: vesoft/nebula-graphd:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --port=9669
      - --ws_ip=0.0.0.0
      - --ws_http_port=19669
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad0
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.128:19669/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - "9669:9669"
      - 19669
      - 19670
    volumes:
      - ./logs/graph:/logs
    restart: on-failure
```

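Notes:

- `meta_server_addrs` (change this): the IP addresses and ports of all Meta services. Separate multiple Meta services with commas (`,`).
- `local_ip` (change this): the local IP address of the Meta service, used to identify the nebula-metad process. For a distributed cluster or remote access, change it to the corresponding address.
- `ws_ip` (default): the IP address of the HTTP service. Default value: `0.0.0.0`.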
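The three node files differ only in the host IP and the service suffix, while `meta_server_addrs` has to list all three Meta services on every node. A minimal sketch, assuming a hypothetical helper that is not part of Nebula's tooling, derives the per-node flags from the cluster plan so the values cannot drift between hosts. The names `PLAN`, `meta_server_addrs` and `node_flags` are made up for illustration, and only the flags that vary per node or must match across nodes are generated; the rest (`data_path`, `log_dir`, `v`, `minloglevel`) stay as in the compose files. As in those files, graphd gets no `--local_ip`.

```python
# Hypothetical helper: derive per-node Nebula flags from the cluster plan so
# that --meta_server_addrs is the same string on every node.
from typing import Dict, List

# Cluster plan from section 2: host name -> IP
PLAN: Dict[str, str] = {
    "spark1": "192.168.239.128",
    "spark2": "192.168.239.129",
    "spark3": "192.168.239.130",
}
META_PORT = 9559


def meta_server_addrs(plan: Dict[str, str]) -> str:
    """Comma-separated list of every metad address, in plan order."""
    return ",".join(f"{ip}:{META_PORT}" for ip in plan.values())


def node_flags(ip: str, plan: Dict[str, str]) -> Dict[str, List[str]]:
    """Flags for the metad, storaged and graphd running on one host."""
    common = [f"--meta_server_addrs={meta_server_addrs(plan)}", "--ws_ip=0.0.0.0"]
    return {
        "metad": common + [f"--local_ip={ip}", f"--port={META_PORT}", "--ws_http_port=19559"],
        "storaged": common + [f"--local_ip={ip}", "--port=9779", "--ws_http_port=19779"],
        # graphd is configured without --local_ip, matching the compose files
        "graphd": common + ["--port=9669", "--ws_http_port=19669"],
    }


if __name__ == "__main__":
    # Print the generated flags for every service on every host
    for name, ip in PLAN.items():
        for service, flags in node_flags(ip, PLAN).items():
            print(name, service, " ".join(flags))
```

Generating the list from one source is a design choice, not a requirement: it simply makes a typo such as a repeated IP in one node's `meta_server_addrs` impossible.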

2.2 docker-compose for node spark2 (same as spark1, with spark2's IP)

```yaml
version: '3.4'
services:
  metad1:
    image: vesoft/nebula-metad:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      # same list on all three nodes
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --local_ip=192.168.239.129
      - --ws_ip=0.0.0.0
      - --port=9559
      - --ws_http_port=19559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.129:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - 9559
      - 19559
      - 19560
    volumes:
      - ./data/meta1:/data/meta
      - ./logs/meta1:/logs
    restart: on-failure

  storaged1:
    image: vesoft/nebula-storaged:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --local_ip=192.168.239.129
      - --ws_ip=0.0.0.0
      - --port=9779
      - --ws_http_port=19779
      - --data_path=/data/storage
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad1
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.129:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - 9779
      - 19779
      - 19780
    volumes:
      - ./data/storage1:/data/storage
      - ./logs/storage1:/logs
    restart: on-failure

  graphd1:
    image: vesoft/nebula-graphd:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --port=9669
      - --ws_ip=0.0.0.0
      - --ws_http_port=19669
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad1
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.129:19669/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - "9669:9669"
      - 19669
      - 19670
    volumes:
      - ./logs/graph1:/logs
    restart: on-failure
```

2.3 docker-compose for node spark3 (same as spark1, with spark3's IP)

```yaml
version: '3.4'
services:
  metad2:
    image: vesoft/nebula-metad:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      # same list on all three nodes
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --local_ip=192.168.239.130
      - --ws_ip=192.168.239.130
      - --port=9559
      - --ws_http_port=19559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.130:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - 9559
      - 19559
      - 19560
    volumes:
      - ./data/meta3:/data/meta
      - ./logs/meta3:/logs
    restart: on-failure

  storaged2:
    image: vesoft/nebula-storaged:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --local_ip=192.168.239.130
      - --ws_ip=192.168.239.130
      - --port=9779
      - --ws_http_port=19779
      - --data_path=/data/storage
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-sf", "http://192.168.239.130:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - 9779
      - 19779
      - 19780
    volumes:
      - ./data/storage3:/data/storage
      - ./logs/storage3:/logs
    restart: on-failure

  graphd2:
    image: vesoft/nebula-graphd:v2.0.0
    privileged: true
    network_mode: host
    environment:
      USER: root
      TZ: "${TZ}"
    command:
      - --meta_server_addrs=192.168.239.128:9559,192.168.239.129:9559,192.168.239.130:9559
      - --port=9669
      - --ws_ip=0.0.0.0
      - --ws_http_port=19669
      - --log_dir=/logs
      - --v=0
      - --minloglevel=0
    depends_on:
      - metad2
    healthcheck:
      # check this node's own graphd
      test: ["CMD", "curl", "-sf", "http://192.168.239.130:19669/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - "9669:9669"
      - 19669
      - 19670
    volumes:
      - ./logs/graph:/logs
    restart: on-failure
```
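Once all three nodes are up, it can help to check every service from one machine rather than running `docker ps` on each host. A minimal sketch, assuming only that the `/status` endpoints used by the healthchecks above are reachable over the network: it builds the nine URLs (three services on three hosts, on the `ws_http_port` values from the compose files) and treats an HTTP 2xx answer as healthy, roughly like `curl -sf`. `status_urls` and `is_up` are hypothetical names; only the Python standard library is used.

```python
# Sketch: poll each service's /status endpoint, the same URLs the compose
# healthchecks call with `curl -sf`. Standard library only.
import urllib.request
from typing import List, Tuple

HOSTS = ["192.168.239.128", "192.168.239.129", "192.168.239.130"]
# ws_http_port of each service, as set in the compose files
HTTP_PORTS = {"metad": 19559, "storaged": 19779, "graphd": 19669}


def status_urls(hosts: List[str]) -> List[Tuple[str, str]]:
    """(label, url) for every service on every host, host by host."""
    return [
        (f"{service}@{host}", f"http://{host}:{port}/status")
        for host in hosts
        for service, port in HTTP_PORTS.items()
    ]


def is_up(url: str, timeout: float = 5.0) -> bool:
    """True on an HTTP 2xx answer, roughly what `curl -sf` treats as success."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError (non-2xx), refused connections and timeouts
        # are all OSError subclasses
        return False
```

Usage, from any machine that can reach the three hosts: `for label, url in status_urls(HOSTS): print(label, "UP" if is_up(url) else "DOWN")`.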

3. Client

No bells and whistles, straight to the docker-compose file:

```yaml
version: '3.4'
services:
  client:
    image: vesoft/nebula-http-gateway:v2
    environment:
      USER: root
    ports:
      - 8080
    networks:
      - nebula-web

  web:
    image: vesoft/nebula-graph-studio:v2
    environment:
      USER: root
      UPLOAD_DIR: ${MAPPING_DOCKER_DIR}
    ports:
      - 7001
    depends_on:
      - client
    volumes:
      - ${UPLOAD_DIR}:${MAPPING_DOCKER_DIR}:rw
    networks:
      - nebula-web

  importer:
    image: vesoft/nebula-importer:v2
    networks:
      - nebula-web
    ports:
      - 5699
    volumes:
      - ${UPLOAD_DIR}:${MAPPING_DOCKER_DIR}:rw
    command:
      - "--port=5699"
      - "--callback=http://nginx:7001/api/import/finish"

  nginx:
    image: nginx:alpine
    volumes:
      - ./nginx/nginx.conf:/etc/nginx/conf.d/nebula.conf
      - ${UPLOAD_DIR}:${MAPPING_DOCKER_DIR}:rw
    depends_on:
      - client
      - web
    networks:
      - nebula-web
    ports:
      - 7001:7001

networks:
  nebula-web:
```
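The node files reference `${TZ}` and the client file references `${UPLOAD_DIR}` and `${MAPPING_DOCKER_DIR}`. Compose substitutes these from the shell environment or from a `.env` file in the project directory, and an unset variable becomes an empty string (with a warning), which for a volume spec like `${UPLOAD_DIR}:${MAPPING_DOCKER_DIR}:rw` breaks the mount. A minimal sketch, assuming only the plain `${NAME}` form used in these files (not `${NAME:-default}`) and simple `KEY=VALUE` lines in `.env`, lists the variables that are still unset before running `docker-compose up`. The function names are hypothetical.

```python
# Sketch: report ${VAR} references in a compose file that are set neither in
# the environment nor in a .env file (simple KEY=VALUE lines only).
import os
import re
from pathlib import Path
from typing import Dict, Set

# Plain ${NAME} references only; ${NAME:-default} forms are not matched
VAR_RE = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def referenced_vars(compose_text: str) -> Set[str]:
    """Names used as ${NAME} in the compose file."""
    return set(VAR_RE.findall(compose_text))


def read_dotenv(path: Path) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: Dict[str, str] = {}
    if path.exists():
        for line in path.read_text().splitlines():
            line = line.strip()
            if line and not line.startswith("#") and "=" in line:
                key, _, value = line.partition("=")
                values[key.strip()] = value.strip()
    return values


def missing_vars(compose_text: str, dotenv: Dict[str, str]) -> Set[str]:
    """Referenced variables found neither in os.environ nor in .env."""
    return {
        name
        for name in referenced_vars(compose_text)
        if name not in os.environ and name not in dotenv
    }
```

Usage, from the directory holding the compose file: `missing_vars(Path("docker-compose.yaml").read_text(), read_dotenv(Path(".env")))`; an empty set means every referenced variable has a value.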

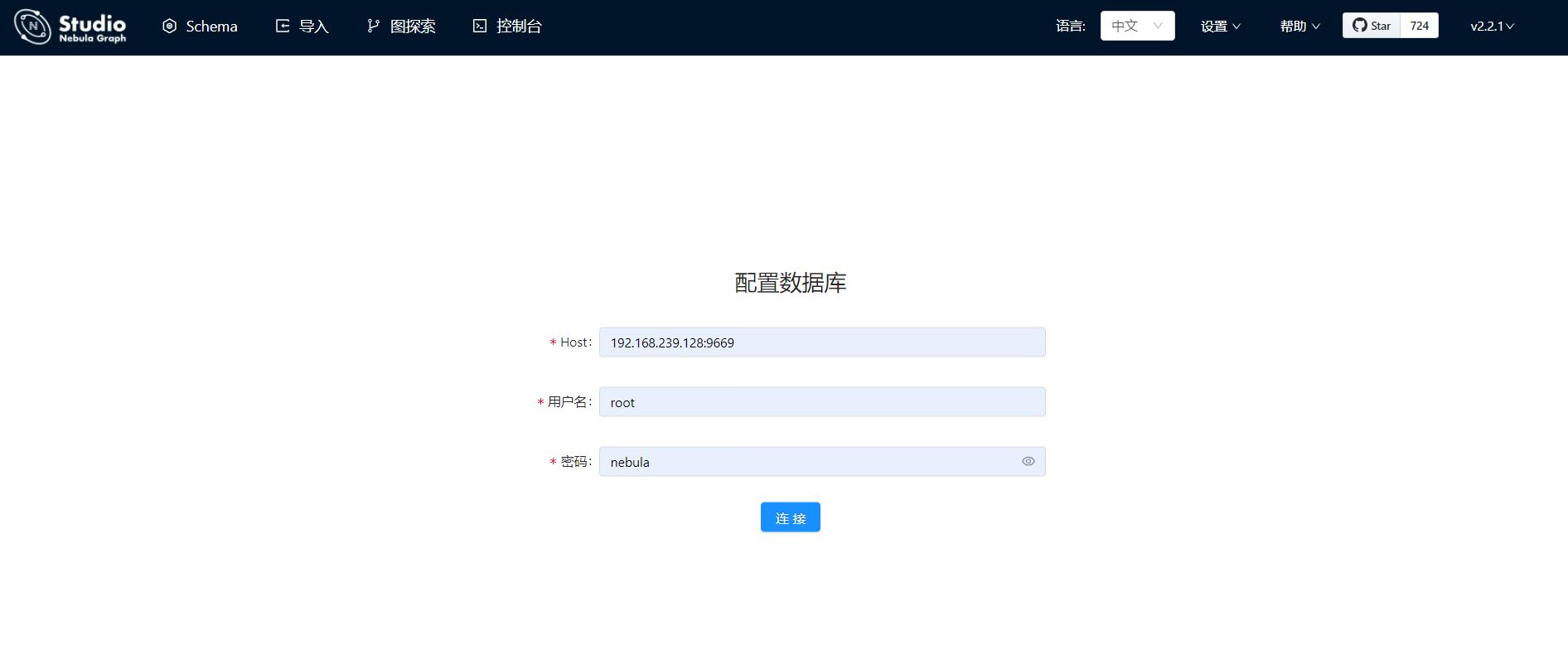

Open the web UI in Chrome: http://192.168.239.128:7001

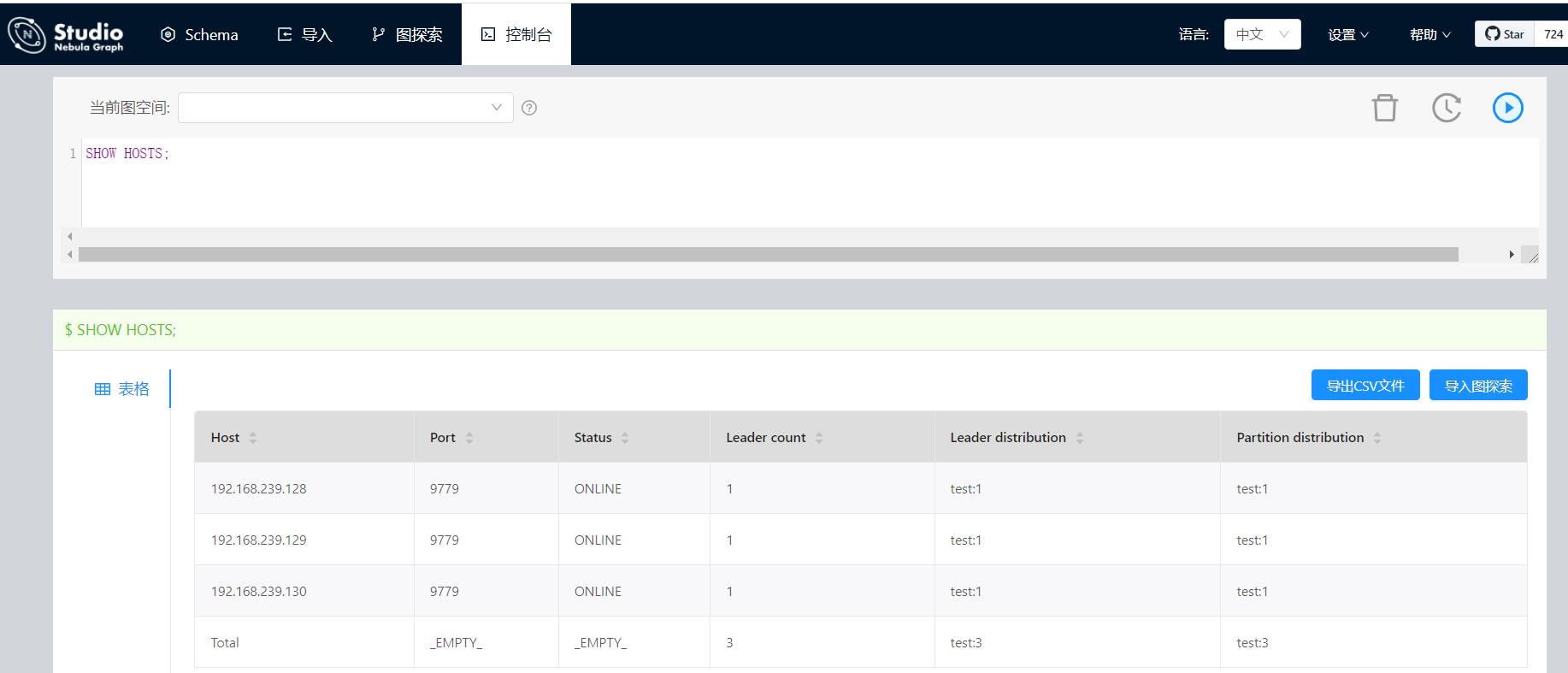

Check the hosts with `SHOW HOSTS;`.
