TensorFlow2深度学习实战(十五):目标检测算法 YOLOv4 实战

Posted AI 菌

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了TensorFlow2深度学习实战(十五):目标检测算法 YOLOv4 实战相关的知识,希望对你有一定的参考价值。

前言:

本专栏以理论与实战相结合的方式,左手看论文,右手敲代码,带你一步步吃透深度学习原理和源码,逐一攻克计算机视觉领域中的三大基本任务:图像分类、目标检测、语义分割。

本专栏完整代码将在我的GiuHub仓库更新,欢迎star收藏:https://github.com/Keyird/DeepLearning-TensorFlow2

文章目录

资源获取:

YOLOv4 算法讲解:https://ai-wx.blog.csdn.net/article/details/116793973

YOLOv4 代码仓库:https://github.com/Keyird/TensorFlow2-Detection/tree/main/YOLOv4

VOC2007 数据集下载:https://pan.baidu.com/s/1lyiA3uzQhRLTaO2Xov5BHQ 提取码:wm4l

预训练模型下载链接:https://pan.baidu.com/s/1KFwAqsnBv24vBEpdFgZvIA 提取码:ce1v

一、VOC数据集构建

(1)VOC格式介绍

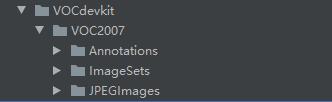

VOC 是目标检测一种通用的标准数据集格式,下面我以VOC2007数据集为例,来制作VOC标准数据集。整个数据集文件的目录结构如下图所示:

其中,VOC2007目录下存在着三个不同的文件,其具体作用是:

- Annotations:存放数据集的xml标签文件,xml文件需要进行解析。

- ImageSets:用来存放训练集或者测试集中图片ID的txt文件。

- JPEGImages:存放数据集原图

如果自己没有准备数据集,可以直接使用VOC2007数据集进行实验,数据集下载方式见上文。

(2)划分数据集

按照一定的比例划分数据集,并将图像数据的文件名(ID)存放在各个不同的txt文件中。比如我们要使用训练集数据,就从读取train.txt文件中存储ID对应的图像数据。

xmlfilepath = "./Annotations"

saveBasePath = "./ImageSets/Main/"

# 打开(新建)txt文件,用来存放待训练/测试数据的ID

ftrainval = open(os.path.join(saveBasePath,'trainval.txt'), 'w')

ftest = open(os.path.join(saveBasePath,'test.txt'), 'w')

ftrain = open(os.path.join(saveBasePath,'train.txt'), 'w')

fval = open(os.path.join(saveBasePath,'val.txt'), 'w')

# 按比例分配数据

for i in list:

name=total_xml[i][:-4]+'\\n'

if i in trainval:

ftrainval.write(name)

if i in train:

ftrain.write(name)

else:

fval.write(name)

else:

ftest.write(name)

# 关闭(保存)txt文件

ftrainval.close()

ftrain.close()

fval.close()

ftest .close()

(3)解析xml标签

通过下面的 convert_annotation() 函数对 xml 标签进行解析,并将原图路径和对应的解析后的标签写入并保存在list_file文件夹中。

# 解析xml,获得标签值,并向txt中写入标签

def convert_annotation(year, image_id, list_file):

in_file = open('VOCdevkit/VOC%s/Annotations/%s.xml'%(year, image_id))

tree = ET.parse(in_file)

root = tree.getroot()

for obj in root.iter('object'):

difficult = 0

if obj.find('difficult')!=None:

difficult = obj.find('difficult').text

cls = obj.find('name').text

if cls not in classes or int(difficult)==1:

continue

cls_id = classes.index(cls)

xmlbox = obj.find('bndbox')

b = (int(xmlbox.find('xmin').text), int(xmlbox.find('ymin').text), int(xmlbox.find('xmax').text), int(xmlbox.find('ymax').text))

list_file.write(" " + ",".join([str(a) for a in b]) + ',' + str(cls_id))

二、YOLOv4网络构建

YOLOv4的整体网络架构如下,下面将按照如下框架图来逐一搭建YOLOv4中的各个结构单元。

(1)DBL模块

def DarknetConv2D_BN_Leaky(*args, **kwargs):

"""

DarknetConv2D + BatchNormalization + LeakyReLU

"""

no_bias_kwargs = {'use_bias': False}

no_bias_kwargs.update(kwargs)

return compose(

DarknetConv2D(*args, **no_bias_kwargs),

BatchNormalization(),

LeakyReLU(alpha=0.1))

(2)DBM模块

def DarknetConv2D_BN_Mish(*args, **kwargs):

"""

DarknetConv2D + BatchNormalization + Mish

"""

no_bias_kwargs = {'use_bias': False}

no_bias_kwargs.update(kwargs)

return compose(

DarknetConv2D(*args, **no_bias_kwargs),

BatchNormalization(),

Mish())

(3)Resblock_Body

def resblock_body(x, num_filters, num_blocks, all_narrow=True):

"""

CSPDarknet53中的残差块

"""

# 利用ZeroPadding2D和一个步长为2x2的卷积块进行高和宽的压缩

preconv1 = ZeroPadding2D(((1,0),(1,0)))(x)

preconv1 = DarknetConv2D_BN_Mish(num_filters, (3,3), strides=(2,2))(preconv1)

# 然后建立一个大的残差边shortconv、这个大残差边绕过了很多的残差结构

shortconv = DarknetConv2D_BN_Mish(num_filters//2 if all_narrow else num_filters, (1,1))(preconv1)

# 主干部分会对num_blocks进行循环,循环内部是残差结构

mainconv = DarknetConv2D_BN_Mish(num_filters//2 if all_narrow else num_filters, (1,1))(preconv1)

for i in range(num_blocks):

y = compose(

DarknetConv2D_BN_Mish(num_filters//2, (1,1)),

DarknetConv2D_BN_Mish(num_filters//2 if all_narrow else num_filters, (3,3)))(mainconv)

mainconv = Add()([mainconv,y])

postconv = DarknetConv2D_BN_Mish(num_filters//2 if all_narrow else num_filters, (1,1))(mainconv)

# 将大残差边再堆叠回来

route = Concatenate()([postconv, shortconv])

# 最后对通道数进行整合

return DarknetConv2D_BN_Mish(num_filters, (1,1))(route)

(4)CSPDarknet53

def darknet_body(x):

"""

CSPDarknet53

"""

x = DarknetConv2D_BN_Mish(32, (3,3))(x)

x = resblock_body(x, 64, 1, False)

x = resblock_body(x, 128, 2)

x = resblock_body(x, 256, 8)

feat1 = x

x = resblock_body(x, 512, 8)

feat2 = x

x = resblock_body(x, 1024, 4)

feat3 = x

return feat1,feat2,feat3

(5)YOLOv4整体结构

def yolo_body(inputs, num_anchors, num_classes):

"""

构建YOLOv4整体结构

获得三个有效特征层,他们的shape分别是:(52,52,256)、(26,26,512)、(13,13,1024)

输出的是3个不同shape的预测张量,包含的是相对GT的偏移和真实类别、得分信息

"""

# 分别获得三个预测分支

feat1, feat2, feat3 = darknet_body(inputs)

# 13,13,1024 -> 13,13,512 -> 13,13,1024 -> 13,13,512 -> 13,13,2048 -> 13,13,512 -> 13,13,1024 -> 13,13,512

P5 = DarknetConv2D_BN_Leaky(512, (1, 1))(feat3)

P5 = DarknetConv2D_BN_Leaky(1024, (3, 3))(P5)

P5 = DarknetConv2D_BN_Leaky(512, (1, 1))(P5)

# 使用了SPP结构,即不同尺度的最大池化后堆叠。

maxpool1 = MaxPooling2D(pool_size=(13, 13), strides=(1, 1), padding='same')(P5)

maxpool2 = MaxPooling2D(pool_size=(9, 9), strides=(1, 1), padding='same')(P5)

maxpool3 = MaxPooling2D(pool_size=(5, 5), strides=(1, 1), padding='same')(P5)

P5 = Concatenate()([maxpool1, maxpool2, maxpool3, P5])

P5 = DarknetConv2D_BN_Leaky(512, (1, 1))(P5)

P5 = DarknetConv2D_BN_Leaky(1024, (3, 3))(P5)

P5 = DarknetConv2D_BN_Leaky(512, (1, 1))(P5)

# 13,13,512 -> 13,13,256 -> 26,26,256

P5_upsample = compose(DarknetConv2D_BN_Leaky(256, (1, 1)), UpSampling2D(2))(P5)

# 26,26,512 -> 26,26,256

P4 = DarknetConv2D_BN_Leaky(256, (1, 1))(feat2)

# 26,26,256 + 26,26,256 -> 26,26,512

P4 = Concatenate()([P4, P5_upsample])

# 26,26,512 -> 26,26,256 -> 26,26,512 -> 26,26,256 -> 26,26,512 -> 26,26,256

P4 = make_five_convs(P4, 256)

# 26,26,256 -> 26,26,128 -> 52,52,128

P4_upsample = compose(DarknetConv2D_BN_Leaky(128, (1, 1)), UpSampling2D(2))(P4)

# 52,52,256 -> 52,52,128

P3 = DarknetConv2D_BN_Leaky(128, (1, 1))(feat1)

# 52,52,128 + 52,52,128 -> 52,52,256

P3 = Concatenate()([P3, P4_upsample])

# 52,52,256 -> 52,52,128 -> 52,52,256 -> 52,52,128 -> 52,52,256 -> 52,52,128

P3 = make_five_convs(P3, 128)

# ---------------------------------------------------#

# 第三个特征层

# y3=(batch_size,52,52,3,85)

# ---------------------------------------------------#

P3_output = DarknetConv2D_BN_Leaky(256, (3, 3))(P3)

P3_output = DarknetConv2D(num_anchors * (num_classes + 5), (1, 1),

kernel_initializer=keras.initializers.RandomNormal(mean=0.0, stddev=0.01))(P3_output)

# 52,52,128 -> 26,26,256

P3_downsample = ZeroPadding2D(((1, 0), (1, 0)))(P3)

P3_downsample = DarknetConv2D_BN_Leaky(256, (3, 3), strides=(2, 2))(P3_downsample)

# 26,26,256 + 26,26,256 -> 26,26,512

P4 = Concatenate()([P3_downsample, P4])

# 26,26,512 -> 26,26,256 -> 26,26,512 -> 26,26,256 -> 26,26,512 -> 26,26,256

P4 = make_five_convs(P4, 256)

# ---------------------------------------------------#

# 第二个特征层

# y2=(batch_size,26,26,3,85)

# ---------------------------------------------------#

P4_output = DarknetConv2D_BN_Leaky(512, (3, 3))(P4)

P4_output = DarknetConv2D(num_anchors * (num_classes + 5), (1, 1),

kernel_initializer=keras.initializers.RandomNormal(mean=0.0, stddev=0.01))(P4_output)

# 26,26,256 -> 13,13,512

P4_downsample = ZeroPadding2D(((1, 0), (1, 0)))(P4)

P4_downsample = DarknetConv2D_BN_Leaky(512, (3, 3), strides=(2, 2))(P4_downsample)

# 13,13,512 + 13,13,512 -> 13,13,1024

P5 = Concatenate()([P4_downsample, P5])

# 13,13,1024 -> 13,13,512 -> 13,13,1024 -> 13,13,512 -> 13,13,1024 -> 13,13,512

P5 = make_five_convs(P5, 512)

# ---------------------------------------------------#

# 第一个特征层

# y1=(batch_size,13,13,3,85)

# ---------------------------------------------------#

P5_output = DarknetConv2D_BN_Leaky(1024, (3, 3))(P5)

P5_output = DarknetConv2D(num_anchors * (num_classes + 5), (1, 1),

kernel_initializer=keras.initializers.RandomNormal(mean=0.0, stddev=0.01))(P5_output)

return Model(inputs, [P5_output, P4_output, P3_output])

三、计算损失误差

(1)调整成真实值

根据YOLOv4目标检测原理可知,YOLOv4网络直接输出的不是目标框的真实位置,而是相对位置。所以,在根据预测值和标签值计算损失之前,需要将YOLOv4网络预测的相对位置转换成真实预测位置,完成这一步,需要进行如下操作:

def yolo_head(feats, anchors, num_classes, input_shape, calc_loss=False):

"""

将yolo_body()输出的预测值的调整成真实值

"""

num_anchors = len(anchors)

# [1, 1, 1, num_anchors, 2]

feats = tf.convert_to_tensor(feats)

anchors_tensor = K.reshape(K.constant(anchors), [1, 1, 1, num_anchors, 2])

# 获得x,y的网格

# (13, 13, 1, 2)

grid_shape = K.shape(feats)[1:3] # height, width

grid_y = K.tile(K.reshape(K.arange(0, stop=grid_shape[0]), [-1, 1, 1, 1]),

[1, grid_shape[1], 1, 1])

grid_x = K.tile(K.reshape(K.arange(0, stop=grid_shape[1]), [1, -1, 1, 1]),

[grid_shape[0], 1, 1, 1])

grid = K.concatenate([grid_x, grid_y])

grid = K.cast(grid, K.dtype(feats))

# 将预测结果调整成(batch_size,13,13,3,85)

# 85可拆分成4 + 1 + 80

# 4代表的是中心宽高的调整参数,1代表的是框的置信度,80代表的是种类的置信度

feats = K.reshape(feats, [-1, grid_shape[0], grid_shape[1], num_anchors, num_classes + 5])

# 将预测值调成真实值:box_xy对应框的中心点,box_wh对应框的宽和高

box_xy = (K.sigmoid(feats[..., :2]) + grid) / K.cast(grid_shape[...,::-1], K.dtype(feats)) # 调整后的x,y

box_wh = K.exp(feats[..., 2:4]) * anchors_tensor / K.cast(input_shape[...,::-1], K.dtype(feats)) # 调整后的w,h

box_confidence = K.sigmoid(feats[..., 4:5]) # 置信度confidence

box_class_probs = K.sigmoid(feats[..., 5:]) # 类别

# 在计算loss的时候返回grid, feats, box_xy, box_wh

# 在预测的时候返回box_xy, box_wh, box_confidence, box_class_probs

if calc_loss == True:

return grid, feats, box_xy, box_wh

return box_xy, box_whTensorFlow2 深度学习实战(十四):YOLOv4目标检测算法解析

TensorFlow2深度学习实战(十七):目标检测算法 Faster R-CNN 实战

TensorFlow2深度学习实战(十七):目标检测算法 Faster R-CNN 实战

TensorFlow2深度学习实战:SSD目标检测算法源码解析