PyTorch learning: layer normalization

Posted by UQI-LIUWJ



```python
torch.nn.LayerNorm(normalized_shape, eps=1e-05, elementwise_affine=True)
```

Parameters:

| normalized_shape | 输入尺寸 (多大的内容进行归一化)【默认是靠右的几个size(后面实例会说明)】 |

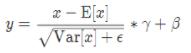

| eps | 为保证数值稳定性(分母不能趋近或取0),给分母加上的值。默认为1e-5。 |

| elementwise_affine | 布尔值,当设为true,给该层添加可学习的仿射变换参数(γ,β)。

|
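The three parameters combine in the formula `y = (x - E[x]) / sqrt(Var[x] + eps) * γ + β`. Here the mean and the biased (population) variance are taken over the trailing `normalized_shape` dimensions. The sketch below re-implements that formula by hand as a cross-check against `torch.nn.LayerNorm`. `manual_layer_norm` is a hypothetical helper written for illustration and is not a PyTorch API. The random input and the [3, 4] shape are arbitrary choices.

```python
import torch

torch.manual_seed(0)

def manual_layer_norm(x, normalized_shape, weight=None, bias=None, eps=1e-5):
    """Hypothetical helper mirroring the LayerNorm formula (not a PyTorch API)."""
    # Reduce over the trailing len(normalized_shape) dims, e.g. (-2, -1) for [3, 4]
    dims = tuple(range(-len(normalized_shape), 0))
    mean = x.mean(dim=dims, keepdim=True)
    # Biased (population) variance: divide by N, not N - 1
    var = ((x - mean) ** 2).mean(dim=dims, keepdim=True)
    y = (x - mean) / torch.sqrt(var + eps)  # eps keeps the denominator away from 0
    if weight is not None:
        y = y * weight  # gamma, same shape as normalized_shape (broadcast over leading dims)
    if bias is not None:
        y = y + bias    # beta, same shape as normalized_shape
    return y

x = torch.randn(2, 3, 4)
ln = torch.nn.LayerNorm(normalized_shape=[3, 4], eps=1e-5, elementwise_affine=True)
print(ln.weight.shape, ln.bias.shape)  # torch.Size([3, 4]) torch.Size([3, 4])

# A fresh layer has gamma = 1 and beta = 0, so it equals the plain normalization
fresh_ok = torch.allclose(ln(x), manual_layer_norm(x, [3, 4]), atol=1e-5)

# Give gamma/beta non-trivial values and compare again
with torch.no_grad():
    ln.weight.uniform_(0.5, 1.5)
    ln.bias.uniform_(-0.5, 0.5)
affine_ok = torch.allclose(ln(x), manual_layer_norm(x, [3, 4], ln.weight, ln.bias), atol=1e-5)
print(fresh_ok, affine_ok)

# An int normalized_shape is treated as a one-element shape (the last dimension)
print(torch.nn.LayerNorm(4).normalized_shape)                  # (4,)
# With elementwise_affine=False the layer has no learnable gamma/beta
print(torch.nn.LayerNorm(4, elementwise_affine=False).weight)  # None
```

Computing the variance as the mean of squared deviations makes the "divide by N" choice explicit. This is the detail that most often causes hand-computed results to disagree with `LayerNorm`.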

Examples

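```python
import torch
import numpy as np

x_test = np.array([[[1, 2, -1, 1], [3, 4, -2, 2]],
                   [[1, 2, -1, 1], [3, 4, -2, 2]]])
# np.array of ints has an integer dtype; LayerNorm needs floating-point input, hence .float()
x_test = torch.from_numpy(x_test).float()
print(x_test)
'''
tensor([[[ 1.,  2., -1.,  1.],
         [ 3.,  4., -2.,  2.]],

        [[ 1.,  2., -1.,  1.],
         [ 3.,  4., -2.,  2.]]])
'''
```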

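```python
# x_test has shape [2, 2, 4]. With normalized_shape=[4], each row x_test[i, j, 0:4]
# (4 elements) is normalized on its own.
m = torch.nn.LayerNorm(normalized_shape=[4])
output = m(x_test)
print(output)
'''
tensor([[[ 0.2294,  1.1471, -1.6059,  0.2294],
         [ 0.5488,  0.9879, -1.6465,  0.1098]],

        [[ 0.2294,  1.1471, -1.6059,  0.2294],
         [ 0.5488,  0.9879, -1.6465,  0.1098]]],
       grad_fn=<NativeLayerNormBackward>)
'''
# (the grad_fn name may differ between PyTorch versions)

# Each normalized row sums to 0, up to floating-point rounding:
print(0.2294 + 1.1471 + -1.6059 + 0.2294)  # -5.551115123125783e-17
print(0.5488 + 0.9879 + -1.6465 + 0.1098)  # -1.249000902703301e-16
```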
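To confirm what `normalized_shape=[4]` does, the sketch below recomputes the first example by hand. It computes one mean and one biased variance per length-4 row, then applies the same normalization. It assumes the defaults `eps=1e-5` and `elementwise_affine=True` with untouched γ = 1 and β = 0, so the affine step changes nothing.

```python
import torch

x_test = torch.tensor([[[1., 2., -1., 1.], [3., 4., -2., 2.]],
                       [[1., 2., -1., 1.], [3., 4., -2., 2.]]])
output = torch.nn.LayerNorm(normalized_shape=[4])(x_test)

# Statistics of each length-4 row x_test[i, j, :] (biased variance, as LayerNorm uses)
row_mean = x_test.mean(dim=-1, keepdim=True)                  # 0.75 for [1, 2, -1, 1], 1.75 for [3, 4, -2, 2]
row_var = ((x_test - row_mean) ** 2).mean(dim=-1, keepdim=True)  # 1.1875 and 5.1875
print(row_mean.squeeze(-1))
print(row_var.squeeze(-1))

# Normalize by hand and compare with the LayerNorm output
manual = (x_test - row_mean) / torch.sqrt(row_var + 1e-5)
print(torch.allclose(output, manual, atol=1e-5))

# Every normalized row has mean ~0 and biased variance ~1 (slightly below 1 because of eps)
out_mean = output.mean(dim=-1)
out_var = output.var(dim=-1, unbiased=False)
print(out_mean)
print(out_var)
```

For example, the first row gives (1 - 0.75) / sqrt(1.1875 + 1e-5) ≈ 0.2294, which matches the first entry of the output above.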

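With normalized_shape=[2, 4], all eight elements of each sample, x_test[i][0][0] through x_test[i][1][3], are normalized together: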

```python
m = torch.nn.LayerNorm(normalized_shape=[2, 4])
output = m(x_test)
print(output)
'''
tensor([[[-0.1348,  0.4045, -1.2136, -0.1348],
         [ 0.9439,  1.4832, -1.7529,  0.4045]],

        [[-0.1348,  0.4045, -1.2136, -0.1348],
         [ 0.9439,  1.4832, -1.7529,  0.4045]]],
       grad_fn=<NativeLayerNormBackward>)
'''

# Individual rows no longer sum to 0; only the whole [2, 4] block of each sample does:
print(-0.1348 + 0.4045 + -1.2136 + -0.1348)  # -1.0787
print(0.9439 + 1.4832 + -1.7529 + 0.4045)    # 1.0787000000000004
print(-0.1348 + 0.4045 + -1.2136 + -0.1348 + 0.9439 + 1.4832 + -1.7529 + 0.4045)  # 1.6653345369377348e-16
```
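For `normalized_shape=[2, 4]`, one mean and one variance are shared by the whole [2, 4] block of each sample. The sketch below checks this in two ways. First, it reduces over dims (-2, -1) by hand. Second, it flattens each sample to 8 features and uses `normalized_shape=[8]`. The flattening comparison is an illustration chosen here and is not part of the original example.

```python
import torch

x_test = torch.tensor([[[1., 2., -1., 1.], [3., 4., -2., 2.]],
                       [[1., 2., -1., 1.], [3., 4., -2., 2.]]])
out_2x4 = torch.nn.LayerNorm(normalized_shape=[2, 4])(x_test)

# One mean/variance per sample x_test[i], computed over all 8 elements
mean = x_test.mean(dim=(-2, -1), keepdim=True)                 # 1.25 for each sample
var = ((x_test - mean) ** 2).mean(dim=(-2, -1), keepdim=True)  # 3.4375 for each sample
manual = (x_test - mean) / torch.sqrt(var + 1e-5)
print(torch.allclose(out_2x4, manual, atol=1e-5))

# Equivalent view: flatten the trailing [2, 4] block into 8 features, normalize, reshape back
flat = torch.nn.LayerNorm(8)(x_test.reshape(2, 8)).reshape(2, 2, 4)
print(torch.allclose(out_2x4, flat, atol=1e-6))

# Per-row sums are about -1.0787 and 1.0787; per-sample sums are about 0
print(out_2x4.sum(dim=-1))
print(out_2x4.sum(dim=(-2, -1)))
```

Because the statistics are pooled over the block, the row [1, 2, -1, 1] is shifted by 1.25 here instead of by its own mean of 0.75. This explains why its normalized values differ from the first example.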
