项目实战2 | 基于Swarm+Prometheus实现双VIP可监控Web高可用集群

Posted chaochao️

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了项目实战2 | 基于Swarm+Prometheus实现双VIP可监控Web高可用集群相关的知识,希望对你有一定的参考价值。

创作不易,来了的客官点点关注,收藏,订阅一键三连❤😜

前言

我是一个即将毕业的大学生,超超。如果你也在学习Linux,python,不妨跟着萌新超超一起学习,拿下Linux,python一起加油,共同努力,拿到理想offer!

系列文章

项目实战1 | 基于iptables的SNAT+DNAT与Docker容器发布的项目

概述

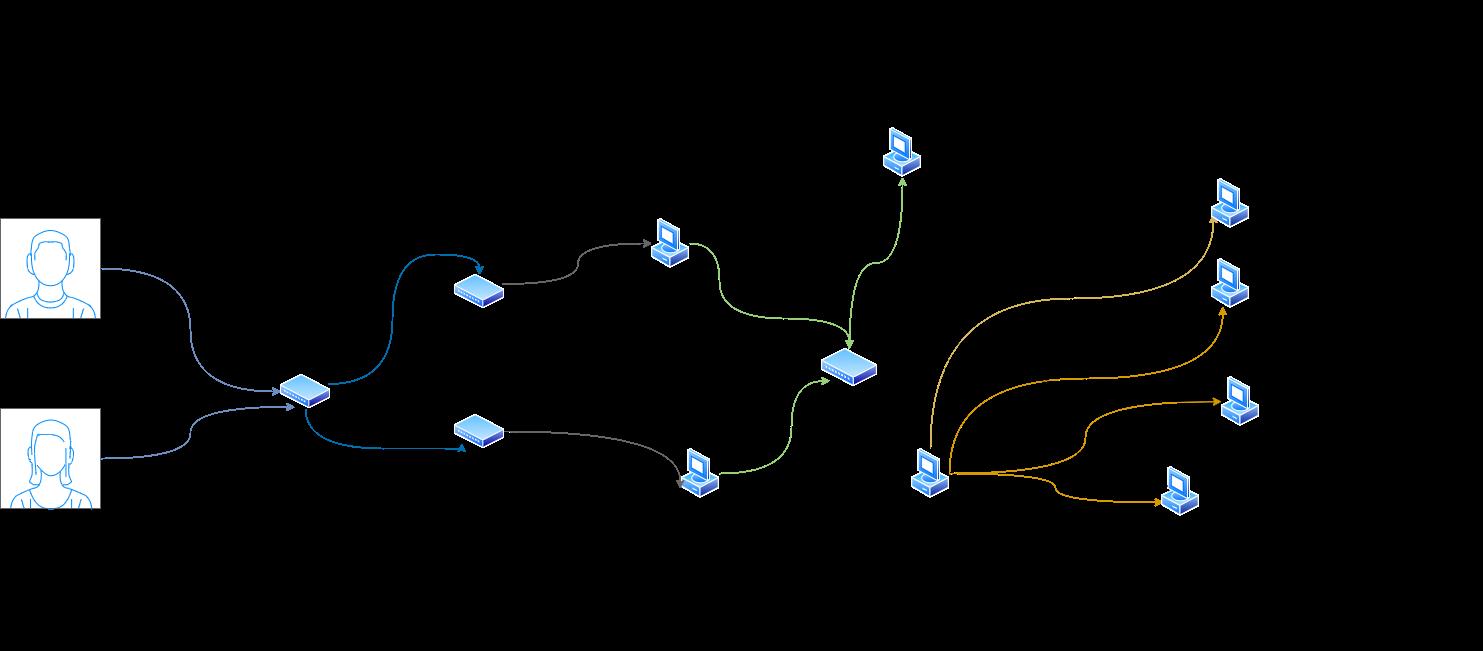

项目实战有利于对已学知识的整合归纳,提高对相关知识的掌握程度,本次项目实战利用swarm+Prometheus实现一个双VIP高可用可监控的Web服务器集群,使用nginx做负载均衡器,同时采用swarm管理的Docker集群并对外提供Web服务,使用keepalived的双vip实现HA,使用Prometheus+Grafana实现对Web服务器的监控。

目录

二、使用swarm实现Web集群部署,实现基于4个节点的swarm集群

三、创建NFS服务服务器为所有的节点提供相同Web数据,使用数据卷,为容器提供一致的Web数据

四、使用Nginx+keepalived实现双VIP负载均衡高可用

五、使用Prometheus实现对swarm集群的监控,结合Grafana成图工具进行数据展示

项目概述

项目名称:基于Swarm+Prometheus实现双VIP可监控Web高可用集群

项目环境:CentOS8(8台,1核2G),Docker(20.10.8),keepalived(2.1.5),Prometheus(2.29.1),Grafana(8.1.2),Nginx(1.14.1)等

项目描述:利用swarm+Prometheus实现一个双VIP高可用可监控的Web服务器集群,使用Nginx做负载均衡器,同时采用swarm管理的Docker集群并对外提供Web服务,使用keepalived的双vip实现HA,使用Prometheus+Grafana实现对Web服务器的监控。

项目步骤

1.规划整个项目的拓扑结构和项目的思维导图

2.使用swarm实现Web集群部署,实现基于4个节点的swarm集群

3.创建NFS服务服务器为所有的节点提供相同Web数据,实现数据一致性

4.使用数据卷,为容器提供一致的Web数据

5.使用Nginx+keepalived实现双VIP负载均衡高可用

6.使用Prometheus实现对swarm集群的监控,结合Grafana成图工具进行数据展示

项目心得

1.通过网络拓补图和思维导图的建立,提高了项目整体的落实和效率

2.对于容器编排工具swarm的使用和集群的部署更为熟悉

3.对于keepalived+nginx实现高可用负载均衡更为了解

4.对于Prometheus+Grafana实现系统监控有了更深的理解

5.对于脑裂现象的出现和解决有了更加清晰的认识

6.通过根据官方文档安装与使用swarm到集群整体的部署,进一步提高了自身的自主学习和troubleshooting能力

项目详细代码

一、规划整个项目的拓扑结构和项目的思维导图

网络拓扑图

思维导图

项目服务器如下:

IP:192.168.232.132 主机名:docker-manager-1 担任角色:swarm manager

IP:192.168.232.133 主机名:docker-2 担任角色:swarm worker node1

IP:192.168.232.134 主机名:docker-3 担任角色:swarm worker node2

IP:192.168.232.131 主机(ubuntu)名:chaochao 担任角色:swarm worker node3

IP:192.168.232.135 主机名:nfs-server 担任角色:nfs服务服务器

IP:192.168.232.136 主机名:load-balancer担任角色:负载均衡器(master)

IP:192.168.232.137 主机名:load-balancer担任角色:负载均衡器(backup)

IP:192.168.232.138 主机名:prometheus-server 担任角色:prometheus-server

二、使用swarm实现Web集群部署,实现基于4个节点的swarm集群

1.部署web服务集群机器环境(四台机器,CentOS8与Ubuntu系统)

IP:192.168.232.132 主机名:docker-manager-1 担任角色:swarm manager

IP:192.168.232.133 主机名:docker-2 担任角色:swarm worker node1

IP:192.168.232.134 主机名:docker-3 担任角色:swarm worker node2

IP:192.168.232.131 主机(ubuntu)名:chaochao 担任角色:swarm worker node3

2.配置hosts文件

swarm manager:

[root@docker-manager-1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.232.132 manager

192.168.232.133 worker1

192.168.232.134 worker2

192.168.232.131 worker3

[root@docker-manager-1 ~]# getenforce

Disabled另外三台worker node操作一致

3.设置防火墙和sellinux

关闭四台机器上的防火墙。

如果是开启防火墙,则需要在所有节点的防火墙上依次放行2377/tcp(管理端口)、7946/udp(节点间通信端口)、4789/udp(overlay 网络端口,容器之间)端口。

[root@docker-manager-1 ~]# systemctl disable firewalld

[root@docker-manager-1 ~]#[root@docker-2 ~]# systemctl disable firewalld

[root@docker-2 ~]#另外两台操作一致,不再赘述。

4.重启docker服务,防止导致网络异常

[root@docker-manager-1 ~]# service docker restart

Redirecting to /bin/systemctl restart docker.service

[root@docker-manager-1 ~]#[root@docker-2 ~]# service docker restart

Redirecting to /bin/systemctl restart docker.service

[root@docker-2 ~]#[root@docker-3 ~]# service docker restart

Redirecting to /bin/systemctl restart docker.service

[root@docker-3 ~]#root@chaochao:~# service docker restart

root@chaochao:~#5.创建swarm集群

对于manager:

# 命令:docker swarm init --advertise-addr manager的IP地址

[root@docker-manager-1 ~]# docker swarm init --advertise-addr 192.168.232.132

Swarm initialized: current node (ooauma1x037wufqkh21uj0j7v) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0200k3nv43fmc6hcuurx8z1iehsqq6uro12qjfeoxrkmk9fmom-1ub4wsmlpl4zhqalzdrgukx3l 192.168.232.132:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

[root@docker-manager-1 ~]#对于三台swarm nodes

输入manager创建的命令: docker swarm join --token ……,此命令在manager创建swarm时会自动生成。

[root@docker-2 ~]# docker swarm join --token SWMTKN-1-0200k3nv43fmc6hcuurx8z1iehsqq6uro12qjfeoxrkmk9fmom-1ub4wsmlpl4zhqalzdrgukx3l 192.168.232.132:2377

This node joined a swarm as a worker.

[root@docker-2 ~]#[root@docker-3 ~]# docker swarm join --token SWMTKN-1-0200k3nv43fmc6hcuurx8z1iehsqq6uro12qjfeoxrkmk9fmom-1ub4wsmlpl4zhqalzdrgukx3l 192.168.232.132:2377

This node joined a swarm as a worker.

[root@docker-3 ~]#root@chaochao:~# docker swarm join --token SWMTKN-1-0200k3nv43fmc6hcuurx8z1iehsqq6uro12qjfeoxrkmk9fmom-1ub4wsmlpl4zhqalzdrgukx3l 192.168.232.132:2377

This node joined a swarm as a worker.

root@chaochao:~#三、创建NFS服务服务器为所有的节点提供相同Web数据,使用数据卷,为容器提供一致的Web数据

1.准备一台服务器担任NFS Server

客户机配置:CentOS8(1核/2G)

IP地址:192.168.232.135

修改好主机名:nfs-server

root@docker-4 ~]# hostnamectl set-hostname nfs-server

[root@docker-4 ~]# su

[root@nfs-server ~]#共享的Web集群服务器信息如下:

IP:192.168.232.132 主机名:docker-manager-1 担任角色:swarm manager

IP:192.168.232.133 主机名:docker-2 担任角色:swarm node1

IP:192.168.232.134 主机名:docker-3 担任角色:swarm node2

IP:192.168.232.131 主机(ubuntu)名:chaochao 担任角色:swarm node3

2.安装和启动nfs服务

[root@nfs-server ~]# yum install nfs-utils -y

[root@nfs-server ~]# service nfs-server start

Redirecting to /bin/systemctl start nfs-server.service

[root@nfs-server ~]#查看nfs服务的进程: ps aux|grep nfs

[root@nfs-server ~]# ps aux|grep nfs

root 2346 0.0 0.1 50108 2672 ? Ss 19:39 0:00 /usr/sbin/nfsdcld

root 2352 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2353 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2354 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2355 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2356 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2357 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2358 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2359 0.0 0.0 0 0 ? S 19:39 0:00 [nfsd]

root 2367 0.0 0.0 12324 1064 pts/0 S+ 19:40 0:00 grep --color=auto nfs

[root@nfs-server ~]#3.通过NFS共享文件

编辑/etc/exports,写好共享的具体目录、权限以及共享的网段、IP。

[root@nfs-server /]# vim /etc/exports

[root@nfs-server /]# cat /etc/exports

/web 192.168.232.0/24(rw,all_squash,sync)

/download 192.168.232.0/24(ro,all_squash,sync)

[root@nfs-server /]#刷新输出文件的列表

[root@nfs-server /]# exportfs -rv

exporting 192.168.232.0/24:/download

exporting 192.168.232.0/24:/web

[root@nfs-server /]#

[root@nfs-server /]# cd /download/

[root@nfs-server download]# ls

[root@nfs-server download]# vim chao.txt

[root@nfs-server download]# ls

chao.txt

[root@nfs-server download]# exportfs -rv

exporting 192.168.232.0/24:/download

exporting 192.168.232.0/24:/web

[root@nfs-server download]#4.关闭防火墙和selinux

[root@nfs-server download]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@nfs-server download]# systemctl disable firewalld

[root@nfs-server download]# getenforce

Disabled

[root@nfs-server download]#5.在客户机上挂载宿主机上的NFS服务器共享的目录

在客户机上安装NFS服务:yum install nfs-utils -y

[root@docker-2 lianxi]# yum install nfs-utils -y查看宿主机共享的目录

[root@docker-2 lianxi]# showmount -e 192.168.232.135

Export list for 192.168.232.135:

/download 192.168.232.0/24

/web 192.168.232.0/24

[root@docker-2 lianxi]#6.挂载nfs-server上的共享目录到客户机

[root@docker-2 lianxi]# mount 192.168.232.135:/web /web

[root@docker-2 lianxi]# cd /web

[root@docker-2 web]# ls

1.jpg index.html rep.html

[root@docker-2 web]#

[root@docker-2 web]# df -Th

文件系统 类型 容量 已用 可用 已用% 挂载点

devtmpfs devtmpfs 876M 0 876M 0% /dev

tmpfs tmpfs 896M 0 896M 0% /dev/shm

tmpfs tmpfs 896M 18M 878M 2% /run

tmpfs tmpfs 896M 0 896M 0% /sys/fs/cgroup

/dev/mapper/cl-root xfs 17G 8.2G 8.9G 48% /

/dev/sda1 xfs 1014M 193M 822M 19% /boot

tmpfs tmpfs 180M 0 180M 0% /run/user/0

overlay overlay 17G 8.2G 8.9G 48% /var/lib/docker/overlay2/c2434295873b6ce0f136d4851cb9a9bf10b1ebf77e80f611841484967b857c94/merged

overlay overlay 17G 8.2G 8.9G 48% /var/lib/docker/overlay2/8b5420179cd05a4a8ea039ba9357f7d59ddfec2fd3f185702a5a0d97883564f2/merged

overlay overlay 17G 8.2G 8.9G 48% /var/lib/docker/overlay2/d9f99510abb4c5d5496e54d11ff763be47610ee7851207aec9fdbb1022f14016/merged

overlay overlay 17G 8.2G 8.9G 48% /var/lib/docker/overlay2/5a87e34567ece3a64a835bcd4cfe59d2ebdf0d36bf74fbd07dff8c82a94f37a2/merged

overlay overlay 17G 8.2G 8.9G 48% /var/lib/docker/overlay2/b70caa1ed0c781711a41cd82a0a91d465a6e02418633bfa00ce398c92405baff/merged

192.168.232.135:/web nfs4 17G 7.8G 9.2G 46% /web

[root@docker-2 web]#[root@docker-manager-1 web]# ls

[root@docker-manager-1 web]# mount 192.168.232.135:/web /web

[root@docker-manager-1 web]# ls

[root@docker-manager-1 web]# cd /web

[root@docker-manager-1 web]# ls

1.jpg index.html rep.html

[root@docker-manager-1 web]# df -Th

……

overlay overlay 17G 8.3G 8.8G 49% /var/lib/docker/overlay2/06cbd339366e8aeb492b21561573d953073e203122262e5089461da0d0d316a0/merged

overlay overlay 17G 8.3G 8.8G 49% /var/lib/docker/overlay2/a132f32c9cff25a1a143e325f2aecd0186630df66748c95984bb3cf2ce9fe8b2/merged

overlay overlay 17G 8.3G 8.8G 49% /var/lib/docker/overlay2/e1efba32267a46940402f682034d07ed51b8ee200186d5acc0c48144cd9fe31e/merged

192.168.232.135:/web nfs4 17G 7.8G 9.2G 46% /web

[root@docker-manager-1 web]#[root@docker-3 web]# showmount 192.168.232.135 -e

Export list for 192.168.232.135:

/download 192.168.232.0/24

/web 192.168.232.0/24

[root@docker-3 web]# mount 192.168.232.135:/web /web

[root@docker-3 web]# cd /web

[root@docker-3 web]# ls

1.jpg index.html rep.html

[root@docker-3 web]# df -TH

文件系统 类型 容量 已用 可用 已用% 挂载点

devtmpfs devtmpfs 919M 0 919M 0% /dev

tmpfs tmpfs 939M 0 939M 0% /dev/shm

tmpfs tmpfs 939M 18M 921M 2% /run

tmpfs tmpfs 939M 0 939M 0% /sys/fs/cgroup

/dev/mapper/cl-root xfs 19G 8.8G 9.6G 48% /

/dev/sda1 xfs 1.1G 202M 862M 19% /boot

tmpfs tmpfs 188M 0 188M 0% /run/user/0

overlay overlay 19G 8.8G 9.6G 48% /var/lib/docker/overlay2/8b5420179cd05a4a8ea039ba9357f7d59ddfec2fd3f185702a5a0d97883564f2/merged

overlay overlay 19G 8.8G 9.6G 48% /var/lib/docker/overlay2/c2434295873b6ce0f136d4851cb9a9bf10b1ebf77e80f611841484967b857c94/merged

overlay overlay 19G 8.8G 9.6G 48% /var/lib/docker/overlay2/6b933ab92577f653a29dcac782a2c5e79bcdbbf219a7ccebb38b585ef117e0b4/merged

overlay overlay 19G 8.8G 9.6G 48% /var/lib/docker/overlay2/40cc77a0f1c7281915947c1fefeb595837eb75fffec0d808a9994ac1fbde5f90/merged

192.168.232.135:/web nfs4 19G 8.4G 9.9G 46% /web

[root@docker-3 web]#root@chaochao:~# mkdir /web

root@chaochao:~# mount 192.168.232.135:/web /web

root@chaochao:~# cd /web

root@chaochao:/web# ls

1.jpg index.html rep.html

root@chaochao:/web# df -Th

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 433M 0 433M 0% /dev

tmpfs tmpfs 96M 1.6M 94M 2% /run

/dev/mapper/ubuntu--vg-ubuntu--lv ext4 19G 6.9G 11G 39% /

tmpfs tmpfs 477M 0 477M 0% /dev/shm

tmpfs tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs tmpfs 477M 0 477M 0% /sys/fs/cgroup

/dev/sda2 ext4 976M 202M 707M 23% /boot

/dev/loop1 squashfs 70M 70M 0 100% /snap/lxd/19188

/dev/loop3 squashfs 71M 71M 0 100% /snap/lxd/21029

/dev/loop2 squashfs 56M 56M 0 100% /snap/core18/2074

/dev/loop4 squashfs 33M 33M 0 100% /snap/snapd/12704

/dev/loop5 squashfs 32M 32M 0 100% /snap/snapd/10707

/dev/loop6 squashfs 56M 56M 0 100% /snap/core18/2128

tmpfs tmpfs 96M 0 96M 0% /run/user/1000

overlay overlay 19G 6.9G 11G 39% /var/lib/docker/overlay2/a2d7dea3b856302cf61d9584be91aa69614a8b25db3a7b6c91317d71a6d68a3c/merged

overlay overlay 19G 6.9G 11G 39% /var/lib/docker/overlay2/c6188638a4df298b840dce222da041733de01e636362c34c4c0e34cec9a34e08/merged

overlay overlay 19G 6.9G 11G 39% /var/lib/docker/overlay2/e94850d48ea1962457edacef2d09cfaa838fad8b9899d4455c9b31caa11c07e1/merged

192.168.232.135:/web nfs4 17G 7.8G 9.2G 46% /web

root@chaochao:/web#7.使用nfs服务服务器共享的文件,通过volume数据卷是否共享文件成功

命令:docker service create --name nfs-service-1 --mount 'type=volume,source=nfs-volume,target=/usr/share/nginx/html,volume-driver=local,volume-opt=type=nfs,volume-opt=device=:/web,"volume-opt=o=addr=192.168.232.135,rw,nfsvers=4,async"' --replicas 10 -p 8026:80 nginx:latest

source=nfs-volume --> docker宿主机上的卷的名字

/usr/share/nginx/html -->容器里存放网页的目录

volume-driver=local --> 访问本地的某个目录的

volume-opt=type=nfs --> volume对nfs的支持选项

volume-opt=device=:/web --> 是nfs服务器共享目录

volume-opt=o=addr=192.168.232.135,rw,nfsvers=4,async 挂载具体的nfs服务器的ip地址和选项

--replicas 10 副本的数量

nfsvers=4 --> nfs版本

async --> 异步

[root@docker-manager-1 web]# docker service create --name nfs-service-1 --mount 'type=volume,source=nfs-volume,target=/usr/share/nginx/html,volume-driver=local,volume-opt=type=nfs,volume-opt=device=:/web,"volume-opt=o=addr=192.168.232.135,rw,nfsvers=4,async"' --replicas 10 -p 8026:80 nginx:latest

3ws4b26bo2tgi48czckm6jin7

overall progress: 10 out of 10 tasks

1/10: running [==================================================>]

2/10: running [==================================================>]

3/10: running [==================================================>]

4/10: running [==================================================>]

5/10: running [==================================================>]

6/10: running [==================================================>]

7/10: running [==================================================>]

8/10: running [==================================================>]

9/10: running [==================================================>]

10/10: running [==================================================>]

verify: Service converged

[root@docker-manager-1 web]#

[root@docker-manager-1 ~]# cd /var/lib/docker/volumes/nfs-volume/_data

[root@docker-manager-1 _data]# ls

1.jpg index.html rep.html

[root@docker-manager-1 _data]#

[root@docker-2 ~]# cd /var/lib/docker/volumes/nfs-volume/_data

[root@docker-2 _data]# ls

1.jpg index.html rep.html

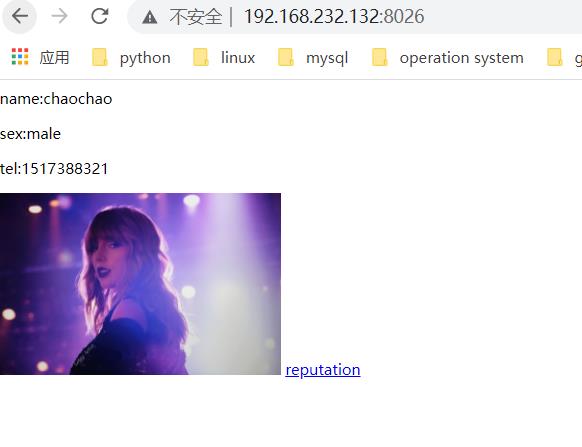

[root@docker-2 _data]#8.访问服务,成功实现文件共享

四、使用Nginx+keepalived实现双VIP负载均衡高可用

首先实现负载均衡,步骤如下:

1.准备两台台客户机作为负载均衡器

IP:192.168.232.136 主机名:load-balancer担任角色:负载均衡器(master)

IP:192.168.232.137 主机名:load-balancer担任角色:负载均衡器(backup)

2.编译脚本

[root@load-balancer ~]# vim onekey_install_lizhichao_nginx_v10.sh

[root@load-balancer ~]# cat onekey_install_lizhichao_nginx_v10.sh

#!/bin/bash

#解决软件的依赖关系,需要安装的软件包

yum -y install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make psmisc net-tools lsof vim wget

#新建chaochao用户和组

id chaochao || useradd chaochao -s /sbin/nologin

#下载nginx软件

mkdir /lzc_load_balancing -p

cd /lzc_load_balancing

wget http://nginx.org/download/nginx-1.21.1.tar.gz

#解压软件

tar xf nginx-1.21.1.tar.gz

#进入解压后的文件夹

cd nginx-1.21.1

#编译前的配置

./configure --prefix=/usr/local/lzc_load_balancing --user=chaochao --group=chaochao --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream

#如果上面的编译前的配置失败,直接退出脚本

if (( $? != 0));then

exit

fi

#编译

make -j 2

#编译安装

make install

#修改PATH变量

echo "PATH=$PATH:/usr/local/lzc_load_balancing/sbin" >>/root/.bashrc

#执行修改了环境变量的脚本

source /root/.bashrc

#firewalld and selinux

#stop firewall和设置下次开机不启动firewalld

service firewalld stop

systemctl disable firewalld

#临时停止selinux和永久停止selinux

setenforce 0

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

#开机启动

chmod +x /etc/rc.d/rc.local

echo "/usr/local/lzc_load_balancing/sbin/nginx" >>/etc/rc.local

[root@load-balancer ~]#3.安装运行脚本

[root@load-balacer ~]# bash onekey_install_lizhichao_nginx_v10.sh

……

test -d '/usr/local/lzc_load_balancing/logs' \\

|| mkdir -p '/usr/local/lzc_load_balancing/logs'

make[1]: 离开目录“/lzc_load_balancing/nginx-1.21.1”

Redirecting to /bin/systemctl stop firewalld.service

[root@load-balancer ~]#4.启动nginx

命令:

nginx 启动nginx

nginx -s stop 关闭nginx

[root@load-balancer nginx-1.21.1]# nginx

[root@load-balancer nginx-1.21.1]#

[root@load-balancer nginx-1.21.1]# ps aux|grep nginx

root 9301 0.0 0.2 119148 2176 ? Ss 18:20 0:00 nginx: master process nginx

nginx 9302 0.0 0.9 151824 7912 ? S 18:20 0:00 nginx: worker process

root 9315 0.0 0.1 12344 1108 pts/0 S+ 18:21 0:00 grep --color=auto nginx

[root@load-banlancer nginx-1.21.1]# ss -anplut|grep nginx

tcp LISTEN 0 128 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=9302,fd=9),("nginx",pid=9301,fd=9))

tcp LISTEN 0 128 [::]:80 [::]:* users:(("nginx",pid=9302,fd=10),("nginx",pid=9301,fd=10))

[root@load-banlancer nginx-1.21.1]#5.配置nginx里的负载均衡功能

[root@load-balancer nginx-1.21.1]# cd /usr/local/lzc_load_balancing/

[root@load-balancer lzc_load_balancing]# ls

conf html logs sbin

[root@load-balancer lzc_load_balancing]# cd conf/

[root@load-balancer conf]# ls

fastcgi.conf fastcgi_params.default mime.types nginx.conf.default uwsgi_params

fastcgi.conf.default koi-utf mime.types.default scgi_params uwsgi_params.default

fastcgi_params koi-win nginx.conf scgi_params.default win-utf

[root@load-balancer conf]# vim nginx.conf

[root@load-balancer conf]# cat nginx.conf #以下仅显示修改了的脚本部分

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

upstream chaoweb{ #定义一个负载均衡器的名字为:chaoweb

server 192.168.232.132:8026;

server 192.168.232.131:8026;

server 192.168.232.133:8026;

server 192.168.232.134:8026;

}

server {

listen 80;

server_name www.lizhichao.com; #设置域名为www.sc.com

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

server 192.168.232.134:8026;

}

server {

listen 80;

server_name www.lizhichao.com; #设置域名为www.sc.com

location /{

proxy_pass http://chaoweb; #调用负载均衡器

}

[root@load-balancer conf]# nginx -s reload # 重新加载配置文件

[root@load-banlancer conf]# ps aux|grep nginx

root 9301 0.0 1.2 120068 9824 ? Ss 18:20 0:00 nginx: master process nginx

nginx 9395 0.1 1.0 152756 8724 ? S 19:16 0:00 nginx: worker process

root 9397 0.0 0.1 12344 1044 pts/0 S+ 19:18 0:00 grep --color=auto nginx

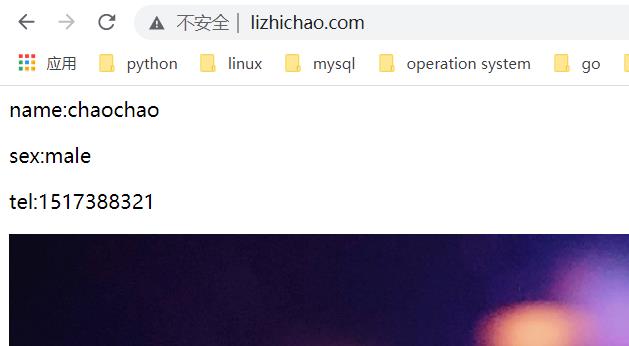

[root@load-balancer conf]#6.在Windows添加IP映射并查看效果

修改windows的hosts文件,点击此处查看方法

在C:\\Windows\\System32\\drivers\\etc的hosts文件

在swarm集群上查看。

[root@docker-manager-1 ~]# vim /etc/hosts

[root@docker-manager-1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.232.132 manager

192.168.232.133 worker1

192.168.232.134 worker2

192.168.232.131 worker3

192.168.232.136 www.lizhichao.com

[root@docker-manager-1 ~]# curl www.lizhichao.com

<html>

<head>

<title>chaochao</title>

</head>

<body>

<p>name:chaochao</p>

<p>sex:male</p>

<p>tel:1517388321</p>

<img src=1.jpg>

<a href=rep.html>reputation</a>

</body>

</html>

[root@docker-manager-1 ~]#7.查看负载均衡的分配情况

用抓包工具来查看:tcpdump

[root@load-balancer ~]# yum install tcpdump -y另一台负载均衡器操作一致,不再赘述。(同时也可以通过克隆客户机快速实现两台负载均衡

接下来展示使用keepalived实现双VIP高可用的步骤:

1.安装keepalived

命令:yum install keepalived -y

[root@load-balancer ~]# yum install keepalived -y[root@load-balancer-2 ~]# yum install keepalived -y2.配置keepalived.conf文件

对于单VIP下的master:

[root@load-balancer ~]# vim /etc/keepalived/keepalived.conf

[root@load-balancer ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 168

priority 220

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.232.168

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

virtual_router_id 169

priority 180

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.232.169

}

}

[root@load-balancer ~]#对于单VIP下的backup:

[root@load-balancer-2 ~]# vim /etc/keepalived/keepalived.conf

[root@load-balancer-2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 168

priority 130

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.232.168

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 169

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.232.169

}

}

[root@load-balancer-2 ~]#2.重启keepalived服务

对于单VIP下的master:

[root@load-balancer ~]# service keepalived restart

Redirecting to /bin/systemctl restart keepalived.service

[root@load-balancer ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9a:d6:b6 brd ff:ff:ff:ff:ff:ff

inet 192.168.232.136/24 brd 192.168.232.255 scope global dynamic noprefixroute ens33

valid_lft 1374sec preferred_lft 1374sec

inet 192.168.232.168/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::b4cd:b005:c610:7b3b/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::40fb:5be0:b6f9:b063/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::2513:c641:3555:5eeb/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

[root@load-balancer ~]#对于单VIP下的backup:

[root@load-balancer-2 ~]# service keepalived restart

Redirecting to /bin/systemctl restart keepalived.service

[root@load-balancer-2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:04:e5:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.232.137/24 brd 192.168.232.255 scope global dynamic noprefixroute ens33

valid_lft 1435sec preferred_lft 1435sec

inet 192.168.232.169/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::b4cd:b005:c610:7b3b/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::40fb:5be0:b6f9:b063/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::2513:c641:3555:5eeb/64 scope link dadfailed tentative noprefixroute

valid_lft forever preferred_lft forever

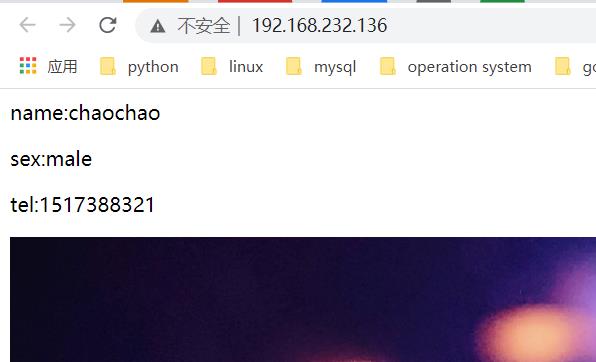

[root@load-balancer-2 ~]#3.测试是否成功实现

[root@load-balancer-2 ~]# nginx

[root@load-balancer-2 ~]# curl 192.168.232.169

<html>

<head>

<title>chaochao</title>

</head>

<body>

<p>name:chaochao</p>

<p>sex:male</p>

<p>tel:1517388321</p>

<img src=1.jpg>

<a href=rep.html>reputation</a>

</body>

</html>

[root@load-balancer-2 ~]#五、使用Prometheus实现对swarm集群的监控,结合Grafana成图工具进行数据展示

1.环境部署

IP:192.168.232.138 主机名:prometheus-server 担任角色:prometheus-server

IP:192.168.232.136 主机名:load-balancer担任角色:负载均衡器(master)

IP:192.168.232.137 主机名:load-balancer担任角色:负载均衡器(backup)

IP:192.168.232.132 主机名:docker-manager-1 担任角色:swarm manager

IP:192.168.232.133 主机名:docker-2 担任角色:swarm worker node1

IP:192.168.232.134 主机名:docker-3 担任角色:swarm worker node2

IP:192.168.232.131 主机(ubuntu)名:chaochao 担任角色:swarm worker node3

IP:192.168.232.135 主机名:nfs-server 担任角色:nfs服务器

2.下载Prometheus安装包并解压到服务器

[root@prometheus-server ~]# mkdir /prometheus

[root@prometheus-server ~]# cp prometheus-2.29.1.linux-amd64.tar.gz /prometheus/

[root@prometheus-server ~]# cd /prometheus

[root@prometheus-server prometheus]# ls

prometheus-2.29.1.linux-amd64.tar.gz

[root@prometheus-server prometheus]# tar xf prometheus-2.29.1.linux-amd64.tar.gz

[root@prometheus-server prometheus]# ls

prometheus-2.29.1.linux-amd64 prometheus-2.29.1.linux-amd64.tar.gz

[root@prometheus-server prometheus]# cd prometheus-2.29.1.linux-amd64

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ls

console_libraries consoles LICENSE NOTICE prometheus prometheus.yml promtool3.修改环境变量

[root@prometheus-server prometheus-2.29.1.linux-amd64]# PATH=$PATH:/prometheus/prometheus-2.29.1.linux-amd64

[root@prometheus-server prometheus-2.29.1.linux-amd64]# which prometheus

/prometheus/prometheus-2.29.1.linux-amd64/prometheus

[root@prometheus-server prometheus-2.29.1.linux-amd64]#问题:误输入PATH命令导致ls,vim等识别不了

解决方法:输入命令:export PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin

TIPS:

此步骤可以添加环境变量到.bashrc文件

[root@prometheus-server prometheus-2.29.1.linux-amd64]# cat /root/.bashrc

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/local/lzc_load_balancing/sbin

PATH=$PATH:/prometheus/prometheus-2.29.1.linux-amd64

[root@prometheus-server prometheus-2.29.1.linux-amd64]#4.启动Prometheus

命令:./prometheus --config.file=prometheus.yml

后台运行命令:nohup ./prometheus --config.file=prometheus.yml &

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ./prometheus --config.file=prometheus.yml

level=info ts=2021-08-25T12:00:48.976Z caller=main.go:390 msg="No time or size retention was set so using the default time retention" duration=15d

level=info ts=2021-08-25T12:00:48.977Z caller=main.go:428 msg="Starting Prometheus" version="(version=2.29.1, branch=HEAD, revision=dcb07e8eac34b5ea37cd229545000b857f1c1637)"

level=info ts=2021-08-25T12:00:48.977Z caller=main.go:433 build_context="(go=go1.16.7, user=root@364730518a4e, date=20210811-14:48:27)"5.查看Prometheus进程

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ps aux|grep prometheus

root 1677 2.6 8.1 780596 64948 pts/0 Sl+ 20:00 0:01 ./prometheus --config.file=prometheus.yml

root 1733 0.0 0.1 12344 1060 pts/1 R+ 20:01 0:00 grep --color=auto prometheus

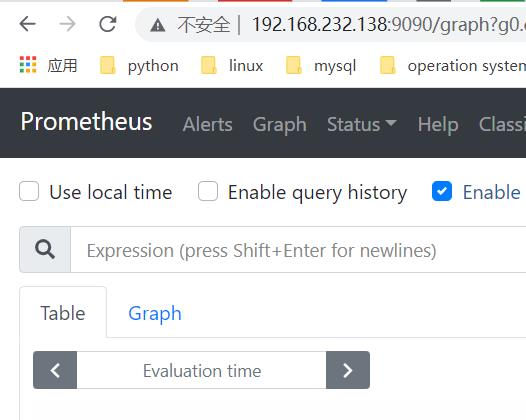

[root@prometheus-server prometheus-2.29.1.linux-amd64]#6.访问Prometheus

http://localhost:9090/graph --》web界面的

http://localhost:9090/metrics ---》查看prometheus的各种指标

接下来在被监控的服务器上安装exporter

在swarm manager上安装exporter为例

1.安装node_exporter

[root@docker-manager-1 ~]# mkdir /exporter

[root@docker-manager-1 ~]# cd /exporter/

[root@docker-manager-1 exporter]# ls

[root@docker-manager-1 exporter]# ls

node_exporter-1.2.2.linux-amd64.tar.gz

[root@docker-manager-1 exporter]#2.运行exporter

命令:nohup ./node_exporter --web.listen-address="0.0.0.0:9100" &

[root@docker-manager-1 node_exporter-1.2.2.linux-amd64]# nohup ./node_exporter --web.listen-address="0.0.0.0:9100" &

[1] 120539

[root@docker-manager-1 node_exporter-1.2.2.linux-amd64]# nohup: 忽略输入并把输出追加到'nohup.out'

[root@docker-manager-1 node_exporter-1.2.2.linux-amd64]# ps aux|grep exporter

root 120539 0.0 0.6 716436 11888 pts/0 Sl 11:23 0:00 ./node_exporter --web.listen-address=0.0.0.0:9100

root 120551 0.0 0.0 12324 996 pts/0 S+ 11:23 0:00 grep --color=auto exporter

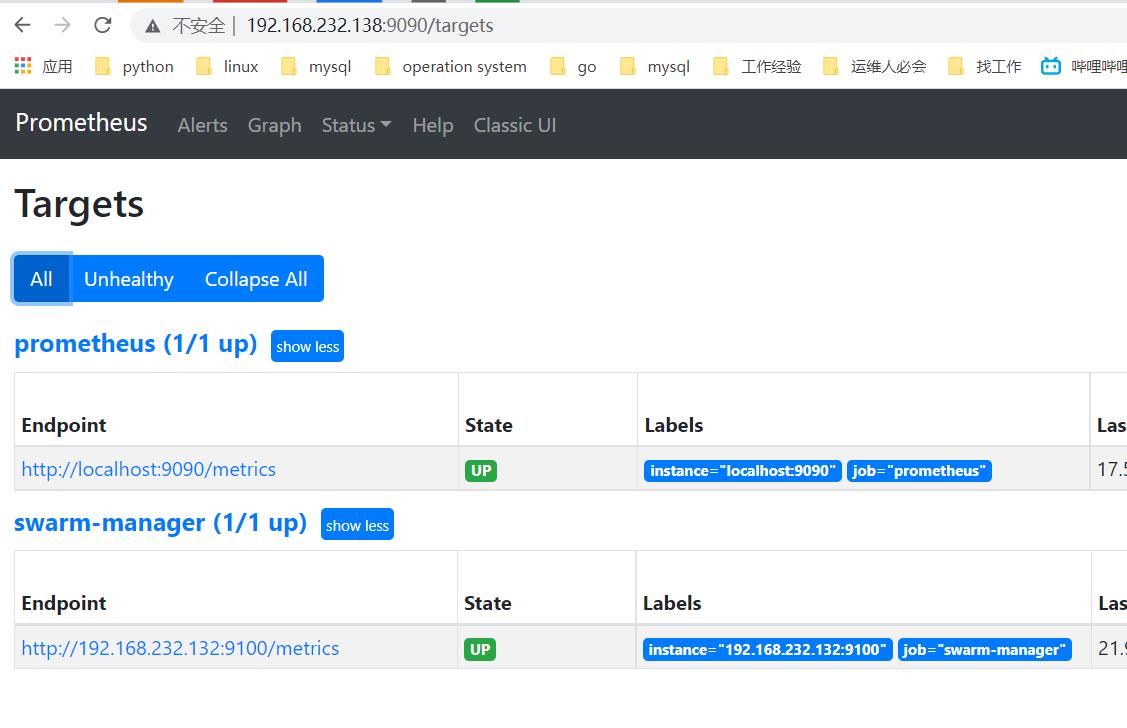

[root@docker-manager-1 node_exporter-1.2.2.linux-amd64]#3.在Prometheus-server上面修改Prometheus.yml文件

[root@prometheus-server prometheus-2.29.1.linux-amd64]# vim prometheus.yml

[root@prometheus-server prometheus-2.29.1.linux-amd64]# cat prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: "prometheus"

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ["localhost:9090"]

- job_name: "swarm-manager"

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ["192.168.232.132:9100"]

4.重启Prometheus服务

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ps aux|grep prome

root 1677 0.0 5.6 1044504 44944 ? Sl 05:49 0:18 ./prometheus --config.file=prometheus.yml

root 2552 0.0 0.1 12344 1076 pts/1 R+ 11:27 0:00 grep --color=auto prome

[root@prometheus-server prometheus-2.29.1.linux-amd64]# kill -9 1677

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ps aux|grep prome

root 2556 0.0 0.1 12344 1196 pts/1 R+ 11:28 0:00 grep --color=auto prome

[root@prometheus-server prometheus-2.29.1.linux-amd64]# nohup ./prometheus --config.file=prometheus.yml &

[1] 2640

[root@prometheus-server prometheus-2.29.1.linux-amd64]# nohup: 忽略输入并把输出追加到'nohup.out'

^C

[root@prometheus-server prometheus-2.29.1.linux-amd64]# ps aux|grep prom

root 2640 4.0 11.5 782384 92084 pts/1 Sl 11:38 0:00 ./prometheus --config.file=prometheus.yml

root 2648 0.0 0.1 12344 1044 pts/1 S+ 11:38 0:00 grep --color=auto prom5.访问Prometheus

其他三个工具节点操作一致,在这里不再赘述

接下来在Prometheus server进行grafana的安装部署

1.创建grafana.repo文件

[root@prometheus-server yum.repos.d]# vim grafana.repo

[root@prometheus-server yum.repos.d]# cat grafana.repo

[grafana]

name=grafana

baseurl=https://packages.grafana.com/enterprise/rpm

repo_gpgcheck=1

enabled=1

gpgcheck=1

gpgkey=https://packages.grafana.com/gpg.key

sslverify=1

sslcacert=/etc/pki/tls/certs/ca-bundle.crt

[root@prometheus-server yum.repos.d]#2.安装grafana

[root@prometheus-server yum.repos.d]# yum install grafana -y3.运行grafana server

[root@prometheus-server yum.repos.d]# systemctl start grafana-server

[root@prometheus-server yum.repos.d]#

[root@prometheus-server yum.repos.d]# ps aux|grep grafana

root 3019 0.0 0.1 169472 800 ? Ss 14:34 0:00 gpg-agent --homedir /var/cache/dnf/grafana-ee12c6ab2813e349/pubring --use-standard-socket --daemon

grafana 3553 4.0 10.0 1302412 80140 ? Ssl 14:36 0:01 /usr/sbin/grafana-server --config=/etc/grafana/grafana.ini --pidfile=/var/run/grafana/grafana-server.pid --packaging=rpm cfg:default.paths.logs=/var/log/grafana cfg:default.paths.data=/var/lib/grafana cfg:default.paths.plugins=/var/lib/grafana/plugins cfg:default.paths.provisioning=/etc/grafana/provisioning

root 3563 0.0 0.1 12344 1192 pts/4 R+ 14:37 0:00 grep --color=auto grafana

[root@prometheus-server yum.repos.d]#

[root@prometheus-server yum.repos.d]# ss -anplut|grep grafana

tcp LISTEN 0 128 *:3000 *:* users:(("grafana-server",pid=3553,fd=8))

[root@prometheus-server yum.repos.d]#

[root@prometheus-server yum.repos.d]# grafana-server -v

Version 8.1.2 (commit: 103f8fa094, branch: HEAD)

[root@prometheus-server yum.repos.d]#4.访问grafana以上是关于项目实战2 | 基于Swarm+Prometheus实现双VIP可监控Web高可用集群的主要内容,如果未能解决你的问题,请参考以下文章