Relevance Vector Machine (RVM) - MATLAB

Posted by 这是一个很随便的名字


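Main features

- RVM models for binary classification (RVC) or regression (RVR)

- Multiple kernel functions (linear, Gaussian, polynomial, sigmoid, Laplacian)

- Hybrid kernel functions (K = w1×K1 + w2×K2 + ... + wn×Kn)

- Parameter optimization using Bayesian optimization, a genetic algorithm, or particle swarm optimization

- This version of the code is not compatible with MATLAB versions lower than R2016b.

- See the demos for detailed applications.

- This code is for reference only.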

Usage examples

01. Classification using RVM (RVC)

A demo of classification using RVM

clc

clear all

close all

addpath(genpath(pwd))

% use fisheriris dataset

load fisheriris

inds = ~strcmp(species, 'setosa');

data_ = meas(inds, 3:4);

label_ = species(inds);

cvIndices = crossvalind('HoldOut', length(data_), 0.3);

trainData = data_(cvIndices, :);

trainLabel = label_(cvIndices, :);

testData = data_(~cvIndices, :);

testLabel = label_(~cvIndices, :);

% kernel function

kernel = Kernel('type', 'gaussian', 'gamma', 0.2);

% parameter

parameter = struct('display', 'on',...
    'type', 'RVC',...
    'kernelFunc', kernel);

rvm = BaseRVM(parameter);

% RVM model training, testing, and visualization

rvm.train(trainData, trainLabel);

results = rvm.test(testData, testLabel);

rvm.draw(results)

Output:

*** RVM model (classification) train finished ***

running time = 0.1604 seconds

iterations = 20

number of samples = 70

number of RVs = 2

ratio of RVs = 2.8571%

accuracy = 94.2857%

*** RVM model (classification) test finished ***

running time = 0.0197 seconds

number of samples = 30

accuracy = 96.6667%

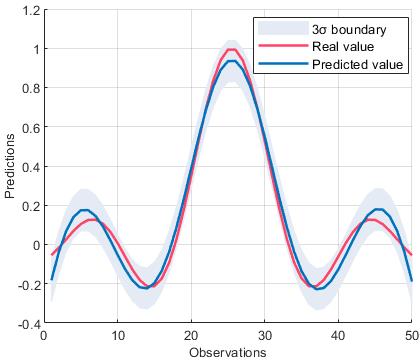

02. Regression using RVM (RVR)

A demo of regression using RVM

clc

clear all

close all

addpath(genpath(pwd))

% sinc function

load sinc_data

trainData = x;

trainLabel = y;

testData = xt;

testLabel = yt;

% kernel function

kernel = Kernel('type', 'gaussian', 'gamma', 0.1);

% parameter

parameter = struct('display', 'on',...
    'type', 'RVR',...
    'kernelFunc', kernel);

rvm = BaseRVM(parameter);

% RVM model training, testing, and visualization

rvm.train(trainData, trainLabel);

results = rvm.test(testData, testLabel);

rvm.draw(results)

Output:

*** RVM model (regression) train finished ***

running time = 0.1757 seconds

iterations = 76

number of samples = 100

number of RVs = 6

ratio of RVs = 6.0000%

RMSE = 0.1260

R2 = 0.8821

MAE = 0.0999

*** RVM model (regression) test finished ***

running time = 0.0026 seconds

number of samples = 50

RMSE = 0.1424

R2 = 0.8553

MAE = 0.1106

03. Kernel functions

A class named Kernel is defined to compute the kernel matrix.

%{

type -

linear : k(x,y) = x'*y

polynomial : k(x,y) = (γ*x'*y+c)^d

gaussian : k(x,y) = exp(-γ*||x-y||^2)

sigmoid : k(x,y) = tanh(γ*x'*y+c)

laplacian : k(x,y) = exp(-γ*||x-y||)

degree - d

offset - c

gamma - γ

%}

kernel = Kernel('type', 'gaussian', 'gamma', value);

kernel = Kernel('type', 'polynomial', 'degree', value);

kernel = Kernel('type', 'linear');

kernel = Kernel('type', 'sigmoid', 'gamma', value);

kernel = Kernel('type', 'laplacian', 'gamma', value);
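To make the Gaussian formula listed above concrete, here is a minimal standalone sketch that builds the same kind of matrix by hand. It does not use the toolbox's Kernel class and is not necessarily how that class computes the result. The variable names are illustrative, and the implicit expansion it relies on needs R2016b or later.

```matlab
% Sketch only: Gaussian kernel matrix computed without the Kernel class.
X = rand(5, 2);           % 5 samples, 2 features
Y = rand(3, 2);           % 3 samples, 2 features
gamma = 2;

% Squared Euclidean distance for every pair: ||x||^2 + ||y||^2 - 2*x'*y
sqDist = sum(X.^2, 2) + sum(Y.^2, 2)' - 2 * (X * Y');
sqDist = max(sqDist, 0);  % guard against tiny negative round-off

% k(x,y) = exp(-gamma*||x-y||^2); one row per row of X, one column per row of Y
K = exp(-gamma * sqDist);
disp(size(K))
```

Every entry lies in (0, 1], and an entry reaches 1 only when the two samples coincide.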

For example, compute the kernel matrix between X and Y:

X = rand(5, 2);

Y = rand(3, 2);

kernel = Kernel('type', 'gaussian', 'gamma', 2);

kernelMatrix = kernel.computeMatrix(X, Y);

>> kernelMatrix

kernelMatrix =

0.5684 0.5607 0.4007

0.4651 0.8383 0.5091

0.8392 0.7116 0.9834

0.4731 0.8816 0.8052

0.5034 0.9807 0.7274

04. Hybrid kernel

A demo of regression using RVM with a hybrid kernel (K = w1×K1 + w2×K2 + ... + wn×Kn)
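As a rough illustration of the weighted sum in the formula above, the following sketch combines a Gaussian and a polynomial kernel matrix by hand. It is independent of the toolbox. The polynomial gamma and offset values are assumptions made for the example, not the toolbox defaults.

```matlab
% Sketch only: hybrid kernel K = w1*K1 + w2*K2, computed without the toolbox.
X = rand(6, 2);                      % 6 samples, 2 features
w = [0.5, 0.5];                      % kernel weights

% K1: Gaussian kernel exp(-gamma*||x-y||^2) with gamma = 0.3
sqDist = max(sum(X.^2, 2) + sum(X.^2, 2)' - 2 * (X * X'), 0);
K1 = exp(-0.3 * sqDist);

% K2: polynomial kernel (gamma*x'*y + c)^d
% gammaPoly = 1 and c = 0 are assumed values chosen for this example
gammaPoly = 1;
c = 0;
d = 2;
K2 = (gammaPoly * (X * X') + c).^d;

% Weighted sum of the two 6x6 kernel matrices
K = w(1) * K1 + w(2) * K2;
disp(size(K))
```

Because both component matrices are symmetric, the combined matrix is symmetric as well.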

clc

clear all

close all

addpath(genpath(pwd))

% sinc function

load sinc_data

trainData = x;

trainLabel = y;

testData = xt;

testLabel = yt;

% kernel function

kernel_1 = Kernel('type', 'gaussian', 'gamma', 0.3);

kernel_2 = Kernel('type', 'polynomial', 'degree', 2);

kernelWeight = [0.5, 0.5];

% parameter

parameter = struct('display', 'on',...
    'type', 'RVR',...
    'kernelFunc', [kernel_1, kernel_2],...
    'kernelWeight', kernelWeight);

rvm = BaseRVM(parameter);

% RVM model training, testing, and visualization

rvm.train(trainData, trainLabel);

results = rvm.test(testData, testLabel);

rvm.draw(results)

05. Parameter optimization for single-kernel RVM

A demo of an RVM model with parameter optimization

clc

clear all

close all

addpath(genpath(pwd))

% use fisheriris dataset

load fisheriris

inds = ~strcmp(species, 'setosa');

data_ = meas(inds, 3:4);

label_ = species(inds);

cvIndices = crossvalind('HoldOut', length(data_), 0.3);

trainData = data_(cvIndices, :);

trainLabel = label_(cvIndices, :);

testData = data_(~cvIndices, :);

testLabel = label_(~cvIndices, :);

% kernel function

kernel = Kernel('type', 'gaussian', 'gamma', 5);

% parameter optimization

opt.method = 'bayes'; % bayes, ga, pso

opt.display = 'on';

opt.iteration = 20;

% parameter

parameter = struct('display', 'on',...
    'type', 'RVC',...
    'kernelFunc', kernel,...
    'optimization', opt);

rvm = BaseRVM(parameter);

% RVM model training, testing, and visualization

rvm.train(trainData, trainLabel);

% Note: this demo evaluates on the training set, so the test report covers the same 70 samples

results = rvm.test(trainData, trainLabel);

rvm.draw(results)

Output:

*** RVM model (classification) train finished ***

running time = 13.3356 seconds

iterations = 88

number of samples = 70

number of RVs = 4

ratio of RVs = 5.7143%

accuracy = 97.1429%

Optimized parameter table

gaussian_gamma

______________

7.8261

*** RVM model (classification) test finished ***

running time = 0.0195 seconds

number of samples = 70

accuracy = 97.1429%

06. Parameter optimization for hybrid-kernel RVM

A demo of a hybrid-kernel RVM model with parameter optimization

%{

A demo for hybrid-kernel RVM model with Parameter Optimization

%}

clc

clear all

close all

addpath(genpath(pwd))

% data

load UCI_data

trainData = x;

trainLabel = y;

testData = xt;

testLabel = yt;

% kernel function

kernel_1 = Kernel('type', 'gaussian', 'gamma', 0.5);

kernel_2 = Kernel('type', 'polynomial', 'degree', 2);

% parameter optimization

opt.method = 'bayes'; % bayes, ga, pso

opt.display = 'on';

opt.iteration = 30;

% parameter

parameter = struct('display', 'on',...
    'type', 'RVR',...
    'kernelFunc', [kernel_1, kernel_2],...
    'optimization', opt);

rvm = BaseRVM(parameter);

% RVM model training, testing, and visualization

rvm.train(trainData, trainLabel);

results = rvm.test(testData, testLabel);

rvm.draw(results)

Output:

*** RVM model (regression) train finished ***

running time = 24.4042 seconds

iterations = 377

number of samples = 264

number of RVs = 22

ratio of RVs = 8.3333%

RMSE = 0.4864

R2 = 0.7719

MAE = 0.3736

Optimized parameter 1×6 table

    gaussian_gamma    polynomial_gamma    polynomial_offset    polynomial_degree    gaussian_weight    polynomial_weight
    ______________    ________________    _________________    _________________    _______________    _________________

    22.315            13.595              44.83                6                    0.042058           0.95794

*** RVM model (regression) test finished ***

running time = 0.0008 seconds

number of samples = 112

RMSE = 0.7400

R2 = 0.6668

MAE = 0.4867

07. Cross-validation

This code supports two cross-validation methods: 'K-Folds' and 'Holdout'. For example, 5-fold cross-validation is specified as follows:

parameter = struct('display', 'on',...
    'type', 'RVC',...
    'kernelFunc', kernel,...
    'KFold', 5);

For example, Holdout cross-validation with a ratio of 0.3 is specified as follows:

parameter = struct('display', 'on',...
    'type', 'RVC',...
    'kernelFunc', kernel,...
    'HoldOut', 0.3);
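For readers unfamiliar with what a K-fold split does to the data, here is a minimal sketch that assigns samples to 5 folds by hand. It does not show how the toolbox partitions data internally. The sample count and variable names are assumptions chosen for illustration.

```matlab
% Sketch only: manual 5-fold index assignment, independent of the toolbox.
numSamples = 70;                      % assumed training-set size
numFolds = 5;
rng(0);                               % fix the seed for repeatability

% Shuffle the sample indices, then deal them round-robin into folds 1..numFolds
shuffled = randperm(numSamples);
foldId = zeros(numSamples, 1);
foldId(shuffled) = mod(0:numSamples-1, numFolds) + 1;

for k = 1:numFolds
    valIdx = (foldId == k);           % samples held out in this round
    trainIdx = ~valIdx;               % samples used for training in this round
    fprintf('fold %d: %d train, %d validation\n', k, sum(trainIdx), sum(valIdx));
end
```

Each sample is held out exactly once, so averaging the per-fold scores uses all of the data for validation.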

08. Others

%% custom optimization option

%{

opt.method = 'bayes'; % bayes, ga, pso

opt.display = 'on';

opt.iteration = 20;

opt.point = 10;

% gaussian kernel function

opt.gaussian.parameterName = {'gamma'};

opt.gaussian.parameterType = {'real'};

opt.gaussian.lowerBound = 2^-6;

opt.gaussian.upperBound = 2^6;

% laplacian kernel function

opt.laplacian.parameterName = {'gamma'};

opt.laplacian.parameterType = {'real'};

opt.laplacian.lowerBound = 2^-6;

opt.laplacian.upperBound = 2^6;

% polynomial kernel function

opt.polynomial.parameterName = {'gamma'; 'offset'; 'degree'};

opt.polynomial.parameterType = {'real'; 'real'; 'integer'};

opt.polynomial.lowerBound = [2^-6; 2^-6; 1];

opt.polynomial.upperBound = [2^6; 2^6; 7];

% sigmoid kernel function

opt.sigmoid.parameterName = {'gamma'; 'offset'};

opt.sigmoid.parameterType = {'real'; 'real'};

opt.sigmoid.lowerBound = [2^-6; 2^-6];

opt.sigmoid.upperBound = [2^6; 2^6];

%}
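To show what the lower and upper bounds above mean for a search, the sketch below runs a plain log-uniform random search over the gamma range 2^-6 to 2^6. This is a deliberately simpler technique than the toolbox's Bayesian, GA, or PSO optimizers and is not their implementation. The objective function is a hypothetical stand-in for a cross-validated error.

```matlab
% Sketch only: log-uniform random search over a gamma range.
% NOT the toolbox's optimizer; the objective below is hypothetical.
lowerBound = 2^-6;
upperBound = 2^6;
numCandidates = 20;
rng(0);                               % fix the seed for repeatability

% Sample exponents uniformly so small and large gammas are covered evenly
expLow = log2(lowerBound);
expHigh = log2(upperBound);
exponents = expLow + (expHigh - expLow) * rand(numCandidates, 1);
candidates = 2 .^ exponents;

% Hypothetical error surface with its minimum at gamma = 2
objective = @(g) (log2(g) - 1).^2;
scores = arrayfun(objective, candidates);

% Keep the candidate with the lowest score
[bestScore, bestIdx] = min(scores);
bestGamma = candidates(bestIdx);
fprintf('best gamma = %.4f (score %.4f)\n', bestGamma, bestScore);
```

Sampling in log space matters here because the range spans twelve powers of two, and uniform sampling on the raw scale would almost never try small gamma values.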

%% RVM model parameter

%{

'display' : 'on', 'off'

'type' : 'RVR', 'RVC'

'kernelFunc' : kernel function

'KFold' : cross validation, for example, 5

'HoldOut' : cross validation, for example, 0.3

'freeBasis' : 'on', 'off'

'maxIter' : max iteration, for example, 1000

%}

Get the code: https://ai.52learn.online/code/110
