Prometheus ===> Enterprise deployment, monitoring and alerting

Posted 一夜暴富--gogogo


I. Deploying Prometheus

1.1 Binary installation

#1. Download and extract

cd /opt/package/

wget https://github.com/prometheus/prometheus/releases/download/v2.25.0/prometheus-2.25.0.linux-amd64.tar.gz

tar -xvf prometheus-2.25.0.linux-amd64.tar.gz -C /data/ota_soft/

cd /data/ota_soft/

mv prometheus-2.25.0.linux-amd64/ prometheus

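#2. Add environment variables

vim /etc/profile #append at the end of the file

export PROMETHEUS_HOME=/data/ota_soft/prometheus

export PATH=$PROMETHEUS_HOME:$PATH #the release tarball has no bin/ subdirectory; prometheus and promtool sit at the top level

source /etc/profile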

#3. Start in the foreground

cd /data/ota_soft/prometheus/

prometheus --config.file="prometheus.yml"

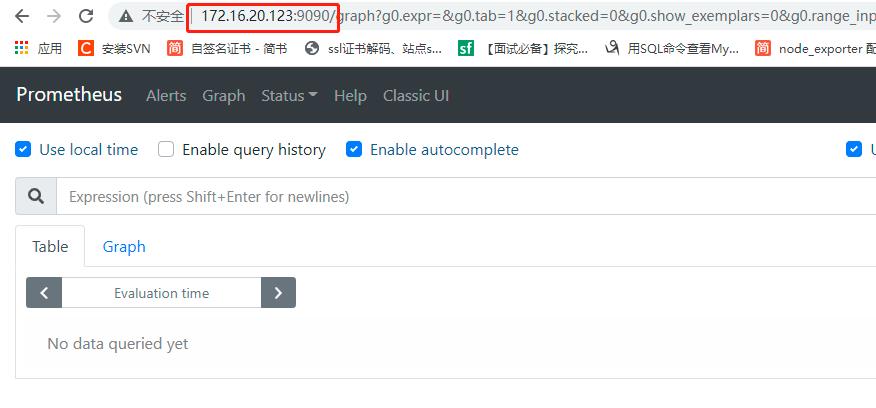

#4. Open http://IP:9090 in a browser
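
Before moving on, it can help to confirm from the shell that the server is really up. The sketch below polls the /-/ready endpoint that Prometheus exposes, using curl, until it answers or a timeout expires. The function name wait_for_ready and the default address are assumptions for illustration, not part of the original setup.

```shell
# Hypothetical helper: poll a Prometheus readiness endpoint until it returns
# HTTP 2xx or the timeout (in seconds) runs out.
# Usage: wait_for_ready http://172.16.20.113:9090 60
wait_for_ready() {
  local base="${1:-http://localhost:9090}"   # assumed default address
  local timeout="${2:-60}"
  local deadline=$((SECONDS + timeout))

  while (( SECONDS < deadline )); do
    # -f: fail on HTTP errors; --max-time bounds each single attempt
    if curl -fsS --max-time 2 "$base/-/ready" >/dev/null 2>&1; then
      echo "ready: $base"
      return 0
    fi
    sleep 1
  done
  echo "not ready after ${timeout}s: $base" >&2
  return 1
}
```

The same helper works for the Docker variant below, since the container publishes the same port.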

1.2 Docker installation

#1. Create the directories and the prometheus user (UID 1000, which the container runs as below)

mkdir -p /data/prometheus/{data,config}

useradd -U -u 1000 prometheus

chown -R 1000:1000 /data/prometheus

#2. Write the configuration file that will be mounted into the container

vim /data/prometheus/config/prometheus.yml

    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

    alerting:
      alertmanagers:
        - static_configs:
            - targets:

    #rule_files:
    #  - "rule/*.yml"

    scrape_configs:
      - job_name: 'node'
        static_configs:
          - targets: ['172.16.20.113:9100']
        consul_sd_configs:
          - server: 'prome:8500'
            services: [node-exporter]

#3. Run with Docker

docker run --restart=always --name=prometheus -p 9090:9090 -v /etc/hosts:/etc/hosts -v /data/prometheus/config/:/etc/prometheus/ -v /data/prometheus/data/:/prometheus -d --user 1000 prom/prometheus

Note: if port 9090 does not come up, check the container status with docker ps -a. If STATUS shows Restarting, read the logs with docker logs prometheus. The cause is usually a formatting error in the yml file, and correcting it fixes the problem.
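
Since a YAML mistake is the usual culprit, one option is to validate the file before (re)starting the container. The prom/prometheus image also contains the promtool binary, so it can be run with an overridden entrypoint. The wrapper name check_prom_config is hypothetical, and its default path assumes the /data/prometheus/config layout used above.

```shell
# Hypothetical wrapper: validate prometheus.yml with the promtool binary that
# ships in the prom/prometheus image, without starting a server.
check_prom_config() {
  local config_dir="${1:-/data/prometheus/config}"   # host directory mounted above
  docker run --rm \
    -v "$config_dir":/etc/prometheus \
    --entrypoint promtool \
    prom/prometheus \
    check config /etc/prometheus/prometheus.yml
}

# Usage (requires Docker):
#   check_prom_config && docker restart prometheus
```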

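II. Installing the node_exporter component

node_exporter collects server CPU, memory, disk, I/O and similar metrics.

#1. Install the component on the host to be monitored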

cd /opt/package/

wget https://github.com/prometheus/node_exporter/releases/download/v1.1.2/node_exporter-1.1.2.linux-amd64.tar.gz

tar xvf node_exporter-1.1.2.linux-amd64.tar.gz -C /usr/local/

cd /usr/local/node_exporter-1.1.2.linux-amd64/

#2. Start in the foreground (listens on port 9100)

./node_exporter

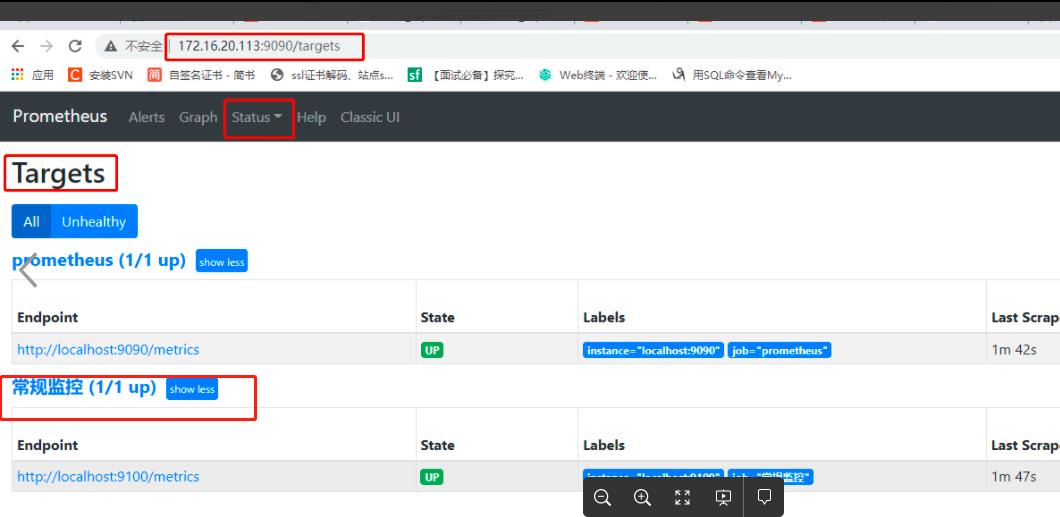
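
node_exporter serves its metrics as plain text at /metrics on port 9100, one sample per line in the form name{labels} value, with # HELP and # TYPE comment lines in between. As a quick sanity check before wiring it into Prometheus, the sketch below pulls a single value out of that text with awk. metric_value is a hypothetical helper, and it only covers the simple case: it returns the first matching series and assumes label values contain no spaces.

```shell
# Hypothetical helper: print the value of the first sample whose metric name
# equals $1, reading Prometheus text exposition format from stdin.
metric_value() {
  awk -v want="$1" '
    /^#/ { next }                    # skip "# HELP" / "# TYPE" lines
    {
      name = $1
      sub(/[{].*/, "", name)         # drop the {label="..."} part, if any
      if (name == want) { print $2; found = 1; exit }
    }
    END { exit found ? 0 : 1 }       # non-zero status when the metric is absent
  '
}

# Usage:
#   curl -s http://172.16.20.113:9100/metrics | metric_value node_load1
```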

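#3. Edit the Prometheus configuration file

cd /data/ota_soft/prometheus

vim prometheus.yml #add the job under scrape_configs, keeping the existing indentation

      - job_name: 'node_exporter'
        static_configs:
          - targets: ['172.16.20.113:9100']

#Restart Prometheus (stop the foreground process with Ctrl+C, then start it again)

./prometheus --config.file="prometheus.yml"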
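
Restarting the foreground process works, but Prometheus also re-reads its configuration file when it receives SIGHUP. The sketch below first validates the file with the promtool binary shipped in the same tarball and only then signals the running process. The function name reload_prometheus and its default path are assumptions based on the layout above.

```shell
# Hypothetical helper: validate the config, then ask the running Prometheus
# process to reload it (SIGHUP) instead of restarting it.
reload_prometheus() {
  local config="${1:-/data/ota_soft/prometheus/prometheus.yml}"

  # Refuse to reload a configuration that promtool rejects.
  promtool check config "$config" || return 1

  # Find the Prometheus process; -x matches the exact process name.
  local pid
  pid="$(pgrep -x prometheus | head -n 1)"
  if [ -z "$pid" ]; then
    echo "prometheus is not running" >&2
    return 1
  fi
  kill -HUP "$pid"
}
```

If Prometheus is started with --web.enable-lifecycle, curl -X POST http://localhost:9090/-/reload triggers the same reload over HTTP.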

III. Monitoring MySQL

#1. Install the mysqld_exporter component

cd /opt/package/

wget https://github.com/prometheus/mysqld_exporter/releases/download/v0.12.1/mysqld_exporter-0.12.1.linux-amd64.tar.gz

tar xf mysqld_exporter-0.12.1.linux-amd64.tar.gz -C /usr/local/

cd /usr/local/mysqld_exporter-0.12.1.linux-amd64/

ll #list the extracted files

-rw-r--r-- 1 3434 3434 11325 Jul 29 2019 LICENSE

-rwxr-xr-x 1 3434 3434 14813452 Jul 29 2019 mysqld_exporter

-rw-r--r-- 1 3434 3434 65 Jul 29 2019 NOTICE

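#2. Install MySQL (omitted)

Log in to MySQL and grant the monitoring user the privileges it needs. The combined GRANT ... IDENTIFIED BY form below works on MySQL 5.7 and earlier; MySQL 8.0 removed it, so there the user has to be created with CREATE USER first.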

grant select,replication client,process ON *.* to 'mysql_monitor'@'%' identified by '123456';

flush privileges;
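
For MySQL 8.0, the sketch below prints the equivalent two-step SQL, modeled on the statements in the mysqld_exporter README (which also caps the account at 3 connections). The helper name exporter_grant_sql is hypothetical, and the values are interpolated unescaped, so the password must not contain a single quote.

```shell
# Hypothetical helper: print CREATE USER + GRANT statements for the exporter
# account in a form accepted by both MySQL 5.7 and 8.0.
# NOTE: values are interpolated as-is; do not pass a password containing '.
exporter_grant_sql() {
  local user="${1:-mysql_monitor}" host="${2:-%}" pass="$3"
  if [ -z "$pass" ]; then
    echo "usage: exporter_grant_sql USER HOST PASSWORD" >&2
    return 1
  fi
  cat <<EOF
CREATE USER IF NOT EXISTS '${user}'@'${host}' IDENTIFIED BY '${pass}' WITH MAX_USER_CONNECTIONS 3;
GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO '${user}'@'${host}';
FLUSH PRIVILEGES;
EOF
}

# Usage: exporter_grant_sql mysql_monitor '%' 'your-password' | mysql -uroot -p
```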

#3. Write the credentials file the exporter reads at startup

cd /usr/local/mysqld_exporter-0.12.1.linux-amd64

vim .my.cnf

[client]

user=mysql_monitor

password=123456

port=3306
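
.my.cnf holds the monitoring password in plain text, so it is worth making it readable only by the account that runs the exporter. The sketch below writes the same [client] section with mode 600. The helper name write_my_cnf and its argument order are choices made here for illustration.

```shell
# Hypothetical helper: write a [client] credentials file for mysqld_exporter
# and restrict it to its owner (mode 600), since it stores a plain-text password.
write_my_cnf() {
  local path="$1" user="$2" pass="$3" port="${4:-3306}"
  (
    umask 077                      # a newly created file gets mode 600
    cat > "$path" <<EOF
[client]
user=${user}
password=${pass}
port=${port}
EOF
  )
  chmod 600 "$path"               # also tighten a file that already existed
}

# Usage:
#   write_my_cnf /usr/local/mysqld_exporter-0.12.1.linux-amd64/.my.cnf mysql_monitor 123456
```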

#4. Start the exporter in the foreground (port 9104)

./mysqld_exporter --config.my-cnf=".my.cnf"

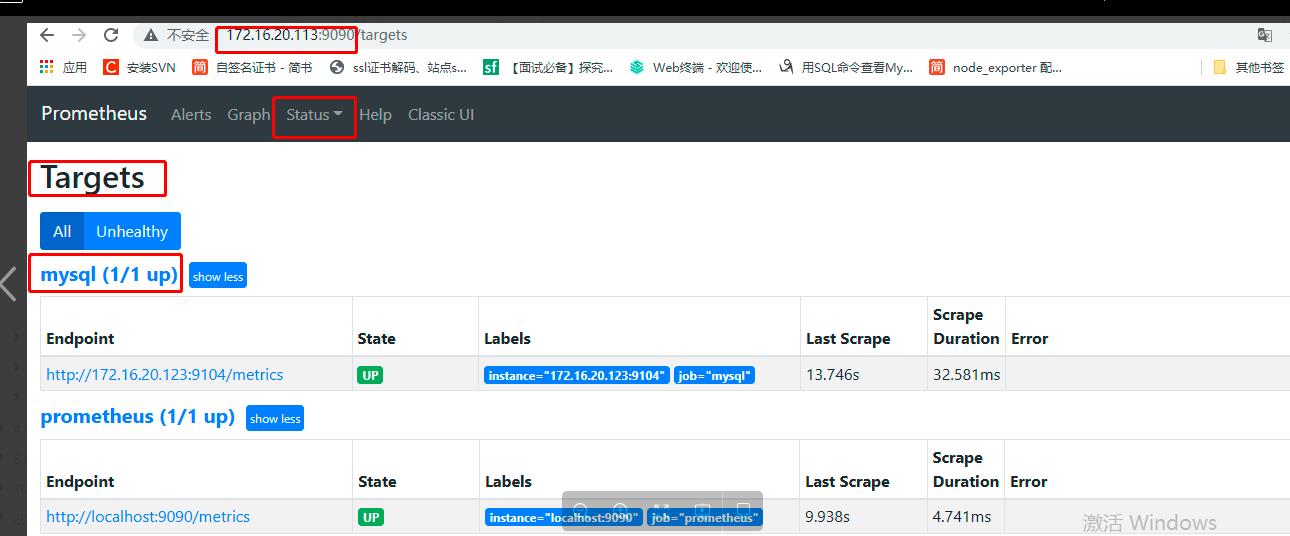

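#5. Edit the Prometheus configuration file (on the Prometheus server)

cd /data/ota_soft/prometheus

vim prometheus.yml #add the job under scrape_configs

      - job_name: 'mysql'
        static_configs:
          - targets: ['192.168.12.11:9104']

#6. Restart Prometheus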

./prometheus --config.file=./prometheus.yml

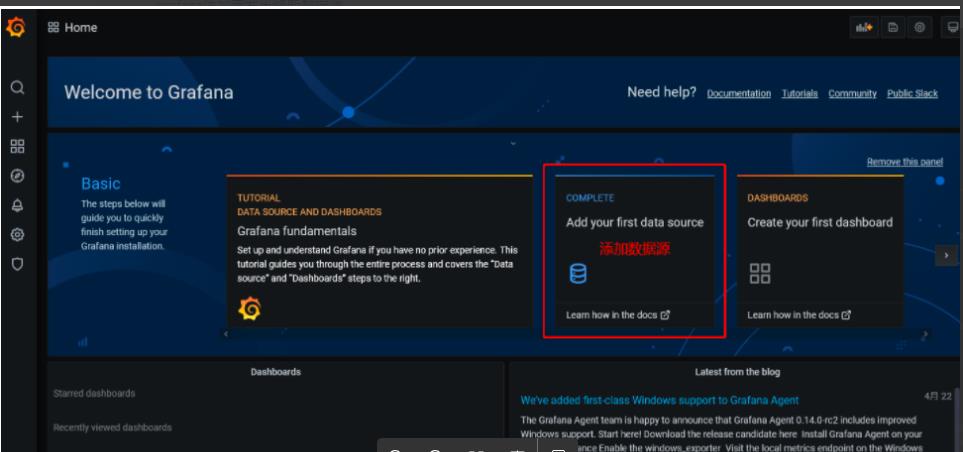
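
Besides the web UI, the scrape result can be checked from the shell through the Prometheus instant-query API (/api/v1/query). mysqld_exporter exports mysql_up, which is 1 when the exporter could connect to MySQL. The wrapper prom_query is a hypothetical convenience around curl, and its default address is an assumption.

```shell
# Hypothetical wrapper around the Prometheus instant-query API.
# Prints the raw JSON response.
prom_query() {
  local base="${1:-http://localhost:9090}"   # assumed Prometheus address
  local expr="$2"
  # -G turns --data-urlencode into a properly escaped GET query string.
  curl -fsS -G "$base/api/v1/query" --data-urlencode "query=$expr"
}

# Usage:
#   prom_query http://localhost:9090 'mysql_up'
#   prom_query http://localhost:9090 'up{job="mysql"}'
```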

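IV. Graphing with Grafana

Grafana is an open-source metrics analysis and visualization tool. It queries and analyzes the collected data, presents it visually, and can also send alerts.

4.1 Deploying Grafana

#1. Install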

cd /opt/package/

wget https://dl.grafana.com/oss/release/grafana-7.4.3-1.x86_64.rpm

yum localinstall grafana-7.4.3-1.x86_64.rpm

systemctl start grafana-server.service

netstat -tnlp #check that port 3000 is listening

#Access Grafana at

IP:3000

Default username / password: admin / admin

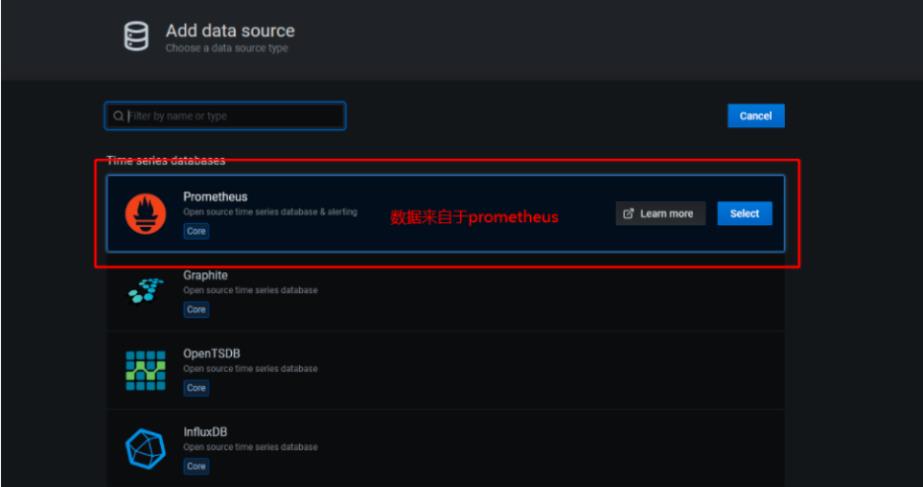

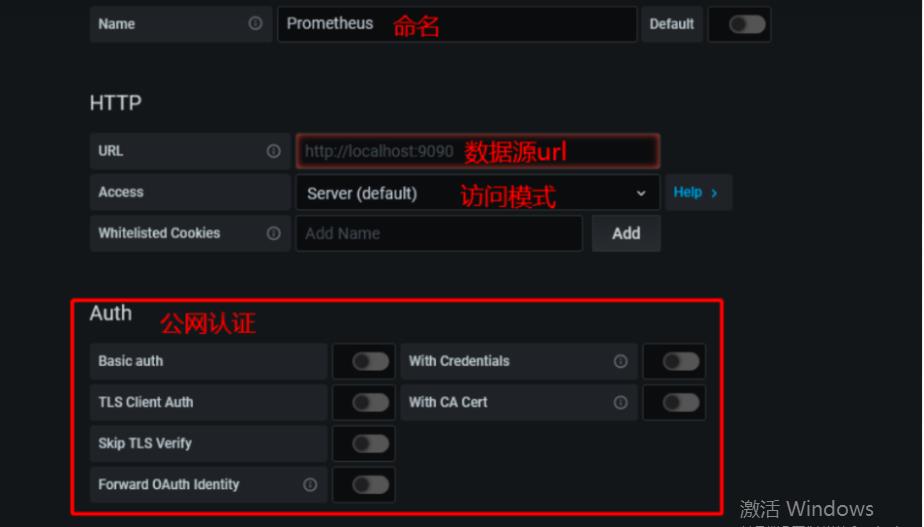

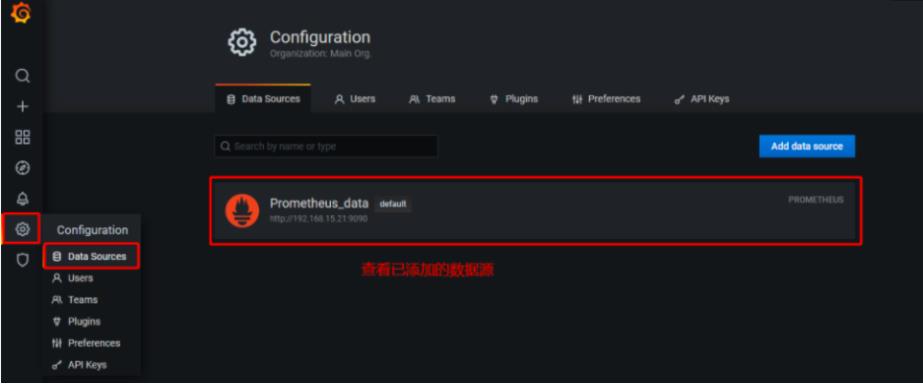

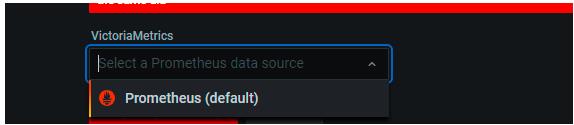

4.2 Add Prometheus as a data source

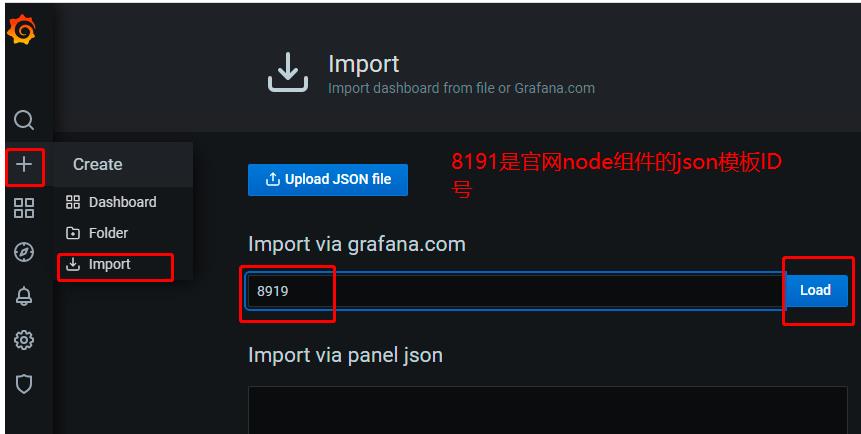

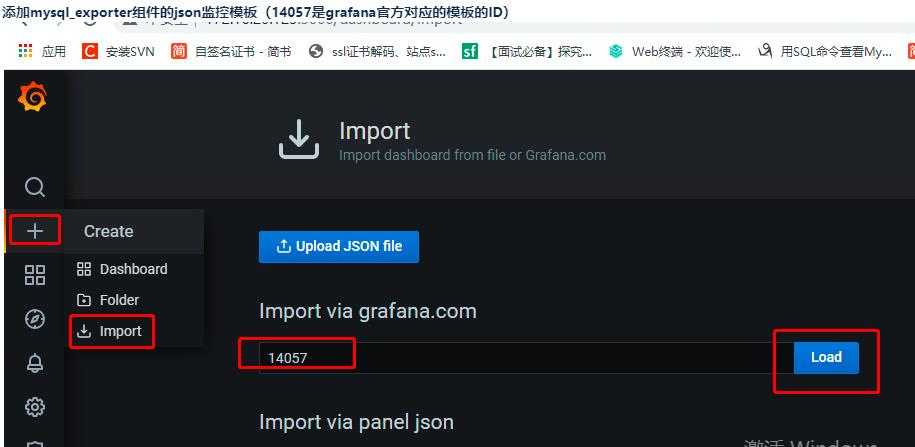
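
The data source can also be created without the UI, through Grafana's HTTP API (POST /api/datasources). The sketch below assumes the default admin/admin login and a Prometheus URL reachable from the Grafana server; the function name grafana_add_prom_ds and the data source name "Prometheus" are assumptions.

```shell
# Hypothetical helper: create a Prometheus data source through Grafana's
# HTTP API using basic auth.
grafana_add_prom_ds() {
  local grafana="${1:-http://localhost:3000}"    # Grafana address (assumed)
  local prom_url="${2:-http://localhost:9090}"   # Prometheus as seen from the Grafana server
  local auth="${3:-admin:admin}"                 # default login; change it after first use
  local payload
  payload=$(printf '{"name":"Prometheus","type":"prometheus","url":"%s","access":"proxy","isDefault":true}' "$prom_url")
  curl -fsS -u "$auth" -H 'Content-Type: application/json' \
    -X POST "$grafana/api/datasources" -d "$payload"
}

# Usage: grafana_add_prom_ds http://172.16.20.113:3000 http://172.16.20.113:9090
```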

4.3 Import a JSON dashboard template for the node_exporter data

4.4 node_exporter monitoring is working

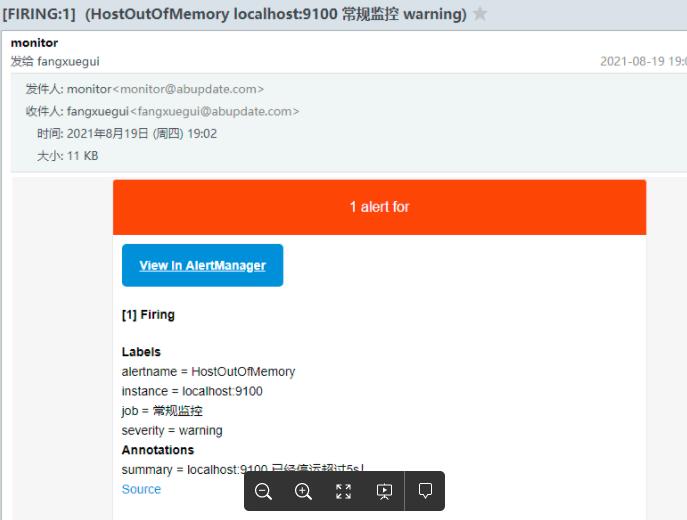

V. Adding alerting with Alertmanager

#1. Install Alertmanager with Docker

mkdir /data/alertmanager

cd /data/alertmanager

vim alertmanager.yml

#Alert email configuration

    global:
      resolve_timeout: 1h
      smtp_smarthost: 'smtp.exmail.qq.com:465'
      smtp_from: '1353421063@qq.com'
      smtp_auth_username: '1353421063@qq.com'
      smtp_auth_password: 'SMTP authorization code of the mailbox'
      smtp_require_tls: false

    #templates:
    #  - '/usr/local/alertmanager-0.21.0.linux-amd64/*.tmpl' #custom email templates; commented out here, so the built-in default template is used

    route:
      group_by: ['node_alerts'] #group_by takes label names, not the rule-group name from the rules file; no alert carries a node_alerts label, so this puts all alerts into one group (use e.g. ['alertname'] to group per alert)
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 12h
      receiver: 'exmail'

    receivers:
      - name: 'exmail'
        email_configs:
          - to: 'fangxuegui@abupdate.com'
            send_resolved: true

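#Start the container. With --network host, Alertmanager listens directly on host port 9093, so no -p mapping is needed (port 3000 is already used by Grafana).

docker run -v /data/alertmanager/:/etc/alertmanager/ --name alertmanager --restart=always --network host -d prom/alertmanager

#Enter the container

docker ps #look up the container ID

docker exec -it 527e2434e329 sh #or by name: docker exec -it alertmanager sh

The configuration file is under /etc/alertmanager/ inside the container.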
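
As with Prometheus, the prom/alertmanager image bundles a checker, amtool, which can validate alertmanager.yml before the container is (re)started. The wrapper name check_am_config is hypothetical; its default directory is the /data/alertmanager mount used above.

```shell
# Hypothetical wrapper: validate alertmanager.yml with the amtool binary
# bundled in the prom/alertmanager image.
check_am_config() {
  local config_dir="${1:-/data/alertmanager}"   # host directory mounted above
  docker run --rm \
    -v "$config_dir":/etc/alertmanager \
    --entrypoint amtool \
    prom/alertmanager \
    check-config /etc/alertmanager/alertmanager.yml
}

# Usage (requires Docker):
#   check_am_config && docker restart alertmanager
```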

Steps #4 and #5 below (the configuration file and what its options mean) apply to both installation methods.

#1. Binary installation of Alertmanager

cd /opt/package/

wget https://github.com/prometheus/alertmanager/releases/download/v0.21.0/alertmanager-0.21.0.linux-amd64.tar.gz

tar xvf alertmanager-0.21.0.linux-amd64.tar.gz -C /usr/local/

#2. Add environment variables

vim /etc/profile

export ALERTMANAGER_HOME=/usr/local/alertmanager-0.21.0.linux-amd64

export PATH=$PATH:$ALERTMANAGER_HOME

source /etc/profile

#3. Start in the foreground (port 9093)

/usr/local/alertmanager-0.21.0.linux-amd64/alertmanager --config.file=/usr/local/alertmanager-0.21.0.linux-amd64/alertmanager.yml

#4. Edit the alerting configuration (sending and receiving email)

vim /usr/local/alertmanager-0.21.0.linux-amd64/alertmanager.yml

    global:
      resolve_timeout: 1h
      smtp_smarthost: 'smtp.exmail.qq.com:465'
      smtp_from: '1353421063@qq.com'
      smtp_auth_username: '1353421063@qq.com'
      smtp_auth_password: 'SMTP authorization code of the mailbox'
      smtp_require_tls: false

    #templates: #custom email templates are possible; with this commented out, the default template is used
    #  - '/usr/local/alertmanager-0.21.0.linux-amd64/*.tmpl'

    route:
      group_by: ['node_alerts'] #label names to group by; see the note in the Docker section
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 12h
      receiver: 'exmail'

    receivers:
      - name: 'exmail'
        email_configs:
          - to: '1353421063@qq.com'
            send_resolved: true
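
To check the email path end to end without waiting for a real failure, a synthetic alert can be posted straight to the Alertmanager v2 API (POST /api/v2/alerts, available in 0.21). The helper name send_test_alert and the alert name TestAlert are made up for the test; with group_wait: 10s the email should follow shortly. Because the alert carries no end time, Alertmanager marks it resolved after resolve_timeout (1h here) unless it is re-sent.

```shell
# Hypothetical helper: post one synthetic alert to the Alertmanager v2 API.
send_test_alert() {
  local am="${1:-http://localhost:9093}"      # Alertmanager address (assumed)
  local name="${2:-TestAlert}"                # made-up alert name for the test
  local payload
  payload=$(printf '[{"labels":{"alertname":"%s","severity":"warning"},"annotations":{"summary":"test alert sent by hand"}}]' "$name")
  curl -fsS -H 'Content-Type: application/json' \
    -X POST "$am/api/v2/alerts" -d "$payload"
}

# Usage: send_test_alert http://172.16.20.113:9093
```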

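#5. What the configuration options mean

global: global settings.

resolve_timeout: if an alert arrives without an end time and then stops being updated, Alertmanager declares it resolved after this long. Alerts from Prometheus always carry an end time, so this mainly matters for alerts sent by other clients; metrics flapping around a threshold are better handled with the for clause of the alert rule.

receivers: defines who receives the alerts. Alertmanager (not Prometheus itself) delivers notifications to the integrations configured here, such as email or a webhook, and they pass the alert on to us.

name: a name for the receiver, so that routes can refer to it.

webhook_configs: Alertmanager sends the alert to a webhook, i.e. an HTTP URL served by a service you implement yourself. Many other integrations are supported; see the official documentation: https://prometheus.io/docs/alerting/configuration/

send_resolved: whether to also send a notification once the problem has been resolved.

route: the key part. Every alert enters the routing tree here, and the tree decides which policy is used to send it.

receiver: the top-level receiver, i.e. the default one, used when an incoming alert matches none of the child routes.

group_by: the labels by which alerts are grouped into one notification.

group_wait: how long to wait before sending the first notification for a new group, so that more alerts can be batched together.

group_interval: how long to wait before notifying about new alerts added to a group that has already been notified.

repeat_interval: how long to wait before re-sending a notification for an alert that is still firing.

routes: the child routes, which take the same options as above. An alert that matches a route is then checked against that route's children; if none of them matches, the matching route's own settings are used. Among sibling routes, the search stops at the first one that matches unless that route sets continue: true, in which case the alert is also checked against the following siblings.

match_re: matches label values against regular expressions (match does exact comparison); a route matches only if every label listed here matches.

inhibit_rules: inhibition, i.e. a firing source alert suppresses matching target alerts. For example, when a host goes down it can trigger alerts for the services, databases and middleware on it; if those follow-on alerts add nothing, an inhibit rule lets Alertmanager send only the host-down alert.

source_match: the labels that select the source (inhibiting) alert.

target_match: the labels that select the target (inhibited) alerts.

equal: labels whose values must be identical in the source and the target alert. If a listed label is absent from both the source and the target, the values count as equal and the target alert is still inhibited.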
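
The routing and inhibition options above are easier to follow in a concrete file. The sketch writes a small route tree and inhibit rule in 0.21 syntax (match/match_re, source_match/target_match) to a file of your choice. The receiver dba-mail and the labels used are illustrative assumptions; before merging the fragment into alertmanager.yml, dba-mail would also need an entry under receivers.

```shell
# Hypothetical helper: write an example route tree and inhibit rule.
# 'dba-mail' is a made-up receiver that must also be defined under receivers:.
write_route_example() {
  local out="${1:-./route-example.yml}"
  cat > "$out" <<'EOF'
route:
  receiver: 'exmail'                 # default when no child route matches
  group_by: ['alertname', 'instance']
  routes:
    - match:
        job: 'mysql'                 # exact label match
      receiver: 'dba-mail'
      continue: true                 # also try the next sibling route
    - match_re:
        severity: 'warning|critical' # regex; every listed label must match
      receiver: 'exmail'
inhibit_rules:
  - source_match:
      severity: 'critical'           # a firing critical alert ...
    target_match:
      severity: 'warning'            # ... mutes warning alerts ...
    equal: ['alertname', 'instance'] # ... with the same alertname and instance
EOF
}
```

With this tree, a mysql warning matches the first route and, because of continue: true, the second as well, so both receivers are notified; a node_exporter warning skips the first route and lands on exmail; an alert matching neither child falls back to the root receiver.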

#Edit the Prometheus configuration to add alerting

    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - 172.16.20.113:9093 # Alertmanager IP and port

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      - "rule/*.yml" # path of the alert rule files, relative to this file; the rule directory has to be created
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:
    # Here it's Prometheus itself.
    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']

      - job_name: 'node_exporter'
        static_configs:
          - targets: ['localhost:9100']

      - job_name: 'mysql'
        static_configs:
          - targets: ['172.16.20.123:9104']

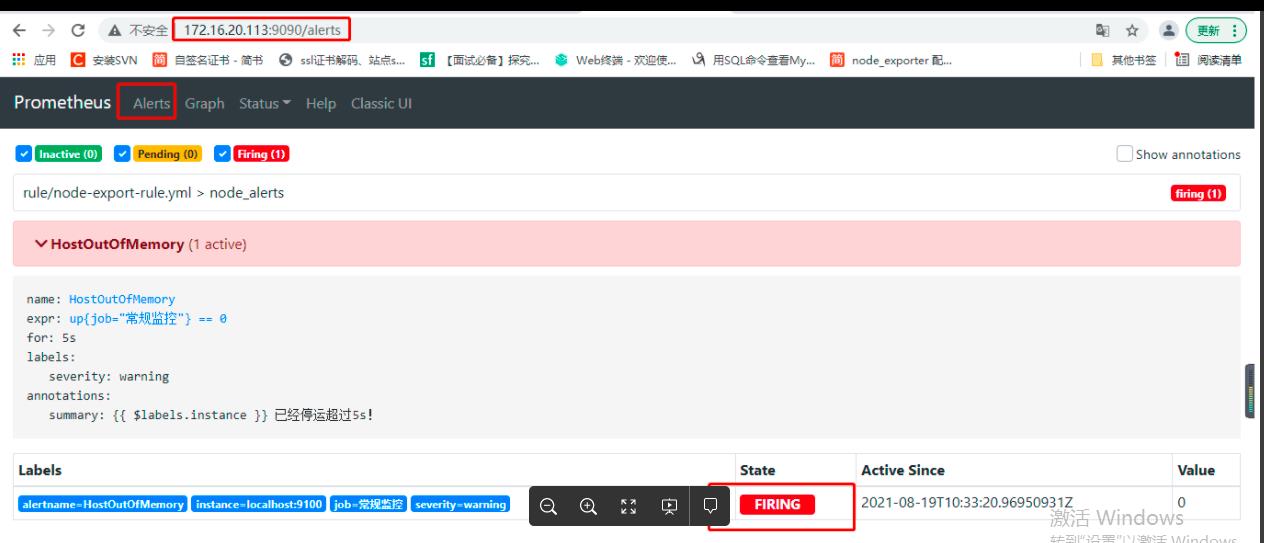

#6. Write the alert rules

cd /data/ota_soft/prometheus

mkdir rule

cd rule

vim node-export-rule.yml

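    groups:
      - name: node_alerts
        rules:
          - alert: node_alerts
            expr: up{job="node_exporter"} == 0
            for: 5s # rules are evaluated every 15s, so in practice this fires at the evaluation after up first reads 0
            labels:
              severity: warning
            annotations:
              summary: "{{ $labels.instance }} has been down for more than 5s!"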
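
promtool, shipped in the Prometheus tarball, can also validate rule files on their own, which catches indentation mistakes in the YAML above before Prometheus is restarted. The wrapper check_rules is hypothetical; its default directory matches the rule/*.yml pattern from rule_files.

```shell
# Hypothetical wrapper: run "promtool check rules" on every *.yml file in
# the rule directory.
check_rules() {
  local rule_dir="${1:-/data/ota_soft/prometheus/rule}"
  local files=("$rule_dir"/*.yml)
  # If the glob matched nothing, bash leaves the pattern itself in the array.
  if [ ! -e "${files[0]}" ]; then
    echo "no rule files in $rule_dir" >&2
    return 1
  fi
  promtool check rules "${files[@]}"
}

# Usage: check_rules /data/ota_soft/prometheus/rule
```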

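#Restart Prometheus and Alertmanager; once everything runs normally, stop node_exporter to test the alert

Note: the "routine monitoring" shown in the screenshot is node_exporter.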
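
While node_exporter is stopped, the alert's progress can be followed from Prometheus itself: GET /api/v1/alerts lists active alerts with their state (pending, then firing). The helper active_alerts is a rough, hypothetical sketch rather than a JSON parser: it extracts alertname/state pairs with grep and assumes each alert's labels appear before its state in the compact JSON that Prometheus returns.

```shell
# Hypothetical helper: print "alertname=<name> state=<state>" for each
# active alert reported by Prometheus.
active_alerts() {
  local base="${1:-http://localhost:9090}"   # assumed Prometheus address
  curl -fsS "$base/api/v1/alerts" \
    | grep -oE '"(alertname|state)":"[^"]*"' \
    | sed -E 's/"(alertname|state)":"([^"]*)"/\1=\2/' \
    | paste -d ' ' - -
}

# Usage: active_alerts http://172.16.20.113:9090
```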

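Commonly used alert rules:

cd /data/prometheus/config

mkdir -p rule

vim rule/node-rules.yml #these are Prometheus alerting rules, so they belong in a rule file picked up by rule_files (any name matching rule/*.yml works, and the rule_files entry in prometheus.yml must be uncommented), not in alertmanager.yml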

    groups:
      - name: node_alerts
        rules:
          - alert: HostOutOfMemory
            expr: node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100 < 10
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host out of memory (instance {{ $labels.instance }})
              description: "Node memory is filling up (< 10% left)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostMemoryUnderMemoryPressure
            expr: rate(node_vmstat_pgmajfault[1m]) > 1000
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host memory under memory pressure (instance {{ $labels.instance }})
              description: "The node is under heavy memory pressure. High rate of major page faults\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualNetworkThroughputIn
            expr: sum by (instance) (rate(node_network_receive_bytes_total[2m])) / 1024 / 1024 > 100
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: Host unusual network throughput in (instance {{ $labels.instance }})
              description: "Host network interfaces are probably receiving too much data (> 100 MB/s)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualNetworkThroughputOut
            expr: sum by (instance) (rate(node_network_transmit_bytes_total[2m])) / 1024 / 1024 > 100
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: Host unusual network throughput out (instance {{ $labels.instance }})
              description: "Host network interfaces are probably sending too much data (> 100 MB/s)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualDiskReadRate
            expr: sum by (instance) (rate(node_disk_read_bytes_total[2m])) / 1024 / 1024 > 50
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: Host unusual disk read rate (instance {{ $labels.instance }})
              description: "Disk is probably reading too much data (> 50 MB/s)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualDiskWriteRate
            expr: sum by (instance) (rate(node_disk_written_bytes_total[2m])) / 1024 / 1024 > 50
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host unusual disk write rate (instance {{ $labels.instance }})
              description: "Disk is probably writing too much data (> 50 MB/s)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostOutOfDiskSpace
            expr: (node_filesystem_avail_bytes * 100) / node_filesystem_size_bytes < 10 and ON (instance, device, mountpoint) node_filesystem_readonly == 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host out of disk space (instance {{ $labels.instance }})
              description: "Disk is almost full (< 10% left)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostDiskWillFillIn24Hours
            expr: (node_filesystem_avail_bytes * 100) / node_filesystem_size_bytes < 10 and ON (instance, device, mountpoint) predict_linear(node_filesystem_avail_bytes{fstype!~"tmpfs"}[1h], 24 * 3600) < 0 and ON (instance, device, mountpoint) node_filesystem_readonly == 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host disk will fill in 24 hours (instance {{ $labels.instance }})
              description: "Filesystem is predicted to run out of space within the next 24 hours at current write rate\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostOutOfInodes
            expr: node_filesystem_files_free{mountpoint="/rootfs"} / node_filesystem_files{mountpoint="/rootfs"} * 100 < 10 and ON (instance, device, mountpoint) node_filesystem_readonly{mountpoint="/rootfs"} == 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host out of inodes (instance {{ $labels.instance }})
              description: "Disk is almost running out of available inodes (< 10% left)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostInodesWillFillIn24Hours
            expr: node_filesystem_files_free{mountpoint="/rootfs"} / node_filesystem_files{mountpoint="/rootfs"} * 100 < 10 and predict_linear(node_filesystem_files_free{mountpoint="/rootfs"}[1h], 24 * 3600) < 0 and ON (instance, device, mountpoint) node_filesystem_readonly{mountpoint="/rootfs"} == 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host inodes will fill in 24 hours (instance {{ $labels.instance }})
              description: "Filesystem is predicted to run out of inodes within the next 24 hours at current write rate\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualDiskReadLatency
            expr: rate(node_disk_read_time_seconds_total[1m]) / rate(node_disk_reads_completed_total[1m]) > 0.1 and rate(node_disk_reads_completed_total[1m]) > 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host unusual disk read latency (instance {{ $labels.instance }})
              description: "Disk latency is growing (read operations > 100ms)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostUnusualDiskWriteLatency
            expr: rate(node_disk_write_time_seconds_total[1m]) / rate(node_disk_writes_completed_total[1m]) > 0.1 and rate(node_disk_writes_completed_total[1m]) > 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host unusual disk write latency (instance {{ $labels.instance }})
              description: "Disk latency is growing (write operations > 100ms)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostHighCpuLoad
            expr: 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) > 80
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host high CPU load (instance {{ $labels.instance }})
              description: "CPU load is > 80%\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostCpuStealNoisyNeighbor
            expr: avg by(instance) (rate(node_cpu_seconds_total{mode="steal"}[5m])) * 100 > 10
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host CPU steal noisy neighbor (instance {{ $labels.instance }})
              description: "CPU steal is > 10%. A noisy neighbor is killing VM performances or a spot instance may be out of credit.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostContextSwitching
            expr: (rate(node_context_switches_total[5m])) / (count without(cpu, mode) (node_cpu_seconds_total{mode="idle"})) > 1000
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host context switching (instance {{ $labels.instance }})
              description: "Context switching is growing on node (> 1000 / s)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostSwapIsFillingUp
            expr: (1 - (node_memory_SwapFree_bytes / node_memory_SwapTotal_bytes)) * 100 > 80
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host swap is filling up (instance {{ $labels.instance }})
              description: "Swap is filling up (>80%)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostSystemdServiceCrashed
            expr: node_systemd_unit_state{state="failed"} == 1
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host systemd service crashed (instance {{ $labels.instance }})
              description: "systemd service crashed\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostPhysicalComponentTooHot
            expr: node_hwmon_temp_celsius > 75
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: Host physical component too hot (instance {{ $labels.instance }})
              description: "Physical hardware component too hot\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostNodeOvertemperatureAlarm
            expr: node_hwmon_temp_crit_alarm_celsius == 1
            for: 0m
            labels:
              severity: critical
            annotations:
              summary: Host node overtemperature alarm (instance {{ $labels.instance }})
              description: "Physical node temperature alarm triggered\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostRaidArrayGotInactive
            expr: node_md_state{state="inactive"} > 0
            for: 0m
            labels:
              severity: critical
            annotations:
              summary: Host RAID array got inactive (instance {{ $labels.instance }})
              description: "RAID array {{ $labels.device }} is in degraded state due to one or more disks failures. Number of spare drives is insufficient to fix issue automatically.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostRaidDiskFailure
            expr: node_md_disks{state="failed"} > 0
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host RAID disk failure (instance {{ $labels.instance }})
              description: "At least one device in RAID array on {{ $labels.instance }} failed. Array {{ $labels.md_device }} needs attention and possibly a disk swap\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostKernelVersionDeviations
            expr: count(sum(label_replace(node_uname_info, "kernel", "$1", "release", "([0-9]+.[0-9]+.[0-9]+).*")) by (kernel)) > 1
            for: 6h
            labels:
              severity: warning
            annotations:
              summary: Host kernel version deviations (instance {{ $labels.instance }})
              description: "Different kernel versions are running\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"


          - alert: HostOomKillDetected
            expr: increase(node_vmstat_oom_kill[1m]) > 0
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host OOM kill detected (instance {{ $labels.instance }})
              description: "OOM kill detected\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostEdacCorrectableErrorsDetected
            expr: increase(node_edac_correctable_errors_total[1m]) > 0
            for: 0m
            labels:
              severity: info
            annotations:
              summary: Host EDAC Correctable Errors detected (instance {{ $labels.instance }})
              description: "Host {{ $labels.instance }} has had {{ printf \"%.0f\" $value }} correctable memory errors reported by EDAC in the last minute.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostEdacUncorrectableErrorsDetected
            expr: node_edac_uncorrectable_errors_total > 0
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Host EDAC Uncorrectable Errors detected (instance {{ $labels.instance }})
              description: "Host {{ $labels.instance }} has {{ printf \"%.0f\" $value }} uncorrectable memory errors reported by EDAC (counter total).\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostNetworkReceiveErrors
            expr: rate(node_network_receive_errs_total[2m]) / rate(node_network_receive_packets_total[2m]) > 0.01
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host Network Receive Errors (instance {{ $labels.instance }})
              description: "Host {{ $labels.instance }} interface {{ $labels.device }} has a receive error ratio of {{ $value | humanizePercentage }} (> 1%) over the last 2 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

          - alert: HostNetworkTransmitErrors
            expr: rate(node_network_transmit_errs_total[2m]) / rate(node_network_transmit_packets_total[2m]) > 0.01
            for: 2m
            labels:
              severity: warning
            annotations:
              summary: Host Network Transmit Errors (instance {{ $labels.instance }})
              description: "Host {{ $labels.instance }} interface {{ $labels.device }} has a transmit error ratio of {{ $value | humanizePercentage }} (> 1%) over the last 2 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
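
The HostHighCpuLoad expression is easiest to read as arithmetic: node_cpu_seconds_total{mode="idle"} counts idle seconds per CPU, so its rate over a window is that CPU's idle fraction, and 100 minus the average idle fraction times 100 is the busy percentage. The sketch reproduces the calculation from two counter readings per CPU. The function name and its input format (one line per CPU: idle seconds at the start and end of the window) are assumptions for illustration, and it ignores the extrapolation that rate() applies at the window edges.

```shell
# Reproduce 100 - avg(rate(node_cpu_seconds_total{mode="idle"}[W])) * 100
# from two readings of each CPU's idle counter taken W seconds apart.
# stdin: one line per CPU -> "<idle_seconds_at_start> <idle_seconds_at_end>"
cpu_busy_pct() {
  local window="$1"   # W, in seconds
  awk -v w="$window" '
    NF == 2 { idle_frac += ($2 - $1) / w; n++ }   # per-CPU idle rate
    END {
      if (n == 0 || w <= 0) exit 1
      printf "%.1f\n", 100 - (idle_frac / n) * 100
    }'
}

# Example: CPU0 idle 30s and CPU1 idle 6s out of a 60s window
# -> idle fractions 0.5 and 0.1, average 0.3, busy 70.0%
#   printf '1000 1030\n2000 2006\n' | cpu_busy_pct 60
```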