Deep Learning and Object Detection Tutorial Series 17-300: Training a YOLOv5s Model on a 3-Class Face Mask Detection Dataset

Posted by 刘润森


@Author:Runsen

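YOLO is one of the most popular computer vision algorithms today. The dataset used in this post comes from: https://www.kaggle.com/andrewmvd/face-mask-detection

The dataset can be used to build a model that detects people who are wearing a mask, not wearing a mask, or wearing a mask incorrectly.

It contains 853 images belonging to 3 classes, together with their bounding boxes in PASCAL VOC format. The classes are:

- wearing a mask;

- not wearing a mask;

- wearing a mask incorrectly.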
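
To make the annotation format concrete, here is a minimal sketch of what one PASCAL VOC annotation might look like and how its objects can be listed. The XML content below (file name, image size, box coordinates) and the helper name `list_objects` are made up for illustration and are not taken from the dataset; only the class names match the ones used later in this post.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation for illustration; the values are not from the dataset.
SAMPLE_XML = """
<annotation>
    <filename>example.png</filename>
    <size><width>400</width><height>300</height><depth>3</depth></size>
    <object>
        <name>with_mask</name>
        <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>200</xmax><ymax>150</ymax></bndbox>
    </object>
    <object>
        <name>without_mask</name>
        <bndbox><xmin>250</xmin><ymin>80</ymin><xmax>300</xmax><ymax>140</ymax></bndbox>
    </object>
</annotation>
"""


def list_objects(xml_text):
    """Return (class_name, (xmin, ymin, xmax, ymax)) for every <object> element."""
    root = ET.fromstring(xml_text.strip())
    objects = []
    for node in root.findall('object'):
        box = node.find('bndbox')
        coords = tuple(int(box.find(tag).text)
                       for tag in ('xmin', 'ymin', 'xmax', 'ymax'))
        objects.append((node.find('name').text, coords))
    return objects


objects = list_objects(SAMPLE_XML)
for name, coords in objects:
    print(name, coords)
```

Each image has one such XML file, and each `<object>` becomes one line in the YOLO label file.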

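First, convert the annotations from VOC format to YOLO format. VOC annotations are XML files, so they need to be parsed with ElementTree to obtain bnd_box. The parsing code is as follows: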

    import os
    from xml.etree.ElementTree import ElementTree

    LABEL_DIR = '../dataset/label/'

    def xml_to_yolo(path):
        root = ElementTree().parse(path)
        # The label file shares the image's base name: xxx.png -> xxx.txt
        label_name = os.path.splitext(root.find('filename').text)[0] + '.txt'
        img_size = root.find('size')
        width = int(img_size.find('width').text)
        height = int(img_size.find('height').text)

        lines = []
        for node in root.findall('object'):
            # class
            class_name = node.find('name').text
            if class_name == 'without_mask':
                class_id = '0'
            elif class_name == 'with_mask':
                class_id = '1'
            else:  # mask_weared_incorrect
                class_id = '2'

            # bounding box: indexing assumes the children of <bndbox>
            # are in the order xmin, ymin, xmax, ymax
            bnd_box = node.find('bndbox')
            x_min = float(bnd_box[0].text)
            y_min = float(bnd_box[1].text)
            x_max = float(bnd_box[2].text)
            y_max = float(bnd_box[3].text)

            # YOLO wants the box center and size, normalized by the image size
            dw = 1 / width
            dh = 1 / height
            x = ((x_min + x_max) / 2 - 1) * dw
            y = ((y_min + y_max) / 2 - 1) * dh
            w = (x_max - x_min) * dw
            h = (y_max - y_min) * dh
            lines.append(' '.join([class_id, str(x), str(y), str(w), str(h)]))

        # One object per line; the with block closes the file on exit
        os.makedirs(LABEL_DIR, exist_ok=True)
        with open(LABEL_DIR + label_name, 'w') as f:
            f.write('\n'.join(lines))

    def process_data():
        img_paths = []
        for dirname, _, filenames in os.walk('../dataset/images'):
            for filename in filenames:
                img_paths.append(os.path.join('../dataset/images', filename))  # local machine

        # xml
        for dirname, _, filenames in os.walk('../dataset/xml'):
            for filename in filenames:
                annotation_path = os.path.join(dirname, filename)
                xml_to_yolo(annotation_path)

    process_data()
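
As a worked example of the arithmetic in `xml_to_yolo`, the sketch below converts one box by hand. The box coordinates, the image size, and the helper name `voc_box_to_yolo` are hypothetical; the formula is the same as in the script above (including its -1 offset on the center), up to floating-point rounding.

```python
def voc_box_to_yolo(x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space VOC box to normalized YOLO (x_center, y_center, w, h).

    Uses the same arithmetic as xml_to_yolo, including the -1 center offset.
    """
    x = ((x_min + x_max) / 2 - 1) / img_w
    y = ((y_min + y_max) / 2 - 1) / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return x, y, w, h


# Hypothetical box (100, 50)-(200, 150) in a 400x300 image, class 1 (with_mask)
x, y, w, h = voc_box_to_yolo(100, 50, 200, 150, 400, 300)
print(f"1 {x:.4f} {y:.4f} {w:.4f} {h:.4f}")
```

All four values end up between 0 and 1, which is what lets the same label file work regardless of the size the image is resized to for training.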

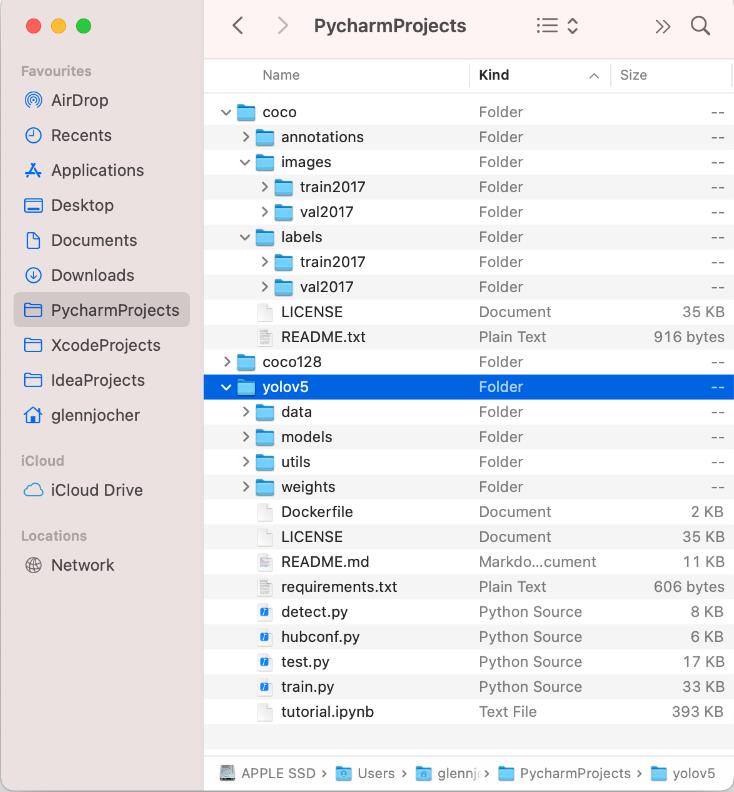

Clone the YOLOv5 code from GitHub: https://github.com/ultralytics/yolov5

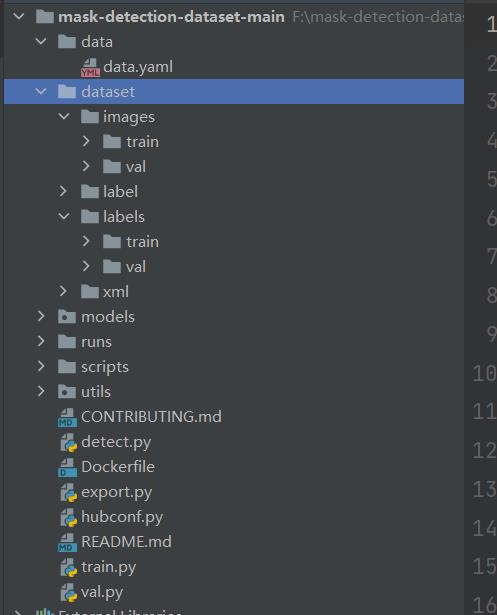

To stay consistent with the dataset's directory layout, create images and labels folders under dataset to hold the corresponding files.
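
The post does not show how the files are divided into training and validation subsets, so the following is only a sketch under assumptions: a random 80/20 split, `.png` images, and a hypothetical helper named `split_dataset`. It relies on my understanding that YOLOv5 finds each label by replacing `images` with `labels` in the image path, which is why the two folder trees mirror each other.

```python
import random
import shutil
from pathlib import Path


def split_dataset(images_dir, labels_dir, out_dir, val_ratio=0.2, seed=0):
    """Copy image/label pairs into out_dir/{images,labels}/{train,val}."""
    images = sorted(Path(images_dir).glob('*.png'))
    random.Random(seed).shuffle(images)  # fixed seed keeps the split reproducible
    n_val = int(len(images) * val_ratio)
    counts = {'train': 0, 'val': 0}
    for i, img in enumerate(images):
        subset = 'val' if i < n_val else 'train'
        label = Path(labels_dir) / (img.stem + '.txt')
        if not label.exists():
            continue  # skip images that have no converted label
        for src, kind in ((img, 'images'), (label, 'labels')):
            dst = Path(out_dir) / kind / subset
            dst.mkdir(parents=True, exist_ok=True)
            shutil.copy(src, dst / src.name)
        counts[subset] += 1
    return counts


# Example call, assuming the paths used by the conversion script above:
# split_dataset('../dataset/images', '../dataset/label', './dataset')
```

The function returns how many pairs went into each subset, which is a quick sanity check before training.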

The data.yaml file in the data folder:

    train: ./dataset/images/train
    val: ./dataset/images/val

    # number of classes
    nc: 3

    # class names
    names: ['without_mask','with_mask','mask_weared_incorrect']
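
The order of `names` has to match the class indices written by the conversion script (0, 1, 2). A small sketch of how one label line can be read back into a class name and a pixel box is given below. The label line, the image size, and the helper name `describe_label` are hypothetical, and the inversion is the standard center/size formula, so it ignores the -1 offset used during conversion.

```python
NAMES = ['without_mask', 'with_mask', 'mask_weared_incorrect']  # from data.yaml


def describe_label(line, img_w, img_h):
    """Turn one YOLO label line into (class_name, (x_min, y_min, x_max, y_max))."""
    class_id, x, y, w, h = line.split()
    x, y, w, h = (float(v) for v in (x, y, w, h))
    x_min = (x - w / 2) * img_w
    y_min = (y - h / 2) * img_h
    x_max = (x + w / 2) * img_w
    y_max = (y + h / 2) * img_h
    box = tuple(round(v) for v in (x_min, y_min, x_max, y_max))
    return NAMES[int(class_id)], box


# Hypothetical label line for a 400x300 image
result = describe_label('1 0.375 0.5 0.25 0.5', 400, 300)
print(result)
```

If the printed class name does not match what is visible in the image, the `names` list and the indices in the label files are out of sync.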

The yolov5s.yaml file in the models folder:

    # Parameters
    nc: 3  # number of classes
    depth_multiple: 0.33  # model depth multiple
    width_multiple: 0.50  # layer channel multiple
    anchors:
      - [10,13, 16,30, 33,23]  # P3/8
      - [30,61, 62,45, 59,119]  # P4/16
      - [116,90, 156,198, 373,326]  # P5/32

    # YOLOv5 backbone
    backbone:
      # [from, number, module, args]
      [[-1, 1, Focus, [64, 3]],  # 0-P1/2
       [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
       [-1, 3, C3, [128]],
       [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
       [-1, 9, C3, [256]],
       [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
       [-1, 9, C3, [512]],
       [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
       [-1, 1, SPP, [1024, [5, 9, 13]]],
       [-1, 3, C3, [1024, False]],  # 9
      ]

    # YOLOv5 head
    head:
      [[-1, 1, Conv, [512, 1, 1]],
       [-1, 1, nn.Upsample, [None, 2, 'nearest']],
       [[-1, 6], 1, Concat, [1]],  # cat backbone P4
       [-1, 3, C3, [512, False]],  # 13

       [-1, 1, Conv, [256, 1, 1]],
       [-1, 1, nn.Upsample, [None, 2, 'nearest']],
       [[-1, 4], 1, Concat, [1]],  # cat backbone P3
       [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

       [-1, 1, Conv, [256, 3, 2]],
       [[-1, 14], 1, Concat, [1]],  # cat head P4
       [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

       [-1, 1, Conv, [512, 3, 2]],
       [[-1, 10], 1, Concat, [1]],  # cat head P5
       [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

       [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
      ]
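
The numbers in this file are not used literally: `depth_multiple` and `width_multiple` scale the `number` and channel arguments when the model is built. The sketch below reflects my reading of how the YOLOv5 repository applies them (repeat counts rounded with a floor of 1, channels rounded up to a multiple of 8); the function names are hypothetical, and the exact rounding may differ in your checkout.

```python
import math


def scaled_depth(n, depth_multiple):
    """Number of times a module is repeated after depth scaling."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


def scaled_width(channels, width_multiple, divisor=8):
    """Output channels after width scaling, rounded up to a multiple of divisor."""
    return math.ceil(channels * width_multiple / divisor) * divisor


def detect_channels(nc, anchors_per_scale=3):
    """Outputs per detection scale: (x, y, w, h, objectness, nc classes) per anchor."""
    return anchors_per_scale * (nc + 5)


# Values from the yolov5s.yaml above: depth_multiple 0.33, width_multiple 0.50, nc 3
print(scaled_depth(9, 0.33))      # repeats of the "[-1, 9, C3, [256]]" layer
print(scaled_width(1024, 0.50))   # channels of the "Conv, [1024, 3, 2]" layer
print(detect_channels(3))         # Detect outputs per scale for 3 classes
```

Under these assumptions, changing `nc` from the default to 3 only changes the size of the Detect outputs; the backbone and the rest of the head stay the same.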

Download the yolov5s.pt pretrained weights, which are about 14 MB.

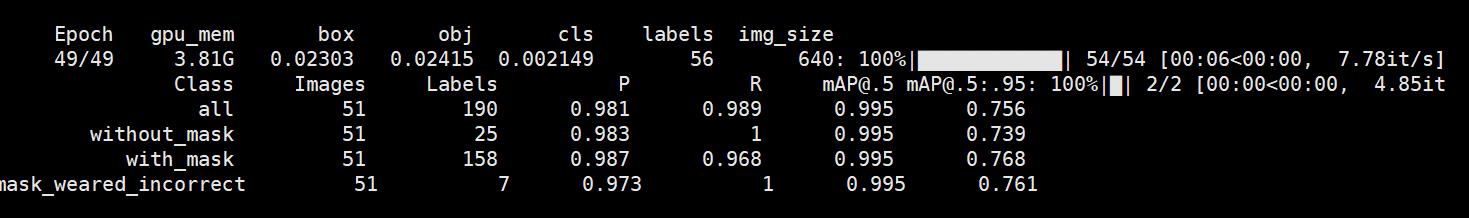

Training command: `python train.py --batch 16 --epochs 50 --data /data/data.yaml --cfg /models/yolov5s.yaml`
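
When the batch size and epoch count are changed repeatedly, it can help to assemble the command in a script. This is only a sketch: the helper name `build_train_command` is hypothetical, the flags and default paths are copied as-is from the command above, and the `subprocess` call is left commented out because it needs to run from inside the yolov5 checkout.

```python
import shlex


def build_train_command(batch=16, epochs=50,
                        data='/data/data.yaml', cfg='/models/yolov5s.yaml'):
    """Return the train.py invocation as an argument list."""
    return ['python', 'train.py',
            '--batch', str(batch),
            '--epochs', str(epochs),
            '--data', data,
            '--cfg', cfg]


cmd = build_train_command()
print(shlex.join(cmd))

# To launch training from the yolov5 directory:
# import subprocess
# subprocess.run(cmd, check=True)
```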

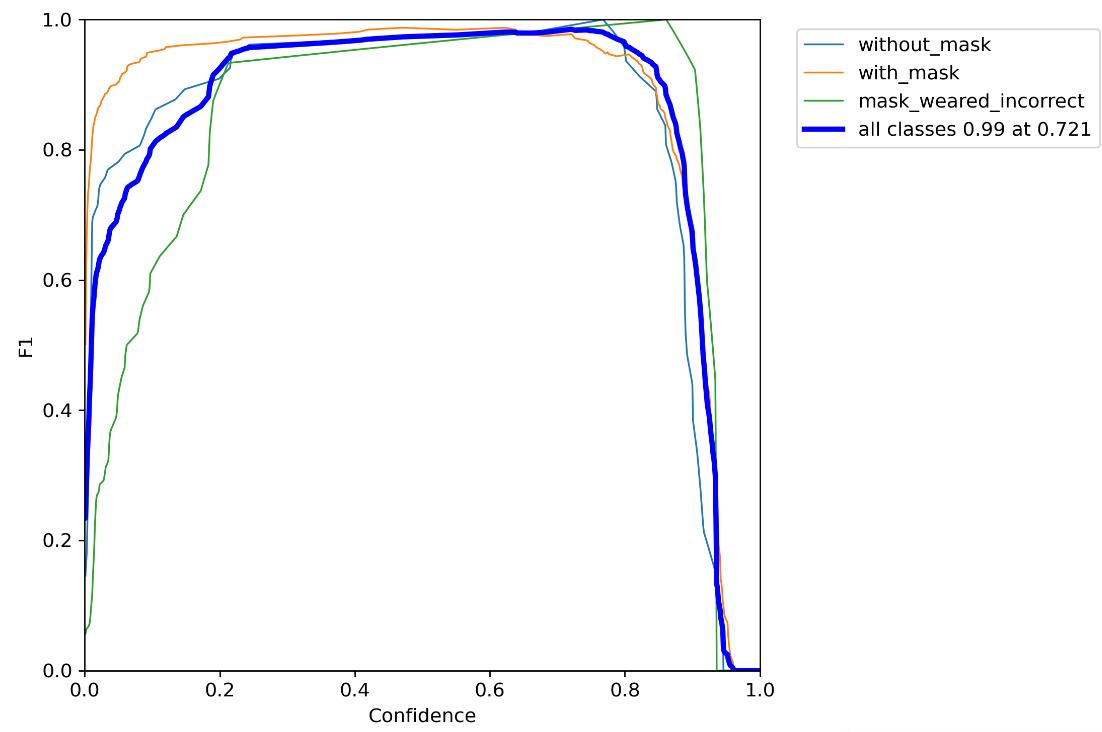

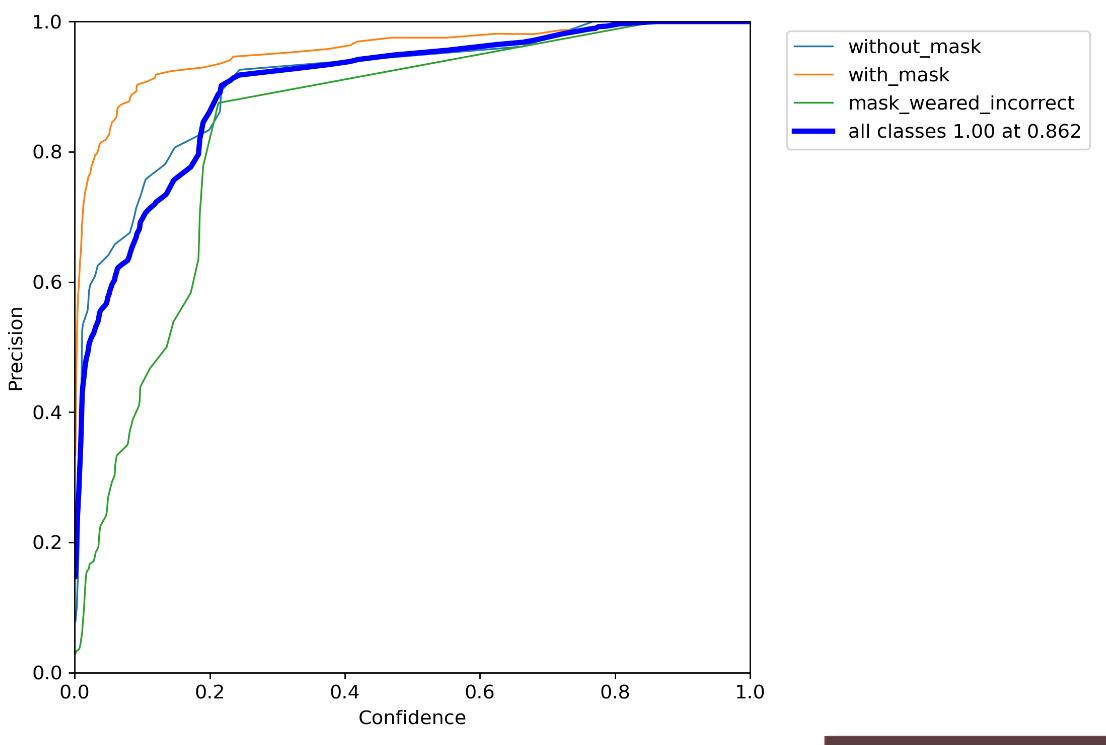

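Training results

About the P (precision), R (recall), mAP50, and mAP95 metrics:

mAP50, mAP60, mAP70, and so on are the mean average precision obtained when a detection counts as correct only if its IoU with the ground-truth box exceeds 0.5, 0.6, 0.7, and so on. Higher is better. For the same model, the score drops as the threshold rises, because fewer detections reach an IoU above 0.95 than an IoU above 0.5. The `metrics/mAP_0.5:0.95` column reported by YOLOv5 averages the mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.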
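
To illustrate why a stricter threshold lowers the score, the sketch below computes the IoU of one predicted box against one ground-truth box and checks it against several thresholds. The two boxes are hypothetical, and the function is a plain textbook IoU, not code taken from YOLOv5.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (100, 50, 200, 150)  # hypothetical boxes
prediction = (110, 60, 210, 160)    # shifted by 10 pixels in x and y
score = iou(prediction, ground_truth)
for threshold in (0.5, 0.75, 0.95):
    print(threshold, score >= threshold)
```

Here the prediction overlaps the ground truth with an IoU of about 0.68, so it counts as a hit at the 0.5 threshold but as a miss at 0.75 and 0.95.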

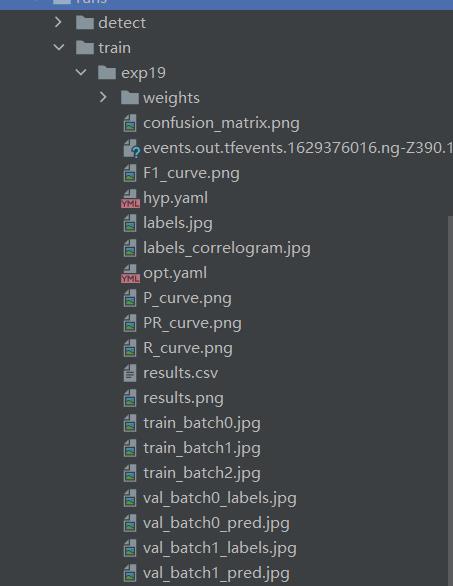

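When training finishes, YOLOv5 creates a runs folder.

Inside it is a train folder. The first run is saved as exp by default, and a second run is saved as exp2. Mine is exp19 because I kept changing the batch size and the number of epochs.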
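
With many runs it is easy to lose track of which folder is the newest. The sketch below picks the run with the highest number, assuming the naming described above (`exp`, `exp2`, `exp3`, ...) and assuming that a higher number means a more recent run; the helper name `latest_run` is hypothetical.

```python
import re


def latest_run(names):
    """Return the highest-numbered run name among 'exp', 'exp2', 'exp3', ..."""
    def run_number(name):
        match = re.fullmatch(r'exp(\d*)', name)
        if match is None:
            return -1  # not a run directory
        return int(match.group(1) or 1)  # plain 'exp' is the first run

    runs = [n for n in names if run_number(n) > 0]
    return max(runs, key=run_number) if runs else None


print(latest_run(['exp', 'exp2', 'exp10', 'exp19', 'notes.txt']))

# In practice the names would come from the folder itself, for example:
# import os
# latest_run(os.listdir('runs/train'))
```

Sorting by the numeric suffix matters because a plain string sort would place `exp19` before `exp2`.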

Metrics from the 50-epoch training run:

    epoch, train/box_loss, train/obj_loss, train/cls_loss, metrics/precision, metrics/recall, metrics/mAP_0.5, metrics/mAP_0.5:0.95, val/box_loss, val/obj_loss, val/cls_loss, x/lr0, x/lr1, x/lr2
    0, 0.11183, 0.061692, 0.036166, 0.0040797, 0.023207, 0.00094919, 0.00015348, 0.088802, 0.044058, 0.029341, 0.00053, 0.00053, 0.09523
    1, 0.090971, 0.06624, 0.024651, 0.44734, 0.13502, 0.11474, 0.0279, 0.067798, 0.037852, 0.022663, 0.0010692, 0.0010692, 0.090369
    2, 0.070342, 0.052357, 0.02153, 0.85191, 0.20848, 0.22242, 0.077028, 0.055487, 0.027934, 0.019595, 0.0016049, 0.0016049, 0.085505
    3, 0.0699, 0.043899, 0.020244, 0.87295, 0.16442, 0.20657, 0.078501, 0.055767, 0.024396, 0.018845, 0.0021348, 0.0021348, 0.080635
    4, 0.064317, 0.04001, 0.018747, 0.83973, 0.19818, 0.20992, 0.068715, 0.055507, 0.022381, 0.018095, 0.0026562, 0.0026562, 0.075756
    5, 0.060517, 0.040462, 0.01871, 0.92677, 0.26977, 0.31691, 0.13554, 0.046723, 0.020782, 0.017638, 0.0031668, 0.0031668, 0.070867
    6, 0.055565, 0.037719, 0.017239, 0.56328, 0.42257, 0.34731, 0.14537, 0.042455, 0.020308, 0.015934, 0.0036641, 0.0036641, 0.065964
    7, 0.051137, 0.036675, 0.01443, 0.70063, 0.59002, 0.56658, 0.28446, 0.037626, 0.018761, 0.012734, 0.0041459, 0.0041459, 0.061046
    8, 0.051564, 0.036086, 0.010754, 0.75331, 0.59469, 0.57015, 0.21859, 0.044253, 0.018153, 0.010504, 0.00461, 0.00461, 0.05611
    9, 0.054336, 0.036489, 0.01014, 0.81292, 0.53958, 0.57799, 0.28552, 0.037579, 0.018368, 0.009468, 0.0050544, 0.0050544, 0.051154
    10, 0.052323, 0.034572, 0.0095445, 0.85048, 0.62312, 0.6267, 0.338, 0.031516, 0.017655, 0.010057, 0.005477, 0.005477, 0.046177
    11, 0.050382, 0.035035, 0.0086806, 0.86829, 0.59491, 0.61092, 0.3379, 0.032883, 0.017608, 0.0090742, 0.0058761, 0.0058761, 0.041176
    12, 0.046103, 0.035051, 0.0082707, 0.8746, 0.60331, 0.72019, 0.42562, 0.030186, 0.016849, 0.0087845, 0.00625, 0.00625, 0.03615
    13, 0.045144, 0.032905, 0.0071727, 0.50942, 0.80319, 0.76972, 0.38975, 0.033539, 0.016185, 0.0083475, 0.0065973, 0.0065973, 0.031097
    14, 0.043005, 0.031431, 0.0076075, 0.94694, 0.59886, 0.75893, 0.45433, 0.028811, 0.016204, 0.0087185, 0.0069167, 0.0069167, 0.026017
    15, 0.042583, 0.032493, 0.0075345, 0.54628, 0.78986, 0.70483, 0.39052, 0.034077, 0.016426, 0.010784, 0.007207, 0.007207, 0.020907
    16, 0.044892, 0.032174, 0.0079312, 0.93707, 0.62972, 0.75324, 0.417, 0.031565, 0.015583, 0.0074945, 0.0074674, 0.0074674, 0.015767
    17, 0.04351, 0.033322, 0.0071257, 0.9655, 0.60346, 0.75329, 0.43919, 0.029984, 0.015935, 0.0071412, 0.0076971, 0.0076971, 0.010597
    18, 0.041603, 0.032276, 0.0070445, 0.56572, 0.84931, 0.80283, 0.50439, 0.027445, 0.015567, 0.0067956, 0.0077031, 0.0077031, 0.0077031
    19, 0.03878, 0.031363, 0.0064713, 0.57055, 0.85292, 0.81709, 0.54818, 0.02845, 0.014806, 0.006212
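
A table like this is easier to read with a few lines of code. The sketch below parses three complete rows copied from the table above (epochs 12, 17, and 18) and picks the one with the highest mAP at IoU 0.5. In practice the text would be read from the results file in the run folder; its exact name depends on the YOLOv5 version, so that part is left as an assumption.

```python
import csv
import io

# Header and three rows copied from the table above.
SAMPLE = """\
epoch, train/box_loss, train/obj_loss, train/cls_loss, metrics/precision, metrics/recall, metrics/mAP_0.5, metrics/mAP_0.5:0.95, val/box_loss, val/obj_loss, val/cls_loss, x/lr0, x/lr1, x/lr2
12, 0.046103, 0.035051, 0.0082707, 0.8746, 0.60331, 0.72019, 0.42562, 0.030186, 0.016849, 0.0087845, 0.00625, 0.00625, 0.03615
17, 0.04351, 0.033322, 0.0071257, 0.9655, 0.60346, 0.75329, 0.43919, 0.029984, 0.015935, 0.0071412, 0.0076971, 0.0076971, 0.010597
18, 0.041603, 0.032276, 0.0070445, 0.56572, 0.84931, 0.80283, 0.50439, 0.027445, 0.015567, 0.0067956, 0.0077031, 0.0077031, 0.0077031
"""

# skipinitialspace drops the blank that follows each comma in the file
rows = list(csv.DictReader(io.StringIO(SAMPLE), skipinitialspace=True))
best = max(rows, key=lambda row: float(row['metrics/mAP_0.5']))
print('best epoch:', best['epoch'])
print('mAP_0.5:', best['metrics/mAP_0.5'])
print('precision:', best['metrics/precision'], 'recall:', best['metrics/recall'])
```

Looking at precision and recall next to mAP is useful here, because in this run they swing widely from epoch to epoch even while mAP keeps improving.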