爬虫实战百度贴吧(想搜什么帖子都可以)

Posted ZSYL

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了爬虫实战百度贴吧(想搜什么帖子都可以)相关的知识,希望对你有一定的参考价值。

前言

百度贴吧—全球领先的中文社区!

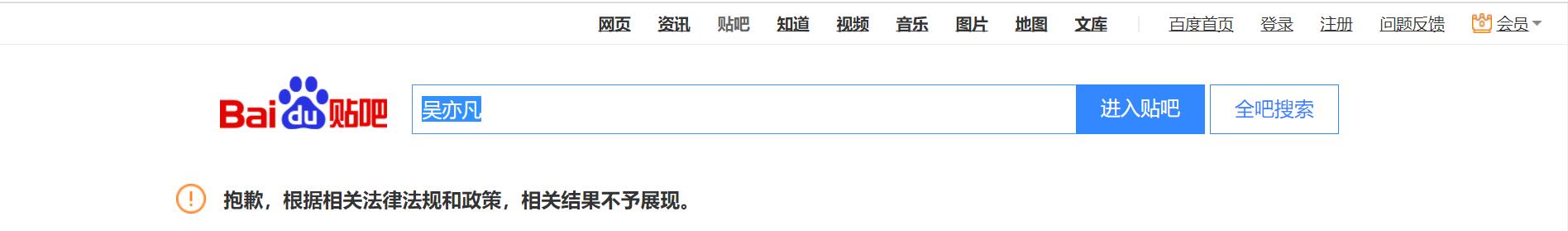

里面搜你想知道的 getAll!

既然不能吃瓜,那就玩游戏吧!

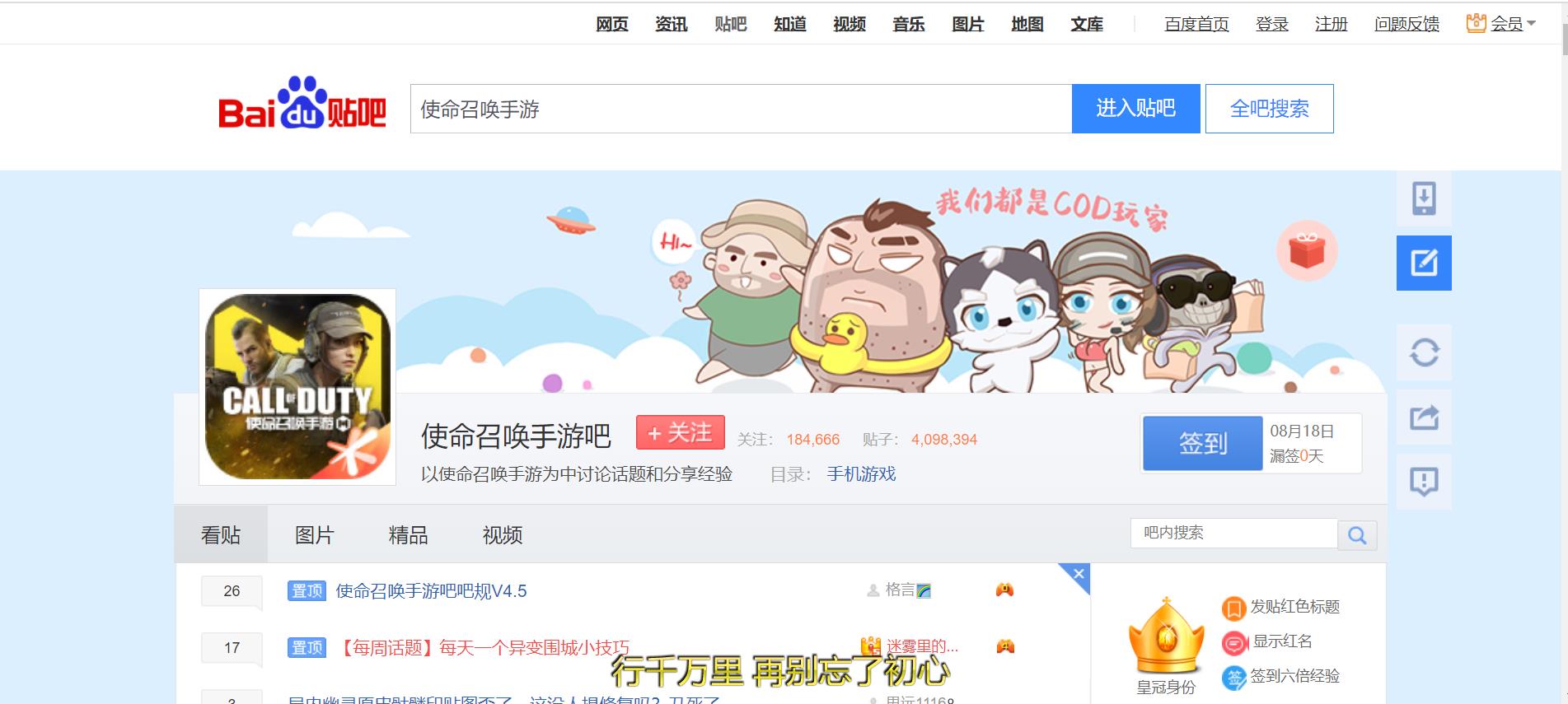

使命召唤回归原味经典!

当年多么热爱的枪战游戏哇!

1. 获取数据

百度贴吧 https://tieba.baidu.com/index.html

关键字搜索:https://tieba.baidu.com/f?ie=utf-8&kw=使命召唤

def get_data(self, url):

response = requests.get(url, headers=self.headers)

print(url)

return response.content

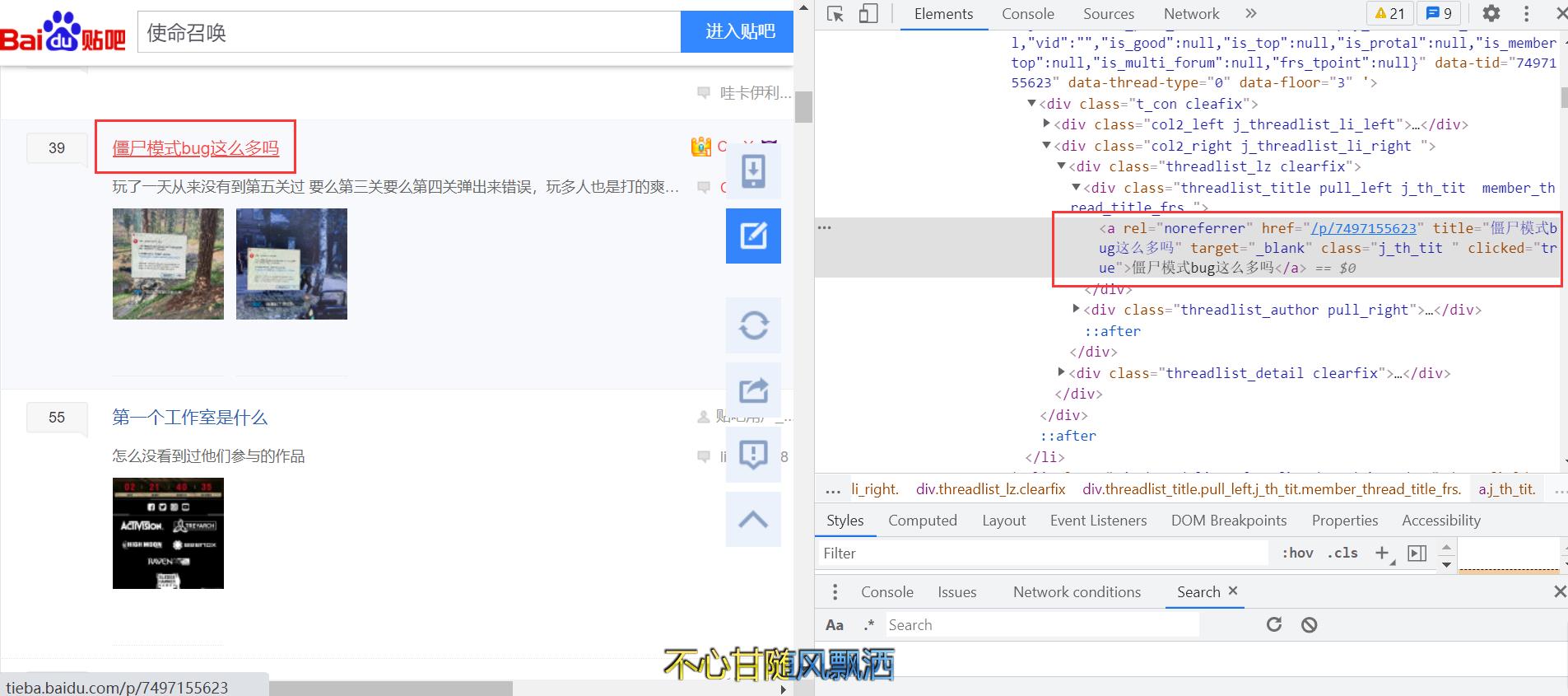

2. 解析数据

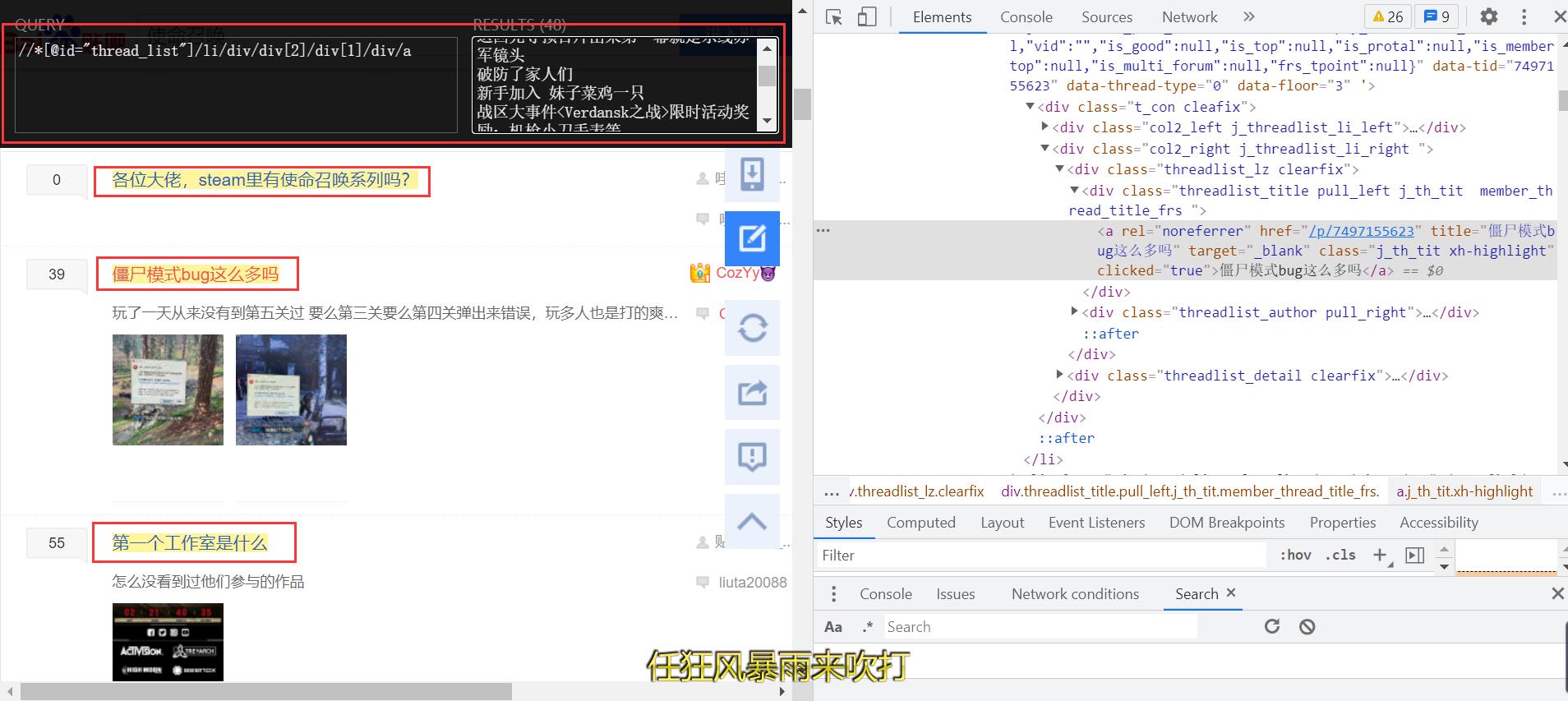

使用XPath获取所有贴吧内容的title和link,注意:去除广告。

XPath Helper工具不断调试,便捷插件安装请参考:Chrome安装爬虫必备插件:Xpath Helper高效解析网页内容(实测有效)

def parse_data(self, data):

# 创建element对象

data = data.decode().replace('<!--', '').replace('-->', '')

html = etree.HTML(data)

el_list = html.xpath('//li[@class=" j_thread_list clearfix thread_item_box"]/div/div[2]/div[1]/div[1]/a')

# print(len(el_list))

data_list = []

for el in el_list:

temp = {}

temp['title'] = el.xpath('./text()')[0]

temp['link'] = 'https://tieba.baidu.com/' + el.xpath('./@href')[0]

data_list.append(temp)

# 获取下一页

try:

next_url = 'https:' + html.xpath('//a[contains(text(), "下一页")]/@href')[0] # //a[@class="next pagination-item"]/@href

except:

next_url = None

return data_list, next_url

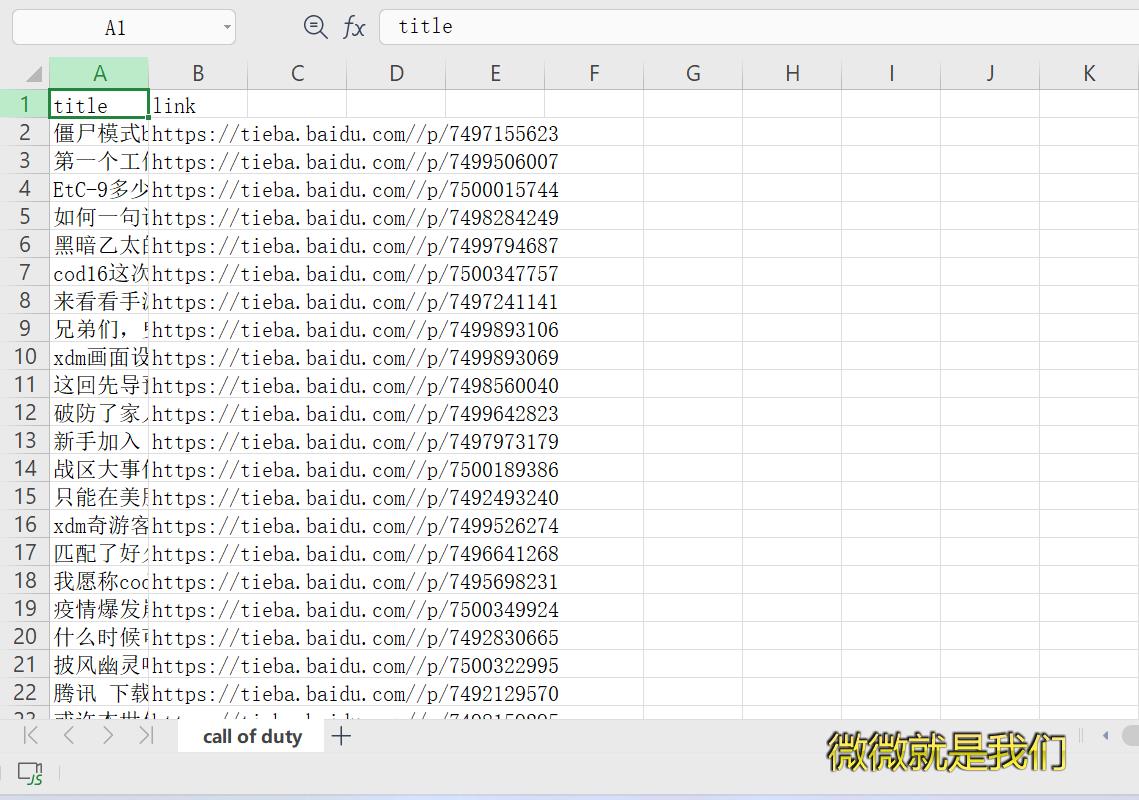

3. 保存数据

def save_data(self, data_list):

for data in data_list:

self.ws.append(list(data.values())) # 添加字典的values

self.num += 1

4. 完整代码

import requests

from lxml import etree

import openpyxl

class Tieba(object):

def __init__(self, name):

self.url = 'https://tieba.baidu.com/f?kw={}&ie=utf-8&pn=0'.format(name)

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4542.2 Safari/537.36',

}

self.wb = openpyxl.Workbook() # 创建工作簿

self.ws = self.wb.active # 激活工作表sheet

self.ws.title = 'call of duty' # 更改sheet名

self.ws.append(['title', 'link']) # 设置表头

self.num = 0

def get_data(self, url):

response = requests.get(url, headers=self.headers)

print(url)

return response.content

def parse_data(self, data):

# 创建element对象

data = data.decode().replace('<!--', '').replace('-->', '')

html = etree.HTML(data)

el_list = html.xpath('//li[@class=" j_thread_list clearfix thread_item_box"]/div/div[2]/div[1]/div[1]/a')

# print(len(el_list))

data_list = []

for el in el_list:

temp = {}

temp['title'] = el.xpath('./text()')[0]

temp['link'] = 'https://tieba.baidu.com/' + el.xpath('./@href')[0]

data_list.append(temp)

# 获取下一页

try:

next_url = 'https:' + html.xpath('//a[contains(text(), "下一页")]/@href')[0] # //a[@class="next pagination-item"]/@href

except:

next_url = None

return data_list, next_url

def save_data(self, data_list):

for data in data_list:

self.ws.append(list(data.values())) # 添加字典的values

self.num += 1

def run(self):

# url

# headers

next_url = self.url

while next_url: # 不到最后一页,不为None

# 发送请求获取响应

data = self.get_data(next_url)

# 从响应中提取数据和翻页用的数据

data_list, next_url = self.parse_data(data)

self.save_data(data_list)

# 测试需要

# if self.num > 200:

# break

# # 判断是否终结

# if not next_url:

# break

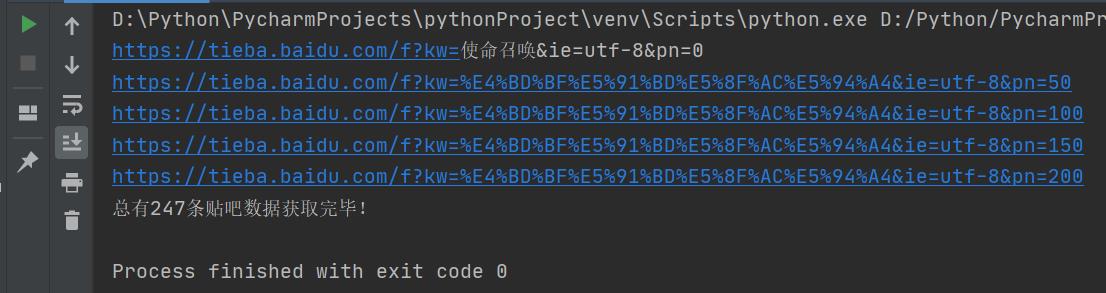

if __name__ == '__main__':

tieba = Tieba('使命召唤')

tieba.run()

tieba.wb.save('call of duty’s tieba_data.xlsx')

print(f'总有{tieba.num}条贴吧数据获取完毕!')

5. 效果展示

加油!

感谢!

努力!

以上是关于爬虫实战百度贴吧(想搜什么帖子都可以)的主要内容,如果未能解决你的问题,请参考以下文章