Binary Deployment of a K8S Cluster Architecture: The Foundation for Learning K8S Clusters

Posted 码海小虾米_


1. Deploy etcd

Run the following on the master node.

[root@192 etcd-cert]# cd /usr/local/bin/

[root@192 bin]# rz -E    # upload cfssl, cfssl-certinfo, and cfssljson

[root@192 bin]# chmod +x *

[root@192 bin]# ls

cfssl cfssl-certinfo cfssljson

// Generate the etcd certificates

[root@192 ~]# mkdir /opt/k8s

[root@192 ~]# cd /opt/k8s/

[root@192 k8s]# rz -E    # upload etcd-cert.sh and etcd.sh [needs editing]

[root@192 k8s]# chmod +x etcd*

[root@192 k8s]# mkdir etcd-cert

[root@192 k8s]# mv etcd-cert.sh etcd-cert

[root@192 k8s]# cd etcd-cert/

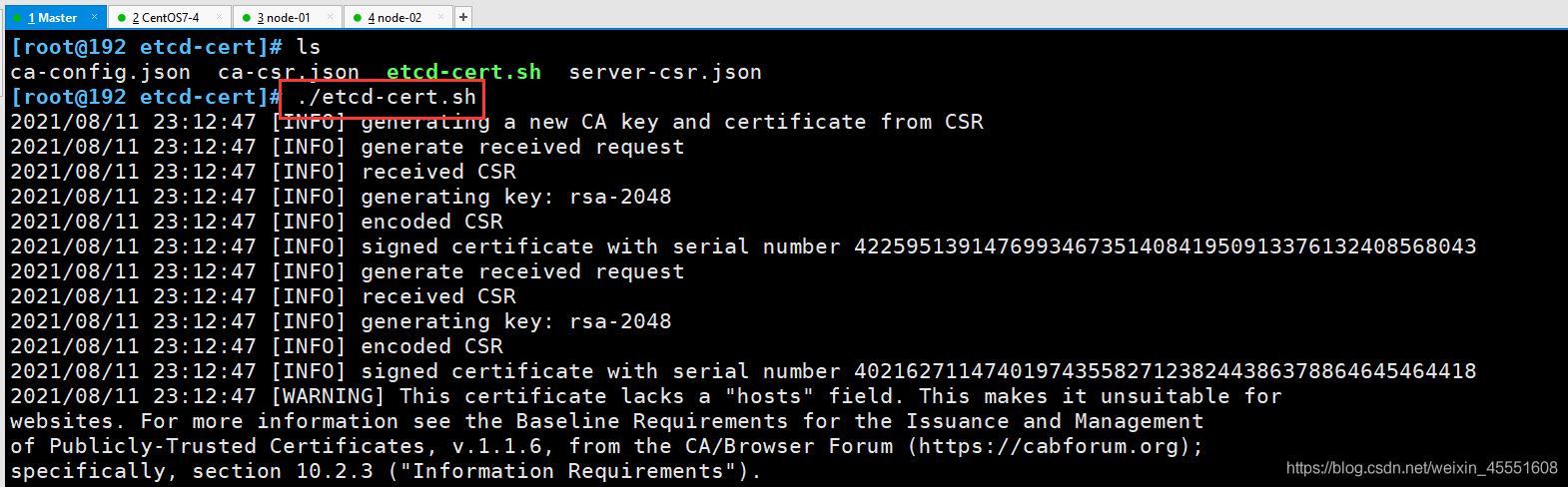

[root@192 etcd-cert]# ./etcd-cert.sh

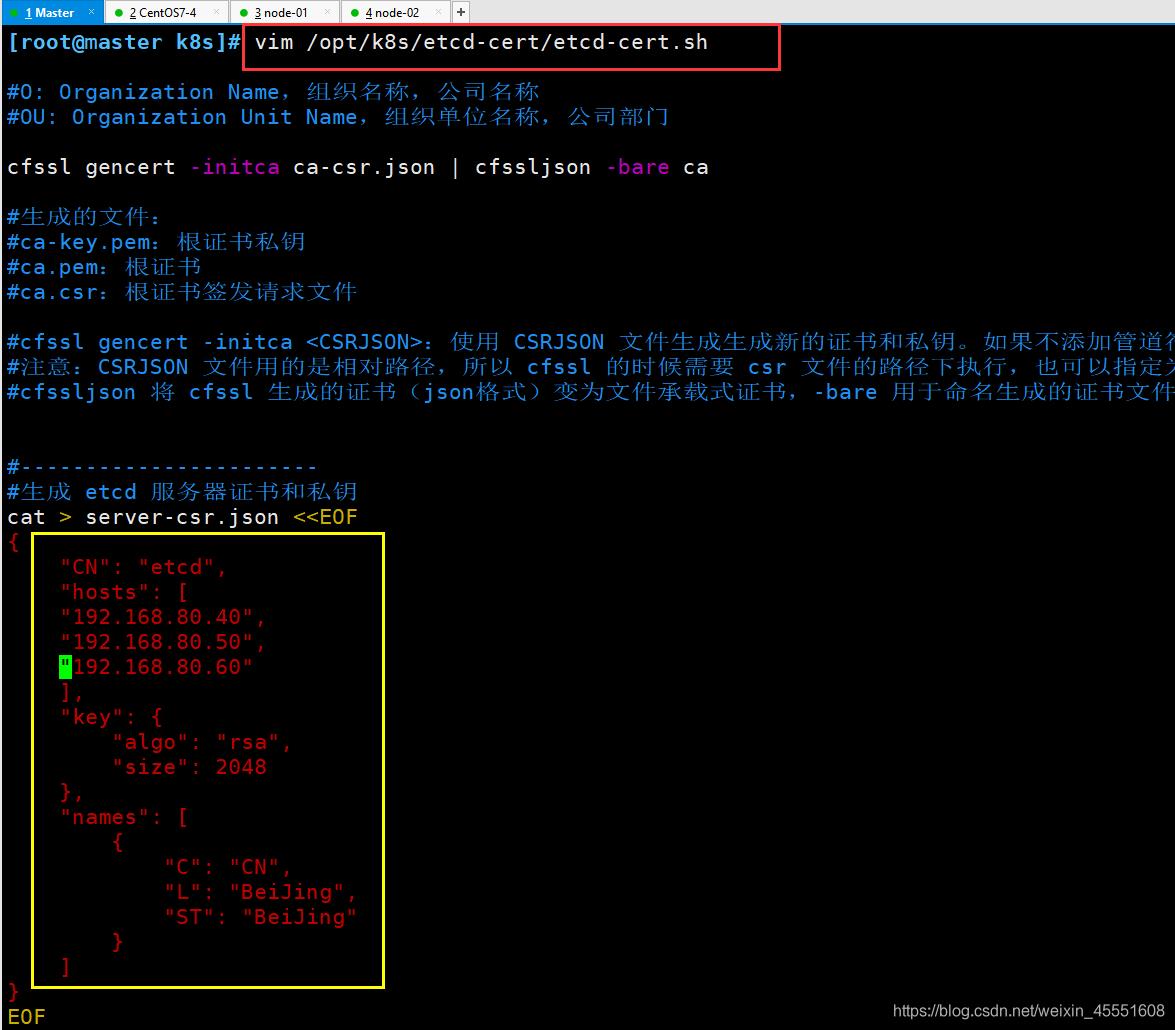

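① Generate the etcd certificates. etcd-cert.sh must first be edited so that it uses the IP addresses of your own cluster nodes;

then run it to generate the certificates.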
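The script itself appears only as a screenshot, so its exact contents are not reproduced here. As a rough sketch of the kind of edit involved: a cfssl server CSR normally carries the node IPs in its `hosts` array. The function name `make_server_csr`, the `CN` value, and the `names` fields below are illustrative assumptions, not taken from the original script.

```shell
# Hypothetical helper: print a cfssl-style server CSR whose "hosts" array
# lists the given IP addresses. All field values other than the IPs are
# assumptions made for illustration.
make_server_csr() {
  local hosts="" ip
  for ip in "$@"; do
    # Append each IP as a quoted JSON string, comma-separated.
    hosts="${hosts}${hosts:+, }\"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

# Example with the three node IPs used in this article.
make_server_csr 192.168.80.40 192.168.80.50 192.168.80.60
```

JSON of this shape would typically be passed to `cfssl gencert` together with a CA certificate and key; the exact invocation depends on the script in use.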

// etcd binary package

[root@192 k8s]# cd /opt/k8s/

[root@192 k8s]# rz -E    # upload etcd-v3.3.10-linux-amd64.tar.gz

rz waiting to receive.

[root@192 k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64.tar.gz

[root@192 k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

// Create directories for the config files, binaries, and certificates

[root@192 k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@192 k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

// Copy the certificates

[root@192 k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

// Start etcd; the script blocks while it waits for the other nodes to join

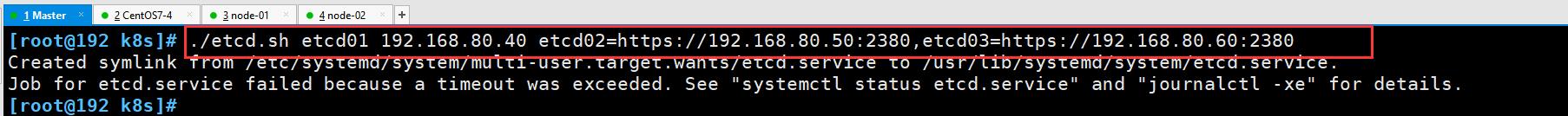

[root@192 k8s]# ./etcd.sh etcd01 192.168.80.40 etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380

② The command appears to hang: it is waiting for the other nodes to join.
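The three arguments to etcd.sh are the local member name, the local IP, and the remaining members as name=peer-URL pairs. Judging from the `--initial-cluster` value in the process listing under ③, the script prepends the local member to that list. A minimal sketch of that assembly follows; the function name `build_initial_cluster` is hypothetical and the real script may build the string differently.

```shell
build_initial_cluster() {
  # $1 = local member name, $2 = local IP, $3 = other members (name=url,...)
  local name="$1" ip="$2" others="$3"
  # Peer traffic uses port 2380; append the other members only if given.
  printf '%s=https://%s:2380%s\n' "$name" "$ip" "${others:+,$others}"
}

build_initial_cluster etcd01 192.168.80.40 \
  "etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"
```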

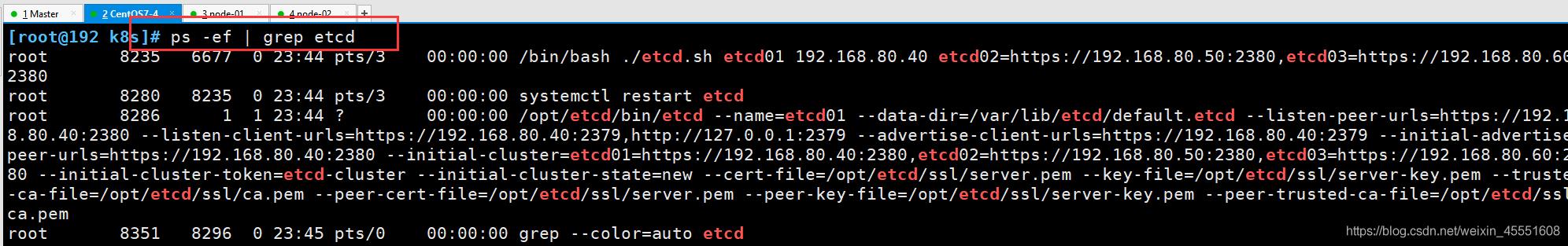

[root@192 k8s]# ps -ef | grep etcd

root 8235 6677 0 23:44 pts/3 00:00:00 /bin/bash ./etcd.sh etcd01 192.168.80.40 etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380

root 8280 8235 0 23:44 pts/3 00:00:00 systemctl restart etcd

root 8286 1 1 23:44 ? 00:00:00 /opt/etcd/bin/etcd --name=etcd01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.80.40:2380 --listen-client-urls=https://192.168.80.40:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.80.40:2379 --initial-advertise-peer-urls=https://192.168.80.40:2380 --initial-cluster=etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380 --initial-cluster-token=etcd-cluster --initial-cluster-state=new --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

root 8351 8296 0 23:45 pts/0 00:00:00 grep --color=auto etcd

③ Open a second terminal on the master; the etcd process is already running.

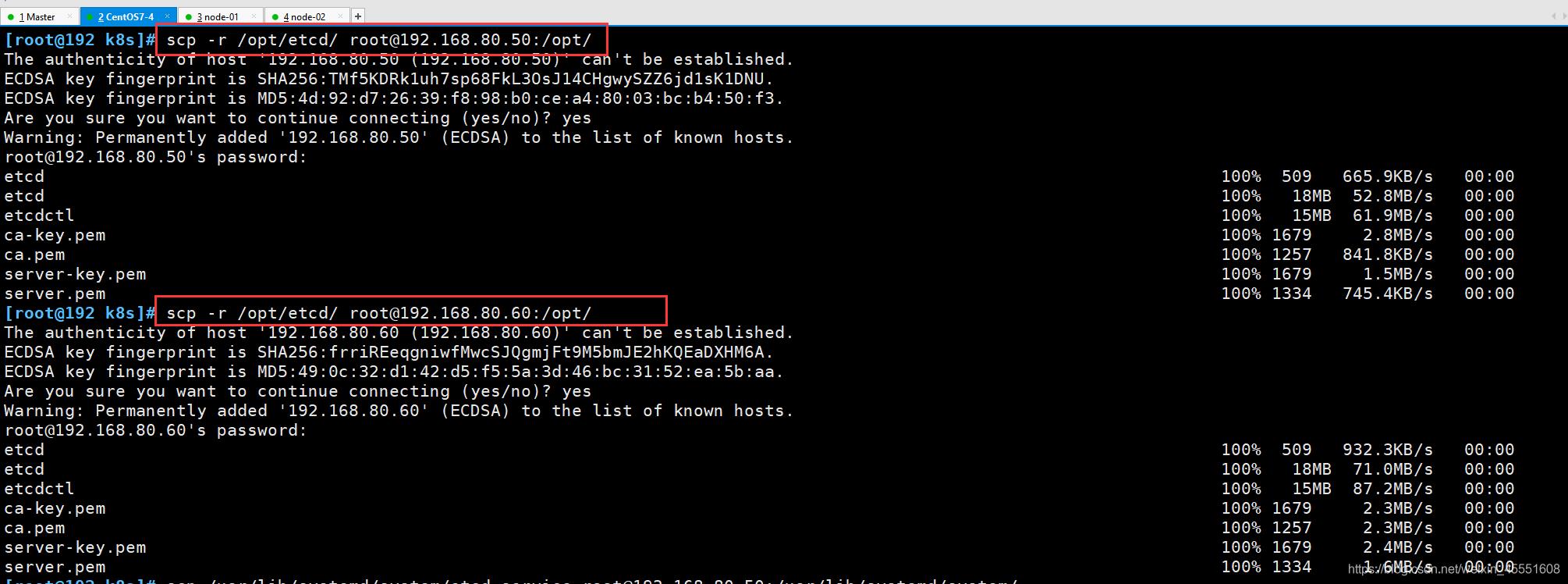

// Copy the etcd directory (including the certificates) to the other nodes

scp -r /opt/etcd/ root@192.168.80.50:/opt/

scp -r /opt/etcd/ root@192.168.80.60:/opt/

// Copy the systemd unit file to the other nodes

scp /usr/lib/systemd/system/etcd.service root@192.168.80.50:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.80.60:/usr/lib/systemd/system/

④ Copy the certificates to the other nodes.

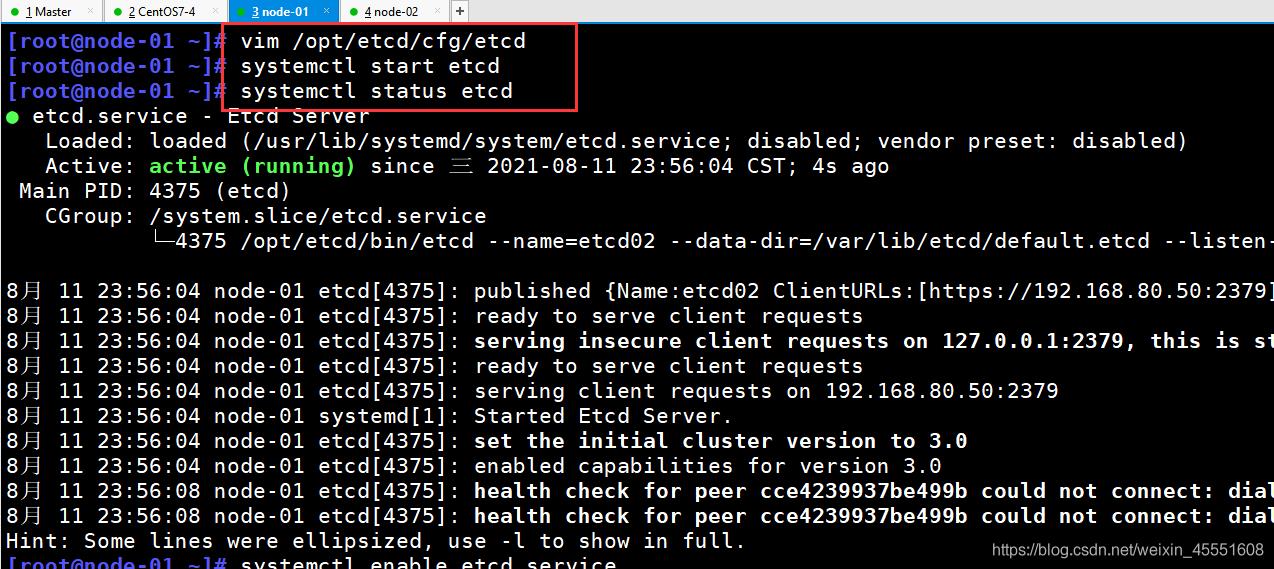
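With more than two nodes, the scp commands become repetitive. One possible way to express them as a loop is sketched below. The `NODES` and `DRY_RUN` variables and the `run` and `distribute_etcd` functions are illustrative names, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
# Hypothetical variables: node IPs from this article, dry run by default.
NODES="${NODES:-192.168.80.50 192.168.80.60}"
DRY_RUN="${DRY_RUN:-1}"

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_etcd() {
  local node
  for node in $NODES; do
    # Binaries, config, and certificates live under /opt/etcd.
    run scp -r /opt/etcd/ "root@${node}:/opt/"
    # The systemd unit file for the etcd service.
    run scp /usr/lib/systemd/system/etcd.service "root@${node}:/usr/lib/systemd/system/"
  done
}

distribute_etcd
```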

⑤ On node-01

[root@node-01 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02" # change to etcd02

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.50:2380" # change to 192.168.80.50

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.50:2379" # change to 192.168.80.50

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.50:2380" # change to 192.168.80.50

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.50:2379" # change to 192.168.80.50

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

// Start etcd

[root@node-01 ~]# systemctl start etcd

[root@node-01 ~]# systemctl status etcd

[root@node-01 ~]# systemctl enable etcd.service

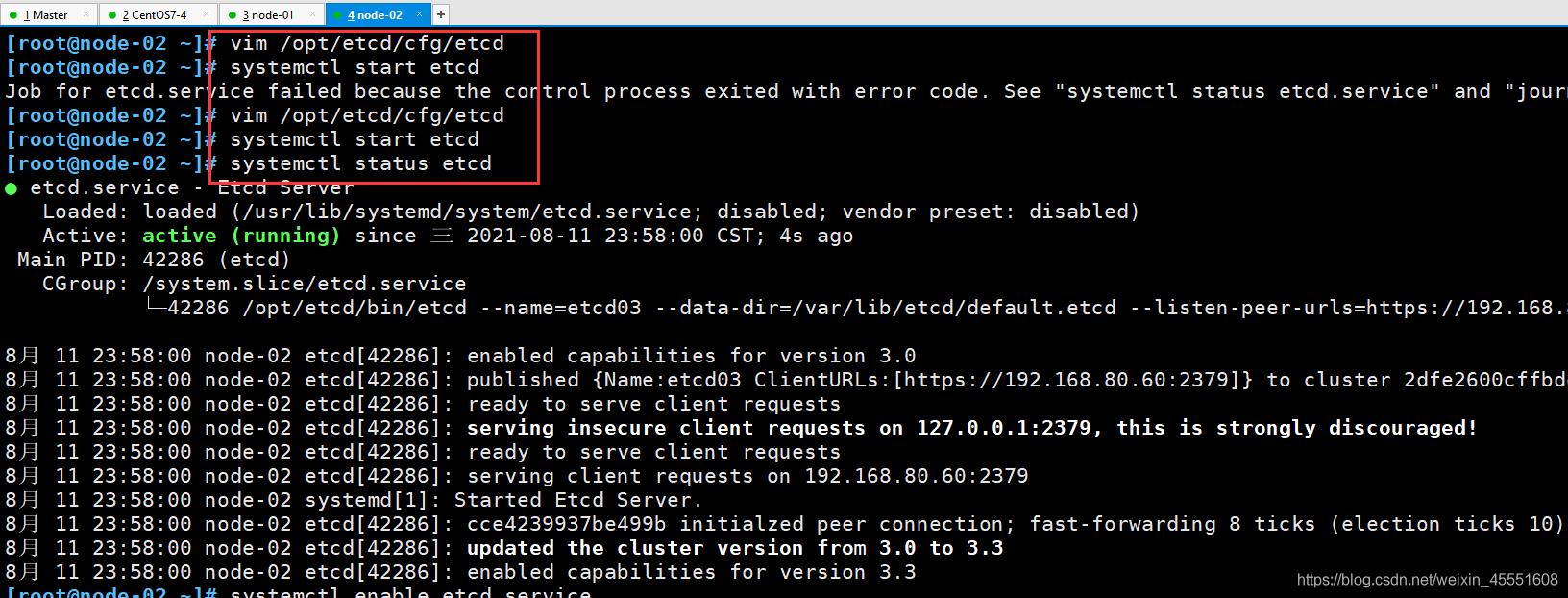
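Only the member name and IP differ between nodes, so the per-node file could also be generated instead of edited by hand. The sketch below assumes the file format shown above; `render_etcd_cfg` is a hypothetical helper, and it writes to standard output rather than to /opt/etcd/cfg/etcd.

```shell
# Cluster membership shared by all nodes (values from this article).
CLUSTER="etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"

render_etcd_cfg() {
  # $1 = member name, $2 = this node's IP
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

render_etcd_cfg etcd02 192.168.80.50
```

For the third member the call would be `render_etcd_cfg etcd03 192.168.80.60`; redirect the output to the config file once it looks right.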

⑥ On node-02

[root@node-02 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03" # change to etcd03

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.60:2380" # change to 192.168.80.60

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.60:2379" # change to 192.168.80.60

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.60:2380" # change to 192.168.80.60

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.60:2379" # change to 192.168.80.60

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

// Start etcd

[root@node-02 ~]# systemctl start etcd

[root@node-02 ~]# systemctl status etcd

[root@node-02 ~]# systemctl enable etcd.service

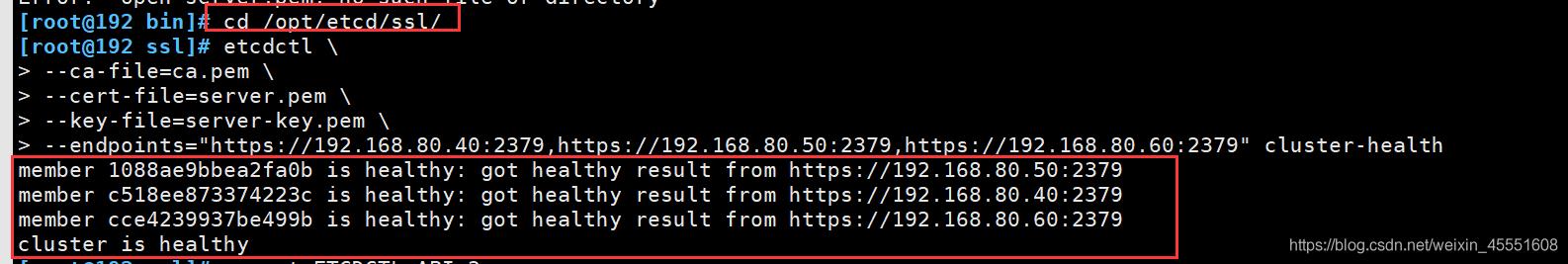

⑦ Check the cluster health (on the master)

[root@192 bin]# ln -s /opt/etcd/bin/etcdctl /usr/local/bin/

[root@192 bin]# cd /opt/etcd/ssl/

[root@192 ssl]# etcdctl \
--ca-file=ca.pem \
--cert-file=server.pem \
--key-file=server-key.pem \
--endpoints="https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379" cluster-health

member 1088ae9bbea2fa0b is healthy: got healthy result from https://192.168.80.50:2379

member c518ee873374223c is healthy: got healthy result from https://192.168.80.40:2379

member cce4239937be499b is healthy: got healthy result from https://192.168.80.60:2379

cluster is healthy

---------------------------------------------------------------------------------------

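--ca-file: CA certificate used to verify the certificates of HTTPS-enabled servers

--cert-file: SSL certificate file that identifies the HTTPS client

--key-file: SSL key file that identifies the HTTPS client

--endpoints: comma-separated list of machine addresses in the cluster

cluster-health: checks the health of the etcd cluster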

---------------------------------------------------------------------------------------

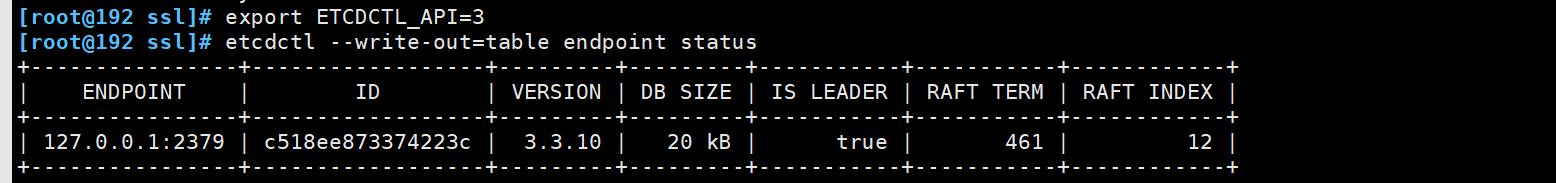
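For scripted checks, the textual output of `cluster-health` can be reduced to a count of healthy members. The following sketch parses the output format shown above; `count_healthy` is a hypothetical helper that reads from standard input, so it can be tried without a running cluster.

```shell
count_healthy() {
  # Count lines of the form "member <id> is healthy: ...".
  # grep -c exits non-zero when the count is 0, hence the "|| true".
  grep -c '^member [0-9a-f]* is healthy' || true
}

# Sample input copied from the cluster-health output in this article.
sample='member 1088ae9bbea2fa0b is healthy: got healthy result from https://192.168.80.50:2379
member c518ee873374223c is healthy: got healthy result from https://192.168.80.40:2379
member cce4239937be499b is healthy: got healthy result from https://192.168.80.60:2379
cluster is healthy'

printf '%s\n' "$sample" | count_healthy   # prints 3
```

In practice the function would be fed from the real command, for example by piping the `etcdctl ... cluster-health` invocation above into `count_healthy` and comparing the result with the expected member count.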

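⑧ Switch to the etcdctl v3 API to view the endpoint status and the member list

[root@192 ssl]# export ETCDCTL_API=3 # v2 and v3 commands differ slightly and the two APIs are not compatible; this etcdctl version defaults to v2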

[root@192 ssl]# etcdctl --write-out=table endpoint status

+----------------+------------------+---------+---------+-----------+-----------+------------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |

+----------------+------------------+---------+---------+-----------+-----------+------------+

| 127.0.0.1:2379 | c518ee873374223c | 3.3.10 | 20 kB | true | 461 | 12 |

+----------------+------------------+---------+---------+-----------+-----------+------------+

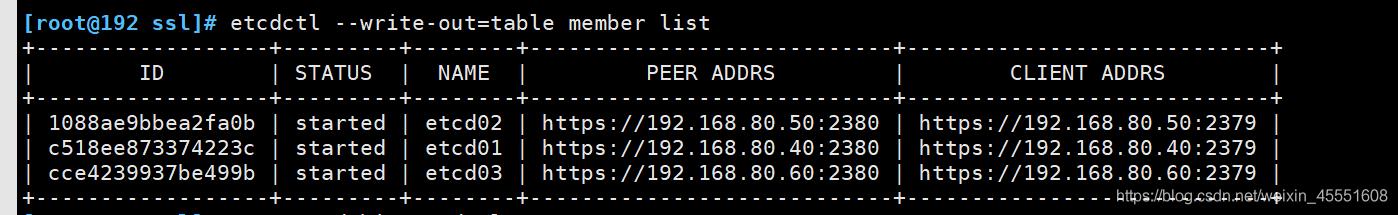

[root@192 ssl]# etcdctl --write-out=table member list

+------------------+---------+--------+----------------------------+----------------------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS |

+------------------+---------+--------+----------------------------+----------------------------+

| 1088ae9bbea2fa0b | started | etcd02 | https://192.168.80.50:2380 | https://192.168.80.50:2379 |

| c518ee873374223c | started | etcd01 | https://192.168.80.40:2380 | https://192.168.80.40:2379 |

| cce4239937be499b | started | etcd03 | https://192.168.80.60:2380 | https://192.168.80.60:2379 |

+------------------+---------+--------+----------------------------+----------------------------+

[root@192 ssl]# export ETCDCTL_API=2 # switch back to the v2 API
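Under the v3 API the TLS flags have different names (`--cacert`, `--cert`, `--key`) and the health check is `endpoint health`. As a hedged sketch, the hypothetical helper `health_cmd` below only prints the command line for the chosen API version so the two forms can be compared side by side. It assumes the certificate file names and endpoints used in this article.

```shell
ENDPOINTS="https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379"

health_cmd() {
  # $1 = API version (2 or 3); prints the matching etcdctl invocation.
  case "$1" in
    2) echo "ETCDCTL_API=2 etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints=${ENDPOINTS} cluster-health" ;;
    3) echo "ETCDCTL_API=3 etcdctl --cacert=ca.pem --cert=server.pem --key=server-key.pem --endpoints=${ENDPOINTS} endpoint health" ;;
    *) echo "unsupported API version: $1" >&2; return 1 ;;
  esac
}

health_cmd 2
health_cmd 3
```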

2. Deploy the Docker engine

Install Docker on every node.

[root@node-01 ~]# cd /etc/yum.repos.d/

[root@node-01 yum.repos.d]# mv repo.bak/* ./

[root@node-01 yum.repos.d]# ls

CentOS-Base.repo CentOS-Debuginfo.repo CentOS-Media.repo CentOS-Vault.repo repo.bak

CentOS-CR.repo CentOS-fasttrack.repo CentOS-Sources.repo local.repo

[root@node-01 yum.repos.d]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@node-01 yum.repos.d]# yum-config-manager --add-repo