31. Analyzing a Delimiter Problem

Posted by 大勇若怯任卷舒

31.1 The Problem

- Create the Hive table test_hive_delimiter with "\u001B" as the field delimiter:

create external table test_hive_delimiter
(
  id int,
  name string,
  address string
)
row format delimited fields terminated by '\u001B'
stored as textfile location '/fayson/test_hive_delimiter';

- Use Sqoop to import the MySQL table test into the Hive table test_hive_delimiter:

[root@ip-172-31-6-148 ~]# sqoop import --connect jdbc:mysql://ip-172-31-6-148.fayson.com:3306/fayson -username root -password 123456 --table test -m 1 --hive-import --fields-terminated-by "\0x001B" --target-dir /fayson/test_hive_delimiter --hive-table test_hive_delimiter
[root@ip-172-31-6-148 ~]# hadoop fs -ls /fayson/test_hive_delimiter
Found 2 items
-rw-r--r-- 3 fayson supergroup 0 2017-09-06 13:46 /fayson/test_hive_delimiter/_SUCCESS
-rwxr-xr-x 3 fayson supergroup 56 2017-09-06 13:46 /fayson/test_hive_delimiter/part-m-00000
[root@ip-172-31-6-148 ~]# hadoop fs -ls /fayson/test_hive_delimiter/part-m-00000
-rwxr-xr-x 3 fayson supergroup 56 2017-09-06 13:46 /fayson/test_hive_delimiter/part-m-00000
[root@ip-172-31-6-148 ~]#
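The listing above only shows that part-m-00000 exists. It does not show which byte actually separates the fields. One option is to fetch a local copy (for example with `hadoop fs -get /fayson/test_hive_delimiter/part-m-00000 .`) and scan it for control characters. The Python sketch below does this. It is a minimal sketch under two assumptions: `control_char_counts` is a hypothetical helper, not part of Hadoop or Sqoop, and records are assumed to be newline-terminated, so every other byte below 0x20 is reported.

```python
from collections import Counter


def control_char_counts(data: bytes) -> dict:
    """Count ASCII control bytes in raw file data, ignoring the newline record separator."""
    # Assumption: records end with '\n' (0x0A), so that byte is not counted.
    counts = Counter(b for b in data if b < 0x20 and b != 0x0A)
    # Label each byte in \xNN form, e.g. 0x1B -> "\x1b".
    return {f"\\x{b:02x}": n for b, n in sorted(counts.items())}


# Usage against the local copy fetched with `hadoop fs -get`:
# with open("part-m-00000", "rb") as f:
#     print(control_char_counts(f.read()))
```

If the import used the intended delimiter, 0x1B should be the only control byte reported, with two occurrences per three-column row.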

- Query the test_hive_delimiter table:

[root@ip-172-31-6-148 ~]# beeline
Beeline version 1.1.0-cdh5.12.1 by Apache Hive
beeline> !connect jdbc:hive2://localhost:10000/;principal=hive/ip-172-31-6-148.fayson.com@FAYSON.COM
...
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://localhost:10000/> select * from test_hive_delimiter;
...
INFO : OK
+-------------------------+---------------------------+------------------------------+--+
| test_hive_delimiter.id | test_hive_delimiter.name | test_hive_delimiter.address |
+-------------------------+---------------------------+------------------------------+--+
| NULL | NULL | NULL |
| NULL | NULL | NULL |
| NULL | NULL | NULL |
+-------------------------+---------------------------+------------------------------+--+
3 rows selected (0.287 seconds)
0: jdbc:hive2://localhost:10000/>

- Hive表的建表语句:

- 通过sqoop抽取Mysql表数据到hive表,发现hive表所有列显示为null

- Hive表的分隔符为“\\u001B”,sqoop指定的分隔符也是“\\u001B”

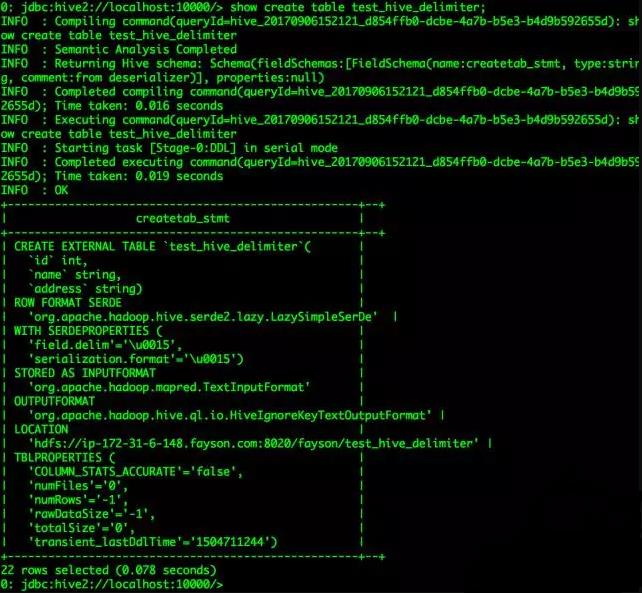

- Running show create table test_hive_delimiter returns the following definition:

0: jdbc:hive2://localhost:10000/> show create table test_hive_delimiter;
...
INFO : OK
+----------------------------------------------------+--+
| createtab_stmt |
+----------------------------------------------------+--+
| CREATE EXTERNAL TABLE `test_hive_delimiter`( |
| `id` int, |
| `name` string, |
| `address` string) |
| ROW FORMAT SERDE |
| 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe' |
| WITH SERDEPROPERTIES ( |
| 'field.delim'='\u0015', |
| 'serialization.format'='\u0015') |
| STORED AS INPUTFORMAT |
| 'org.apache.hadoop.mapred.TextInputFormat' |
| OUTPUTFORMAT |
| 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' |
| LOCATION |
| 'hdfs://ip-172-31-6-148.fayson.com:8020/fayson/test_hive_delimiter' |
| TBLPROPERTIES ( |
| 'COLUMN_STATS_ACCURATE'='false', |
| 'numFiles'='0', |
| 'numRows'='-1', |
| 'rawDataSize'='-1', |
| 'totalSize'='0', |
| 'transient_lastDdlTime'='1504705887') |
+----------------------------------------------------+--+
22 rows selected (0.084 seconds)
0: jdbc:hive2://localhost:10000/>

- The original CREATE TABLE statement specified "\u001B" as the delimiter, but show create table reports "\u0015". The delimiter was changed when the table was created.
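A simplified model of how a delimited text table is read shows why this mismatch turns every column into NULL. In the model, each line is split on the table's field delimiter. Missing trailing columns become NULL, and a value that cannot be parsed as int also becomes NULL. The Python sketch below is only that model. `parse_row` is a hypothetical name, not Hive's LazySimpleSerDe code. The sample record is assumed to match the first row later returned in 31.2, with fields joined by 0x1B as Sqoop was asked to write them.

```python
from typing import Optional, Tuple


def parse_row(line: str, delim: str) -> Tuple[Optional[int], Optional[str], Optional[str]]:
    """Simplified model of reading one text line into (id int, name string, address string)."""
    fields = line.split(delim)
    # Missing trailing columns become NULL (None); extra fields are dropped.
    fields = (fields + [None, None, None])[:3]
    raw_id, name, address = fields
    try:
        row_id = int(raw_id)
    except (TypeError, ValueError):
        # A value that cannot be parsed as int is read as NULL.
        row_id = None
    return row_id, name, address


record = "1\x1bfayson\x1bguangdong"  # assumed line content, fields joined by 0x1B
print(parse_row(record, "\x15"))  # -> (None, None, None): 0x15 never occurs, so the whole line is "id"
print(parse_row(record, "\x1b"))  # -> (1, 'fayson', 'guangdong')
```

With 0x15 as the delimiter, the entire line lands in the id column. That value is not an integer, and name and address have no value at all, which matches the all-NULL result above.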

31.2 Fixing the Problem

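- "\u001B" is a hexadecimal (Unicode) escape, whereas Hive's delimiter syntax is octal (for example '\001'). Hive therefore converts a hexadecimal delimiter instead of using it as written. That is why the table created with "\u001B" shows "\u0015".

- To create the Hive table without changing the delimiter in the data files, first convert the hexadecimal delimiter to octal and use the octal form in the DDL.

- Convert the hexadecimal delimiter to octal:

- "\u001B" in octal is "\033" (0x1B = 27 = 0o33). Online converter: http://tool.lu/hexconvert/

- Change the CREATE TABLE statement to use the octal "\033" as the delimiter: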

create external table test_hive_delimiter
(
  id int,
  name string,
  address string
)
row format delimited fields terminated by '\033'
stored as textfile location '/fayson/test_hive_delimiter';
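The hexadecimal-to-octal step can also be done locally instead of with the online converter. Below is a minimal Python sketch that assumes a single-byte ASCII delimiter (0x00 to 0x7F). `hive_octal_delimiter` and `delimiter_clause` are hypothetical helper names introduced here for illustration.

```python
def hive_octal_delimiter(hex_code: str) -> str:
    """Convert a hex code point such as "001B" into an octal escape such as "\\033"."""
    value = int(hex_code, 16)
    # Assumption: single-byte ASCII delimiters only (0x00-0x7F).
    if not 0 <= value <= 0x7F:
        raise ValueError(f"0x{value:X} is outside the ASCII range handled here")
    # Three zero-padded octal digits: 0x1B -> 27 -> "033".
    return "\\" + format(value, "03o")


def delimiter_clause(hex_code: str) -> str:
    """Build the DDL fragment used in the corrected CREATE TABLE statement."""
    return f"row format delimited fields terminated by '{hive_octal_delimiter(hex_code)}'"
```

For example, `delimiter_clause("001B")` yields `row format delimited fields terminated by '\033'`, the clause used above. `hive_octal_delimiter("001C")` gives `\034`.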

- Check the table definition again with show create table test_hive_delimiter:

0: jdbc:hive2://localhost:10000/> show create table test_hive_delimiter;
...
INFO : OK
+----------------------------------------------------+--+
| createtab_stmt |
+----------------------------------------------------+--+
| CREATE EXTERNAL TABLE `test_hive_delimiter`( |
| `id` int, |
| `name` string, |
| `address` string) |
| ROW FORMAT SERDE |
| 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe' |
| WITH SERDEPROPERTIES ( |
| 'field.delim'='\u001B', |
| 'serialization.format'='\u001B') |
| STORED AS INPUTFORMAT |
| 'org.apache.hadoop.mapred.TextInputFormat' |
| OUTPUTFORMAT |
| 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' |
| LOCATION |
| 'hdfs://ip-172-31-6-148.fayson.com:8020/fayson/test_hive_delimiter' |
| TBLPROPERTIES ( |
| 'COLUMN_STATS_ACCURATE'='false', |
| 'numFiles'='0', |
| 'numRows'='-1', |
| 'rawDataSize'='-1', |
| 'totalSize'='0', |
| 'transient_lastDdlTime'='1504707693') |
+----------------------------------------------------+--+
22 rows selected (0.079 seconds)
0: jdbc:hive2://localhost:10000/>

- Query the test_hive_delimiter table:

0: jdbc:hive2://localhost:10000/> select * from test_hive_delimiter;
...
INFO : OK
+-------------------------+---------------------------+------------------------------+--+
| test_hive_delimiter.id | test_hive_delimiter.name | test_hive_delimiter.address |
+-------------------------+---------------------------+------------------------------+--+
| 1 | fayson | guangdong |
| 2 | zhangsan | shenzheng |
| 3 | lisi | shanghai |
+-------------------------+---------------------------+------------------------------+--+
3 rows selected (0.107 seconds)
0: jdbc:hive2://localhost:10000/>

- Creating the table with the octal "\033" instead of the hexadecimal "\u001B" resolves the problem.

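- Notes:

- Take care when using hexadecimal delimiters in Hive DDL: some of them are converted rather than kept as written (for example 001B and 001C).

- Sqoop expects a hexadecimal delimiter as "\0x001B" rather than "\u001B" because its escape syntax uses \0xhhh for hexadecimal and \0ooo for octal values. See the Sqoop documentation.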
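The Sqoop documentation lists a small set of delimiter escapes: \b, \n, \r, \t, \", \', \\ and \0, plus \0ooo (octal) and \0xhhh (hexadecimal). The Python sketch below is a simplified, hypothetical decoder for those forms, written only to illustrate the notation. It is not Sqoop's parser and may differ from it on edge cases. Under this model, "\u001B" is not a recognized form.

```python
def decode_sqoop_delimiter(arg: str) -> str:
    """Hypothetical, simplified decoder for Sqoop-style delimiter escapes."""
    # Two-character escapes listed in the Sqoop documentation.
    simple = {
        "\\b": "\b", "\\n": "\n", "\\r": "\r", "\\t": "\t",
        '\\"': '"', "\\'": "'", "\\\\": "\\", "\\0": "\0",
    }
    if arg in simple:
        return simple[arg]
    if arg.startswith("\\0x"):
        # \0xhhh: hexadecimal code point, e.g. \0x001B -> chr(0x1B)
        return chr(int(arg[3:], 16))
    if arg.startswith("\\0"):
        # \0ooo: octal code point, e.g. \0033 -> chr(0o33)
        return chr(int(arg[2:], 8))
    if len(arg) == 1:
        # A plain single character is used as-is.
        return arg
    raise ValueError(f"unsupported delimiter escape: {arg!r}")
```

In this sketch, `decode_sqoop_delimiter("\\0x001B")` and `decode_sqoop_delimiter("\\0033")` both return the 0x1B character. `decode_sqoop_delimiter("\\u001B")` raises `ValueError`.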
